Interact – Reactive Environments

The idea for this project was developed in the context of a workshop I attended last week called

In this workshop, I was part of a cluster called Reactive Acoustic Environments, whose objective was to use the technological infrastructure of EMPAC at Rensselaer Polytechnic Institute in Troy to develop reactive systems that respond to acoustic energy.

cluster leaders: Zackery Belanger, J Seth Edwards, Guillermo Bernal, Eric Ameres

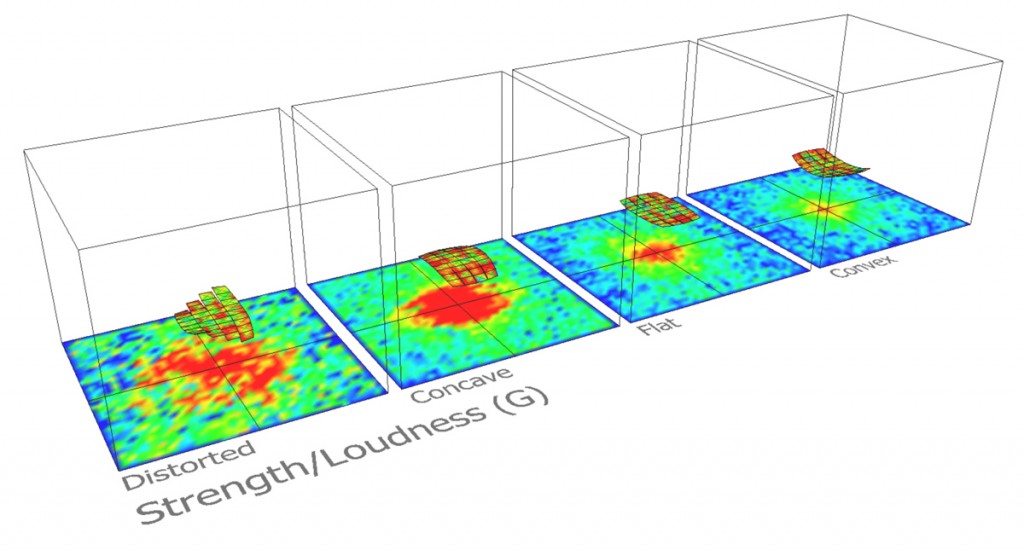

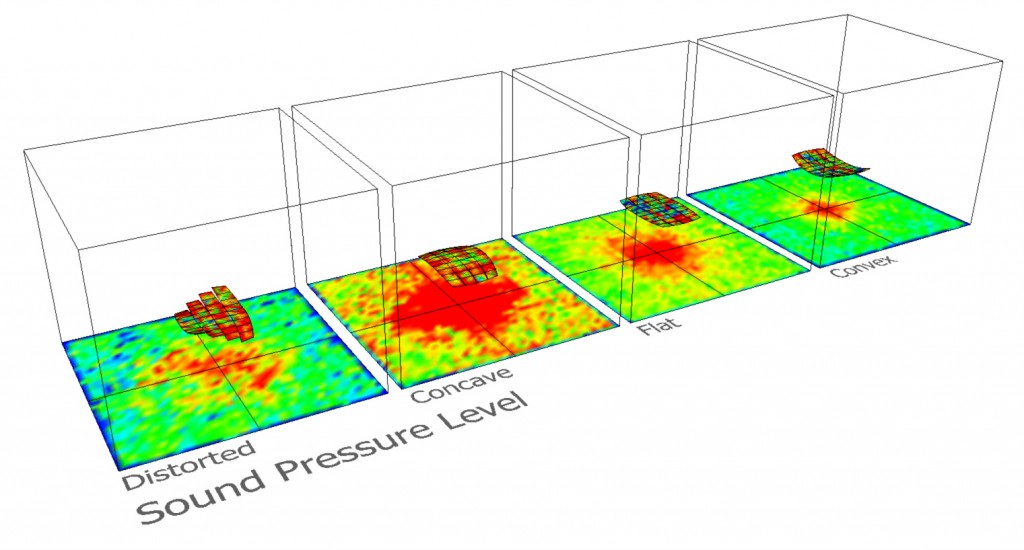

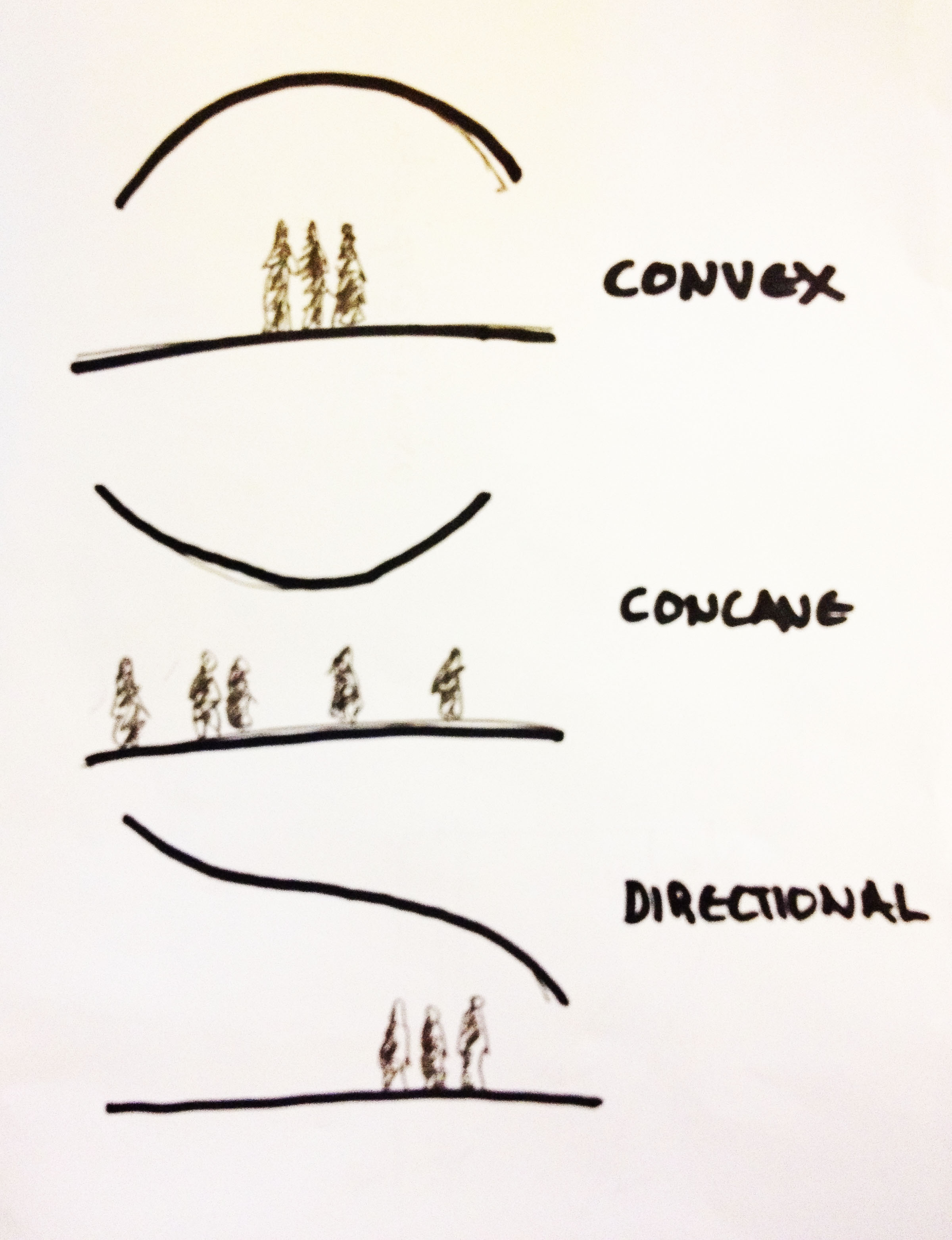

During the workshop, the team developed acoustic simulations of the different shape configurations the canopy could take.

images source:

During the workshop, I started working on the idea of making the canopy respond to the movement of the people below it. By recognizing different crowd configurations, the canopy would take different forms to accommodate different acoustic conditions.

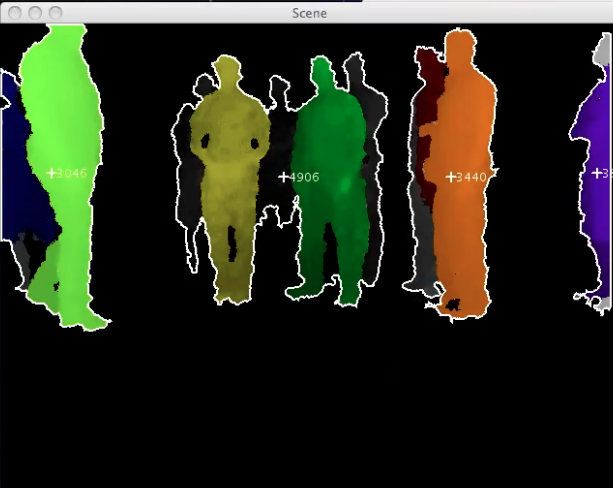

I started working with Processing and the Kinect. The libraries I initially used were SimpleOpenNI, to extract the depth map, and OpenCV, to perform blob detection on the two-dimensional SceneImage from the Kinect. However, this approach was problematic because it did not use the depth data for blob detection; as a result, OpenCV treated people standing next to each other as a single blob.
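To make the problem with the first approach concrete, here is a minimal sketch (in Python, not the original Processing code) contrasting plain 2D blob labeling with a depth-aware variant. It assumes a tiny hypothetical depth grid in millimeters where 0 means background, and a hypothetical `max_step` threshold: neighboring pixels join the same blob only if their depths differ by at most that amount. This is simple region growing for illustration, not what OpenCV or OpenNI do internally, and it can only separate people who stand at different distances from the sensor.

```python
from collections import deque


def label_blobs(depth, max_step=None):
    """Label 4-connected foreground blobs in a depth grid.

    depth: list of rows; 0 means background (no reading).
    max_step: if None, behave like 2D blob detection (any two adjacent
    foreground pixels join the same blob). If a number (hypothetical
    threshold), neighbors join only when their depths differ by <= max_step.
    Returns (labels grid, number of blobs).
    """
    rows, cols = len(depth), len(depth[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if depth[r][c] == 0 or labels[r][c]:
                continue
            # Start a new blob and grow it breadth-first.
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if not (0 <= ny < rows and 0 <= nx < cols):
                        continue
                    if depth[ny][nx] == 0 or labels[ny][nx]:
                        continue
                    # Depth-aware mode: refuse to cross a large depth jump.
                    if max_step is not None and abs(depth[ny][nx] - depth[y][x]) > max_step:
                        continue
                    labels[ny][nx] = count
                    queue.append((ny, nx))
    return labels, count


# Made-up scene: two figures touching in the image, one at ~2000 mm, one at ~3000 mm.
scene = [
    [0, 2000, 2000, 3000, 3000, 0],
    [0, 2010, 2000, 3000, 2990, 0],
    [0, 2000, 2020, 3010, 3000, 0],
]
print(label_blobs(scene)[1])                # 1: merged, as in the 2D approach
print(label_blobs(scene, max_step=100)[1])  # 2: split at the depth jump
```

Without the depth check, the two touching figures collapse into one blob, which matches the behavior I saw with OpenCV; with it, the jump of roughly one meter between them splits them apart.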

1st attempt: PROCESSING + OpenNI + OpenCV

2nd attempt: PROCESSING + OpenNI

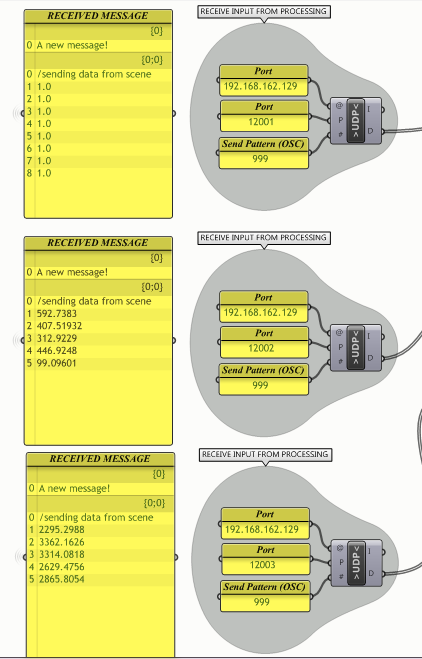

Later, I realized that the OpenNI library already provides the blob detection information, so I rewrote the Processing script using only OpenNI. Since I no longer have access to the actual canopy, I tested the blob detection script on a Kinect video captured here at CMU, and the interaction on a 3D model developed in Grasshopper. Processing sends signals to Grasshopper via the oscP5 library, and Grasshopper receives them through the gHowl library. Weaverbird is also used in Grasshopper for the mesh geometry.
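For readers unfamiliar with OSC, the sketch below shows roughly what a message from Processing to Grasshopper looks like on the wire, hand-encoded in Python following the OSC 1.0 rules: null-terminated strings padded to a multiple of 4 bytes, a type-tag string, then big-endian float32 arguments. The address `/canopy`, the three float arguments, and the host and port are hypothetical placeholders; oscP5 builds these bytes for you, and the port has to match whatever the receiving gHowl component is set to.

```python
import socket
import struct


def osc_string(s):
    """Encode an OSC-string: ASCII bytes, a terminating null, padded to 4 bytes."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def osc_message(address, *floats):
    """Build an OSC 1.0 message whose arguments are all float32."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", f) for f in floats)  # big-endian float32
    return osc_string(address) + osc_string(type_tags) + payload


def send(msg, host="127.0.0.1", port=12000):
    # OSC is usually carried over UDP; host and port are placeholders.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(msg, (host, port))


# Hypothetical message carrying three control values.
msg = osc_message("/canopy", 0.5, 1.25, 0.75)
print(len(msg))  # 8 (address) + 8 (",fff") + 12 (three floats) = 28
```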

GRASSHOPPER + gHOWL

To determine the values that drive the canopy's movement, I used the Kinect input data for each detected person. The center of mass of each person was used to compute the standard deviation of the crowd's distribution in the x and y directions. A small standard deviation represents a denser crowd, while a large standard deviation represents a sparser one. These values, along with the position of the mean centroid, determined the movement of the canopy. Below are some of the conditions I attempted to include in the interaction behavior.
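A minimal sketch of these crowd statistics in Python, assuming each detected person has already been reduced to an (x, y) centroid in a common coordinate frame. For N people, the mean centroid is the average of the coordinates, and σx = sqrt(Σ (xi − x̄)² / N), likewise for y. The text does not say whether the population or sample standard deviation was used; the sketch assumes the population form. The example crowds are made-up data, not captured readings.

```python
import math


def crowd_stats(centroids):
    """Return (mean_x, mean_y, std_x, std_y) for a list of (x, y) centroids.

    Assumes the population standard deviation (divide by N).
    """
    n = len(centroids)
    if n == 0:
        raise ValueError("no people detected")
    mean_x = sum(x for x, _ in centroids) / n
    mean_y = sum(y for _, y in centroids) / n
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x, _ in centroids) / n)
    std_y = math.sqrt(sum((y - mean_y) ** 2 for _, y in centroids) / n)
    return mean_x, mean_y, std_x, std_y


# Made-up centroids: a tight cluster versus people spread across the floor.
dense = [(0.9, 2.0), (1.1, 2.0), (1.0, 2.2), (1.0, 1.8)]
sparse = [(-2.0, 1.0), (2.0, 1.0), (0.0, 3.0), (0.0, -1.0)]

print(crowd_stats(dense))   # small std_x and std_y: dense crowd
print(crowd_stats(sparse))  # large std_x and std_y: sparse crowd
```

The dense example yields standard deviations well below the sparse one in both directions, while (mean_x, mean_y) locates where the crowd has gathered; how those numbers are mapped onto canopy positions is a separate design decision not shown here.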

The site installation itself is very impressive, and I would have liked a bit more background on the control system, range of motion, etc., because it’s hard to analyze the effectiveness of the control system without knowing the limitations of the hardware.

Excellent process documentation. I also like that the interaction with the piece is not really on an individual basis, but is determined by a crowd. I also think it’s very interesting that you can change the acoustics of the room to suit its occupants, either by helping them have private conversations or by being more disruptive and broadcasting their conversations to the room. +1 My one criticism is mostly about the presentation. It took a while for us to understand the acoustic nature of the sculpture, which turned it from a cool interactive sculpture into an awesome social experiment. Really great idea.

I’m a little confused about how the centroids identified from the Kinect are being translated to the motion of the canopy thing in Grasshopper.

It would have been really helpful if you could show a sketch of what you were trying to achieve. It is extremely helpful, especially when you describe a project that you are still working on.

^ Agreed, giving more background and goals of the project as a whole would be very helpful.

Ahh, the part that wasn’t clear for me until the end was that the canopy is designed to channel sound in the space. As an interactive sculpture alone, I didn’t think the movements were very satisfying, but they’re much better knowing that the intent is to manipulate sound, not look interesting.

Dynamically modifying the acoustic properties of the space is a cool idea. It could be interesting to play with dividing the space and using the division of sound to shape how visitors interact with the space as well.

I am confused about how the canopy works with the Kinect, but I think the idea is great and it could develop into a bigger project.

I’d read the shit out of that paper. +20

Assessment: an observational study doing pseudo A/B testing with the sculpture static versus interactive, as well as contextual inquiries at acoustically designed buildings. IDK.

Nice mixture of programming environments: Processing to Grasshopper over OSC, eventually to MaxMSP…

Very nice idea to measure the crowd, to understand DISTRIBUTIONS of people (e.g. with their standard deviations) as control values for the interactive kinetic sculpture.

I didn’t realize — it’s not just a pretty kinetic canopy sculpture, it’s a variable acoustic reflector.

There are papers that can be written about this.

*agreed

I want to see this in real life! Plus, I imagine that a much, much larger crowd would yield much more interesting and dynamic results. The sound aspect would be a very neat feature, especially when coupled with a large crowd.

This is an awesome project. I would be very excited to see your code reapplied to the kinetic sculpture. Your sketches and documentation are wonderful.

I didn’t realize it was based on the acoustics of the space. I first thought you meant that the kinetic transformations were based on weather or physical effects. THEREFORE this project is really, really interesting. I think it is obvious that your presentation needs to better articulate what you are thinking about and discussing: both the technology and beauty of the work, but also its practicality.