Final Project – The Human Theremin

By Duncan Boehle and Nick Inzucchi

The Human Theremin is an interactive installation that generates binaural audio in a virtual space. It uses a Kinect and two Wiimotes to detect the motion of the user’s hands, and Ableton Live synthesizes binaural sounds based on the hand motion.

Video Summary

http://vimeo.com/42057276

Software Pipeline

The full pipeline uses several pieces of software to convert motion into sound. We use the OpenNI sensor drivers for the Kinect to detect the user’s head and hand positions in 3D space and to draw the body depth map in the visualization. We use OSCulator to read the Wiimotes’ button presses and acceleration; OSCulator then forwards that data over OSC.
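
To make the receiving side concrete, here is a minimal C++ sketch (not the project’s actual code) of how already-decoded Wiimote messages might be folded into per-controller state. The `OscMessage` and `WiimoteState` types, the function name, and the address patterns `/wii/1/button/<name>` and `/wii/1/accel/pry` are hypothetical; the real addresses depend on how OSCulator is configured.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical decoded OSC message: an address pattern plus float arguments.
struct OscMessage {
    std::string address;
    std::vector<float> args;
};

// Hypothetical per-Wiimote state accumulated by the receiving app.
struct WiimoteState {
    std::map<std::string, bool> buttons;  // button name -> currently held
    float pitch = 0.0f;
    float roll = 0.0f;
    float accel = 0.0f;
};

// Fold one message into the state. The address layout below is an assumption
// standing in for however OSCulator has been configured to route the data.
void handleWiimoteMessage(const OscMessage& msg, WiimoteState& state) {
    const std::string buttonPrefix = "/wii/1/button/";
    if (msg.address.compare(0, buttonPrefix.size(), buttonPrefix) == 0) {
        if (!msg.args.empty()) {
            // Treat a value above 0.5 as pressed and anything lower as released.
            state.buttons[msg.address.substr(buttonPrefix.size())] = msg.args[0] > 0.5f;
        }
    } else if (msg.address == "/wii/1/accel/pry" && msg.args.size() >= 4) {
        // Assumed argument order: pitch, roll, yaw, overall acceleration.
        state.pitch = msg.args[0];
        state.roll = msg.args[1];
        state.accel = msg.args[3];
    }
}
```

Keeping only a held/released flag per button is enough to drive a press-to-create, release-to-decay interaction.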

An openFrameworks (OFX) app receives this sensor data, renders the visualization, and forwards the relevant motion data to Ableton Live. The app hooks into OpenNI’s drivers through their C++ interface and listens for OSCulator’s data using OFX’s built-in OSC addon.
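
The messages the app forwards to Ableton Live are ordinary OSC packets. The OFX OSC addon builds these for you, but this dependency-free C++ sketch shows what an OSC 1.0 message carrying only float arguments looks like on the wire: a NUL-padded address, a type-tag string such as `,fff`, and big-endian 32-bit floats. The function names and the example address are hypothetical.

```cpp
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append an OSC-string: the characters, a terminating NUL, then NUL padding
// so the string's encoded length is a multiple of 4 bytes (OSC 1.0).
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    const std::size_t pad = 4 - (s.size() % 4);  // always at least one NUL
    out.insert(out.end(), pad, static_cast<std::uint8_t>(0));
}

// Append an IEEE 754 float32 in big-endian (network) byte order.
void appendFloatBigEndian(std::vector<std::uint8_t>& out, float value) {
    static_assert(sizeof(float) == sizeof(std::uint32_t), "expects 32-bit floats");
    std::uint32_t bits = 0;
    std::memcpy(&bits, &value, sizeof bits);
    for (int shift = 24; shift >= 0; shift -= 8) {
        out.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFFu));
    }
}

// Encode an OSC message whose arguments are all float32.
std::vector<std::uint8_t> encodeOscFloats(const std::string& address,
                                          const std::vector<float>& args) {
    std::vector<std::uint8_t> out;
    appendOscString(out, address);
    appendOscString(out, "," + std::string(args.size(), 'f'));  // type tags
    for (float value : args) {
        appendFloatBigEndian(out, value);
    }
    return out;
}
```

For example, `encodeOscFloats("/hand/left", {azimuth, elevation, distance})` would produce a packet ready to send over UDP; that address and argument order are illustrative, not the Max patch’s actual interface.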

Our Ableton Live set is equipped with Max for Live, which lets us use complex Max patches to manipulate the synthesized sound. The patch receives motion and position data from our OFX app and creates a distinct sound for each of the four Wiimote buttons we track. The roll, pitch, and acceleration of the controller affect the LFO rate, distortion, and frequency of the sounds, respectively. When the user releases a button, its sound remains in the space until it slowly decays away or until the user replaces it by pressing the same button again.
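
The mapping and decay behavior can be approximated in a few lines. This C++17 sketch assumes roll and pitch arrive normalized to [-1, 1] and acceleration as a magnitude in g; the output ranges (a 0.5 to 12 Hz LFO, 0 to 1 distortion, 110 to 880 Hz frequency) and the half-life used for the release decay are made-up values for illustration, not those in the project’s Live set. All names are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Parameters for one synthesized voice. Ranges are illustrative only.
struct SoundParams {
    float lfoRateHz;
    float distortion;   // 0 = clean, 1 = fully driven
    float frequencyHz;
};

float linearMap(float lo, float hi, float t) { return lo + (hi - lo) * t; }

// Roll and pitch are assumed normalized to [-1, 1]; accel is a magnitude in g.
SoundParams mapMotion(float roll, float pitch, float accelG) {
    const float r = (std::clamp(roll, -1.0f, 1.0f) + 1.0f) * 0.5f;   // -> [0, 1]
    const float p = (std::clamp(pitch, -1.0f, 1.0f) + 1.0f) * 0.5f;  // -> [0, 1]
    const float a = std::clamp(accelG / 3.0f, 0.0f, 1.0f);           // saturate at 3 g
    const float rate = linearMap(0.5f, 12.0f, r);                    // LFO rate from roll
    const float freq = 110.0f * std::pow(2.0f, 3.0f * a);            // up to 3 octaves above 110 Hz
    return {rate, p, freq};                                          // distortion from pitch
}

// One voice per button: full level while held, exponential decay after release,
// and a fresh start when the same button is pressed again.
class Voice {
public:
    explicit Voice(float halfLifeSec) : halfLifeSec_(halfLifeSec) {}
    void press() { held_ = true; level_ = 1.0f; }
    void release() { held_ = false; }
    // Advance by dtSec seconds and return the current amplitude.
    float tick(float dtSec) {
        if (!held_) {
            level_ *= std::pow(0.5f, dtSec / halfLifeSec_);
        }
        return level_;
    }
private:
    float halfLifeSec_;
    bool held_ = false;
    float level_ = 0.0f;
};
```

Mapping acceleration to frequency exponentially rather than linearly means equal changes in motion produce equal musical intervals, which tends to feel more natural than a linear Hz scale.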

The sounds are then sent through a binaural Max patch created by Vincent Choqueuse, which spatializes them in full 3D. The patch uses a head-centered “interaural-polar” coordinate system, so our OFX app must convert the hand positions from the Kinect’s world coordinate system into that frame. The coordinate system is described in detail by the CIPIC Interface Laboratory, which also supplied the HRTF data for the binaural patch. The azimuth, elevation, and distance of each hand are computed relative to the user’s head, which is assumed to be level in the XZ-plane and facing the same direction as the user’s shoulders. Because of this conversion, the user can wander around a large space, face any direction, and still hear the sounds as if they were suspended in the virtual space.
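
One way the world-to-head conversion could be written is sketched below in C++17. It assumes a right-handed world frame with +Y up, flattens the shoulder vector into the XZ-plane to get the head’s left-right axis, and computes CIPIC-style interaural-polar coordinates: azimuth is the angle away from the median plane (positive toward the right ear here, in [-90°, 90°]) and elevation is the rotation about the interaural axis (0° in front, 90° overhead, 180° behind, wrapped into [-90°, 270°)). The type and function names are hypothetical, and the binaural patch may expect different units or sign conventions.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    const double n = std::sqrt(dot(v, v));
    return {v.x / n, v.y / n, v.z / n};
}

struct InterauralPolar { double azimuthDeg, elevationDeg, distance; };

// Assumes a right-handed world frame with +Y up; the head is taken to be level
// and to face the same way as the shoulders.
InterauralPolar toInterauralPolar(Vec3 head, Vec3 leftShoulder,
                                  Vec3 rightShoulder, Vec3 hand) {
    const double kRadToDeg = 180.0 / std::acos(-1.0);
    const Vec3 up{0.0, 1.0, 0.0};
    const Vec3 shoulders = sub(rightShoulder, leftShoulder);
    const Vec3 right = normalize({shoulders.x, 0.0, shoulders.z});  // flatten into XZ
    const Vec3 forward = cross(up, right);  // unit length because up is perpendicular to right

    const Vec3 v = sub(hand, head);
    const double dist = std::sqrt(dot(v, v));
    if (dist == 0.0) return {0.0, 0.0, 0.0};
    const double lateral = dot(v, right);
    const double front = dot(v, forward);
    const double vertical = dot(v, up);

    const double az = std::asin(std::clamp(lateral / dist, -1.0, 1.0)) * kRadToDeg;
    double el = std::atan2(vertical, front) * kRadToDeg;  // (-180, 180]
    if (el < -90.0) el += 360.0;  // wrap into [-90, 270)
    return {az, el, dist};
}
```

A live tracker feed would also need a guard for the degenerate case where the shoulder vector is nearly vertical, which this sketch does not handle.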

Reception

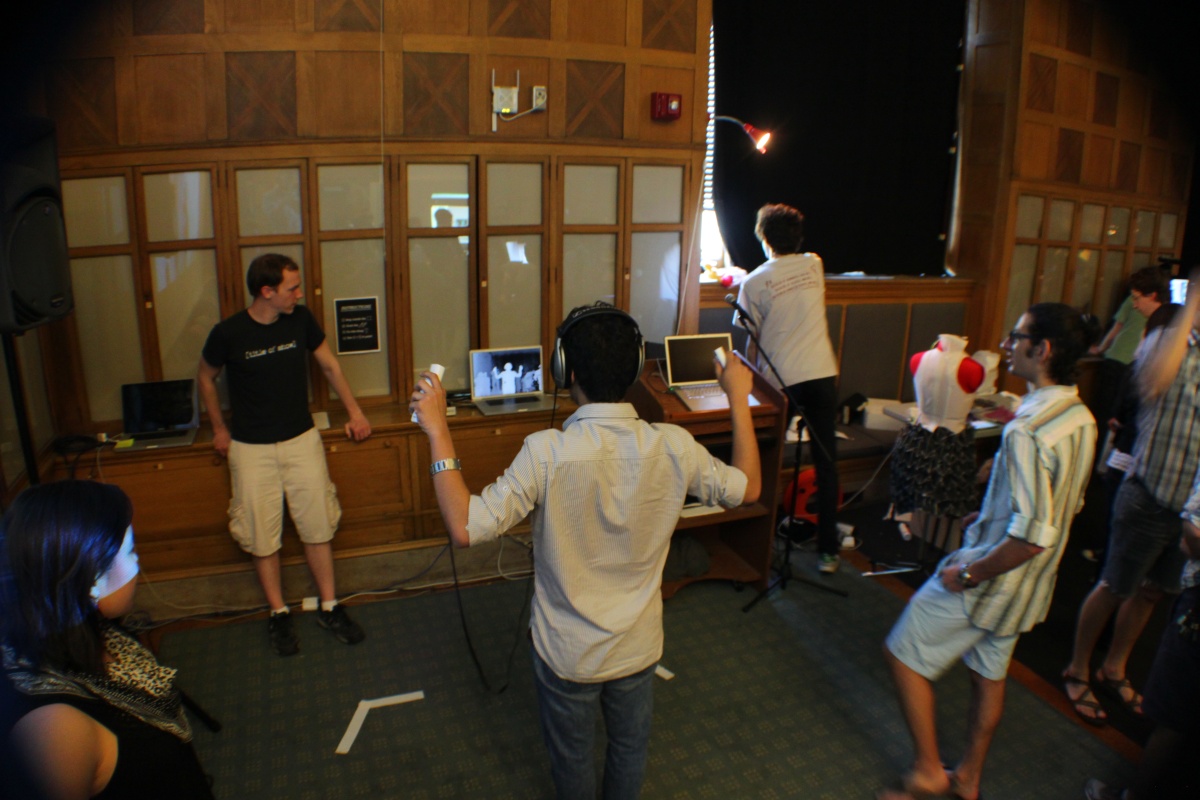

We set up the installation at the Interactive Art and Computational Design Final Show on May 3rd, and many visitors got a chance to try out our project.

The reception was very positive, and nearly everyone who participated was impressed by how easily they became immersed in the virtual soundscape created by the binaural audio. Because the system is primarily audio-based, we experimented with removing the visual feedback entirely, but we found that showing users their depth map helped them orient themselves at first; after that, they felt comfortable moving through the space without looking at the display.

Some participants only moved their hands around their heads for a minute and then felt they had experienced enough of the project. Many others, however, explored far more of the sound design, placing sounds while walking around and moving their hands frantically to experiment with the system’s music. One person looked like a legitimate performer as he danced with the Wiimotes; he said he wished the system used speakers instead of headphones so that more people could enjoy the sounds at the same time.

Conclusion

Although we received primarily positive feedback, there are still a few directions in which we could take the project to make the experience more compelling. One suggestion was to turn it into more of a performance piece, where pointing into space would place sounds around the perimeter of a room using an ambisonic speaker array. Other participants wanted more musical control, so we could give some of the sounds simpler tones and more distinct pitches to make it easier to create real music. The project’s direction would certainly depend on the kind of space it is set up in, since it would have a very different musical character in a club-like atmosphere than as an installation in an art exhibition.

Overall, we achieved our goal of creating an immersive, unique sound experience in a virtual space, and we look forward to experimenting further with the technologies we discovered along the way.