Polished Kinect Music Machine

My first idea for a final project would be to extend Project 3’s Kinect beat machine into a much more polished, useful, and creative work.

One of the biggest issues with the first implementation was accuracy and responsiveness. I think this could be remedied by looking into alternative methods for virtual drumsticks. I originally used OSCeleton to transmit joint data to OpenFrameworks, so using Synapse as a base to eliminate the inter-process communication delay might help somewhat. This has the added benefit of giving access to the raw depth data, which could be used for even more responsive hand tracking. Since OpenNI automatically smooths the hand joint's movement slightly, its position lags behind the apparent hand location in the depth field. Instead of only using the hand joint's position, I could use it as a seed for a search for the lowest depth value (the point nearest the sensor) that seems to be close to the hand, which might follow the user's movement much more quickly.
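The depth-seeded search described above could look roughly like the following Python sketch. It is not tied to OpenNI or Synapse. It assumes the depth frame is already available as a 2D grid of millimetre values (0 meaning no reading) and that the smoothed hand joint has been projected into depth-image pixel coordinates `(seed_u, seed_v)` with depth `seed_z`. The function name `refine_hand` and the `radius` and `max_offset_mm` thresholds are hypothetical choices for illustration.

```python
from typing import List, Optional, Tuple

DepthFrame = List[List[int]]  # depth[row][col] in millimetres; 0 = no reading (assumed layout)


def refine_hand(depth: DepthFrame, seed_u: int, seed_v: int, seed_z: int,
                radius: int = 40, max_offset_mm: int = 150) -> Optional[Tuple[int, int, int]]:
    """Search a square window around the smoothed hand joint for the valid pixel
    nearest the sensor (smallest depth) that is still within max_offset_mm of the
    joint's own depth. Returns (u, v, z), or None if no pixel qualifies."""
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    best: Optional[Tuple[int, int, int]] = None
    for v in range(max(0, seed_v - radius), min(rows, seed_v + radius + 1)):
        for u in range(max(0, seed_u - radius), min(cols, seed_u + radius + 1)):
            z = depth[v][u]
            if z == 0:
                continue  # missing depth reading
            if abs(z - seed_z) > max_offset_mm:
                continue  # likely background, or a different body part / object
            if best is None or z < best[2]:
                best = (u, v, z)  # closest plausible hand pixel so far
    return best
```

Rejecting pixels far from the joint's depth keeps the search from locking onto the background or onto a closer object such as the other hand. A real version would probably shrink `radius` as the user moves away from the sensor, since the hand covers fewer pixels at a distance.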

I would also want a more intuitive instrument layout with more varied sounds, and I would want it to respond to the strength of each hit, changing the volume or creating effects like echoing. I would also like to visualize the individual looping tracks and give the user the ability to delete or modify them, instead of having them play forever until they are all deleted.
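Hit strength could be estimated from how fast the hand is moving when it enters a drum. The sketch below assumes hand positions in millimetres with y pointing up (so a downward strike reduces y). It maps that speed linearly onto a volume between 0 and 1 and generates simple feedback-echo taps whose gains decay geometrically. The thresholds, the linear mapping, and the helper names are all illustrative assumptions, not a tested tuning.

```python
from typing import List, Tuple


def downward_speed(prev_y_mm: float, curr_y_mm: float, dt_s: float) -> float:
    """Downward hand speed in mm/s (assumes y points up, so moving down lowers y)."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return (prev_y_mm - curr_y_mm) / dt_s


def speed_to_volume(speed: float, min_speed: float = 300.0, max_speed: float = 3000.0) -> float:
    """Linearly map speed onto [0, 1]: at or below min_speed is silent, at or above max_speed is full volume."""
    if speed <= min_speed:
        return 0.0
    if speed >= max_speed:
        return 1.0
    return (speed - min_speed) / (max_speed - min_speed)


def echo_taps(volume: float, delay_s: float = 0.25, feedback: float = 0.5,
              min_gain: float = 0.05) -> List[Tuple[float, float]]:
    """Return (time offset in s, gain) pairs for a decaying feedback echo.

    Each repeat is `feedback` times the previous one; repeats stop once the
    gain falls below min_gain.
    """
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0, 1) so the echo dies out")
    if min_gain <= 0.0:
        raise ValueError("min_gain must be positive")
    taps = []
    gain, t = volume, 0.0
    while gain >= min_gain:
        taps.append((t, gain))
        t += delay_s
        gain *= feedback
    return taps
```

Each `(offset, gain)` pair would be played as a copy of the drum sample delayed by `offset` seconds at `gain` times full volume, so a harder hit produces both a louder initial sound and an echo tail that stays audible for more repeats.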

Being able to switch instruments or layouts could also make for much more dynamic music. Creating a virtual keyboard could add a lot more musicality to the purely rhythmic compositions.

This type of project extension could certainly be a month's worth of work, but it might not be all that satisfying: most of what I've proposed would complicate the interface beyond that of a simple toy, yet the result might still not be useful or creative enough for someone who genuinely wanted to compose a drum beat or song.

Binaural Audio World

I was very inspired by two projects in the audio realm: Deep Sea and Audio Space.

Deep Sea uses equipment (a gas mask that blocks the player's vision, fitted with headphones and a microphone) to make the player feel vulnerable to the unknown sea around them, inducing claustrophobia and a negative feedback loop of breathing. I was inspired by how it created an emotionally powerful experience without using video.

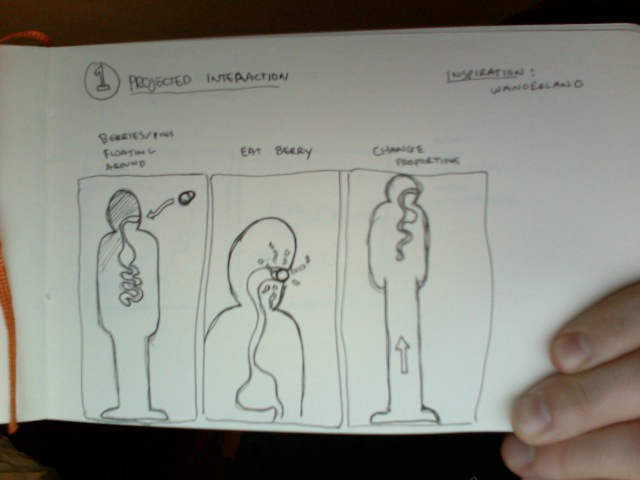

Audio Space was an art exhibit that used head tracking, binaural audio reproduction, and recording to create a space for exploring and creating sounds. Visitors would “paint” the room with sound by speaking into a microphone; each recording was tied to the 3D position where the visitor stood while speaking and was realistically played back for any future visitors. That project lent itself very well to creating interesting collaborative experiences with only sound, and it made me very hopeful that binaural audio and head tracking could work well together.

My hope would be to create a narrative for a fictional space that the player must explore using only audio cues. By rotating the head in 3D space and simulating binaural sounds, I would be able to place 3D sound cues in a virtual room in order to craft an experience. I’d like to induce fear, similar to Deep Sea, and successfully combine head-tracking with binaural audio, like in Audio Space.
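To reason about where a cue should sound, the player's tracked head position and yaw can be turned into an azimuth relative to the head. The sketch below does that in a top-down 2D frame (an assumed convention: at yaw 0 the listener faces +z, +x is their right, and positive yaw turns right). It then estimates the interaural time difference with Woodworth's spherical-head formula, ITD = (a/c)(θ + sin θ). This is a deliberately crude way to reason about geometry and is not meant to replace the binaural rendering a library like Clunk would do; the head radius and speed of sound are typical assumed values.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius
SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 degrees C


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def relative_azimuth(listener_xz, yaw: float, source_xz) -> float:
    """Azimuth of the source relative to where the listener faces.

    0 = straight ahead, positive = to the listener's right (assumed convention).
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    world_azimuth = math.atan2(dx, dz)  # measured from +z toward +x
    return wrap_angle(world_azimuth - yaw)


def woodworth_itd(azimuth: float) -> float:
    """Woodworth spherical-head ITD in seconds; positive means the right ear hears it first."""
    lateral = abs(azimuth)
    if lateral > math.pi / 2:
        lateral = math.pi - lateral  # a rear source has the same ITD as its front mirror image
    itd = (HEAD_RADIUS_M / SPEED_OF_SOUND) * (lateral + math.sin(lateral))
    return math.copysign(itd, azimuth)
```

With these constants the ITD peaks at roughly 0.66 ms for a source directly to one side. Because a source in front and its mirror image behind produce the same ITD, time difference alone cannot tell front from back. Letting the player turn their head and hear how the cue shifts is one reason head tracking matters for this project.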

For technology, it would probably make the most sense to use a Wii Remote with the Wii MotionPlus attachment to track the head's orientation using its gyroscope and accelerometer data, receiving the data via Bluetooth. I would use Clunk, an open-source library for generating binaural sounds, and use Kinect with OpenNI to track the general location of the player's head and body.
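Gyroscope readings drift when integrated, while accelerometer readings are noisy but anchored to gravity. A common way to combine them is a complementary filter, and the sketch below applies one to head pitch. It assumes the gyro rate has already been converted to radians per second (positive meaning nose-up) and that, at rest, the accelerometer's z axis points up and x points forward, so tilting nose-up by θ gives roughly `ax = sin θ`, `az = cos θ`. The class name and the `alpha` value are illustrative assumptions, not anything specific to the Wii hardware.

```python
import math


class ComplementaryPitch:
    """Complementary filter for one tilt axis (pitch) of a head-mounted sensor.

    alpha close to 1 trusts the integrated gyro in the short term, while the
    accelerometer's gravity reading slowly pulls out accumulated drift.
    """

    def __init__(self, alpha: float = 0.98, initial_pitch: float = 0.0):
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("alpha must be in [0, 1]")
        self.alpha = alpha
        self.pitch = initial_pitch

    def update(self, gyro_rate: float, ax: float, ay: float, az: float, dt: float) -> float:
        """gyro_rate in rad/s (positive = nose-up, assumed); ax, ay, az in any consistent unit, e.g. g."""
        gyro_pitch = self.pitch + gyro_rate * dt          # short term: integrate rotation rate
        accel_pitch = math.atan2(ax, math.hypot(ay, az))  # long term: tilt implied by gravity
        self.pitch = self.alpha * gyro_pitch + (1.0 - self.alpha) * accel_pitch
        return self.pitch
```

Roll could be filtered the same way. Gravity provides no reference for yaw, so the accelerometer cannot correct heading; yaw from the gyro alone will slowly drift and would need some other reference, such as periodic recalibration.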

My biggest problem is that I don’t already have a narrative in mind for this virtual audio space. I would like to try the tech out to see if it works, and then craft a narrative that seems natural, but that seems like a very backwards approach.

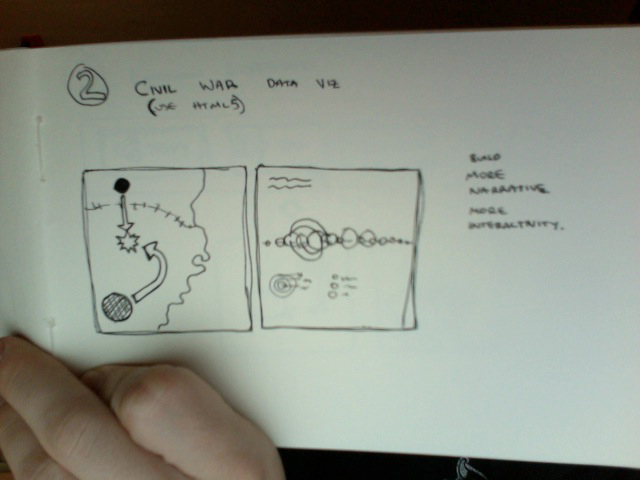

Location-Based Game

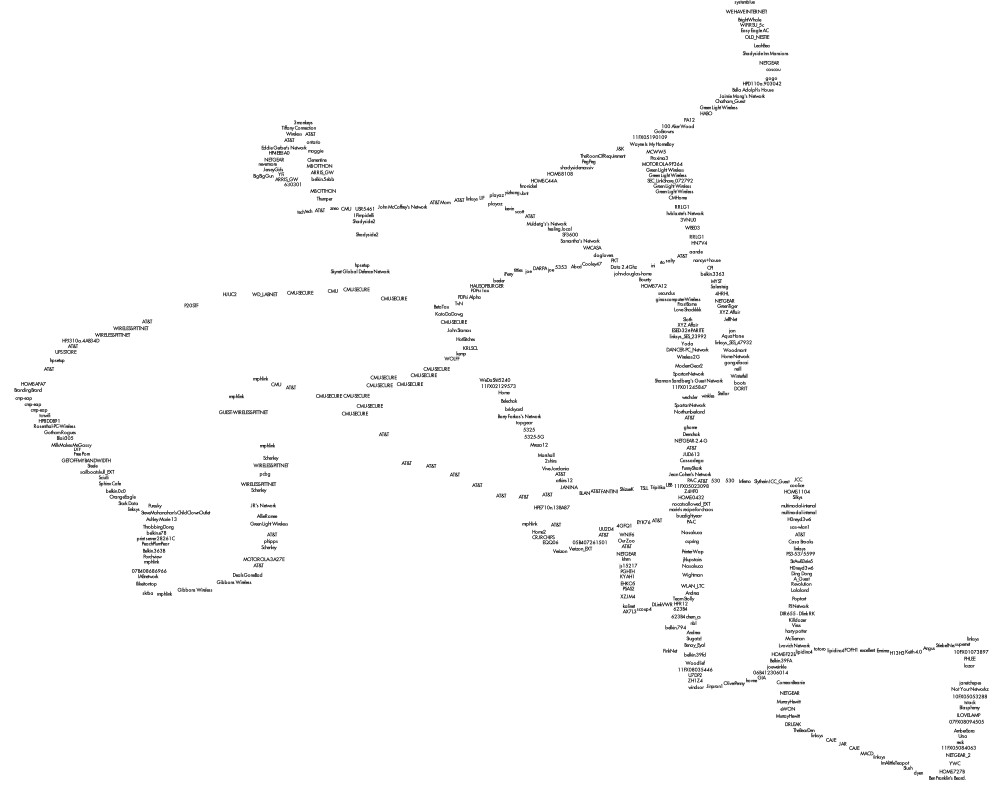

This is more of a general concept than a specific brainstorm example, but I've been very intrigued by the prospect of making “social games” genuinely social. Using GPS-enabled smartphones, it would be interesting to create a game that significantly rewards players for finding each other in real life and playing in proximity. Using APIs like Bump, this type of direct personal interaction could be a great way to break the ice between strangers.
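Detecting that two players are “in proximity” could start with the great-circle distance between their GPS fixes. The sketch below uses the haversine formula with a mean Earth radius of 6,371 km. The function names and the 30 m default radius are arbitrary assumptions; the radius would probably need to be generous, since phone GPS fixes are often off by several metres.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp guards against tiny floating-point overshoot above 1.
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(min(1.0, h)))


def players_nearby(p1, p2, radius_m: float = 30.0) -> bool:
    """True if two (lat, lon) fixes are within radius_m metres of each other."""
    return haversine_m(p1[0], p1[1], p2[0], p2[1]) <= radius_m
```

A server could run this check whenever two players report positions, and only then offer the in-person interaction.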

My first rough idea for a game is collaboratively uncovering buried treasure hidden at real-world coordinates. The digging process would be significantly aided by having multiple players in the same area at once, but I'm not sure the idea is at all feasible.

My other idea revolves around building castles or claiming territory at real-world locations. Which idea I would explore depends on whether collaboration or competition works better for this type of game.

The main problem with this idea is that I don't have a clear picture of the final result, and the finished game would have to be very polished to get real people to play it. Unfortunately, a significant number of people would have to play it to test its social effects, which makes the project's success very difficult to measure.