1. ExR3 by Kyle McDonald and Elliot Woods

ExR3 is an installation of mirrors that reflect and refract geometric shapes found on the walls of the room. As visitors move through the space, they discover the shapes and the relationships between them. The project is especially interesting because the placement of the mirrors and the shapes was carefully calculated using computer vision, which reminds me of the way an architect plans the experience of a space.

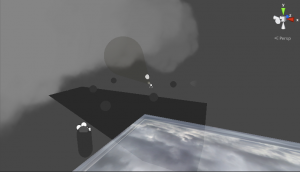

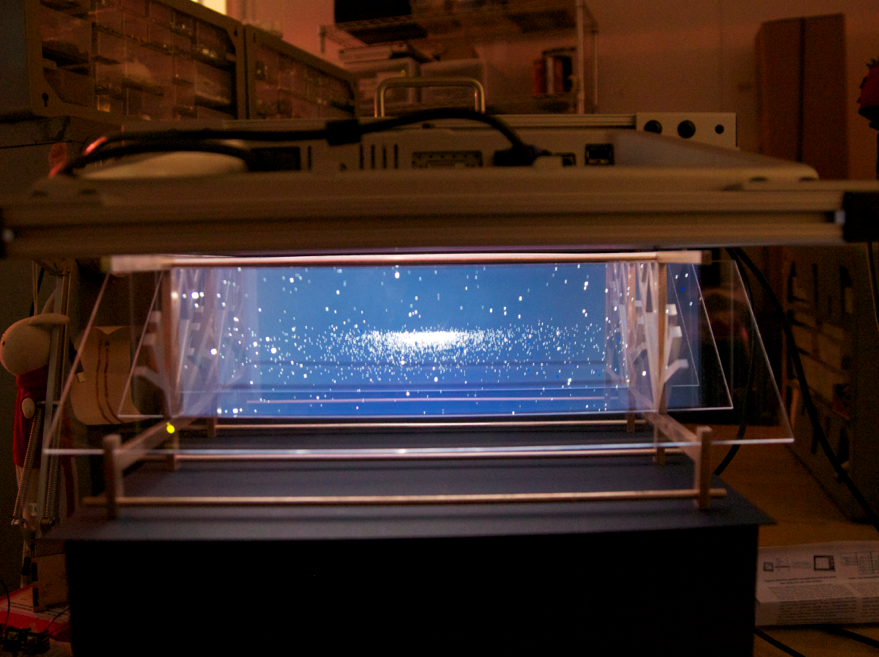

2. IllumiRoom by Microsoft

This is a really interesting project that extends the experience of a movie or video game beyond the boundaries of the television screen, letting it interact with the other objects in the room in a number of ways. The system uses a Kinect to scan the room and find the locations and outlines of the objects in it. It definitely changes the experience of both the space and the game or movie being enjoyed, but I would like to see some more interesting augmentations. The ones shown in the videos are somewhat predictable, and I think there is much more potential in this new system.

3. Smart Light by Google Creative Lab

This project is a series of explorations in projecting (literally projecting) digital functionality onto analog objects in the real world. It is inspired by the idea of extending the knowledge base of the web outside of the computer and into the everyday world. I find this idea intriguing, but it raises a few questions that I hope will be explored in the future. Firstly, the documentation gives no indication of what is producing the projection, and I’m wondering how plausible it is to take this technology out of the lab and into the everyday world. If it is not plausible, the project is somewhat limited and does not address its own question as well as it could. Secondly, the second documentation video uses objects that seem to have been built for the sole purpose of these experiments. I prefer the ideas presented in the first video, which suggest using this technology in conjunction with everyday objects.