GitHub: https://github.com/andybiar/SpongeFaceOSC

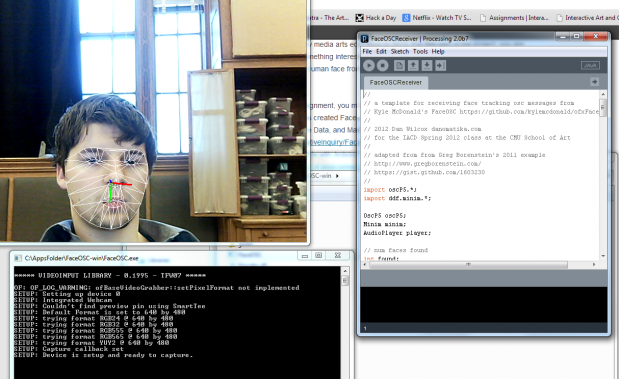

My FaceOSC project uses Open Sound Control (OSC) to transmit my face-tracking parameters over the network to my Processing sketch. The sketch uses the eyebrow and mouth data to set the size of the alpha mask over an image of SpongeBob SquarePants. Only when I am closest to the camera and my face is ridiculously wide open can I see the image in its entirety, at which point SpongeBob’s laughter is triggered. Is he laughing at you, or with you?
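The mapping from face data to mask size could look roughly like the plain-Java sketch below. This is an illustration under assumptions, not the code from the repository: the class name `MaskSizer`, the method names, the equal weighting of mouth and eyebrows, and the input ranges are all hypothetical, and it leaves out camera distance, the OSC plumbing, and the audio playback.

```java
// Hypothetical sketch: turn mouth and eyebrow readings into an alpha-mask size.
// All names and numeric ranges here are assumptions made for illustration.
class MaskSizer {
    // Assumed input ranges; real values depend on the tracker and the face.
    static final double MOUTH_MIN = 1.0, MOUTH_MAX = 7.0;
    static final double BROW_MIN = 7.0, BROW_MAX = 9.5;

    // Rescale v from [lo, hi] to [0, 1], clamping values outside the range.
    static double normalize(double v, double lo, double hi) {
        double t = (v - lo) / (hi - lo);
        return Math.max(0.0, Math.min(1.0, t));
    }

    // Combine both features into one "openness" score in [0, 1].
    // Equal weighting is an arbitrary choice for this sketch.
    static double openness(double mouthHeight, double browHeight) {
        return 0.5 * normalize(mouthHeight, MOUTH_MIN, MOUTH_MAX)
             + 0.5 * normalize(browHeight, BROW_MIN, BROW_MAX);
    }

    // Diameter of the visible region: 0 hides the image, and the full
    // image diagonal reveals all of it.
    static double maskDiameter(double openness, double imageDiagonal) {
        return openness * imageDiagonal;
    }

    // The laugh fires only once the image is fully revealed.
    static boolean shouldLaugh(double openness) {
        return openness >= 1.0;
    }

    public static void main(String[] args) {
        double o = openness(4.0, 7.0); // half-open mouth, relaxed eyebrows
        System.out.println("openness = " + o);
        System.out.println("mask diameter = " + maskDiameter(o, 500.0));
        System.out.println("laugh? " + shouldLaugh(o));
    }
}
```

In a Processing sketch, the two inputs would be updated from incoming OSC messages, and the resulting diameter would size the shape drawn into the mask image each frame.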