FaceOSC connected to OSCulator, connected to Logic Pro. Generative Music.

Category Archives: face-osc

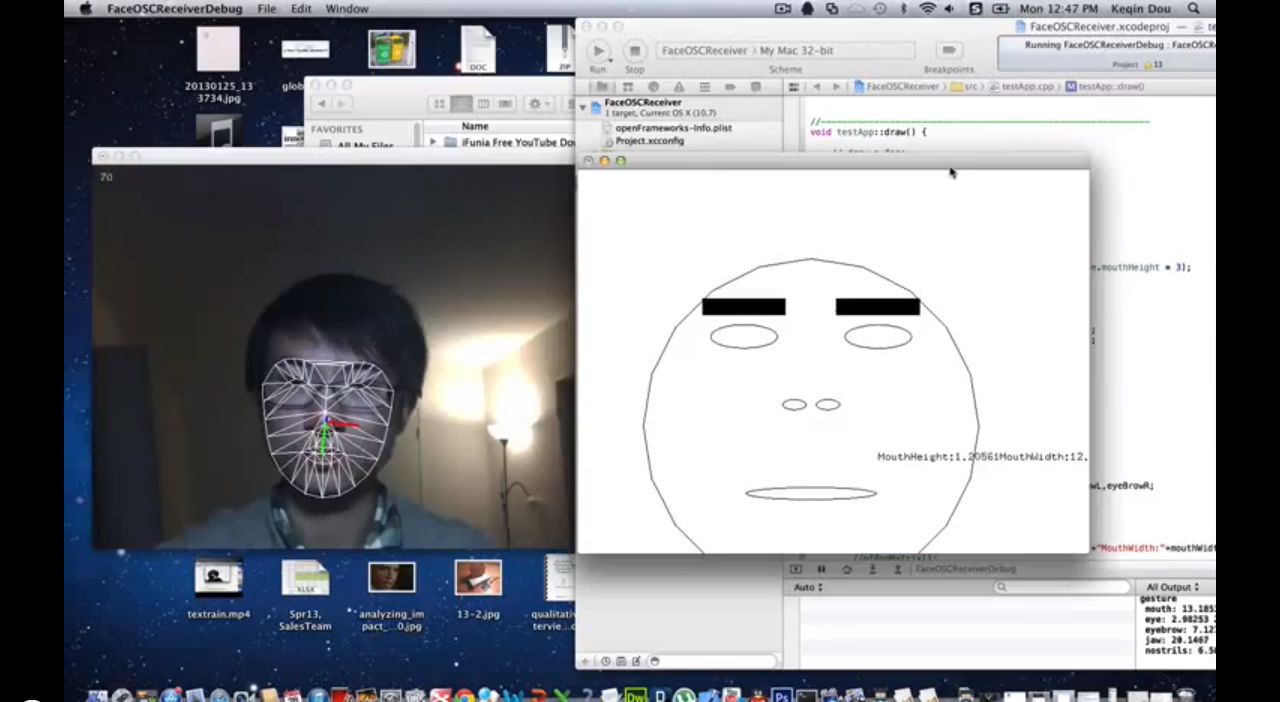

Keqin

28 Jan 2013

I call my FaceOSC project the Symphony of Nature. I use FaceOSC.app to capture the movement of the face in front of the computer and send it to a FaceOSC receiver built in openFrameworks. In this receiver, I use the mouth’s height and width and the eyebrows’ position to trigger different animal sounds. For example, if the mouth’s height exceeds a threshold, it plays a cow’s sound. There are also bird and pig sounds. So you can move your mouth and eyes to make an animal-sound symphony.

Here’s the code link: https://github.com/doukooo/textrain
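The threshold triggering described in this post can be sketched roughly as follows. This is a minimal illustration in plain Java, not the openFrameworks receiver itself; the class name `ThresholdTrigger` and the threshold value are assumptions. It fires once when a value such as mouth height rises past the threshold, so a sound is not retriggered on every frame while the mouth stays open.

```java
// Hypothetical sketch: fire once each time a gesture value rises past a threshold.
class ThresholdTrigger {
    private final float threshold;   // assumed value; tune per face and camera
    private boolean wasAbove = false;

    ThresholdTrigger(float threshold) {
        this.threshold = threshold;
    }

    // Returns true only on the update where the value first exceeds the threshold.
    boolean update(float value) {
        boolean isAbove = value > threshold;
        boolean fired = isAbove && !wasAbove;
        wasAbove = isAbove;
        return fired;
    }
}
```

One trigger per gesture (mouth height, mouth width, eyebrow position) would each start a different animal sound when `update` returns true.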

Dev

28 Jan 2013

Being a citizen of the Internet is no fun if you don’t look like one. BUT NOW YOU CAN!

Introducing TrollFaceOSC. TrollFaceOSC checks how wide you are smiling, how raised your eyebrows are, and even whether you are mad. These values are then mapped to the appropriate face (at the moment there are 4, and counting).

So fairly basic stuff as far as programming goes. A lot of the code was inspired by the Processing example given by Dan (FaceOSCSmiley I believe). After I got the basics coded, I spent some time calibrating it to my face, and then voila.
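As a rough idea of what such a mapping can look like, here is a minimal sketch in plain Java. The four face names, the threshold constants, and the order of the checks are all assumptions for illustration; the real app was calibrated by hand to one face and may work differently.

```java
// Hypothetical sketch: choose one of four faces from two FaceOSC gesture values.
class TrollFacePicker {
    enum Face { NEUTRAL, SMILING, SURPRISED, MAD }

    // Illustrative thresholds only, not values from the original app.
    static final float SMILE_WIDTH = 16.0f;
    static final float BROW_RAISED = 9.0f;
    static final float BROW_LOWERED = 7.0f;

    static Face pick(float mouthWidth, float eyebrowHeight) {
        if (eyebrowHeight < BROW_LOWERED) return Face.MAD;       // furrowed brows
        if (eyebrowHeight > BROW_RAISED) return Face.SURPRISED;  // raised brows
        if (mouthWidth > SMILE_WIDTH) return Face.SMILING;       // wide mouth
        return Face.NEUTRAL;
    }
}
```

Checking the eyebrows before the mouth is a design choice made here so that a frown wins over a wide mouth; calibrating the constants per person is the step the post describes as taking the most time.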

In the future I hope to add more faces, and make the app more general. Imagine – you can take any YouTube video and add a troll face to it! SUPER PRODUCTIVE!

Nathan

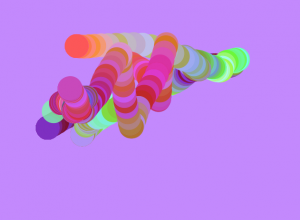

27 Jan 2013

This is so freaking fun. I was able to use the FaceOSC application along with a simple sketchpad-style Processing sketch. I really believe in an interface that is very intuitive, if not almost unnecessary. This project was the most beautiful of my Intensive, using colors coordinated in triples by mapping eyebrows and mouth width. Smiling and eyebrow-raising are natural things that humans do, and I wanted to make that the mainstay of the project. Watching yourself make a drawing is quite beautiful, and I think that makes the project very successful.

My code is here.
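For readers without the linked code, mapping gestures to a color can be sketched like this in plain Java. It reads "colors in triples" as an RGB triple, which is an assumption, as are the input ranges and the class name `GestureColor`. The `remap` helper uses the same linear formula as Processing's `map()`, with clamping added.

```java
// Hypothetical sketch: turn three FaceOSC gesture values into an RGB triple.
class GestureColor {
    // Linear remap from [inLo, inHi] to [outLo, outHi], clamped to the output range.
    static float remap(float v, float inLo, float inHi, float outLo, float outHi) {
        float t = (v - inLo) / (inHi - inLo);
        t = Math.max(0f, Math.min(1f, t));
        return outLo + t * (outHi - outLo);
    }

    // Input ranges (7..10 for eyebrows, 10..20 for mouth width) are assumed.
    static int[] colorFor(float eyebrowLeft, float eyebrowRight, float mouthWidth) {
        int r = Math.round(remap(eyebrowLeft, 7f, 10f, 0f, 255f));
        int g = Math.round(remap(eyebrowRight, 7f, 10f, 0f, 255f));
        int b = Math.round(remap(mouthWidth, 10f, 20f, 0f, 255f));
        return new int[] { r, g, b };
    }
}
```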

Kyna

27 Jan 2013

This project uses FaceOSC to track the position of your face, and maps it to a face in the Processing environment. It uses the orientation of your face to steer up, down, left and right on the screen. It also maps your mouth, and whether it is open or closed. By looking in the direction you want the face to move and opening your mouth, you can eat the small glowing sprites that wander around the frame. They leave a splatter where they were eaten, which fades with time. The bugs use a modified version of Daniel Shiffman’s boid class.

Git –> Soon, github hates me

Code?

The simple Splatter class:

    class Splatter {
      PVector loc;   // where the bug was eaten
      int life;      // alpha of the splat; counts down from 255 so it fades out
      PImage splat;

      Splatter(PVector l, PImage s) {
        loc = l;
        life = 255;
        splat = s;
      }

      void run() {
        pushMatrix();
        translate(loc.x, loc.y);
        tint(255, 255, 255, life);   // draw the splat with its current alpha
        image(splat, 0, 0);
        if (life > 0) life--;
        popMatrix();
      }
    }

Function used to determine if the bug is within range of the mouth:

    void eaten(Bug bug, float mouthWidth, float mouthHeight, PVector posePosition) {
      // horizontal range of the mouth
      if ((posePosition.x-(mouthWidth*4)-20 <= bug.loc.x) &&
          (bug.loc.x <= (3*(mouthWidth*2)+posePosition.x)+10)) {
        // vertical range of the mouth; it grows as the mouth opens
        if ((posePosition.y+75 <= bug.loc.y) &&
            (bug.loc.y <= posePosition.y+(mouthHeight*20)+75)) {
          Splatter temp;
          PVector tempLoc = new PVector(bug.loc.x, bug.loc.y);
          // respawn the bug off-screen and count the catch
          bug.loc.x = random(-100, 0);
          bug.loc.y = random(-100, 0);
          score++;
          // leave a splat matching the bug type; (int)random(1, 3) picks 1 or 2
          if (bug.bug == lpic) {
            temp = new Splatter(tempLoc, (loadImage("l" + (int)random(1, 3) + ".png")));
            splatters.add(temp);
          }
          else if (bug.bug == fpic) {
            temp = new Splatter(tempLoc, (loadImage("f" + (int)random(1, 3) + ".png")));
            splatters.add(temp);
          }
          else if (bug.bug == spic) {
            temp = new Splatter(tempLoc, (loadImage("s" + (int)random(1, 3) + ".png")));
            splatters.add(temp);
          }
        }
      }
    }

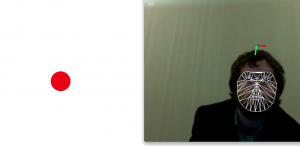

Michael

27 Jan 2013

OSC Face Ball from Mike Taylor on Vimeo.

This simple Processing demo uses facial movements from Kyle McDonald’s FaceOSC application and Dan Wilcox’s OSC template. At its core, this app is a very simple face-controlled physics simulator. As the user looks up, down, left, and right, forces are exerted on the ball, which cause it to accelerate in the direction of the user’s gaze. Each time the ball hits a wall, it loses a fraction of its kinetic energy to keep things from going out of control. There is certainly room for additional functionality that takes advantage of facial features like mouth and eye height, but these were difficult to test because FaceOSC kept insisting that my mouth is my mustache.

The Processing code can be found here.

And here:

    import oscP5.*;

    OscP5 oscP5;
    int found;                // number of faces FaceOSC currently sees
    PVector poseOrientation;  // head rotation reported by FaceOSC

    // ball position, velocity, and mass
    float ballX = 640;
    float ballVX = 0;
    float ballY = 360;
    float ballVY = 0;
    float ballM = 1;

    void setup() {
      size(1000, 720);
      oscP5 = new OscP5(this, 8338);
      oscP5.plug(this, "found", "/found");
      oscP5.plug(this, "poseOrientation", "/pose/orientation");
      poseOrientation = new PVector();
    }

    void draw() {
      background(255);
      if (found > 0) {
        // head rotation acts as a force on the ball (a = F / m)
        ballVX -= poseOrientation.y/ballM;
        ballX += ballVX;
        ballVY += poseOrientation.x/ballM;
        ballY += ballVY;

        // noStroke();
        // lights();
        // translate(ballX, 360, 0);
        // sphere(25);
        // println(ballX);

        // bounce off each wall, keeping 70% of the speed
        if (ballX > 1000) {
          ballX = 1000;
          ballVX = -.7*ballVX;
        }
        if (ballX < 0) {
          ballX = 0;
          ballVX = -.7*ballVX;
        }
        if (ballY > 720) {
          ballY = 720;
          ballVY = -.7*ballVY;
        }
        if (ballY < 0) {
          ballY = 0;
          ballVY = -.7*ballVY;
        }
      }
      ellipseMode(CENTER);
      noStroke();
      fill(255, 0, 0);
      ellipse(int(ballX), int(ballY), 50, 50);
    }

    public void found(int i) {
      found = i;
    }

    public void poseOrientation(float x, float y, float z) {
      println("pose orientation\tX: " + x + " Y: " + y + " Z: " + z);
      poseOrientation.set(x, y, z);
    }

    // all other OSC messages end up here
    void oscEvent(OscMessage m) {
      if (m.isPlugged() == false) {
      }
    }

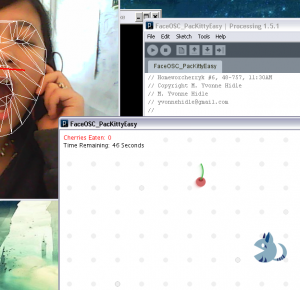

Yvonne

27 Jan 2013

Simple game using FaceOSC. I was originally going to combine FaceOSC with a pacman game I made for Jim’s class last semester, but I ran into difficulty with FaceOSC and collision mapping. So, instead, I combined FaceOSC commands with a really simple “point-and-shoot” game.

Basically, I am using my face position to move the character around the screen. When I open my mouth, the character opens its mouth, enabling it to eat the flying cherry.

Github Repository: https://github.com/yvonnehidle/faceOSC_pacKitty

Original Blog Post @ Arealess: http://www.arealess.com/working-with-faceosc/
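The "open your mouth to eat the cherry" rule described above can be sketched as a single check in plain Java. The class name `CherryCatch`, the reach radius, and the mouth-open threshold are assumptions for illustration and are not taken from the linked repository.

```java
// Hypothetical sketch: the character eats the cherry only if its mouth is open
// and the cherry is within reach.
class CherryCatch {
    static boolean canEat(float charX, float charY,
                          float cherryX, float cherryY,
                          float reach,            // assumed catch radius in pixels
                          float mouthHeight,      // value from /gesture/mouth/height
                          float openThreshold) {  // assumed "mouth is open" cutoff
        boolean mouthOpen = mouthHeight > openThreshold;
        float dx = cherryX - charX;
        float dy = cherryY - charY;
        // compare squared distances to avoid a square root
        return mouthOpen && dx * dx + dy * dy <= reach * reach;
    }
}
```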

Anna

26 Jan 2013

TL;DR: All the jagged lines in FaceOSC’s mask-thingy made me think of teeth. The fact that I could control something by chewing also made me think of teeth. I really like oceans, bizarre villainous things, and distractingly shiny things. All these facts put together resulted in a Processing approximation of an anglerfish.

Title credit to classmate Kim Harvey, who saw me meddling with this at my desk and promptly exclaimed “It’s like Nemo’s Nightmare…!”

If you don’t know what she’s talking about, here, have some Pixar:

The full implementation of this is in shambles right now. My intent was to create a set of schooling fish that would direct themselves toward the “light”, which is controlled by the coordinates of your nose in FaceOSC. Right now, that doesn’t quite work, but I did manage to create a set of random ‘fishies’ that don’t exactly school but will move toward the approximate location of your nose using the overall facial position coordinates (pos.x, pos.y) instead. The other thing I wanted to do was enable ‘eating’ of the little fishies, by writing some kind of collision detection between them and the (hidden) mouth ellipse.

But like I said in my other post: I am so not that classy yet. If you don’t believe me, check out the scary anglerfish of a code below, or at the GitHub Repo.

    // Nemo's Nightmare - a processing app using FaceOSC
    // created by Anna von Reden, 2013
    // for the IACD Spring 2013 class at the CMU School of Art
    //
    // created using a template for receiving face tracking osc messages from
    // Kyle McDonald's FaceOSC https://github.com/kylemcdonald/ofxFaceTracker
    //
    // 2012 Dan Wilcox danomatika.com
    // for the IACD Spring 2012 class at the CMU School of Art
    //
    // adapted from Greg Borenstein's 2011 example
    // http://www.gregborenstein.com/
    // https://gist.github.com/1603230
    //
    //TEARDROP CURVE
    //M. Kontopoulos (11.2010)
    //Based on the parametric equation found at
    //http://mathworld.wolfram.com/TeardropCurve.html
    //
    //STEERING BEHAVIOR
    //Based on code examples by iainmaxwell, found here:
    // http://www.supermanoeuvre.com/blog/?p=372

    import oscP5.*;

    OscP5 oscP5;

    // num faces found
    int found;

    // pose
    float poseScale;
    PVector posePosition = new PVector();
    PVector poseOrientation = new PVector();

    // gesture
    float mouthHeight;
    float mouthWidth;
    float eyeLeft;
    float eyeRight;
    float eyebrowLeft;
    float eyebrowRight;
    float jaw;
    float nostrils;

    // shape constants & variables
    float r = 5;
    float a = 5;

    ArrayList nemos; // an arraylist to store all of our nemos!
    PVector shiny; // the shiny is the target the nemos swim toward (set from the face position in draw())
    int numnemos = 50;
    int stageWidth = 640; // size of the environment in the X direction
    int stageHeight = 480; // size of the environment in the Y direction

    void setup() {
      size(stageWidth, stageHeight, P3D);
      frameRate(30);
      nemos = new ArrayList(); // make our arraylist to store our nemos
      shiny = new PVector(stageWidth/2, stageHeight/2, 0); // make a starting shiny

      // loop to make our nemos!
      for (int i = 0; i < numnemos; i++) {
        nemos.add( new Nemo() );
      }
      smooth();

      oscP5 = new OscP5(this, 8338);
      oscP5.plug(this, "found", "/found");
      oscP5.plug(this, "poseScale", "/pose/scale");
      oscP5.plug(this, "posePosition", "/pose/position");
      oscP5.plug(this, "poseOrientation", "/pose/orientation");
      oscP5.plug(this, "mouthWidthReceived", "/gesture/mouth/width");
      oscP5.plug(this, "mouthHeightReceived", "/gesture/mouth/height");
      oscP5.plug(this, "eyeLeftReceived", "/gesture/eye/left");
      oscP5.plug(this, "eyeRightReceived", "/gesture/eye/right");
      oscP5.plug(this, "eyebrowLeftReceived", "/gesture/eyebrow/left");
      oscP5.plug(this, "eyebrowRightReceived", "/gesture/eyebrow/right");
      oscP5.plug(this, "jawReceived", "/gesture/jaw");
      oscP5.plug(this, "nostrilsReceived", "/gesture/nostrils");
    }

    void draw() {
      background(0, 0, 30);
      noStroke();

      if (found > 0) {
        for (int i = 0; i < nemos.size(); i++) {
          Nemo A = (Nemo) nemos.get(i);
          A.run(); // Pass the population of nemos to the nemo!
        }

        translate(posePosition.x, posePosition.y);
        scale(poseScale);
        shiny = new PVector(posePosition.x, posePosition.y-50, 0);
        // if mouse is pressed then update shiny
        // fill(140, 180, 240);
        // stroke(0,0,30);
        // ellipse(-10, eyeLeft * -9, 5, 4);
        //ellipse(10, eyeRight * -9, 5, 4);
        //fill(0,0,30);
        //ellipse(0, 20, mouthWidth*5, mouthHeight * 5);

        noStroke();
        fill(25, 45, 45);
        beginShape(TRIANGLES);
        vertex(mouthWidth-2, mouthHeight*5); vertex(mouthWidth+2, mouthHeight*5); vertex(mouthWidth+6, mouthHeight);
        vertex(mouthWidth-10, mouthHeight*6); vertex(mouthWidth-6, mouthHeight*2); vertex(mouthWidth-2, mouthHeight*5);
        vertex(mouthWidth-14, mouthHeight*6); vertex(mouthWidth-12, mouthHeight*2); vertex(mouthWidth-10, mouthHeight*6);
        vertex(mouthWidth-18, mouthHeight*6); vertex(mouthWidth-16, mouthHeight*2); vertex(mouthWidth-14, mouthHeight*6);
        vertex(mouthWidth-26, mouthHeight*5); vertex(mouthWidth-22, mouthHeight*2); vertex(mouthWidth-18, mouthHeight*6);
        vertex(mouthWidth-34, mouthHeight); vertex(mouthWidth-30, mouthHeight*5); vertex(mouthWidth-26, mouthHeight*5);
        vertex(mouthWidth-2, mouthHeight*-7); vertex(mouthWidth+2, (mouthHeight*-5)+20); vertex(mouthWidth+6, mouthHeight*-3);
        vertex(mouthWidth-10, mouthHeight*-9); vertex(mouthWidth-6, (mouthHeight*-5)+20); vertex(mouthWidth-2, mouthHeight*-7);
        vertex(mouthWidth-14, mouthHeight*-9); vertex(mouthWidth-12, (mouthHeight*-5)+20); vertex(mouthWidth-10, mouthHeight*-9);
        vertex(mouthWidth-18, mouthHeight*-9); vertex(mouthWidth-16, (mouthHeight*-5)+20); vertex(mouthWidth-14, mouthHeight*-9);
        vertex(mouthWidth-26, mouthHeight*-7); vertex(mouthWidth-22, (mouthHeight*-5)+20); vertex(mouthWidth-18, mouthHeight*-9);
        vertex(mouthWidth-34, mouthHeight*-3); vertex(mouthWidth-30, (mouthHeight*-5)+20); vertex(mouthWidth-26, mouthHeight*-7);
        endShape();

        fill(180, 200, 100);
        beginShape();
        for (int i=0; i<360; i++) {
          float x = (nostrils*-1)/4 + sin( radians(i) ) * pow(sin(radians(i)/2), 1.5) *r;
          float y = (nostrils*-1)/4 + cos( radians(i) ) *r;
          vertex(x+3, -y-15);
        }
        endShape();
        //ellipse(0, nostrils * -1, 10, 10);
      }
    }

    class Nemo {
      PVector pos, vel, acc;
      float maxVel, maxForce, nearTheShiny;
      int nemoSize;

      Nemo() {
        pos = new PVector( random(0, width), random(0, height), 0 );
        vel = new PVector( random(-1, 1), random(-1, 1), 0 );
        acc = new PVector(0, 0, 0);
        maxVel = random(.5, 1.0);
        maxForce = random(0.2, 1.5);
        nearTheShiny = 200;
        nemoSize = 20;
      }

      void run() {
        seek(shiny.get(), nearTheShiny, true);
        // update position
        vel.add(acc); // add the acceleration to the velocity
        vel.limit(maxVel); // clip the velocity to a maximum allowable
        pos.add(vel); // add velocity to position
        acc.set(0, 0, 0); // make sure we set acceleration back to zero!
        toroidalBorders();
        render();
      }

      //Get to the Shiny!
      void seek(PVector shiny, float threshold, boolean slowDown) {
        acc.add( steer(shiny, threshold, slowDown) );
      }

      //Steering
      PVector steer (PVector shiny, float threshold, boolean slowDown ) {
        PVector steerForce; // The steering vector
        shiny.sub(pos);
        float d2 = shiny.mag();
        if ( d2 > 0 && d2 < threshold) {
          shiny.normalize();
          if ( (slowDown) && d2 < threshold/2 ) shiny.mult( maxVel * (threshold/stageWidth) );
          else shiny.mult(maxVel);
          shiny.sub(vel);
          steerForce = shiny.get();
          steerForce.limit(maxForce);
        }
        else {
          steerForce = new PVector(0, 0, 0);
        }
        return steerForce;
      }

      //keep fishies on screen
      void toroidalBorders() {
        if (pos.x < 0 ) pos.x = stageWidth;
        if (pos.x > stageWidth) pos.x = 0;
        if (pos.y < 0 ) pos.y = stageHeight;
        if (pos.y > stageHeight) pos.y = 0;
      }

      void render() {
        stroke(120, 190, 200);
        fill(120, 190, 200);
        ellipse(pos.x, pos.y, nemoSize/(int(poseScale)+1), nemoSize/(int(poseScale)+1));
        line(pos.x, pos.y, pos.x-(vel.x*nemoSize/(int(poseScale)+1)), pos.y-(vel.y*nemoSize/(int(poseScale)+1)) );
      }
    }

    // OSC CALLBACK FUNCTIONS

    public void found(int i) {
      println("found: " + i);
      found = i;
    }

    public void poseScale(float s) {
      println("scale: " + s);
      poseScale = s;
    }

    public void posePosition(float x, float y) {
      println("pose position\tX: " + x + " Y: " + y );
      posePosition.set(x, y, 0);
    }

    public void poseOrientation(float x, float y, float z) {
      println("pose orientation\tX: " + x + " Y: " + y + " Z: " + z);
      poseOrientation.set(x, y, z);
    }

    public void mouthWidthReceived(float w) {
      println("mouth Width: " + w);
      mouthWidth = w;
    }

    public void mouthHeightReceived(float h) {
      println("mouth height: " + h);
      mouthHeight = h;
    }

    public void eyeLeftReceived(float f) {
      println("eye left: " + f);
      eyeLeft = f;
    }

    public void eyeRightReceived(float f) {
      println("eye right: " + f);
      eyeRight = f;
    }

    public void eyebrowLeftReceived(float f) {
      println("eyebrow left: " + f);
      eyebrowLeft = f;
    }

    public void eyebrowRightReceived(float f) {
      println("eyebrow right: " + f);
      eyebrowRight = f;
    }

    public void jawReceived(float f) {
      println("jaw: " + f);
      jaw = f;
    }

    public void nostrilsReceived(float f) {
      println("nostrils: " + f);
      nostrils = f;
    }

    // all other OSC messages end up here
    void oscEvent(OscMessage m) {
      if (m.isPlugged() == false) {
        println("UNPLUGGED: " + m);
      }
    }
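The "eating" collision test that the post describes as unfinished could be sketched along these lines, in plain Java so it runs outside Processing. The ellipse geometry (centre at (0, 20), diameters `mouthWidth*5` and `mouthHeight*5` in the translated and scaled face frame) comes from the commented-out `ellipse()` call in `draw()`; using it as the collision shape, and the name `MouthCollision`, are assumptions.

```java
// Hypothetical sketch: is a fish inside the (hidden) mouth ellipse?
class MouthCollision {
    static boolean insideMouth(float fishX, float fishY,
                               float faceX, float faceY, float poseScale,
                               float mouthWidth, float mouthHeight) {
        float rx = mouthWidth * 2.5f;   // half of the mouthWidth*5 diameter
        float ry = mouthHeight * 2.5f;  // half of the mouthHeight*5 diameter
        // no face, or a fully closed mouth, can eat nothing
        if (poseScale <= 0 || rx <= 0 || ry <= 0) return false;

        // undo translate(faceX, faceY) and scale(poseScale), then shift to the ellipse centre
        float lx = (fishX - faceX) / poseScale;
        float ly = (fishY - faceY) / poseScale - 20f;

        // standard point-in-ellipse test
        float nx = lx / rx;
        float ny = ly / ry;
        return nx * nx + ny * ny <= 1f;
    }
}
```

In the sketch above this would be called once per `Nemo` with its `pos.x` and `pos.y`, plus `posePosition`, `poseScale`, `mouthWidth`, and `mouthHeight`.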

Robb

25 Jan 2013

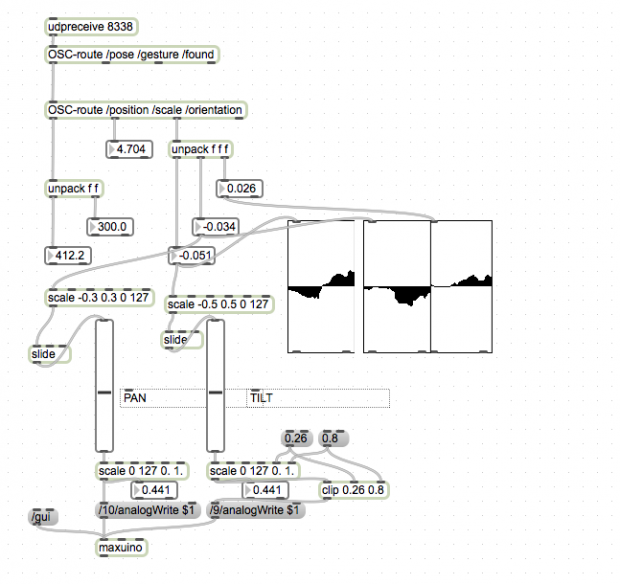

Using my face to control a pan/tilt mirror.

FaceOSC, Max/MSP, Maxuino, two servos, a Teensy 2.0 running StandardFirmata, and a shard of glass combine to make a system for directing laser beams using your head.

Maxuino is useful for Max-to-Arduino communication. It is developed by CMU art professor Ali Momeni.

I used Max because it makes smoothing and visualizing data really easy. This project took very little time.

Maxuino actually expects OSC-formatted messages, meaning that my patch is simply translating OSC to OSC and then to Firmata.
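The patch itself lives in Max, but the smooth-then-scale step it performs can be sketched in plain Java as a rough analogue. Everything here is an assumption for illustration: the class name `PanTiltMapper`, the exponential moving average as the smoothing method, the input range, and the 0 to 180 degree servo range.

```java
// Hypothetical sketch: smooth a head-orientation value, then scale it to a servo angle.
class PanTiltMapper {
    private final float alpha;   // smoothing factor in (0, 1]; smaller is smoother
    private float smoothed;
    private boolean primed = false;

    PanTiltMapper(float alpha) {
        this.alpha = alpha;
    }

    // Exponential moving average of the incoming samples.
    float smooth(float sample) {
        if (!primed) {
            smoothed = sample;
            primed = true;
        } else {
            smoothed += alpha * (sample - smoothed);
        }
        return smoothed;
    }

    // Map an angle in [-range, +range] to servo degrees 0..180, clamped at both ends.
    static int toServoDegrees(float angle, float range) {
        float t = (angle + range) / (2f * range);
        t = Math.max(0f, Math.min(1f, t));
        return Math.round(t * 180f);
    }
}
```

One mapper per axis (pan and tilt) would feed the two servos.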

Ersatz

24 Jan 2013

Lines of Emotion

Recently I read how drawn lines can express emotion: for example, more curved lines express a calm mood, while harsher, straight lines can express excitement. This application takes the user’s facial expression values via Kyle McDonald’s FaceOSC and transforms them into animated lines. For example, your mouth controls how curved the lines are, your eyes the speed at which they move, and your eyebrows the distance between them.
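One way to picture that mapping is the sketch below, in plain Java. It is only an illustration under stated assumptions: the sine-wave shape, the `WAVE_FREQ` constant, and the name `EmotionLines` are invented here, and the three parameters stand in for values that would be derived from the mouth, eyes, and eyebrows.

```java
// Hypothetical sketch: three gesture-derived parameters shape a stack of animated lines.
class EmotionLines {
    static final float WAVE_FREQ = 0.05f;  // assumed horizontal frequency of the wave

    // Vertical position of line `index` at horizontal position x and time `time`.
    // curvature (from the mouth) sets the wave amplitude, so 0 gives a straight line;
    // speed (from the eyes) sets how fast the wave travels;
    // spacing (from the eyebrows) sets the distance between neighbouring lines.
    static float lineY(int index, float x, float time,
                       float curvature, float speed, float spacing) {
        float baseline = index * spacing;
        float wave = (float) Math.sin(WAVE_FREQ * x + speed * time);
        return baseline + curvature * wave;
    }
}
```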