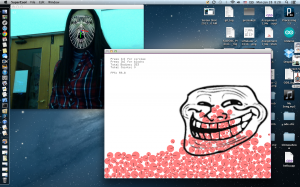

rainbowVomit from Andrew Bueno on Vimeo.

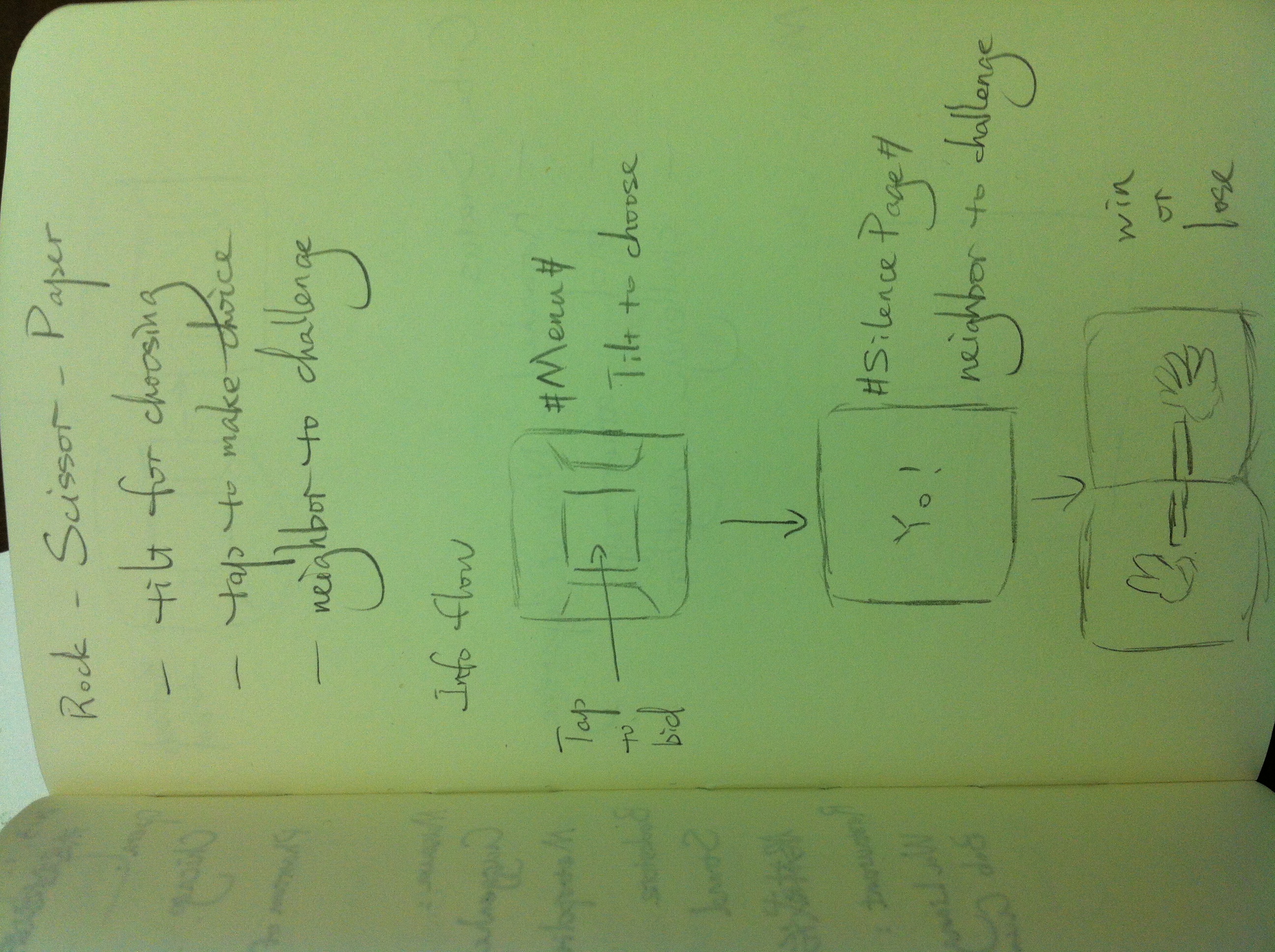

After talking about my old waterboarding clock Processing app in class the other day, I got a bit nostalgic for that old, lo-fi aesthetic. Thus, the crapsaccharine rainbow vomit app. I hooked up the FaceOSCSyphon app to Dan's FaceOSC template for Processing and tracked mainly the mouth: when its tracking points get far enough apart, the vomit begins. It took quite a bit of figuring out, and Caroline Record was a huge help. Another source of help was a fellow on openProcessing, who is properly credited in my code. Without him, the app wouldn't have that special brand of ridiculous.
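The mouth trigger boils down to a distance check on FaceOSC's raw landmark array. Below is a minimal plain-Java sketch (not the project's code) that mirrors the check in the sketch's `draw()`. It assumes the `/raw` OSC message is a flat `[x0, y0, x1, y1, ...]` array of 66 points, so point `p` sits at indices `2p` and `2p+1`; under that assumption the sketch's indices 122/123 and 128/129 are points 61 and 64. The class and helper names (`MouthTrigger`, `mouthOpen`, `copyRaw`) are hypothetical.

```java
/**
 * Plain-Java sketch of the mouth-open trigger (runs without Processing).
 * Assumption: FaceOSC's /raw payload is [x0, y0, x1, y1, ...] with 66 points,
 * so point p lives at indices 2p (x) and 2p + 1 (y).
 */
public class MouthTrigger {
    // Threshold in pixels, taken from the sketch's draw() (distance > 25).
    static final float OPEN_THRESHOLD = 25f;

    // x coordinate of point p in the flat raw array.
    static float x(float[] raw, int p) { return raw[2 * p]; }

    // y coordinate of point p in the flat raw array.
    static float y(float[] raw, int p) { return raw[2 * p + 1]; }

    // Euclidean distance, the same quantity Processing's dist(x1, y1, x2, y2) returns.
    static float dist(float x1, float y1, float x2, float y2) {
        return (float) Math.hypot(x2 - x1, y2 - y1);
    }

    // True when points 61 and 64 (raw indices 122-123 and 128-129) are far enough apart.
    static boolean mouthOpen(float[] raw) {
        return dist(x(raw, 61), y(raw, 61), x(raw, 64), y(raw, 64)) > OPEN_THRESHOLD;
    }

    // Defensive copy: never writes past the end of dst, whatever size the message is.
    static void copyRaw(float[] src, float[] dst) {
        System.arraycopy(src, 0, dst, 0, Math.min(src.length, dst.length));
    }

    public static void main(String[] args) {
        float[] raw = new float[132];
        // Hypothetical frame: point 61 at (320, 300), point 64 at (320, 340), 40 px apart.
        raw[122] = 320; raw[123] = 300;
        raw[128] = 320; raw[129] = 340;
        System.out.println("open? " + mouthOpen(raw));
        // Close the mouth to 10 px apart.
        raw[129] = 310;
        System.out.println("open? " + mouthOpen(raw));
    }
}
```

The strict `>` matches the sketch, so a gap of exactly 25 px does not start the vomit.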

https://github.com/buenoIsHere/RainbowVomit

```processing
//
// a template for receiving face tracking osc messages from
// Kyle McDonald's FaceOSC https://github.com/kylemcdonald/ofxFaceTracker
//
// 2012 Dan Wilcox danomatika.com
// for the IACD Spring 2012 class at the CMU School of Art
//
// adapted from Greg Borenstein's 2011 example
// http://www.gregborenstein.com/
// https://gist.github.com/1603230
//
// Addendum from Bueno: thank you florian on openProcessing for the wave code!

import codeanticode.syphon.*;
import oscP5.*;

SyphonClient client;
OscP5 oscP5;

// FaceOSC's /raw message carries 66 (x, y) pairs = 132 floats
float[] rawPoints = new float[132];

float cWidth;
float cHeight;
PImage img;
Wave wav;

// num faces found
int found;

// pose
float poseScale;
PVector posePosition = new PVector();
PVector poseOrientation = new PVector();

// gesture
float mouthHeight;
float mouthWidth;
float eyeLeft;
float eyeRight;
float eyebrowLeft;
float eyebrowRight;
float jaw;
float nostrils;

void setup() {
  size(640, 480, P3D);
  background(random(256), random(256), 255, random(256));
  smooth();
  frameRate(30);

  oscP5 = new OscP5(this, 8338);
  oscP5.plug(this, "found", "/found");
  oscP5.plug(this, "rawDataReceived", "/raw");

  client = new SyphonClient(this, "FaceOSC");

  colorMode(HSB);
  wav = new Wave(width/2, height/2, 25, PI/18, 0);
}

void draw() {
  if (client.available()) {
    // The first time getImage() is called with
    // a null argument, it will initialize the PImage
    // object with the correct size.
    //img = client.getImage(img); // load the pixels array with the updated image info (slow)
    img = client.getImage(img, false); // does not load the pixels array (faster)
    image(img, 0, 0);
    //background(255,0,0,0);

    if (found > 0) {
      noFill();
      noStroke();

      // start vomiting once mouth points 61 and 64 are more than 25 px apart
      wav.paint = dist(rawPoints[122], rawPoints[123], rawPoints[128], rawPoints[129]) > 25;

      // anchor the wave between mouth points 60 and 62, and scale its swing with mouth width
      wav.offsetX = (rawPoints[120] + rawPoints[124]) / 2;
      wav.offsetY = (rawPoints[121] + rawPoints[125]) / 2;
      wav.amplitude = rawPoints[124] - rawPoints[120] - 40;

      wav.display();
      wav.update();
      // ellipse(rawPoints[130], rawPoints[131], 2, 2);
    }
    else {
      println("not found");
    }
  }
}

void mousePressed() {
  background(random(256), random(256), 255, random(256));
}

// OSC CALLBACK FUNCTIONS

public void found(int i) {
  println("found: " + i);
  found = i;
}

public void rawDataReceived(float[] f) {
  // copy at most rawPoints.length values so an unexpected message size can't overrun the array
  for (int i = 0; i < min(f.length, rawPoints.length); i++) {
    rawPoints[i] = f[i];
  }
}

// all other OSC messages end up here
void oscEvent(OscMessage m) {
  if (m.isPlugged() == false) {
    println("UNPLUGGED: " + m);
  }
}

/* OpenProcessing Tweak of *@*http://www.openprocessing.org/sketch/46795*@* */
/* !do not delete the line above, required for linking your tweak if you re-upload */

/* Some fun with the sin function
   once you press the left button of the mouse, you can modulate
   the scale and the frequency of a sine wave */
// I tried to keep things clean, using OOP
// I'm not 100% sure about the grammar and the vocabulary in the comments
// this script is inspired by the awesome book "Processing: A Programming Handbook for Visual Designers and Artists"
// written by Casey Reas and Ben Fry

class Wave {
  float offsetX;   // this will position our wave on the X axis
  float offsetY;   // and this on the Y axis (the top of the stream)
  float amplitude; // how far the wave swings left and right
  float frequency; // this will set the frequency (from batshit fast to insanely zoombastic)
  float angle;     // it's an angle, everybody knows that!
  boolean paint;   // only draw visible (filled) ellipses while this is true

  // constructor
  Wave(float offX, float offY, float amp, float fre, float ang) {
    offsetX = offX;
    offsetY = offY;
    amplitude = amp;
    frequency = fre;
    angle = ang;
    paint = false;
  }

  void display() {
    // step down the y axis 0.2 px at a time, from offsetY to the bottom of the window
    for (float y = offsetY; y <= height; y += .2) {
      /* So this is when it's getting all maths and stuff:
         a neat formula that updates the x position of the ellipse you'll draw,
         so, after some movement, it will look like a wave */
      float posx = offsetX + (sin(angle) * (amplitude + map(y, offsetY, height, 20, 100)));

      /* you draw an ellipse:
         the y position is increasing every time you loop,
         the x position is going left and right and left and right, and...
         my ellipse is 10 pixels high and 10 pixels wide. This is how I like my ellipse */
      ellipse(posx, y, 10, 10);

      /* a nice and easy way to get RAINBOW colours
         (don't forget to set the colorMode to HSB in the setup function)
         h will set the hue;
         the h value is calculated with a sin function and mapped to a value between 0 and 130 */
      float h = map(sin(angle), -1, 1, 0, 130);

      // here is our h again, for hue; saturation and brightness are at 255
      // (the fill takes effect on the next ellipse drawn)
      if (paint) {
        fill(h, 255, 255);
      }
      else {
        noFill();
      }

      // don't forget to increase the angle by adding frequency,
      // or your wave will be flat; you don't want that
      angle += frequency/2;
    }
  }

  /* this function is called each frame. In the openProcessing original it
     modified amplitude and frequency depending on the mouse position once you
     pressed the left button; here draw() sets amplitude from the mouth width
     instead, so it is left empty */
  void update() {
  }
}
```
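For anyone without Processing installed, here is a rough plain-Java transcription of the math inside `Wave.display()`, handy for checking what a single frame computes. It is an illustration, not the sketch's code: `WaveMath`, `posX` and `hue` are hypothetical names, `map()` is re-implemented with Processing's linear remapping formula, and the starting values (offsetX 320, offsetY 240, amplitude 25, frequency PI/18) simply mirror the `new Wave(width/2, height/2, 25, PI/18, 0)` call in `setup()` on a 640x480 window.

```java
/**
 * Plain-Java sketch of the per-frame math in Wave.display().
 * map() re-implements Processing's map(value, start1, stop1, start2, stop2)
 * so this runs without Processing on the classpath.
 */
public class WaveMath {
    // Linear remap from [a1, b1] to [a2, b2], same formula as Processing's map().
    static float map(float v, float a1, float b1, float a2, float b2) {
        return a2 + (b2 - a2) * ((v - a1) / (b1 - a1));
    }

    // x position of the ellipse drawn at height y: the swing widens from +20 px to +100 px.
    static float posX(float offsetX, float offsetY, float amplitude, float angle, float y, float height) {
        return offsetX + (float) Math.sin(angle) * (amplitude + map(y, offsetY, height, 20, 100));
    }

    // HSB hue in [0, 130] for the current angle.
    static float hue(float angle) {
        return map((float) Math.sin(angle), -1, 1, 0, 130);
    }

    public static void main(String[] args) {
        float offsetX = 320, offsetY = 240, amplitude = 25, frequency = (float) (Math.PI / 18), angle = 0;
        float height = 480;
        int count = 0;
        // Same loop shape as display(): step y by 0.2 px and advance angle by frequency / 2.
        for (float y = offsetY; y <= height; y += 0.2f) {
            float x = posX(offsetX, offsetY, amplitude, angle, y, height);
            float h = hue(angle);
            if (count % 300 == 0) {
                System.out.printf("y=%.1f x=%.1f hue=%.1f%n", y, x, h);
            }
            angle += frequency / 2;
            count++;
        }
        System.out.println(count + " ellipses in this frame");
    }
}
```

Two things fall out of the arithmetic. Starting mid-screen, one call to `display()` draws roughly (480 - 240) / 0.2, about 1,200 ellipses (in the real sketch the start is the mouth's y position, so the count varies). And because `angle` is a field that `display()` keeps incrementing, each frame picks up where the previous one stopped, which is what makes the stream writhe instead of sitting still.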