Inspiration/References:

- Here is an awesome article about how virtual reality does and does not create presence.

- Virtual presence through a map interface.

- Making sense of maps.

- Obsessively documenting where you are and what you are doing: surveillance.

- Gandhi in Second Life.

- Camille Utterback’s Liquid Time.

- (Bueno) Ah, could you imagine what it would be like to have a video recording spanning years?

- The love of listening to other people’s stories.

- (Bueno) Archiving virtual worlds

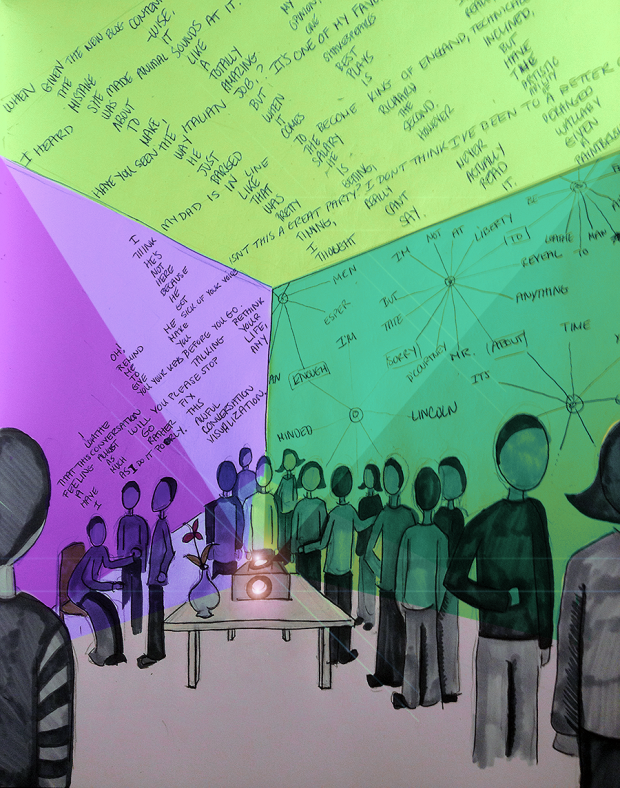

Thoughts:

- Carnegie Mellon and “making your mark”: people really just pass through places, and I think there is a kind of nostalgia in that.

- (Bueno) An addendum to that thought: what really “scrubs” places of our presence is just other people. Sure, nature reclaims everything eventually, but there is a fantastic efficiency in the way human beings repurpose and re-experience space.

- Artistic photos of old places.

Technically Helpful:

- Someone who used Street View and has code on GitHub: http://notlion.github.com/streetview-stereographic/#o=-.448,.479,-.054,.753&z=1.361&mz=18&p=23.65276,119.51718

- ASCII art Street View: http://tllabs.io/asciistreetview/

- Sound scrubbing library for Processing.