FaceOSC head orientation -> Processing -> Java Robot class -> types commands rapidly while using Rhino

I like Rhino 3D. It is a very powerful NURBS modeler. A few commands, specifically Join/Explode, Group/Ungroup, and Trim/Split, get used all the time. To run one, you either click a button or type the command and press Enter. Both take too long, and I'm lazy.

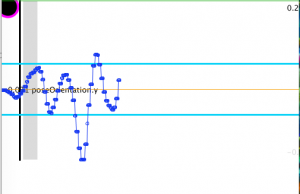

So I made this thingy that detects various head motions and triggers Rhino commands. Processing takes in data about the orientation of the head about the x, y, and z axes. Each signal has a running average, a relative threshold above and below that average, and a time zone (a min and max time) in which the signal pattern can count as a trigger. The required pattern is simple: the signal must cross the threshold and then return, and the time that takes must fit within the time zone.

In the video there are three graphs on the right side of the screen: x, y, and z, in order from the top. The light blue horizontal lines are the relative threshold (+ and -). The thin orangey line is the running average. The signal is dark blue when in bounds, light blue when below, and purple when above. The gray rectangle approximates the time zone, with the vertical black line as zero (it really should be at the right edge of each graph, but that seemed too cluttered).
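The trigger pattern described above (cross the threshold, come back, and do it within the time zone) can be sketched roughly like this. This is not the code from the original sketch: the class name `HeadTrigger`, its parameters, and the use of an exponential moving average as the "running average" are all assumptions made for illustration.

```java
// Hypothetical sketch of one signal channel (e.g. head rotation about x).
// All names here are made up for illustration; they are not from the original project.
class HeadTrigger {
    private final double threshold; // allowed distance above or below the running average
    private final double smoothing; // weight of each new sample in the average (0..1), an assumption
    private final long minMillis;   // shortest excursion that counts as a trigger
    private final long maxMillis;   // longest excursion that counts as a trigger

    private double average;
    private boolean hasAverage = false;
    private boolean wasOutside = false;
    private long leftBoundsAt = 0;

    HeadTrigger(double threshold, double smoothing, long minMillis, long maxMillis) {
        this.threshold = threshold;
        this.smoothing = smoothing;
        this.minMillis = minMillis;
        this.maxMillis = maxMillis;
    }

    // Feed one sample with its timestamp. Returns true only on the sample where the
    // signal comes back in bounds after an excursion whose duration fits the time zone.
    boolean update(double value, long nowMillis) {
        if (!hasAverage) {
            // The first sample just seeds the running average.
            average = value;
            hasAverage = true;
            return false;
        }
        boolean outside = Math.abs(value - average) > threshold;
        boolean fired = false;
        if (outside && !wasOutside) {
            // Signal just crossed the threshold: remember when.
            leftBoundsAt = nowMillis;
        } else if (!outside && wasOutside) {
            // Signal just returned: check how long it was out of bounds.
            long duration = nowMillis - leftBoundsAt;
            fired = duration >= minMillis && duration <= maxMillis;
        }
        wasOutside = outside;
        // Exponential moving average, standing in for the running average.
        average += smoothing * (value - average);
        return fired;
    }
}
```

In a Processing sketch you would presumably keep one of these per axis and call `update(value, millis())` each time a new orientation value arrives. This version treats excursions above and below the average the same; telling them apart would need one extra field for the direction.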

Sometimes it's rather glitchy, especially in the video: the screen grab makes things run slow. Also, the x and y axis triggers often get confused with each other. I have to hold my head pretty still. More effective signal processing would help. It would be awesome to combine various triggers to get more commands, but this would be rather difficult. I did set up the structure so that combinations of triggers from different channels (like eyebrows, mouth, and jaw) could code for specific commands.
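For the curious, here is one way the "combinations of triggers code for commands" idea and the Robot typing step could fit together. Again this is a sketch, not the original code: `CommandMapper`, the channel names, and which combination maps to which Rhino command are all hypothetical. The only real API used is `java.awt.Robot` (`keyPress`, `keyRelease`) and the `KeyEvent` key code constants.

```java
import java.awt.Robot;
import java.awt.event.KeyEvent;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical lookup from "which channels fired together" to a Rhino command name.
class CommandMapper {
    private final Map<Set<String>, String> commands = new HashMap<>();

    // Bind a command to a combination of channel names (order does not matter).
    void bind(String command, String... channels) {
        commands.put(new HashSet<>(Arrays.asList(channels)), command);
    }

    // Returns the bound command, or null if this combination means nothing.
    String lookup(Set<String> firedChannels) {
        return commands.get(firedChannels);
    }

    // Turn a letters-only command into key codes, followed by Enter.
    static int[] keyCodesFor(String command) {
        int[] codes = new int[command.length() + 1];
        for (int i = 0; i < command.length(); i++) {
            char c = Character.toLowerCase(command.charAt(i));
            if (c < 'a' || c > 'z') {
                throw new IllegalArgumentException("letters only: " + command);
            }
            // VK_A..VK_Z are consecutive key codes.
            codes[i] = KeyEvent.VK_A + (c - 'a');
        }
        codes[command.length()] = KeyEvent.VK_ENTER;
        return codes;
    }

    // Type the command into whichever window currently has keyboard focus.
    static void type(Robot robot, String command) {
        for (int code : keyCodesFor(command)) {
            robot.keyPress(code);
            robot.keyRelease(code);
        }
    }
}
```

Usage would look something like `mapper.bind("Join", "x")` and `mapper.bind("Trim", "eyebrows", "jaw")`, then `CommandMapper.type(new Robot(), command)` whenever `lookup` returns something. Note that `new Robot()` can throw `AWTException`, and the keystrokes go to the focused window, so Rhino has to be in front.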