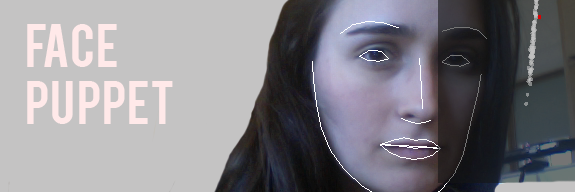

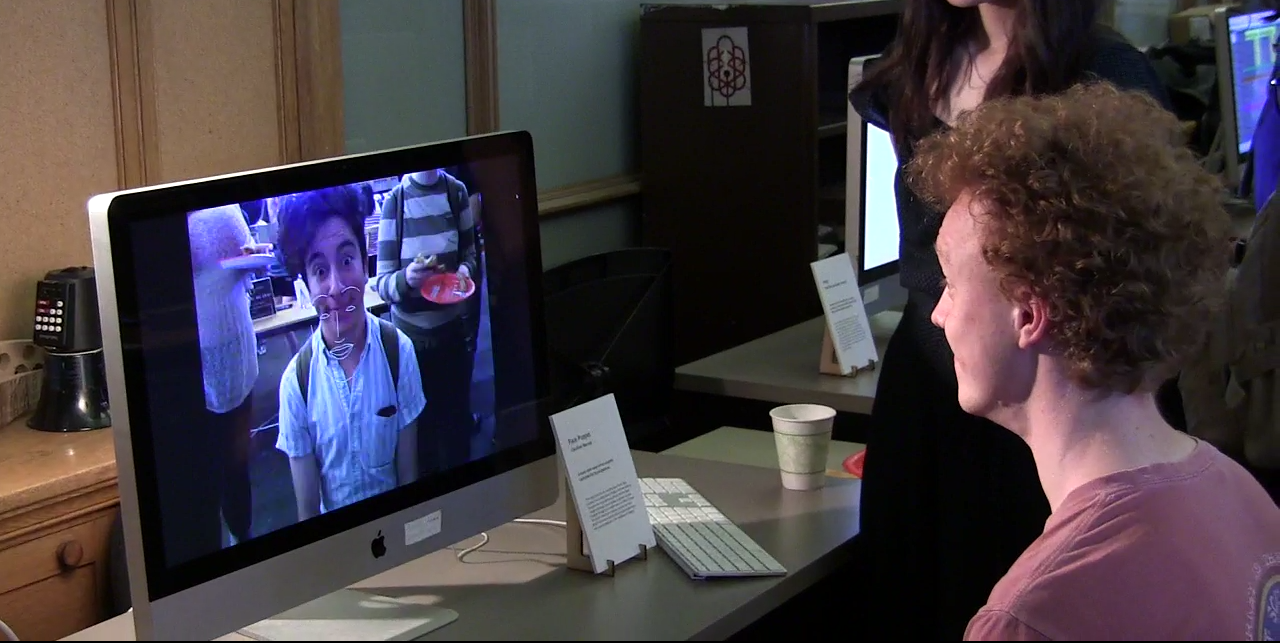

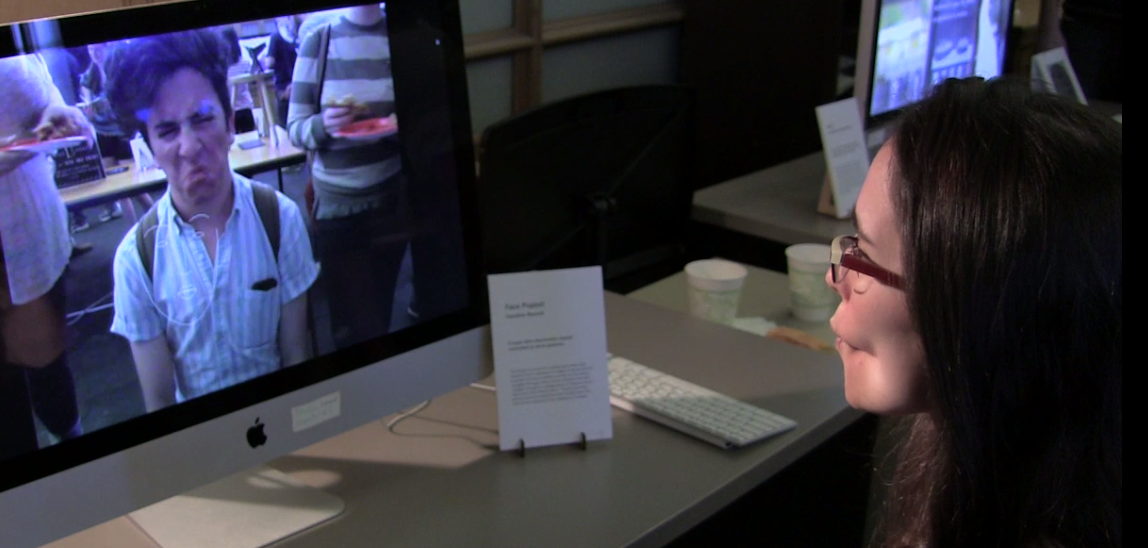

Face Puppet is a gesture-controlled stop-motion puppet. Every ten frames it captures the user's facial expression, finds the most similar expression in its database, and displays the corresponding image on the screen. I am interested in using it as a framework both for creating interactive portraits of characters and for building collective systems in which the user's identity is erased, leaving only the expression of their gesture.
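A minimal C++ sketch of that matching loop follows. It assumes each stored expression is a short vector of numbers (raw tracker measurements or, as described further down, their SVD-compressed coordinates) and that "most similar" means smallest Euclidean distance; the post does not name its metric. `Expression`, `findMostSimilar`, and `PuppetPlayer` are hypothetical names, not part of Face Tracker or Open Frameworks.

```cpp
#include <cstddef>
#include <limits>
#include <string>
#include <vector>

// Hypothetical record: one photographed expression and its feature vector.
struct Expression {
    std::string imagePath;        // image shown when this expression is the best match
    std::vector<float> features;  // e.g. mouth height, eyebrow height, ...
};

// Squared Euclidean distance over the dimensions both vectors share.
// Skipping the square root does not change which entry is closest.
float squaredDistance(const std::vector<float>& a, const std::vector<float>& b) {
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size() && i < b.size(); ++i) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Linear nearest-neighbor search; returns -1 if the database is empty.
int findMostSimilar(const std::vector<Expression>& db, const std::vector<float>& current) {
    int best = -1;
    float bestDist = std::numeric_limits<float>::max();
    for (std::size_t i = 0; i < db.size(); ++i) {
        float d = squaredDistance(db[i].features, current);
        if (d < bestDist) {
            bestDist = d;
            best = static_cast<int>(i);
        }
    }
    return best;
}

// Re-matches only every `interval` frames and holds the previous image in between.
class PuppetPlayer {
public:
    explicit PuppetPlayer(int interval = 10) : interval_(interval) {}

    // Call once per frame; returns the index of the image to display.
    int update(const std::vector<Expression>& db, const std::vector<float>& current) {
        if (frame_ % interval_ == 0) {
            shown_ = findMostSimilar(db, current);
        }
        ++frame_;
        return shown_;
    }

private:
    int interval_;
    long frame_ = 0;
    int shown_ = -1;
};
```

Calling `update` from the app's per-frame update and drawing `db[index].imagePath` reproduces the "every ten frames" behavior; a linear scan is simple and likely fast enough for a database of hand-collected photos.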

Face Puppet was written in Open Frameworks using Kyle McDonald's Face Tracker and Dan Wilcox's AppUtils. I built the system around two modes. The first collected pictures of different expressions, stored all the metadata about them, and visualized the current database of expressions. The second, the puppet mode, analyzed the user's current expression, then found and displayed the face with the most similar expression.
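The two modes and the per-photo metadata could be sketched as below. This assumes, hypothetically, that each record is a photo path plus its feature values saved as one comma-separated line; the post does not describe its actual storage format. `Mode`, `ExpressionRecord`, `toLine`, `fromLine`, and `toggle` are illustrative names.

```cpp
#include <limits>
#include <sstream>
#include <string>
#include <vector>

// The two modes described above; the names are illustrative.
enum class Mode { Collect, Puppet };

// Hypothetical metadata kept for each captured photo.
struct ExpressionRecord {
    std::string imagePath;        // where the photo was saved
    std::vector<float> features;  // tracker measurements at capture time
};

// Serialize a record as one comma-separated line: path,f0,f1,...
std::string toLine(const ExpressionRecord& r) {
    std::ostringstream out;
    out.precision(std::numeric_limits<float>::max_digits10);  // exact float round trip
    out << r.imagePath;
    for (float f : r.features) out << ',' << f;
    return out.str();
}

// Parse a line produced by toLine (assumes the path contains no commas).
ExpressionRecord fromLine(const std::string& line) {
    ExpressionRecord r;
    std::istringstream in(line);
    std::getline(in, r.imagePath, ',');
    std::string field;
    while (std::getline(in, field, ',')) r.features.push_back(std::stof(field));
    return r;
}

// Switch between building the database and playing the puppet.
Mode toggle(Mode m) { return m == Mode::Collect ? Mode::Puppet : Mode::Collect; }
```

Writing with `max_digits10` precision lets each `float` survive the text round trip unchanged, so a database saved in collection mode reloads identically in puppet mode.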

Process

Making Face Puppet was quite the technical challenge! It was my first foray into Open Frameworks and the project involved some complicated math I hadn’t encountered before. I started out with the basic idea of a virtual stop motion puppet that would rely on rough gestural tracking.

As an initial experiment, I used FaceOSC and Processing to make some quick mock-ups. The sketch took a photograph every time the user's mouth reached a certain degree of openness. The results showed me how much variability I should expect from the face tracker.

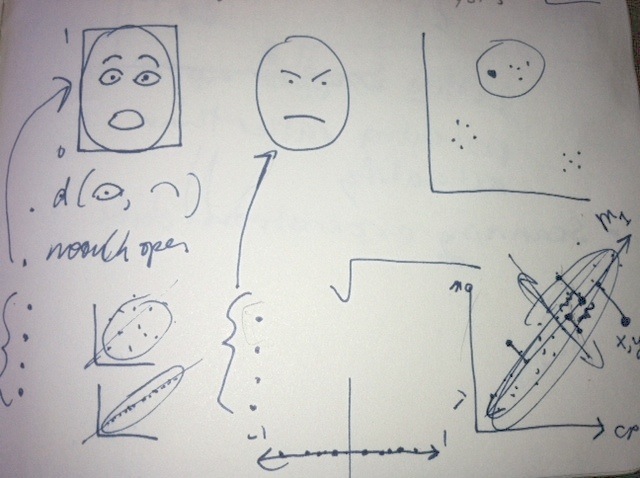

Within each of the first two rows, every face has the same amount of mouth openness.

The pictures in the last row are misidentifications.
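The capture trigger from that first experiment can be sketched as follows. The original mock-up was written in Processing with FaceOSC; this version is C++ to match the rest of the project. The target value, the tolerance band, and the rule that the trigger re-arms only after the mouth leaves the band are assumptions added so that holding one pose does not fire on every frame. `OpennessTrigger` is a hypothetical name.

```cpp
#include <cmath>

// Fires once each time the measured mouth openness enters a target band,
// then waits until it leaves the band before it can fire again.
// Target and tolerance values are hypothetical and tracker-dependent.
class OpennessTrigger {
public:
    OpennessTrigger(float target, float tolerance)
        : target_(target), tolerance_(tolerance) {}

    // Call once per frame; returns true on the frame a photo should be taken.
    bool update(float openness) {
        bool inBand = std::fabs(openness - target_) <= tolerance_;
        bool fire = inBand && !wasInBand_;
        wasInBand_ = inBand;
        return fire;
    }

private:
    float target_;
    float tolerance_;
    bool wasInBand_ = false;
};
```

Because the tracker's openness reading is noisy, a band rather than an exact value is needed, and the noise is exactly what produces the variation and misidentifications visible in the image above.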

I decided to move to Open Frameworks because I knew it would give me more speed for a program that relied on a database. Once I was settled in Open Frameworks, thanks to plenty of sage advice from Dan Wilcox, my next big step was to figure out how to categorize the images and find the most similar ones. Golan recommended Singular Value Decomposition (SVD), a matrix factorization that can compress multi-dimensional data into fewer dimensions, which makes it easier to see how the different elements cluster.
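For reference, this is the standard way a truncated SVD compresses such data. It assumes each of the n photos is a row of d tracker measurements in a matrix X, and that the columns are mean-centered first (the post does not say whether centering was used). μ is the vector of column means, V_k holds the first k right singular vectors, U_k and Σ_k are the matching columns of U and top-left block of Σ, and a new frame's measurement vector x is mapped to k coordinates z that can be compared against the stored photos:

$$
X - \mathbf{1}\mu^{\top} = U \Sigma V^{\top}, \qquad
Z = \left(X - \mathbf{1}\mu^{\top}\right) V_k = U_k \Sigma_k, \qquad
z = V_k^{\top} (x - \mu)
$$

In this project d = 4 and k = 2.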

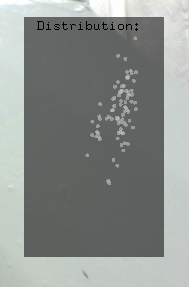

Most of my time on this project was spent understanding and implementing SVD. I found this tutorial about implementing SVD in Ruby particularly helpful; implementing it in Open Frameworks was grueling. I ended up using four dimensions of data and compressing them down to two. I would have preferred to use more dimensions, but past a certain point the compression became unreliable.
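The post doesn't include its Open Frameworks code, so the following is only a minimal, dependency-free C++ sketch of the same idea, not the original implementation. It fits an SVD to n photos × 4 measurements and keeps the two strongest axes. To stay self-contained it obtains the right singular vectors as eigenvectors of XᵀX using the cyclic Jacobi eigenvalue method, a swapped-in technique; the names `Matrix`, `jacobiEigen`, `SvdModel`, `fitSvd`, and `project` are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Cyclic Jacobi eigen-decomposition of a symmetric matrix `a` (modified in place).
// On return the diagonal of `a` holds the eigenvalues and the columns of `v`
// hold the matching unit eigenvectors.
void jacobiEigen(Matrix& a, Matrix& v) {
    const std::size_t n = a.size();
    v.assign(n, std::vector<double>(n, 0.0));
    for (std::size_t i = 0; i < n; ++i) v[i][i] = 1.0;
    for (int sweep = 0; sweep < 50; ++sweep) {
        double off = 0.0;  // sum of squared off-diagonal entries
        for (std::size_t p = 0; p < n; ++p)
            for (std::size_t q = p + 1; q < n; ++q) off += a[p][q] * a[p][q];
        if (off < 1e-20) break;  // effectively diagonal
        for (std::size_t p = 0; p < n; ++p) {
            for (std::size_t q = p + 1; q < n; ++q) {
                if (a[p][q] == 0.0) continue;  // nothing to rotate away
                // Rotation angle chosen so that a[p][q] becomes zero.
                const double theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q]);
                const double t = (theta >= 0.0 ? 1.0 : -1.0) /
                                 (std::fabs(theta) + std::sqrt(theta * theta + 1.0));
                const double c = 1.0 / std::sqrt(t * t + 1.0), s = t * c;
                for (std::size_t k = 0; k < n; ++k) {  // a <- a * J
                    const double akp = a[k][p], akq = a[k][q];
                    a[k][p] = c * akp - s * akq;
                    a[k][q] = s * akp + c * akq;
                }
                for (std::size_t k = 0; k < n; ++k) {  // a <- J^T * a
                    const double apk = a[p][k], aqk = a[q][k];
                    a[p][k] = c * apk - s * aqk;
                    a[q][k] = s * apk + c * aqk;
                }
                for (std::size_t k = 0; k < n; ++k) {  // v <- v * J
                    const double vkp = v[k][p], vkq = v[k][q];
                    v[k][p] = c * vkp - s * vkq;
                    v[k][q] = s * vkp + c * vkq;
                }
            }
        }
    }
}

// Learned compression: column means plus the top-k right singular vectors.
struct SvdModel {
    std::vector<double> mean;
    Matrix axes;                         // k rows, each a length-d unit vector
    std::vector<double> singularValues;  // largest first
};

// Fit on n rows of d measurements (assumes at least one row) and keep k axes.
// The right singular vectors of the centered data X are the eigenvectors of
// X^T X, and the singular values are the square roots of its eigenvalues.
SvdModel fitSvd(const Matrix& rows, std::size_t k) {
    const std::size_t n = rows.size(), d = rows[0].size();
    SvdModel m;
    m.mean.assign(d, 0.0);
    for (const auto& r : rows)
        for (std::size_t j = 0; j < d; ++j) m.mean[j] += r[j] / n;
    Matrix gram(d, std::vector<double>(d, 0.0));  // X^T X of the centered data
    for (const auto& r : rows)
        for (std::size_t i = 0; i < d; ++i)
            for (std::size_t j = 0; j < d; ++j)
                gram[i][j] += (r[i] - m.mean[i]) * (r[j] - m.mean[j]);
    Matrix v;
    jacobiEigen(gram, v);
    std::vector<std::size_t> order(d);  // eigenvalue indices, largest first
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&](std::size_t x, std::size_t y) { return gram[x][x] > gram[y][y]; });
    for (std::size_t c = 0; c < k && c < d; ++c) {
        std::vector<double> axis(d);
        for (std::size_t i = 0; i < d; ++i) axis[i] = v[i][order[c]];
        m.axes.push_back(axis);
        m.singularValues.push_back(std::sqrt(std::max(0.0, gram[order[c]][order[c]])));
    }
    return m;
}

// Compress one d-dimensional measurement to k coordinates: z = V_k^T (x - mean).
std::vector<double> project(const SvdModel& m, const std::vector<double>& x) {
    std::vector<double> z(m.axes.size(), 0.0);
    for (std::size_t c = 0; c < m.axes.size(); ++c)
        for (std::size_t i = 0; i < x.size(); ++i) z[c] += m.axes[c][i] * (x[i] - m.mean[i]);
    return z;
}
```

`project(model, measurements)` yields the 2D point to feed into a nearest-neighbor search. Forming XᵀX squares the data's condition number, so a dedicated SVD routine from a linear-algebra library is more robust in general; with only four columns of face measurements the simpler route is likely adequate. The sign of each axis is arbitrary, which does not affect distances between projected faces.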

The result was a moderately accurate system for identifying and matching facial expressions. Now that I have an implementation of facial expression mapping, in the future I would like to work on systematically masking and scaling each image.

Influences

Stop Motion by Ole Kristensen

Cheese by Christian Moeller

Ocean_v1. by Wolf Nkole Helzle

Stills of Project

Thank you to…

Golan, for teaching me about Singular Value Decomposition, and Dan Wilcox, for getting me started with Open Frameworks.