I have to walk through the radio station to get to campus every day, and there’s a small wooded area with lots of leaves on the ground (as there tends to be in the fall). I noticed that some of these leaves have weird holes in them, probably from small, hungry bugs, but unsettling nonetheless. These creepy leaves reminded me of a phenomenon called trypophobia, where people find themselves intensely afraid of small, patterned holes such as those in lotus seed pods or honeycomb. Small, patterned holes are also common in nature: besides lotus pods and honeycomb, they show up in wood, plants, coral, sponges, and more. Clearly, the art of generating small, patterned holes was worth investigating.


[screen02.pdf](./wp-content/uploads/sites/2/2013/10/screen02.pdf)

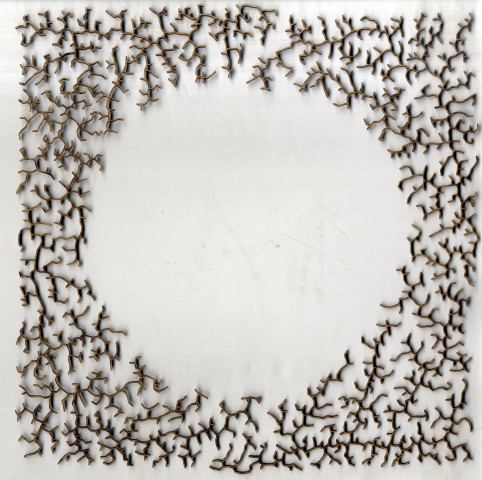

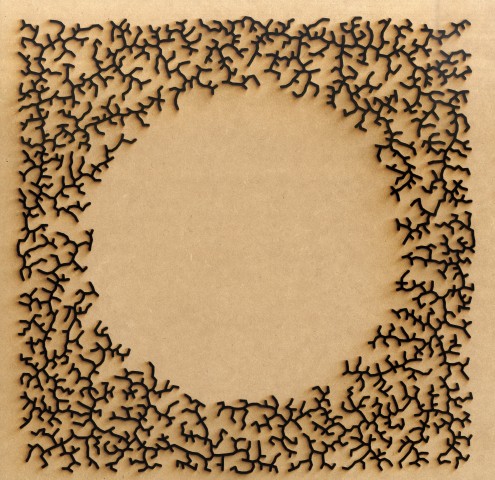

I ended up with two variants. The first has a lot more variation in size and shape, and fills the whole area. I basically shot particles at a board, and any that landed too close to an edge or to another particle fell off. Not very simulation-heavy!
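Stripped of the drawing code, the "shoot particles at a board" idea is a form of dart throwing (rejection sampling): propose a random circle, keep it only if it clears every circle already placed, and give up after too many misses in a row. Below is a minimal sketch of that loop in plain Java rather than Processing, so it runs without a sketchbook. The names `DartThrowing`, `Circle` and `place` and the fixed random seed are my own assumptions, and the linear radius shrink only approximates the `map()` call in the Processing version.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/**
 * Plain-Java sketch of the first variant's "dart throwing" placement.
 * Hypothetical names (not from the original): DartThrowing, Circle, place().
 * Only centers and radii are computed; nothing is drawn.
 */
public class DartThrowing {
    static final class Circle {
        final double x, y, r;
        Circle(double x, double y, double r) { this.x = x; this.y = y; this.r = r; }
    }

    static List<Circle> place(int maxCount, double minR, double maxR, double gap,
                              double cx, double cy, double spread,
                              int maxFailures, long seed) {
        Random rng = new Random(seed);
        List<Circle> placed = new ArrayList<>();
        int failures = 0; // consecutive rejected throws
        while (placed.size() < maxCount && failures < maxFailures) {
            // Largest allowed radius shrinks linearly from maxR toward minR as the board fills.
            double t = (double) placed.size() / maxCount;
            double hi = Math.max(minR, maxR - t * (maxR - minR));
            double r = minR + rng.nextDouble() * (hi - minR);
            // Same scatter as the Processing sketch: independent radius and angle for x and y.
            double x = cx + rng.nextDouble() * spread * Math.cos(2 * Math.PI * rng.nextDouble());
            double y = cy + rng.nextDouble() * spread * Math.sin(2 * Math.PI * rng.nextDouble());
            boolean fits = true;
            for (Circle c : placed) {
                if (Math.hypot(x - c.x, y - c.y) < r + c.r + gap) {
                    fits = false; // too close: this particle "falls off the board"
                    break;
                }
            }
            if (fits) {
                placed.add(new Circle(x, y, r));
                failures = 0;
            } else {
                failures++;
            }
        }
        return placed;
    }

    public static void main(String[] args) {
        // Numbers taken from the Processing sketch with inch = 60.
        List<Circle> holes = place(300, 7, 90, 7, 360, 360, 240, 6000, 42L);
        System.out.println(holes.size() + " holes placed");
    }
}
```

Each throw is checked against every hole already on the board, so the work grows roughly with the square of the hole count, which is harmless at a few hundred holes.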

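```processing
import processing.pdf.*;

int inch = 60;
int margin = inch/2; // only used by the commented-out edge-aware placement below

ArrayList<Blob> blobs;

void settings() {
  // Processing 3+ wants size() with variables (and smooth()) inside settings().
  size(inch*12, inch*12, PDF, "screen05.pdf");
  smooth();
}

void setup() {
  background(255);
  noFill();

  float minr = inch/8;    // smallest blob radius
  float maxr = 1.5*inch;  // largest blob radius
  int maxblob = 300;      // stop once this many blobs are placed...
  int attempts = 0;       // ...or after 6000 consecutive failed throws
  blobs = new ArrayList<Blob>();

  while ((blobs.size() < maxblob) && (attempts < 6000)) {
    // The maximum radius and the number of outline points both shrink as the board fills up.
    float rad = random(minr, maxr - map(blobs.size(), 0, maxblob, 0, maxr-minr+1));
    int points = int(random(5, 25 - map(blobs.size(), 0, maxblob, 0, 20)));

    // Edge-aware alternative: keep each blob a full margin away from the page edge.
    //float x = random(margin+rad, width-(margin+rad));
    //float y = random(margin+rad, height-(margin+rad));

    // x and y each get their own random radius and angle, so blobs scatter
    // within a square around the center of the page rather than a disc.
    float x = (width/2) + random(0, inch*4)*cos(random(TWO_PI));
    float y = (height/2) + random(0, inch*4)*sin(random(TWO_PI));

    // Reject the throw if it lands within inch/8 of an existing blob.
    boolean addBlob = true;
    for (Blob other : blobs) {
      float desiredSeparation = rad + other.r + (inch/8);
      if (dist(x, y, other.cx, other.cy) < desiredSeparation) {
        addBlob = false;
        break;
      }
    }

    if (addBlob) {
      blobs.add(new Blob(rad, points, x, y));
      attempts = 0;
    } else {
      attempts += 1;
    }
  }

  for (Blob b : blobs) {
    b.display();
  }
  println(blobs.size());
}

void draw() {
  // Everything was drawn in setup(); exiting closes and saves the PDF.
  println("Finished.");
  exit();
}

class Blob {
  float r;       // nominal radius
  float cx, cy;  // center
  int points;    // number of outline points

  Blob(float inr, int inpoints, float inx, float iny) {
    r = inr;
    points = inpoints;
    cx = inx;
    cy = iny;
  }

  // Draw a wobbly closed outline: points evenly spaced in angle,
  // each pulled in to between 60% and 100% of the radius.
  void display() {
    beginShape();
    float offset = random(0, 1); // rotate the starting point randomly
    for (int i = 0; i < points; i++) {
      float angle = TWO_PI*(i+offset)/points;
      float pr = random(.6, 1) * r;
      float px = cx + pr*cos(angle);
      float py = cy + pr*sin(angle);
      curveVertex(px, py);
      // curveVertex() treats the first and last points as control points only,
      // so they are doubled to make the curve actually pass through them.
      if ((i == 0) || (i == points-1)) {
        curveVertex(px, py);
      }
    }
    endShape(CLOSE);
  }
}
```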

[frame-0214.pdf](./wp-content/uploads/sites/2/2013/10/frame-0214.pdf)

The second variant uses Golan’s particle class to create a fleet of small holes that repel each other and drift apart within a larger circle. Clicking the mouse saves the current frame as a PDF.
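The motion in this variant comes from a simple force model: every pair of particles pushes apart with a force proportional to 1/d², velocities are damped and capped each frame, and a particle reverses direction when its next step would leave the big circle. Here is a minimal plain-Java sketch of that update, assuming unit masses and point-sized particles (the Processing class subtracts each hole's radius from the boundary). The names `RepulsionSketch`, `step` and `meanRadius` are hypothetical. Unlike the Processing loop, which visits every pair twice, this one visits each unordered pair once, so a `strength` of 3 here gives half the push the sketch applies with `inch/16`.

```java
import java.util.Random;

/**
 * Plain-Java sketch of inverse-square repulsion inside a circle.
 * Assumptions: unit masses, point particles, seeded java.util.Random.
 * Hypothetical names: RepulsionSketch, step(), meanRadius().
 */
public class RepulsionSketch {
    final double[] px, py, vx, vy;
    final double cx, cy, boundR;

    RepulsionSketch(int n, double cx, double cy, double boundR, double spread, long seed) {
        Random rng = new Random(seed);
        this.cx = cx;
        this.cy = cy;
        this.boundR = boundR;
        px = new double[n];
        py = new double[n];
        vx = new double[n]; // velocities start at zero
        vy = new double[n];
        for (int i = 0; i < n; i++) {
            // Start in a small square cluster around the center, as the sketch does.
            px[i] = cx + (rng.nextDouble() * 2 - 1) * spread;
            py[i] = cy + (rng.nextDouble() * 2 - 1) * spread;
        }
    }

    /** One frame: pairwise forces, then damping, speed cap, boundary bounce, Euler step. */
    void step(double strength, double damping, double maxSpeed) {
        int n = px.length;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < i; j++) { // each unordered pair once
                double dx = px[i] - px[j];
                double dy = py[i] - py[j];
                double d = Math.sqrt(dx * dx + dy * dy);
                if (d > 1.0) { // skip near-coincident pairs to avoid huge forces
                    double f = strength / (d * d); // magnitude falls off as 1/d^2
                    double fx = f * dx / d, fy = f * dy / d;
                    vx[i] += fx; vy[i] += fy; // mass 1: force adds straight to velocity
                    vx[j] -= fx; vy[j] -= fy; // equal and opposite
                }
            }
        }
        for (int i = 0; i < n; i++) {
            vx[i] *= damping;
            vy[i] *= damping;
            double speed = Math.hypot(vx[i], vy[i]);
            if (speed > maxSpeed) {
                vx[i] *= maxSpeed / speed;
                vy[i] *= maxSpeed / speed;
            }
            // Crude circular wall: reverse if the next step would leave the circle.
            if (Math.hypot(px[i] + vx[i] - cx, py[i] + vy[i] - cy) > boundR) {
                vx[i] = -vx[i];
                vy[i] = -vy[i];
            }
            px[i] += vx[i];
            py[i] += vy[i];
        }
    }

    /** Average distance from the circle's center, handy for watching the cluster spread. */
    double meanRadius() {
        double sum = 0;
        for (int i = 0; i < px.length; i++) sum += Math.hypot(px[i] - cx, py[i] - cy);
        return sum / px.length;
    }

    public static void main(String[] args) {
        RepulsionSketch sim = new RepulsionSketch(150, 360, 360, 300, 60, 7L);
        System.out.printf("mean radius before: %.1f%n", sim.meanRadius());
        for (int frame = 0; frame < 100; frame++) sim.step(3.0, 0.95, 6.0);
        System.out.printf("mean radius after:  %.1f%n", sim.meanRadius());
    }
}
```

Damping plus the speed cap keeps the integration calm even though the forces are large when two holes start close together.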

```processing
import processing.pdf.*;

boolean record;
int inch = 60;
ArrayList<Particle> particles;

void settings() {
  // Processing 3+ wants size() with variables (and smooth()) inside settings().
  size(inch*12, inch*12);
  smooth();
}

void setup() {
  background(255);
  noFill();
  particles = new ArrayList<Particle>();
  // Start 150 small holes clustered within an inch of the center.
  for (int i = 0; i < 150; i++) {
    float rad = random(inch/8, inch/4);
    particles.add(new Particle(width/2 + random(-inch, inch), height/2 + random(-inch, inch), rad));
  }
}

void draw() {
  if (record) {
    // Note that #### will be replaced with the frame number. Fancy!
    beginRecord(PDF, "frame-####.pdf");
  }
  background(255);

  float gravityForceX = 0;
  float gravityForceY = 0.0;
  float mutualRepulsionAmount = inch/16;

  // Inverse-square repulsion between every pair of particles. Each pair is
  // visited twice (once from each side), so the effective strength is doubled.
  for (Particle p : particles) {
    for (Particle other : particles) {
      float dx = p.px - other.px;
      float dy = p.py - other.py;
      float dh = sqrt(dx*dx + dy*dy);
      if (dh > 1.0) { // also skips p == other
        float componentInX = dx/dh;
        float componentInY = dy/dh;
        float proportionToDistanceSquared = 1.0/(dh*dh);
        float repulsionForcex = mutualRepulsionAmount * componentInX * proportionToDistanceSquared;
        float repulsionForcey = mutualRepulsionAmount * componentInY * proportionToDistanceSquared;
        p.addForce( repulsionForcex,  repulsionForcey);     // push p away from other
        other.addForce(-repulsionForcex, -repulsionForcey); // and other away from p
      }
    }
  }

  // Update all locations.
  for (Particle p : particles) {
    p.update();
  }
  // Gravity is zero here; the hook is kept for experimenting.
  for (Particle p : particles) {
    p.addForce(gravityForceX, gravityForceY);
  }
  for (Particle p : particles) {
    p.render();
  }

  if (record) {
    endRecord();
    record = false;
  }
}

// Record on a mouse press (not every frame) so thousands of files aren't created.
void mousePressed() {
  record = true;
}

class Particle {
  float r;
  float px, py;
  float vx, vy;
  float damping;
  float mass;
  boolean bLimitVelocities = true;
  boolean bPeriodicBoundaries = false;

  // The big circle the particles are confined to.
  float cx = width / 2;
  float cy = height / 2;
  float boundr = 300;

  // Constructor for the Particle
  Particle(float x, float y, float inr) {
    r = inr;
    px = x;
    py = y;
    vx = vy = 0;
    damping = 0.95;
    mass = 1.0;
  }

  // Add a force in. One step of Euler integration.
  void addForce(float fx, float fy) {
    float ax = fx / mass;
    float ay = fy / mass;
    vx += ax;
    vy += ay;
  }

  // Update the position. Another step of Euler integration.
  void update() {
    vx *= damping;
    vy *= damping;
    limitVelocities();
    handleBoundaries();
    px += vx;
    py += vy;
  }

  void limitVelocities() {
    if (bLimitVelocities) {
      float speed = sqrt(vx*vx + vy*vy);
      float maxSpeed = 6.0;
      if (speed > maxSpeed) {
        vx *= maxSpeed/speed;
        vy *= maxSpeed/speed;
      }
    }
  }

  void handleBoundaries() {
    if (bPeriodicBoundaries) {
      if (px > width ) px -= width;
      if (px < 0     ) px += width;
      if (py > height) py -= height;
      if (py < 0     ) py += height;
    } else {
      // Super tenuous circular boundary: reverse direction if the next
      // step would carry the hole's edge outside the big circle.
      if (dist(cx, cy, px+vx, py+vy) > boundr - r) {
        vx *= -1;
        vy *= -1;
      }
    }
  }

  // Draw the hole as a wobbly closed curve through 10 jittered points.
  // The jitter is re-rolled every frame, so the outlines shimmer.
  void render() {
    stroke(0);
    beginShape();
    float offset = random(0, 1);
    for (int i = 0; i < 10; i++) {
      float angle = TWO_PI*(i+offset)/10;
      float qr = random(0.75, 1) * r;
      float qx = px + qr*cos(angle);
      float qy = py + qr*sin(angle);
      curveVertex(qx, qy);
      // Double the first and last points so the curve passes through them.
      if ((i == 0) || (i == 10-1)) {
        curveVertex(qx, qy);
      }
    }
    endShape(CLOSE);
  }
}
```
