Short:

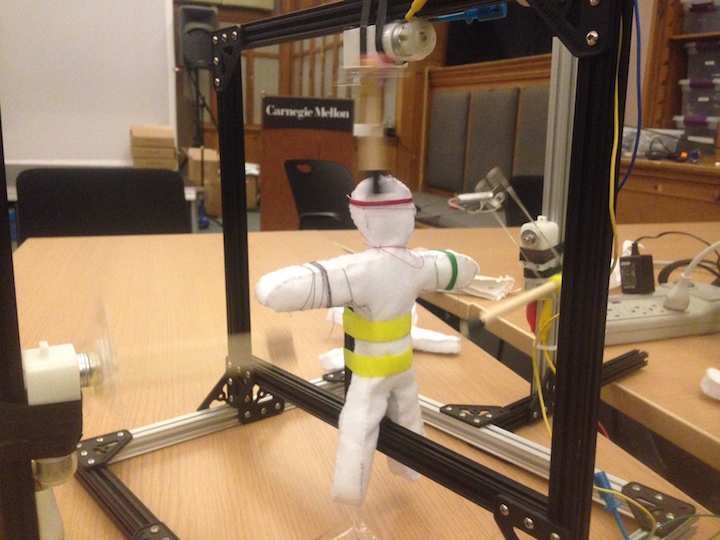

I made a visual sound doll wrapper.

Long:

I’m a fan of voodoo. (When I was five, I found a voodoo doll in New Orleans, and my parents didn’t tell me why it had a needle sticking out of its heart.)

I like the idea of a person’s body being composed of both tangible and intangible elements, so this sound visualizer was a straight forward way of doing so.

Sounds are first taken in and are then output through speed on one of three spinning wheels.

One speeds up and slows down as volume increases.

The second changes pace based on pitch.

The last switches from stopping to full speed based on a threshold of whether or not a piece of audio is “loud.”

This was created using Maxuino in Max.

This was created using Maxuino in Max.

Notes:

Thank you Ali Momeni for the technical support and the pulled pork (it changed me).