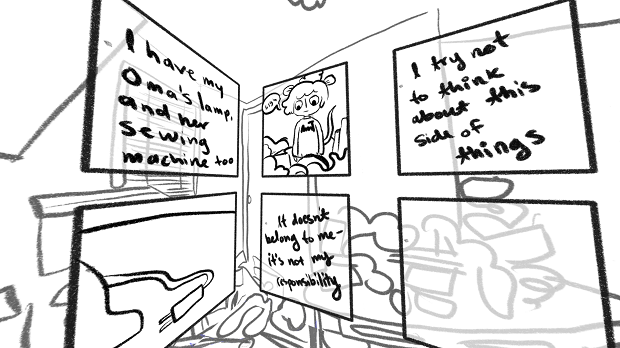

SkyComics is a pair of tools for drawing fully surrounding, immersive scenes. The first tool, an editor, allows the user to look in all directions by dragging and zooming, and to draw on their surroundings. It has tools for drawing lines and placing rectangular and circular guides, and multiple line colors can be used. The drawing can be exported as a skybox-formatted image for editing in digital painting software and re-imported any number of times. The second tool, a viewer, allows the user to view the created scenes by right-clicking to progress through a list of images, which can be edited in the source.

This project was created in collaboration with Caitlin Boyle. She created the scenes of room interiors. Two of these are shown below in the viewer.

The Inspiration

Caitlin’s inspiration for this project came from the idea of drawing an immersive hand-drawn comic that the viewer could navigate. Golan brought this idea to me so that I could make a tool that would let her create her comic without worrying about the warping imposed by skyboxes.

What I learned

This project let me learn JavaScript and several JavaScript libraries. I had not yet worked with JavaScript or web development (outside of a few small sketches in p5.js), so it gave me an opportunity to learn some of the language's pitfalls. One is what some would consider an odd scoping system. Another is the set of restrictions on file input and output, along with the complicated, poorly documented HTML5 APIs used for file I/O. I had also not yet worked with three.js, Hammer.js, dat.gui, or jQuery. Using them taught me how to work with JavaScript libraries, and how poorly documented even libraries from Google (dat.gui) can be.
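As an illustration of the kind of scoping surprise mentioned above (the specific issue I ran into is not recorded here, so this is just one common example), the sketch below contrasts function-scoped `var` with block-scoped `let` inside a loop. The function names `collectWithVar` and `collectWithLet` are made up for this example.

```javascript
// With `var`, the loop variable is scoped to the whole function,
// so every callback closes over the same `i`.
function collectWithVar() {
  var callbacks = [];
  for (var i = 0; i < 3; i++) {
    callbacks.push(function () { return i; });
  }
  // By the time the callbacks run, the loop has finished and i === 3.
  return callbacks.map(function (cb) { return cb(); });
}

// With `let`, each loop iteration gets its own binding of `i`.
function collectWithLet() {
  const callbacks = [];
  for (let i = 0; i < 3; i++) {
    callbacks.push(() => i);
  }
  return callbacks.map((cb) => cb());
}

console.log(collectWithVar()); // [ 3, 3, 3 ]
console.log(collectWithLet()); // [ 0, 1, 2 ]
```

Coming from languages where a loop variable is local to the loop body, the first result is easy to miss until an event handler misbehaves.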

What I did well

I feel that I reached my goals on several aspects of the project, and that I did a particularly good job on the view interaction. When scrolling a web page on a touch device, users touch and drag the content, and people are used to this experience. In both the viewer and the editor, the user can touch and drag the surrounding environment in the same way. In the editor, pen and mouse events are also separated from touch events, so the user can draw with the pen while dragging with a finger to move the scene. When no touch input is available, or the user prefers the mouse or pen, a mouse or pen right-click drag is treated like a touch drag and moves the scene. Pinching or scrolling zooms in and out.
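The routing described above can be sketched as a small decision function. This is not the project's actual code, which used Hammer.js; it is a minimal sketch that assumes the browser's standard Pointer Events, where `pointerType` is `"mouse"`, `"pen"`, or `"touch"` and `buttons` is a bitmask (1 for the primary button or pen contact, 2 for the right mouse button or pen barrel button). The name `classifyPointer` and the returned action strings are hypothetical.

```javascript
// Hypothetical helper: decide what a pointer event should do in the editor.
// Accepts any object shaped like a PointerEvent ({ pointerType, buttons }).
function classifyPointer(event) {
  // Fingers always move the scene, never draw.
  if (event.pointerType === "touch") return "pan";
  // Right mouse button or pen barrel button (bit 2) also moves the scene.
  if (event.buttons & 2) return "pan";
  // Primary mouse button or pen tip contact (bit 1) draws.
  if (event.buttons & 1) return "draw";
  // Hovering with no buttons pressed does nothing.
  return "none";
}

console.log(classifyPointer({ pointerType: "touch", buttons: 1 })); // pan
console.log(classifyPointer({ pointerType: "pen", buttons: 1 }));   // draw
console.log(classifyPointer({ pointerType: "mouse", buttons: 2 })); // pan
```

In a page, a function like this could be called from `pointerdown` and `pointermove` listeners on the canvas, so a pen stroke and a finger drag arriving at the same time are handled independently.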

Another thing I feel I did well was performance. At first, while drawing, each segment of a line from one frame to the next was a separate three.js object. Because every object is iterated over for scene updates and other events, this created a huge performance problem. I created a simple system that batches strokes together, greatly reducing the number of objects created. This made it possible for the program to run acceptably on both my phone and my tablet.
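The batching idea can be sketched roughly as follows. This is an illustrative reconstruction, not the code from the project: the class name `StrokeBatcher`, the `maxSegments` limit, and the `createObject` callback are all assumptions. In a three.js scene, the callback might build a single line object from the whole array of segments instead of one object per segment.

```javascript
// Hypothetical batcher: collects line segments and hands them off in groups,
// so one scene object represents many segments instead of just one.
class StrokeBatcher {
  constructor(maxSegments, createObject) {
    this.maxSegments = maxSegments;   // segments per scene object (assumed tunable)
    this.createObject = createObject; // callback that builds one object from a segment array
    this.pending = [];
  }

  // Called once per frame while the user is drawing.
  addSegment(from, to) {
    this.pending.push({ from, to });
    if (this.pending.length >= this.maxSegments) this.flush();
  }

  // Called when a batch fills up and again when the stroke ends.
  flush() {
    if (this.pending.length === 0) return;
    this.createObject(this.pending);
    this.pending = [];
  }
}

// Usage: 10 segments with a batch size of 4 produce 3 objects instead of 10.
const objects = [];
const batcher = new StrokeBatcher(4, (segments) => objects.push(segments));
for (let i = 0; i < 10; i++) {
  batcher.addSegment({ x: i, y: 0 }, { x: i + 1, y: 0 });
}
batcher.flush();
console.log(objects.length); // 3
```

A larger batch size means fewer objects for the scene to iterate over, at the cost of a longer delay before pending segments become part of the scene, unless the pending batch is also drawn separately.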

What I didn’t do well

Initially, the project I had planned had many more features. One of these was the ability to click on regions and navigate to other scenes. I ran into problems with other parts of the project, and the time I spent debugging them far exceeded the time I had expected to spend implementing all of the planned features. From this project I’ve learned that I need to budget for the time it might take to debug issues or learn how to do new things.