#05 (Due 2/23)

No Looking Outwards — Study Your Tools Instead

This week, there is no Looking Outwards assignment. Instead, you are asked to spend a little time familiarizing yourself with various speech and language tools, and what they can do for you. For example:

- Dan Shiffman has some especially helpful videos for this. See “Recommended Tools” and the videos embedded below.

- The RiTa.js Reference, Gallery and Examples are worth studying carefully.

- The p5.speech.js Examples are worth studying.

A Speech Game • Conversation System • Poetic Interlocutor

Create a (whimsical/provocative/sublime/playful) interactive game, character, chatbot or other system that uses speech input and/or speech output. Graphics are optional.

For example, you might make a voice-controlled children’s book (see this example by Liam Walsh); a rhyming game; a voice-controlled painting tool; or a text adventure. (This list is not exhaustive.) Links to the examples I showed in class are here: https://github.com/golanlevin/lectures/tree/master/lecture_speech. Keep in mind that speech recognition is error-prone; you’ll have to find a way to embrace the glitch and embrace the lag, at least for another couple of years.

Note: If you do use speech input, your project must respond to actual words. This means: an application that simply uses the participant’s microphone amplitude (volume) or audio pitch (from whistling, etc.) is not acceptable. Please discuss with the professor if you have questions.

Below is some helpful information about:

- Overview of Recommended Tools

- Template Code #1: Speech-Powered Etch-a-Sketch

- Template Code #2: Interactive Rhymer

- List of Deliverables

Overview of Recommended Tools

The main tools for this project are:

- p5.js (JavaScript)

- p5.speech.js

- RiTa.js

- nlp-compromise (optional)

p5.speech

Our main library for this project will be p5.speech by Luke DuBois from NYU. It performs both speech recognition (speech to text) and speech synthesis (text to speech). It provides access to a wide range of different voices (male, female, robotic, etc.), as well as controls for the speed and pitch of the synthesized voice. To use p5.speech, please read the following important instructions:

- You are advised to work with a local copy of p5.js on your laptop. Cloud-based online editors like the p5.Alpha editor or openProcessing will probably not support this addon, or not support it well. I recommend using a text editor like Sublime to edit your code. Note that p5.speech requires Chrome to run.

- Because accessing the microphone involves relaxing security restrictions in your browser, you’ll need to run your project by connecting to a local server. There are instructions for doing so here. (In other words, it won’t just work to double-click on an HTML file to run your program!) The speech toolkit connects to Google’s cloud service, so you may also have to disable ad blockers or other privacy filters. And don’t forget to allow use of your microphone when your browser asks.

- You’ll need to download p5.speech from http://ability.nyu.edu/p5.js-speech/.

- Put p5.speech.js in your addons folder.

- Don’t forget to add the p5.speech.js script to your HTML web page, and make sure it is correctly linked in.
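Because p5.speech depends on speech features built into the browser, it can help to check for them before starting your sketch. The following is only a minimal sketch: `checkSpeechSupport` is a hypothetical helper of my own naming (it is not part of p5.speech), and it assumes the browser exposes recognition as `SpeechRecognition` or `webkitSpeechRecognition` and synthesis as `speechSynthesis`.

```javascript
// Hypothetical helper (not part of p5.speech): reports which parts of the
// browser's Web Speech API appear to be available on a window-like object.
function checkSpeechSupport(globalObject) {
  const hasRecognition =
    typeof globalObject.SpeechRecognition === 'function' ||
    typeof globalObject.webkitSpeechRecognition === 'function';
  const hasSynthesis =
    typeof globalObject.speechSynthesis === 'object' &&
    globalObject.speechSynthesis !== null;
  return { recognition: hasRecognition, synthesis: hasSynthesis };
}
```

In a sketch, you might call `checkSpeechSupport(window)` at the top of `setup()` and show a message on the canvas if either value is false.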

More information and code templates are below. I recommend you view Shiffman’s videos for more help, too: 10.3: Text-to-Speech with p5.Speech and 10.4: Speech Recognition with p5.Speech.

10.3: Text-to-Speech with p5.Speech

10.4: Speech Recognition with p5.Speech

RiTa.js

The RiTa library by Daniel Howe can break a word into syllables, find words that rhyme with a given word, tag parts of speech, and much more. It works seamlessly with p5.js. Dan Shiffman provides an overview of using RiTa with p5.js.

NLP-Compromise

NLP-Compromise is a JavaScript “natural language processing” library which allows you to change the tense of verbs, pluralize nouns, negate statements, and perform other types of language-based operations.

Template Code #1: Speech Etch-A-Sketch

Here is a program (based on the p5.speech example) which uses the speech recognizer to control an etch-a-sketch drawing game. Some things to note:

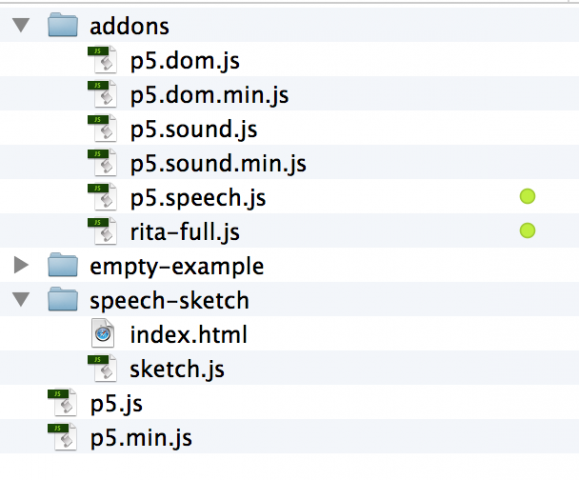

- Observe how my files are set up, in the screenshot below of my p5 directory. Pay special attention to the fact that I have placed p5.speech.js in the “addons” folder.

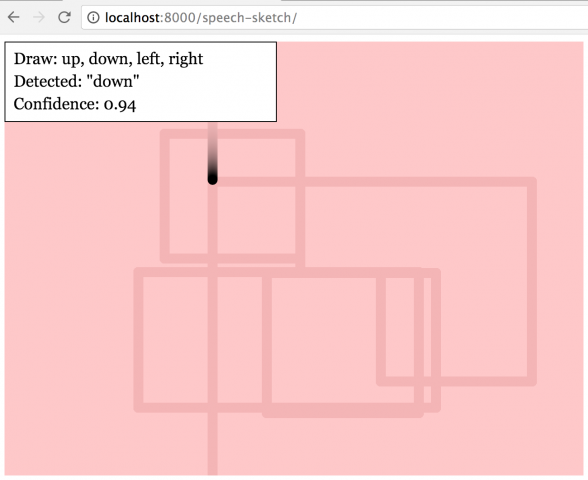

- In order to run the program, I have launched a local server from my p5 directory. You can see this in the screenshot of my Chrome browser, below.

- I have added a function to my p5 sketch, initializeMySpeechRecognizer(), to reset the speech recognizer in case it freezes up. This is currently triggered by pressing the spacebar, but you could also make it reset on a periodic schedule if you needed.
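The periodic reset mentioned in the last note could be sketched roughly as follows. `makePeriodicTrigger` is a hypothetical helper (not part of p5.js or p5.speech), and the 30-second interval in the wiring comment is an arbitrary guess that you would need to tune.

```javascript
// Hypothetical helper: returns a function that calls `action` at most once
// per `intervalMs`, based on the timestamps it is given.
function makePeriodicTrigger(intervalMs, action) {
  let lastFiredMs = null;
  return function tick(nowMs) {
    if (lastFiredMs === null) {
      lastFiredMs = nowMs; // the first call only starts the clock
      return false;
    }
    if (nowMs - lastFiredMs >= intervalMs) {
      lastFiredMs = nowMs;
      action();
      return true;
    }
    return false;
  };
}

// Example wiring inside a p5 sketch (assumes the template's
// initializeMySpeechRecognizer() is defined):
//   const resetTick = makePeriodicTrigger(30000, initializeMySpeechRecognizer);
//   function draw() { resetTick(millis()); /* ...rest of draw... */ }
```

Passing the current time in as an argument, rather than reading a clock inside the helper, keeps it easy to try out away from the browser.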

The structure of my file hierarchy:

How it looks when it’s running in the browser:

Here is the program’s index.html file:

<!DOCTYPE html>
<html>
  <head>
    <script src="../p5.js"></script>
    <script src="../addons/p5.speech.js"></script>
    <script src="sketch.js"></script>
  </head>
  <body>
  </body>
</html>

Here is sketch.js:

// ETCH-A-SKETCH with SPEECH

// speech recognizer variables
var mySpeechRecognizer;
var mostRecentSpokenWord;
var mostRecentConfidence;

// game variables
var x, y;
var dx, dy;

//=========================================
function setup() {
  createCanvas(640, 480);

  mostRecentConfidence = 0;
  mostRecentSpokenWord = "";
  initializeMySpeechRecognizer();

  x = width/2;
  y = height/2;
  dx = 0;
  dy = 0;
}

//=========================================
function initializeMySpeechRecognizer(){
  mySpeechRecognizer = new p5.SpeechRec('en-US');

  // These are important settings to experiment with
  mySpeechRecognizer.continuous = true; // Do continuous recognition
  mySpeechRecognizer.interimResults = false; // Set to true to allow partial recognition (faster, less accurate)
  mySpeechRecognizer.onResult = parseResult; // The speech recognition callback function
  mySpeechRecognizer.start(); // Start the recognition engine. Requires an internet connection!

  console.log(mySpeechRecognizer);
}

//=========================================
function draw() {
  background(255, 200, 200, 12);

  // Draw instructions and detected speech:
  fill(255);
  rect(0,0, 300,88);
  fill(0);
  textFont("Georgia");
  textSize(18);
  textAlign(LEFT);
  text("Draw: up, down, left, right", 10, 25);
  text("Detected: \"" + mostRecentSpokenWord + "\"", 10, 50);
  text("Confidence: " + nf(mostRecentConfidence,1,2), 10, 75);

  // Draw & move ellipse according to spoken commands
  fill(0,0,0);
  ellipse(x, y, 10,10);
  x+=dx;
  y+=dy;
  if(x<0) x = width;
  if(y<0) y = height;
  if(x>width) x = 0;
  if(y>height) y = 0;
}

//=========================================
function keyPressed(){
  if (key === ' '){
    // Press the spacebar to reset the speech recognizer.
    // This is helpful in case it freezes up for some reason.
    // If you have a lot of freezes, consider automating this.
    initializeMySpeechRecognizer();
  }
  if (key === 'x' || key === 'X'){
    // Press the X key to reset the game
    background(255, 200, 200);
    x = width/2;
    y = height/2;
    dx = 0;
    dy = 0;
  }
}

//=========================================
function parseResult() {
  // The Recognition system will often append words into phrases.
  // So the hack here is to only use the last word:
  console.log (mySpeechRecognizer.resultString);
  mostRecentSpokenWord = mySpeechRecognizer.resultString.split(' ').pop();
  mostRecentConfidence = mySpeechRecognizer.resultConfidence;

  if (mostRecentSpokenWord.indexOf("left" )!==-1) { dx=-1; dy=0; }
  else if(mostRecentSpokenWord.indexOf("right")!==-1) { dx=1; dy=0; }
  else if(mostRecentSpokenWord.indexOf("up" )!==-1) { dx=0; dy=-1; }
  else if(mostRecentSpokenWord.indexOf("down" )!==-1) { dx=0; dy=1; }
}

Here’s a video of me using the system. I throw some other words in there (“hamster”) to show you that it’s recognizing.
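One thing to be aware of: the template matches commands with `indexOf`, which looks for substrings, so a word like “upset” would count as “up”. Below is a hedged alternative that matches whole words only. `COMMANDS` and `findLastCommand` are hypothetical names of my own, and unlike the template (which examines only the final word), this version scans the whole phrase backwards for the most recent command.

```javascript
// Movement for each spoken command, using the same values as the template.
const COMMANDS = {
  left:  { dx: -1, dy: 0 },
  right: { dx: 1,  dy: 0 },
  up:    { dx: 0,  dy: -1 },
  down:  { dx: 0,  dy: 1 }
};

// Hypothetical helper: scans the phrase from its last word backwards and
// returns the movement for the most recent whole-word command, or null.
function findLastCommand(resultString) {
  const words = resultString.toLowerCase().split(/[^a-z]+/).filter(Boolean);
  for (let i = words.length - 1; i >= 0; i--) {
    if (Object.prototype.hasOwnProperty.call(COMMANDS, words[i])) {
      return COMMANDS[words[i]];
    }
  }
  return null;
}
```

Inside `parseResult()`, you could call `findLastCommand(mySpeechRecognizer.resultString)` and, if the result is not null, copy its `dx` and `dy` into the sketch's variables.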

Template Code #2: Interactive Rhymer

This program listens to what the user says; fetches a list of words that rhyme with the most recent detected word; and speaks a randomly selected rhyming word from that list. Some things to note:

- I documented this with headphones on. If I hadn’t, the speech synthesizer would tend to trigger the speech recognizer. There are ways around this (such as writing some timing latches).

- I documented this with the ScreenFlick capture tool, which makes it easy to grab both microphone audio and system audio. Your mileage may vary.
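A timing latch like the one mentioned above might look something like this. It is only a sketch under assumptions: `makeSpeechLatch` is a hypothetical helper, and the 1500 ms hold-off in the wiring comment is a guess that depends on how long your synthesized phrases take to speak.

```javascript
// Hypothetical timing latch: after the program speaks, recognition results
// are ignored until a hold-off period has passed.
function makeSpeechLatch(holdOffMs) {
  let closedUntilMs = 0;
  return {
    // Call this right when the synthesizer is asked to speak.
    close: function (nowMs) { closedUntilMs = nowMs + holdOffMs; },
    // True when recognition results may be trusted again.
    isOpen: function (nowMs) { return nowMs >= closedUntilMs; }
  };
}

// Example wiring in the rhymer sketch (the 1500 ms hold-off is a guess):
//   const latch = makeSpeechLatch(1500);
//   ...just before myVoiceSynthesizer.speak(word):  latch.close(millis());
//   ...at the top of parseResult():  if (!latch.isOpen(millis())) return;
```

A fixed hold-off is crude: long phrases may outlast it, and short ones will leave the recognizer deaf for longer than necessary.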

Here’s the program’s index.html file. Note how it includes links to both RiTa and p5.speech.

<!DOCTYPE html>
<html>
  <head>
    <script src="../p5.js"></script>
    <script src="../addons/p5.speech.js"></script>
    <script src="../addons/rita-full.js"></script>
    <script src="sketch.js"></script>
  </head>
  <body>
  </body>
</html>

Here’s the p5.js code in sketch.js:

// A program that says a word which rhymes
// with the most recent word you said.

// The speech recognizer
var mySpeechRecognizer;
var mostRecentSpokenWord;
var mostRecentConfidence;

// The speech synthesizer
var myVoiceSynthesizer;

// The RiTa Lexicon
var myRitaLexicon;
var bFoundRhymeWithMostRecentWord = true;
var aWordThatRhymesWithMostRecentWord = "";
var arrayOfRhymingWords = [];

//=========================================
function setup() {
  createCanvas(320, 320);

  // Make the speech recognizer
  mostRecentConfidence = 0;
  mostRecentSpokenWord = "";
  initializeMySpeechRecognizer();

  // Make the speech synthesizer
  myVoiceSynthesizer = new p5.Speech();
  myVoiceSynthesizer.setVoice(0);

  // Create the RiTa lexicon (for rhyming)
  myRitaLexicon = new RiLexicon();
}

//=========================================
function initializeMySpeechRecognizer(){
  mySpeechRecognizer = new p5.SpeechRec('en-US');
  mySpeechRecognizer.continuous = true; // do continuous recognition
  mySpeechRecognizer.interimResults = false; // set to true to allow partial recognition
  mySpeechRecognizer.onResult = parseResult; // recognition callback
  mySpeechRecognizer.start(); // start engine
  console.log(mySpeechRecognizer);
}

//=========================================
function draw() {
  background(0, 200, 255);

  // Draw detected speech:
  fill(255);
  rect(0,0, 319,62);
  fill(0);
  textFont("Georgia");
  textSize(18);
  textAlign(LEFT);
  text("You said: \"" + mostRecentSpokenWord + "\"", 10, 25);
  text("I rhymed: \"" + aWordThatRhymesWithMostRecentWord + "\"", 10, 50);

  findRhymeWithMostRecentWord();
  fill(0,115,150);
  text(arrayOfRhymingWords, 10, 90);
}

//=========================================
function keyPressed(){
  if (key === ' '){
    // Press the spacebar to reinitialize the recognizer.
    // This is helpful in case it freezes up for some reason.
    // If you have a lot of freezes, consider automating this.
    initializeMySpeechRecognizer();
  }
}

//=========================================
function parseResult() {
  mostRecentConfidence = mySpeechRecognizer.resultConfidence;
  if (mostRecentConfidence > 0.5){ // some confidence threshold...
    console.log (mySpeechRecognizer.resultString);

    // The Recognition system will often append words into phrases.
    // So the hack here is to only use the last word:
    mostRecentSpokenWord = mySpeechRecognizer.resultString.split(' ').pop();
    bFoundRhymeWithMostRecentWord = false;
  }
}

//=========================================
function findRhymeWithMostRecentWord(){
  if ((bFoundRhymeWithMostRecentWord === false) && (mostRecentSpokenWord.length > 0)){

    // Ask RiTa which words rhyme with mostRecentSpokenWord
    var rhymes = myRitaLexicon.rhymes(mostRecentSpokenWord);

    // If there are any words that rhyme,
    var nRhymes = rhymes.length;
    if (nRhymes > 0){
      // Select a random one from the returned list, and speak it.
      aWordThatRhymesWithMostRecentWord = rhymes[floor(random(nRhymes))];
      myVoiceSynthesizer.speak( aWordThatRhymesWithMostRecentWord);

      // Keep the first 10 rhyming words; insert some newline characters
      var arr = subset(rhymes, 0, min(nRhymes, 10)); // max of 10 words
      arrayOfRhymingWords = arr.join("\n");

    } else {
      // But if there are no rhymes, blank it.
      aWordThatRhymesWithMostRecentWord = "";
      arrayOfRhymingWords = [];
    }
    bFoundRhymeWithMostRecentWord = true;
  }
}
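If the rhymer often comes back empty, one possible cause (an assumption on my part, not something I have confirmed for every recognizer) is that the recognized word arrives capitalized or with punctuation attached, so the lexicon lookup misses. Here is a minimal sketch of a cleanup step; `lastWordForLookup` is a hypothetical helper, not part of RiTa or p5.speech.

```javascript
// Hypothetical helper: extracts the last word of a recognized phrase and
// normalizes it (lowercase, letters and apostrophes only) for lexicon lookup.
function lastWordForLookup(resultString) {
  const words = resultString.trim().split(/\s+/);
  const lastWord = words[words.length - 1] || '';
  return lastWord.toLowerCase().replace(/[^a-z']/g, '');
}
```

In `parseResult()`, this could stand in for the `split(' ').pop()` line. Note that it discards digits and accented letters, which is fine for English rhyming but not for every project.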

List of Deliverables

Now:

- Think it through! Do some sketches in your sketchbook. Discuss your idea with the professor, TA, or some friends.

- Using p5.js and associated tools, create a game or other experience which uses speech input and/or output. Graphics (or other displays) are optional.

- Blog it. Create a blog post. Give your post the title, nickname-Speech, and give it the WordPress Category, 05-Speech.

- Document it. The best technique may be to record a video with a screen-capture program. You can upload videos directly to this WordPress site (try to keep your video files under 10MB). I have installed a new WordPress plugin which should make your video embeds nicer.

- Write about it. Please write a paragraph (100-200 words) describing your concept. What is the context where you intend your speech program to be experienced? (For example: while jogging; in the car; at the desktop; etc.) Write about your process, and include an evaluation of your results.