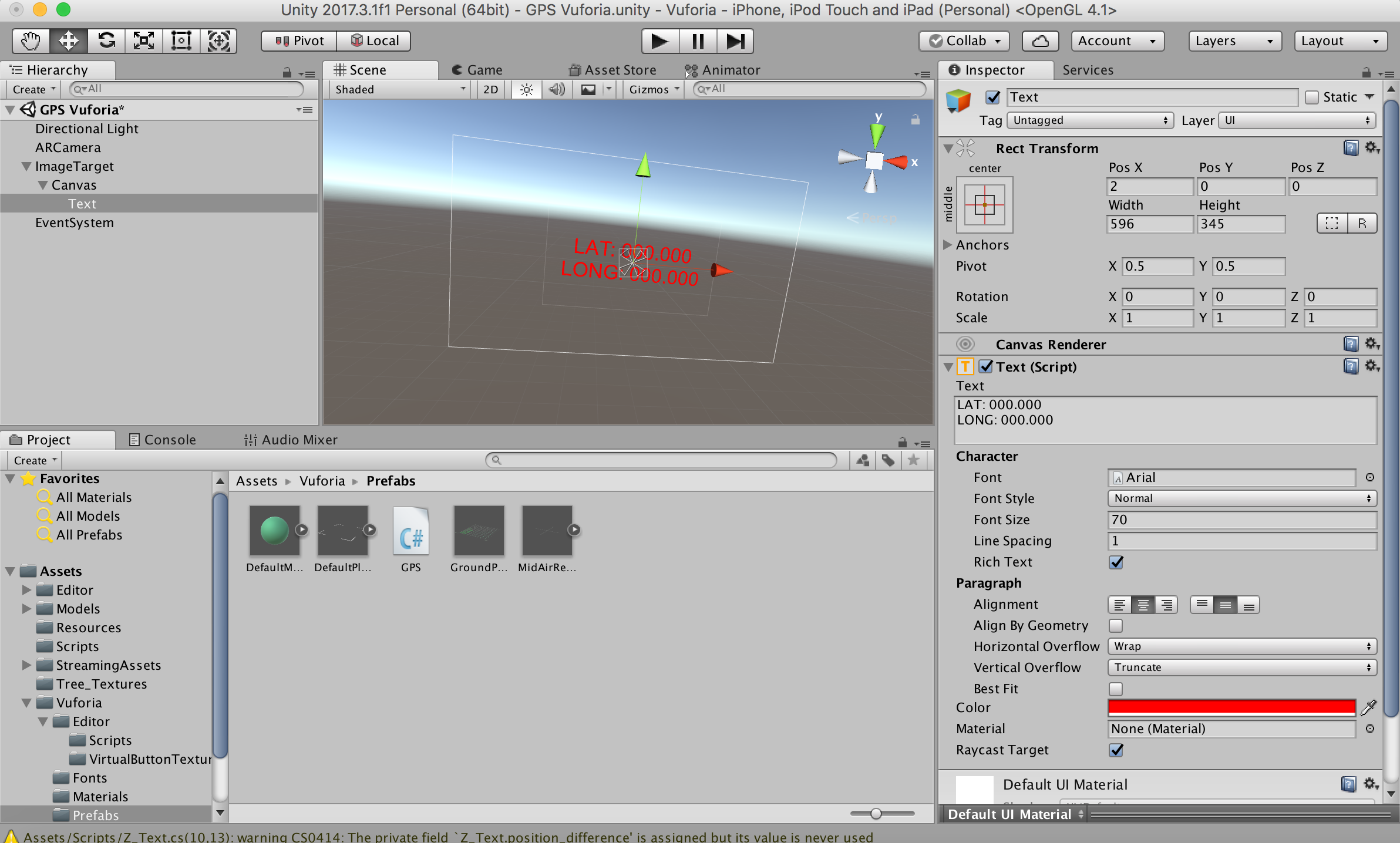

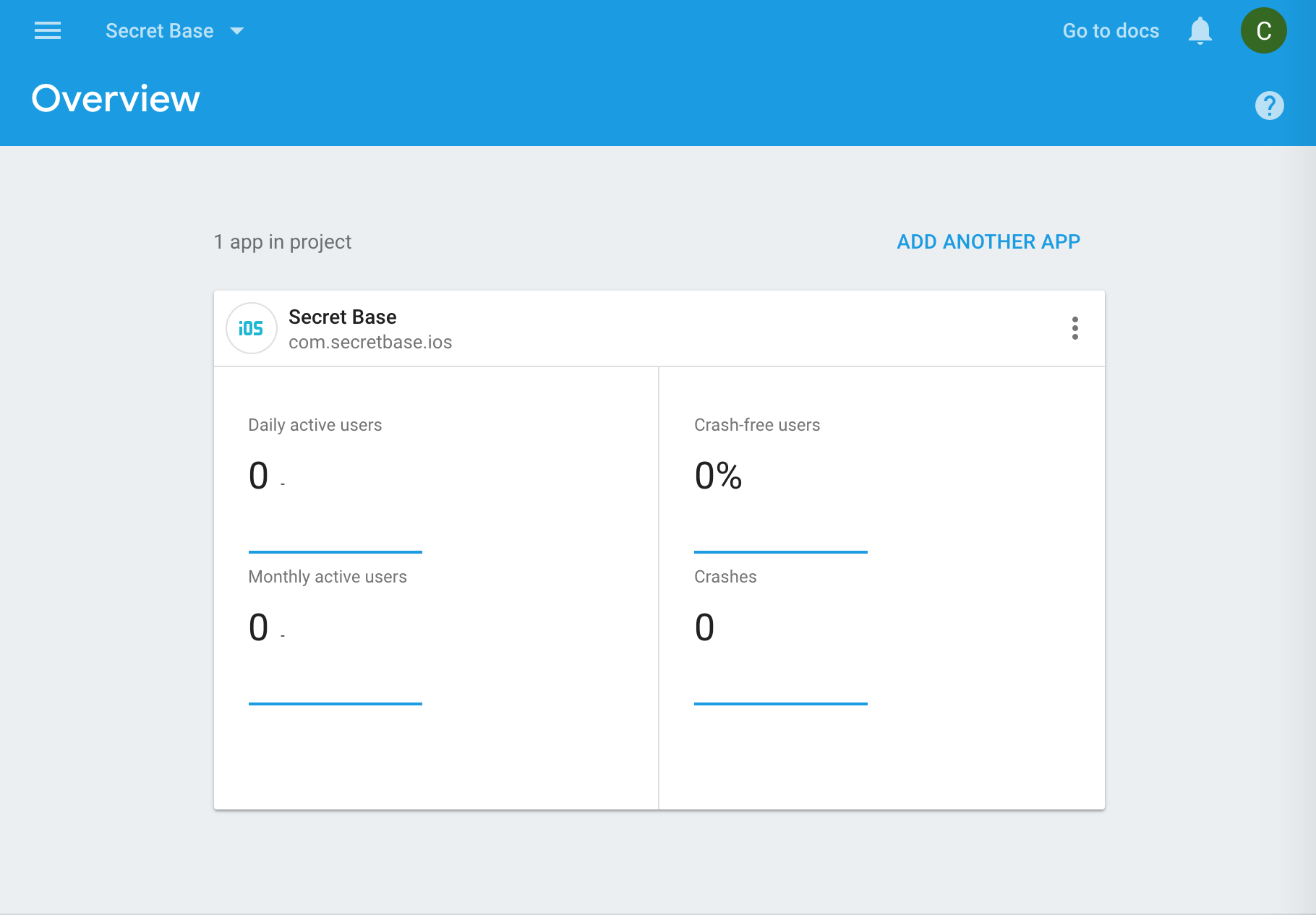

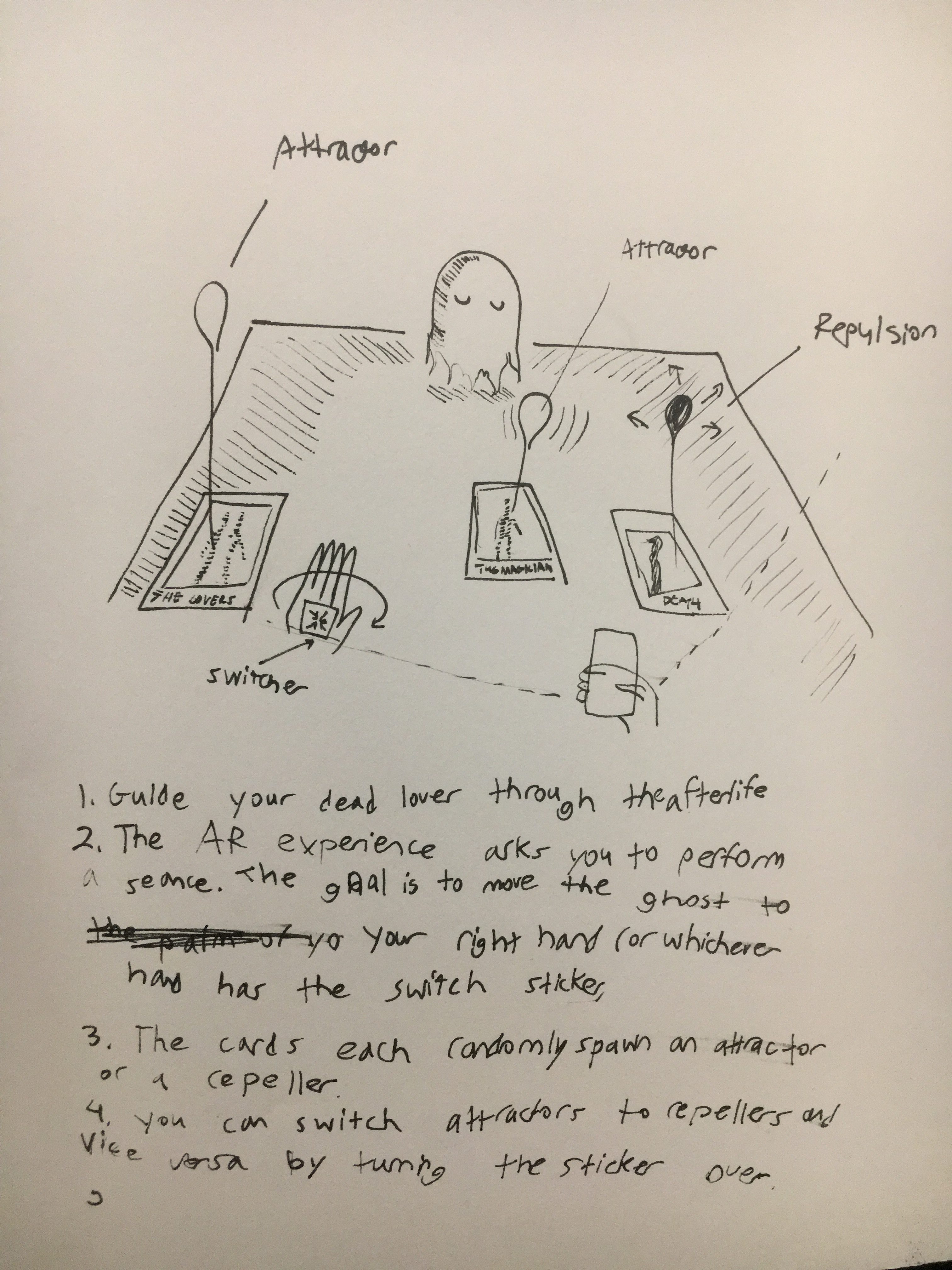

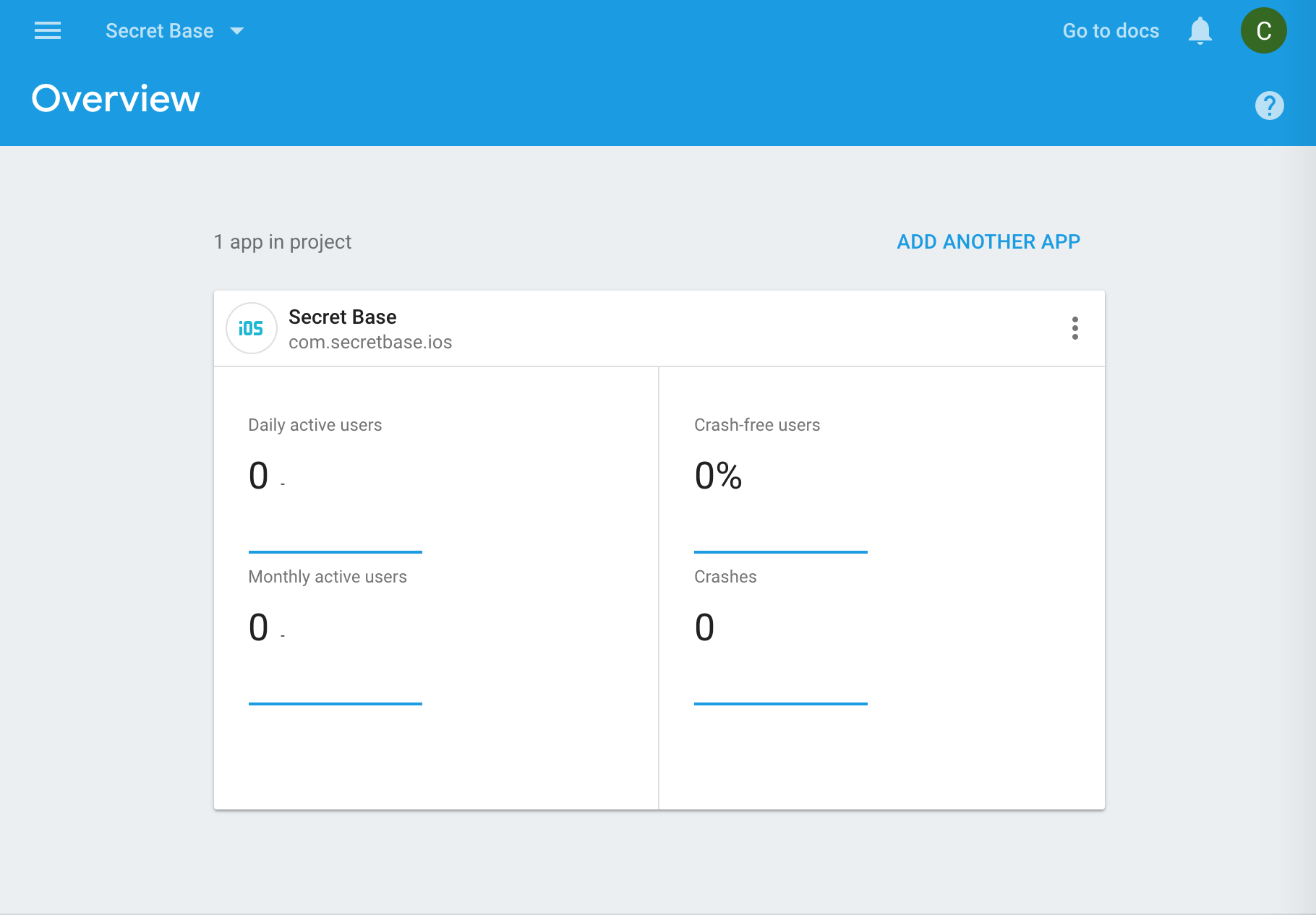

My project, a Secret Base app, will use Firebase.

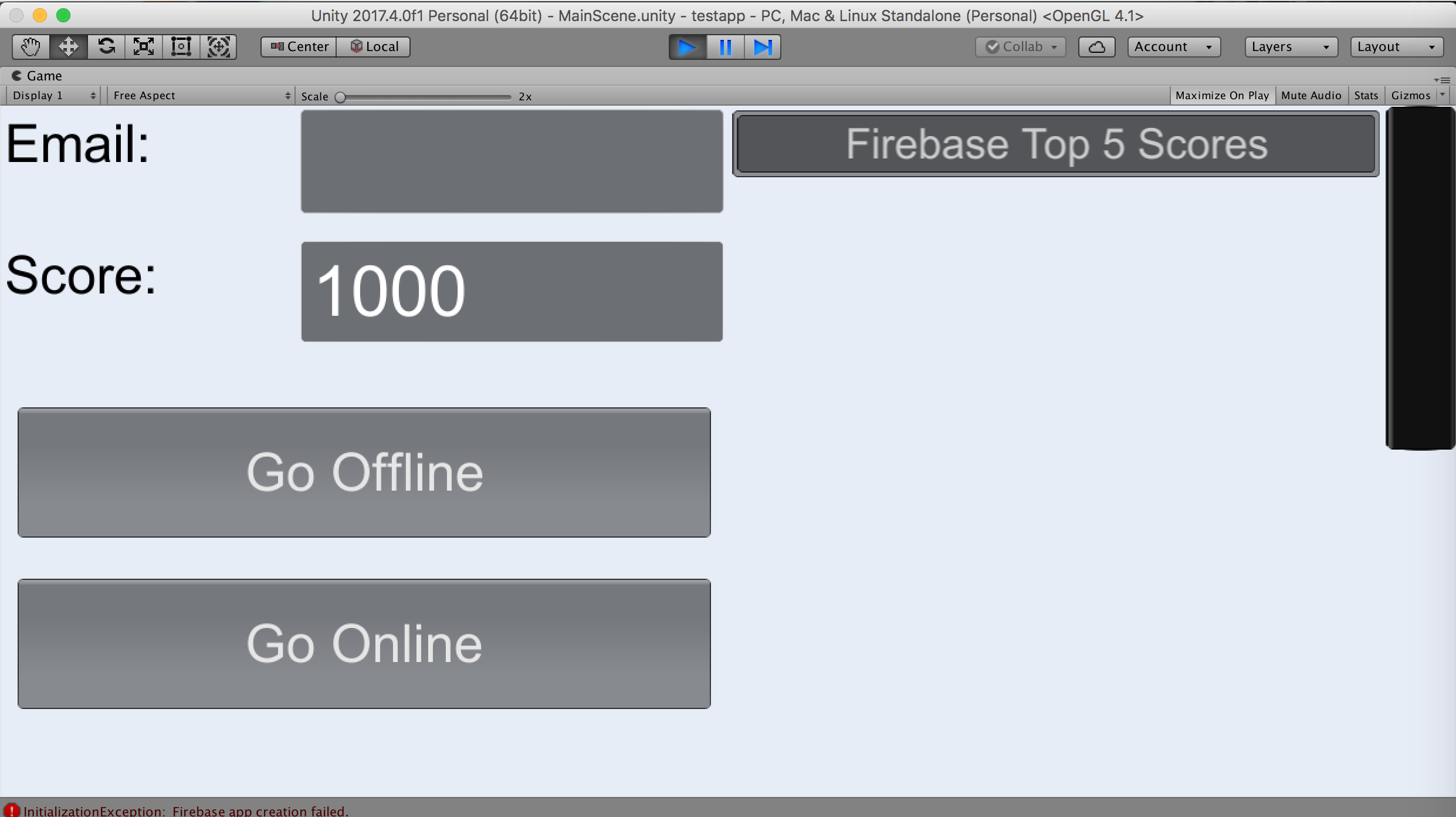

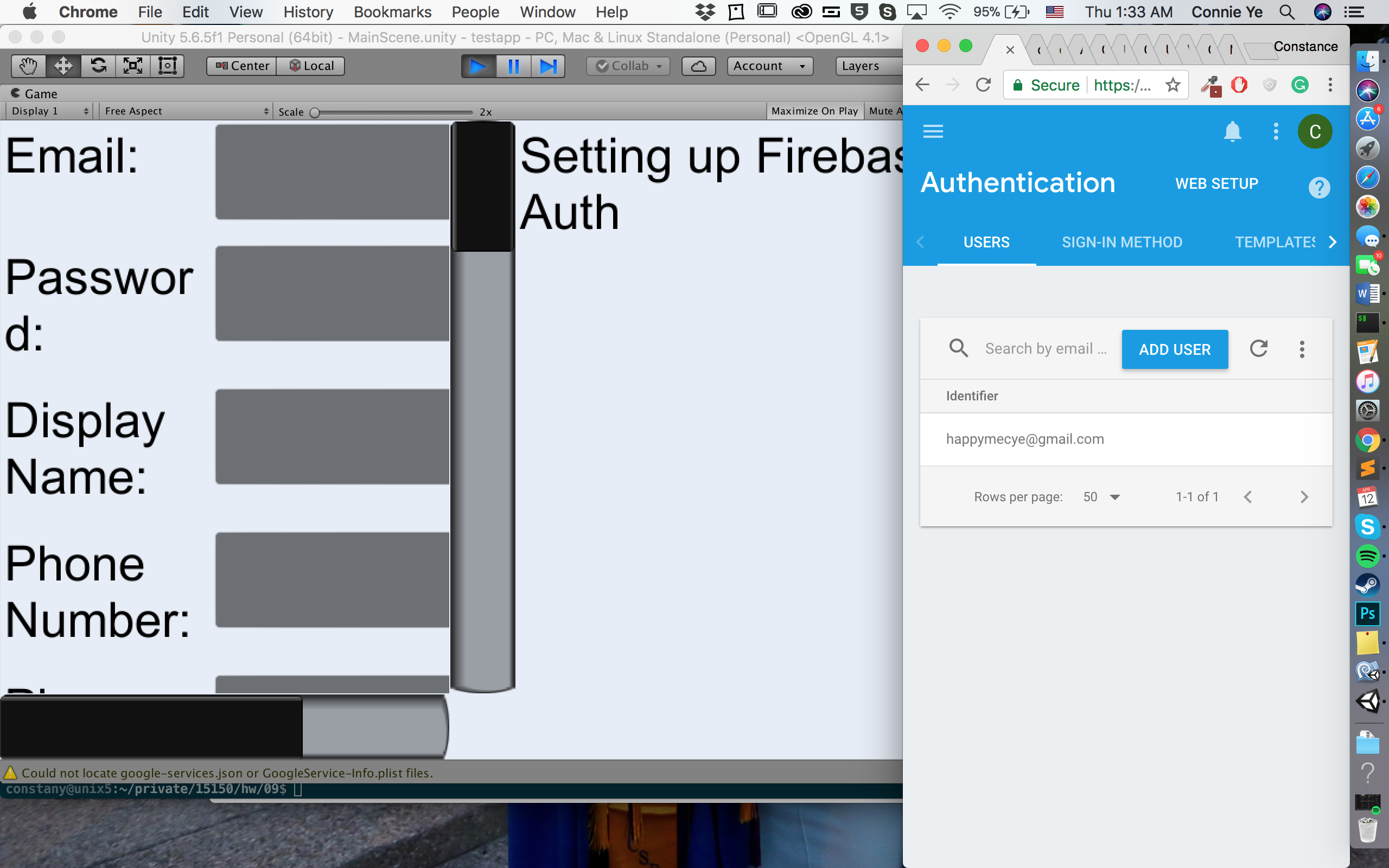

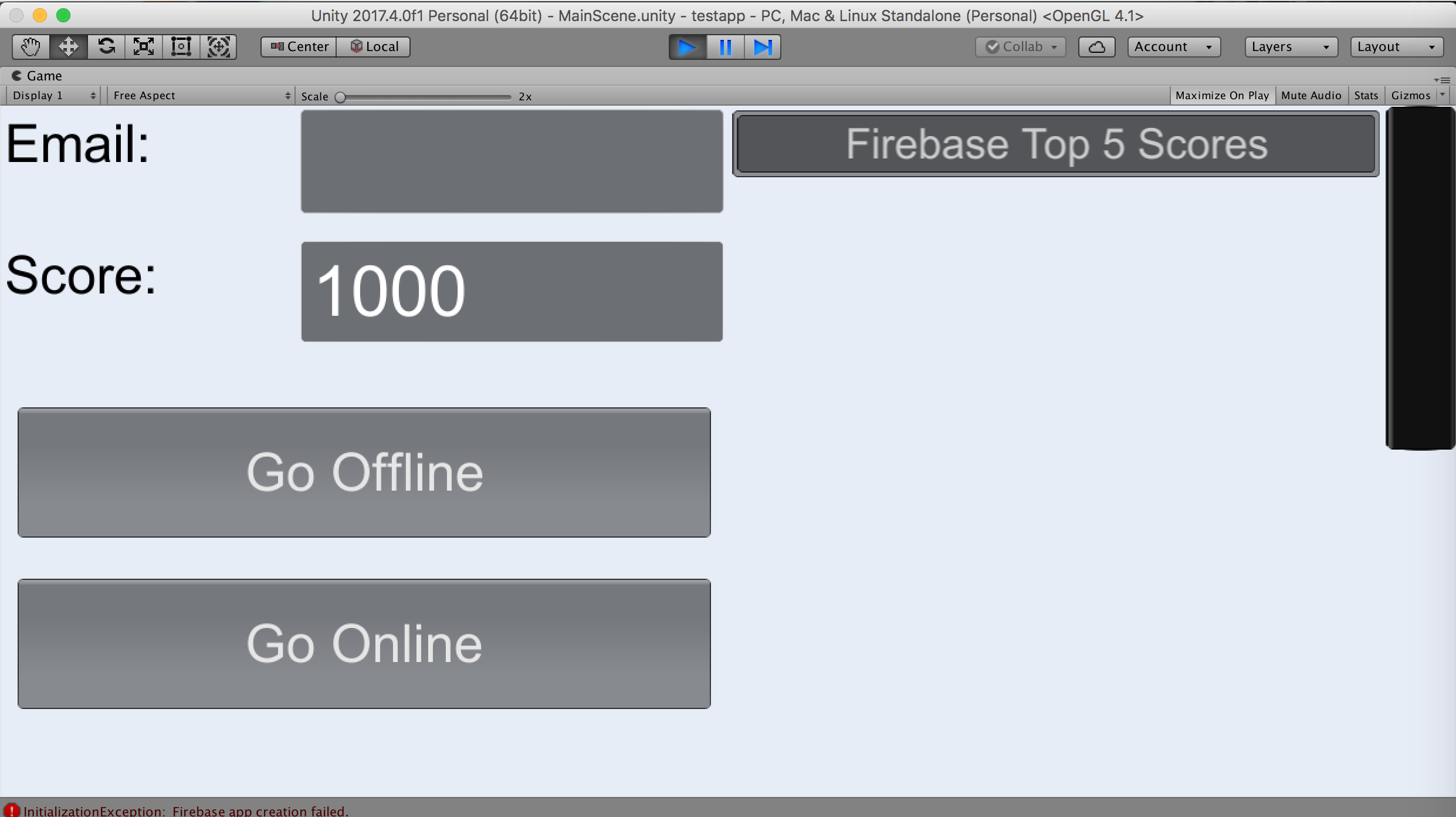

So this was my technical problem: using Firebase in Unity. I’ve used Firebase before, but after following all of the steps in their quickstart, I kept seeing this horrendous error: “InitializationException: Firebase app creation failed”. I tried multiple times, with different settings and files, but this error kept showing up!!!

I switched my version of Unity to 5.6 because I originally had 4.something and they were asking for 5.3, but that still didn’t fix it.

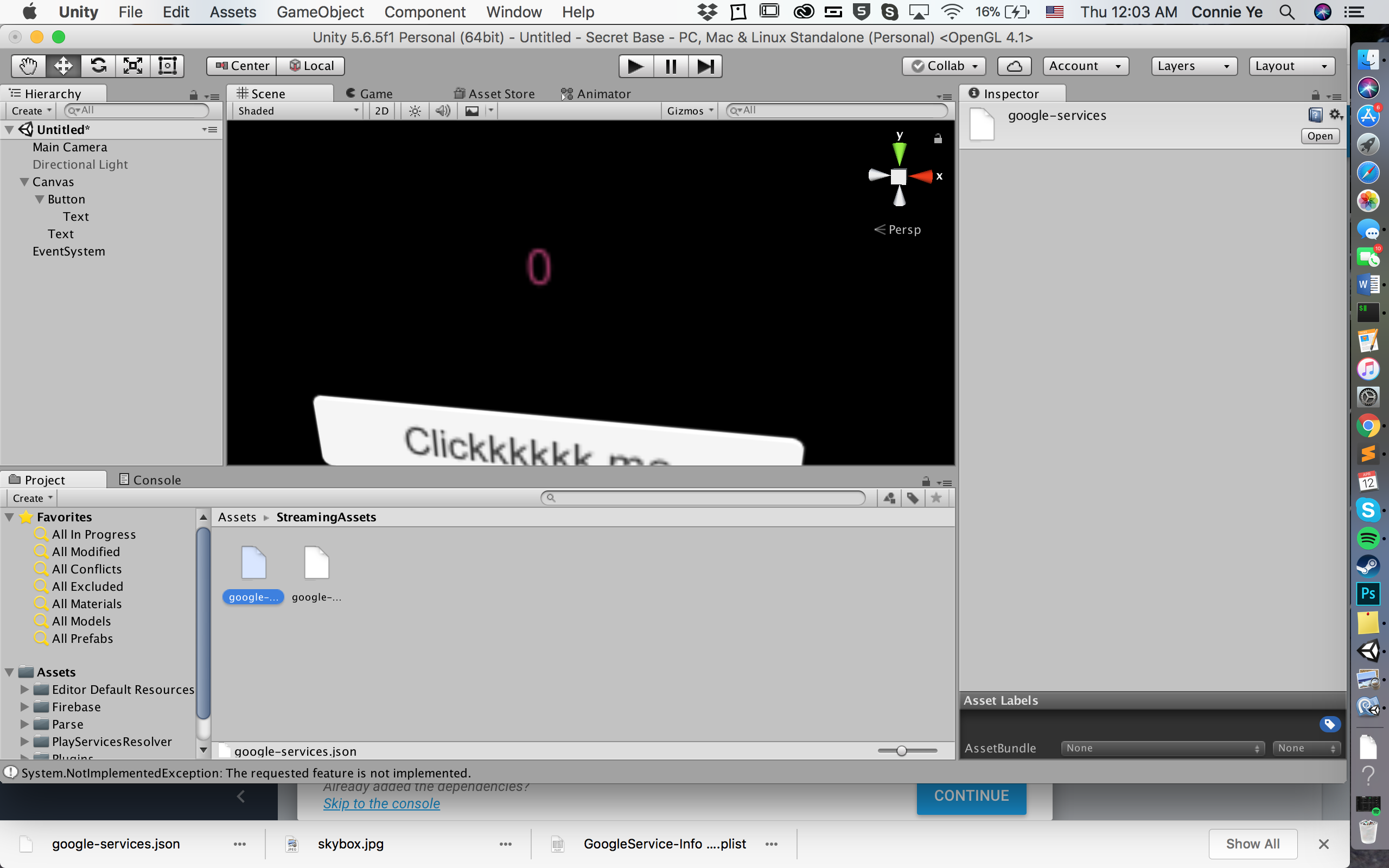

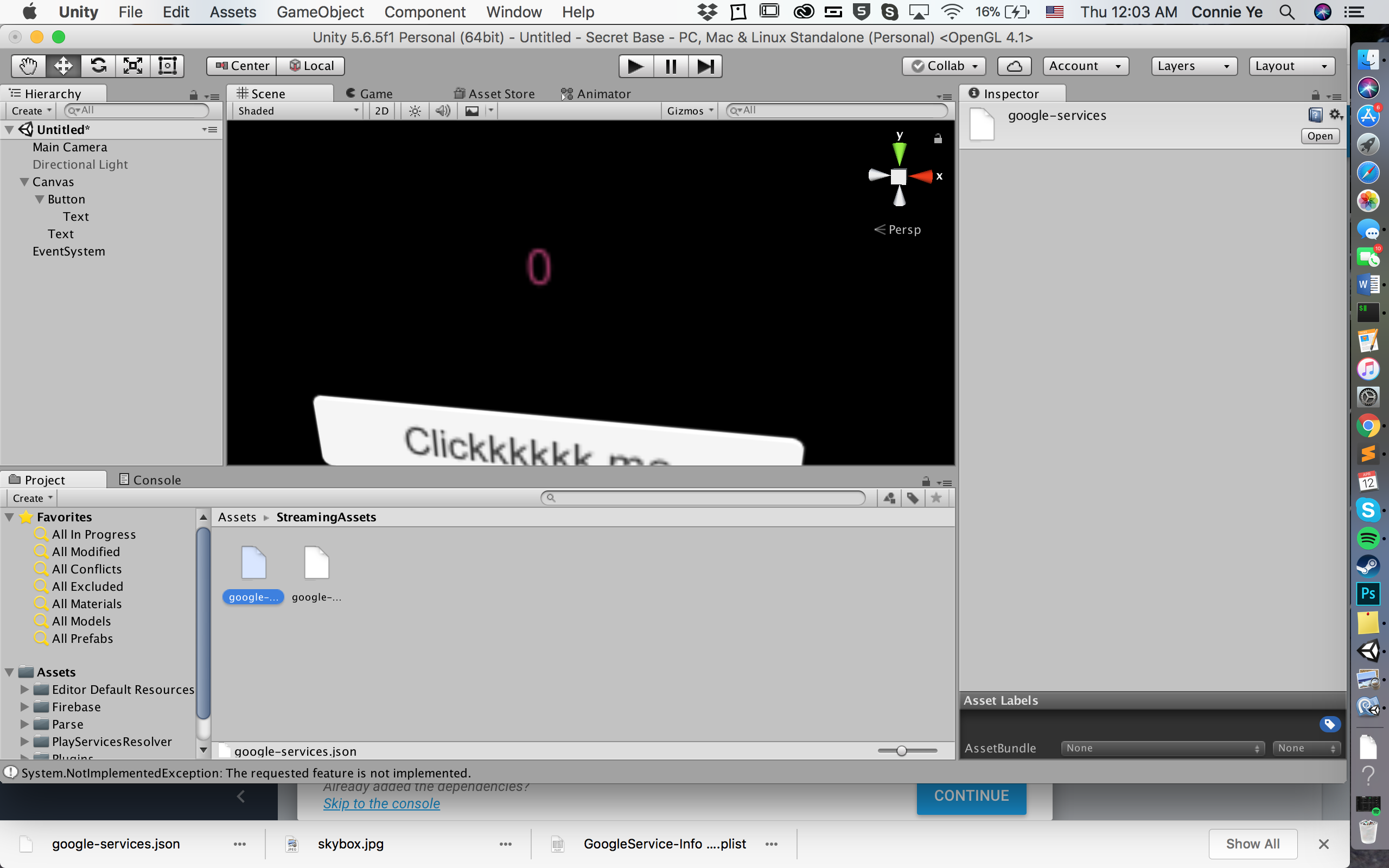

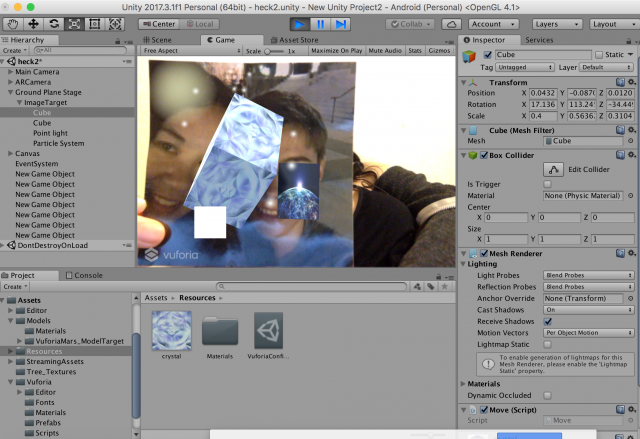

EDIT!!!! I solved the problem!!!!! Kind of! I kept getting the error because I needed to create a folder called StreamingAssets and put the Android Google files into it. None of the tutorials said I had to do that…. but it’s fine. The database works, but now I need to figure out their user authentication system.

These files changed everything?? No more errors! For now!

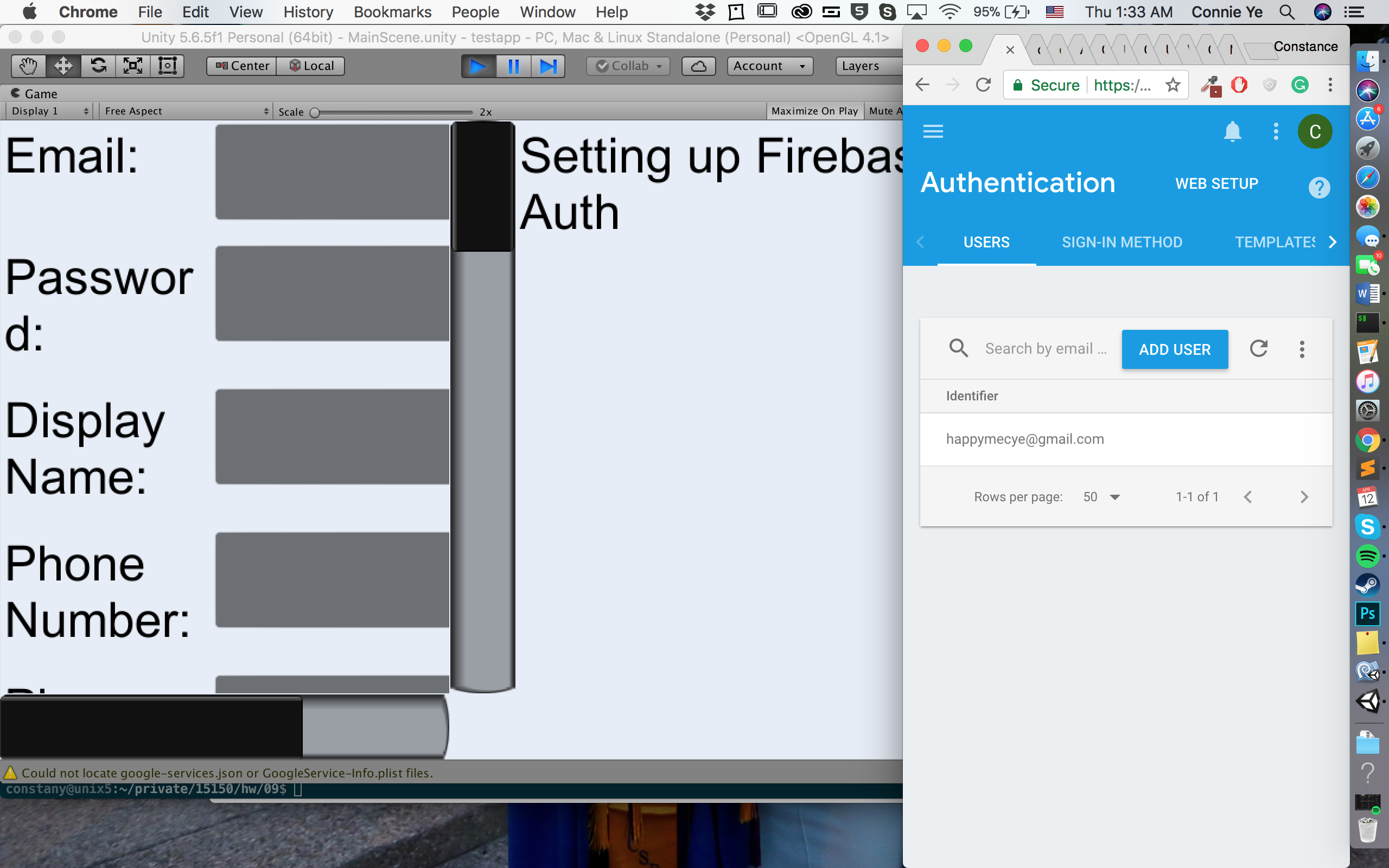
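Here is a minimal Python sketch of that folder fix, in case scripting it is handy. It assumes the "Android Google files" means the `google-services.json` config file from the Firebase console and that the folder belongs at `Assets/StreamingAssets` inside the Unity project. The function name `ensure_streaming_assets` and the `SecretBase` project path are made up for illustration.

```python
import shutil
import tempfile
from pathlib import Path


def ensure_streaming_assets(project_root, config_files):
    """Create Assets/StreamingAssets and copy the given config files into it."""
    target = Path(project_root) / "Assets" / "StreamingAssets"
    # Unity does not create this folder for you, so make it if it is missing.
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in config_files:
        src = Path(src)
        dest = target / src.name
        shutil.copy2(src, dest)
        copied.append(dest)
    return copied


if __name__ == "__main__":
    # Demo against a throwaway directory instead of a real Unity project.
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp) / "SecretBase"  # hypothetical project folder
        project.mkdir()
        config = Path(tmp) / "google-services.json"  # assumed file name
        config.write_text("{}")
        for path in ensure_streaming_assets(project, [config]):
            print(path.relative_to(project))
```

For a real project, pass the Unity project folder and the path to the downloaded config file instead of the temporary ones.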

database demo

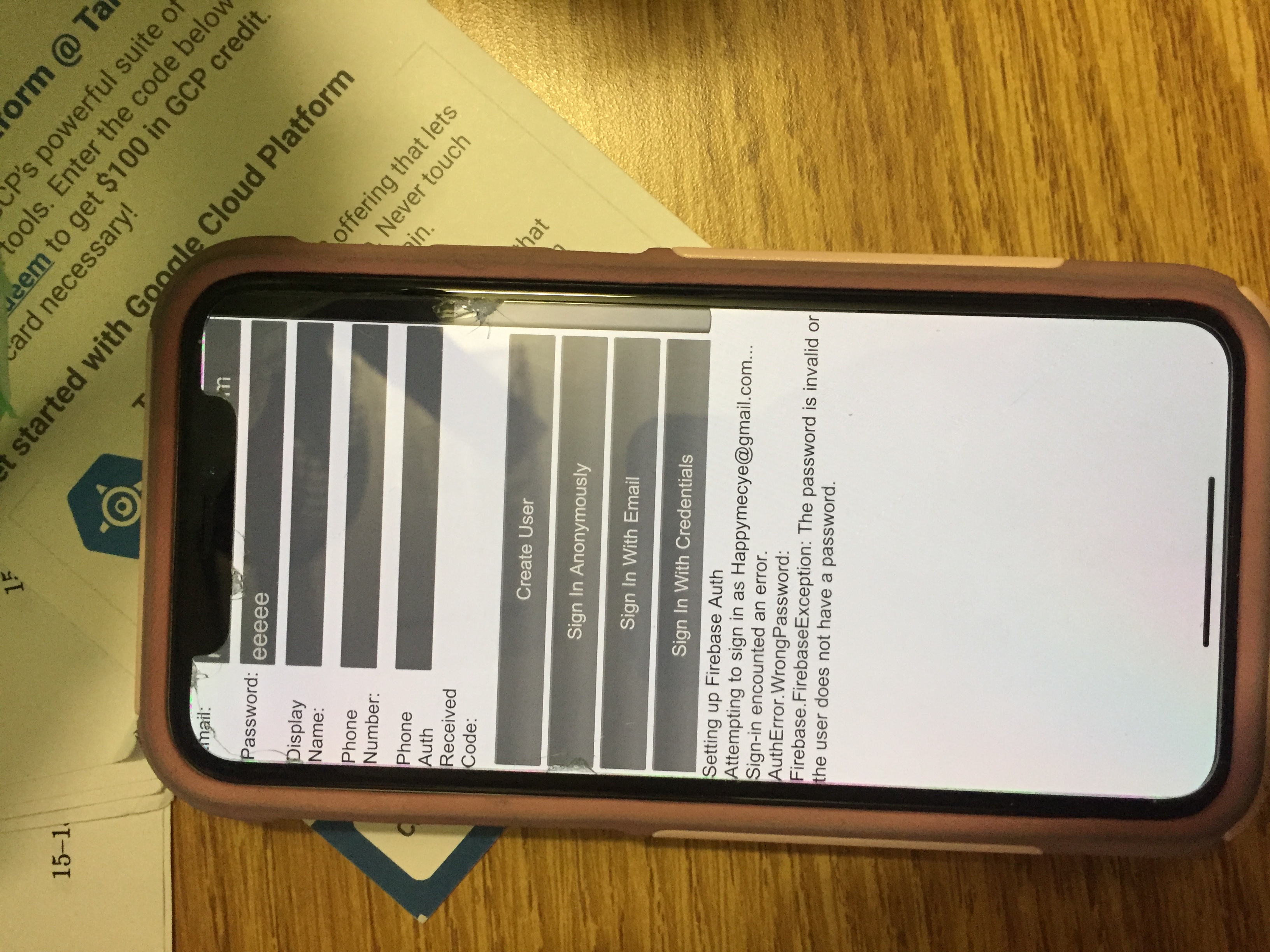

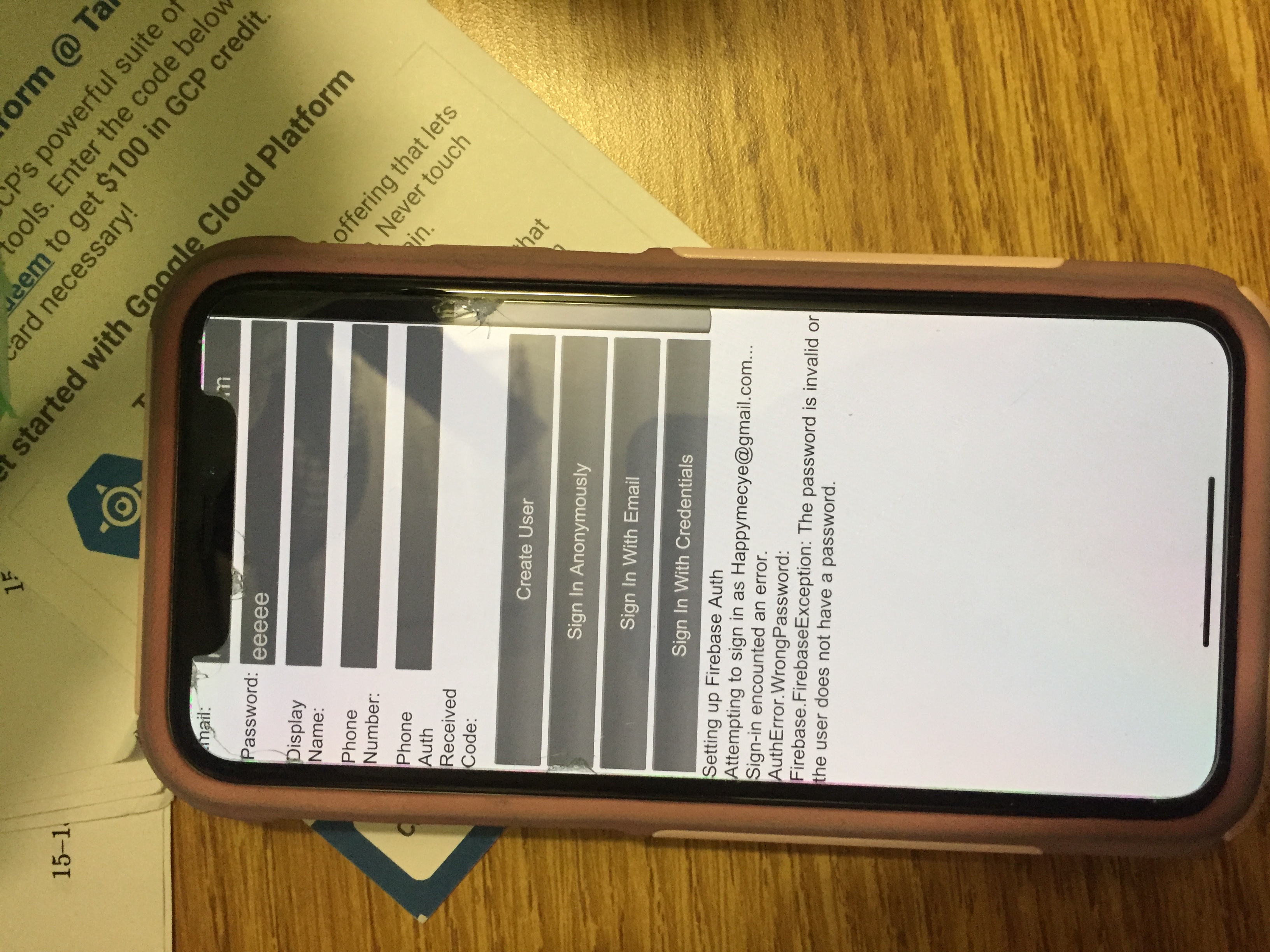

EDIT 2: I got the user auth to work! It’s just their basic quickstart example, but it’s working. It took me a few hours to get it to build for iOS because of some problem with CocoaPods :(. If anyone else wants to try Firebase on iOS: I noticed that a lot of people online had issues with the CocoaPods/Unity/Firebase combo, but I think I’ve mostly figured it out!

For me the two big things were:

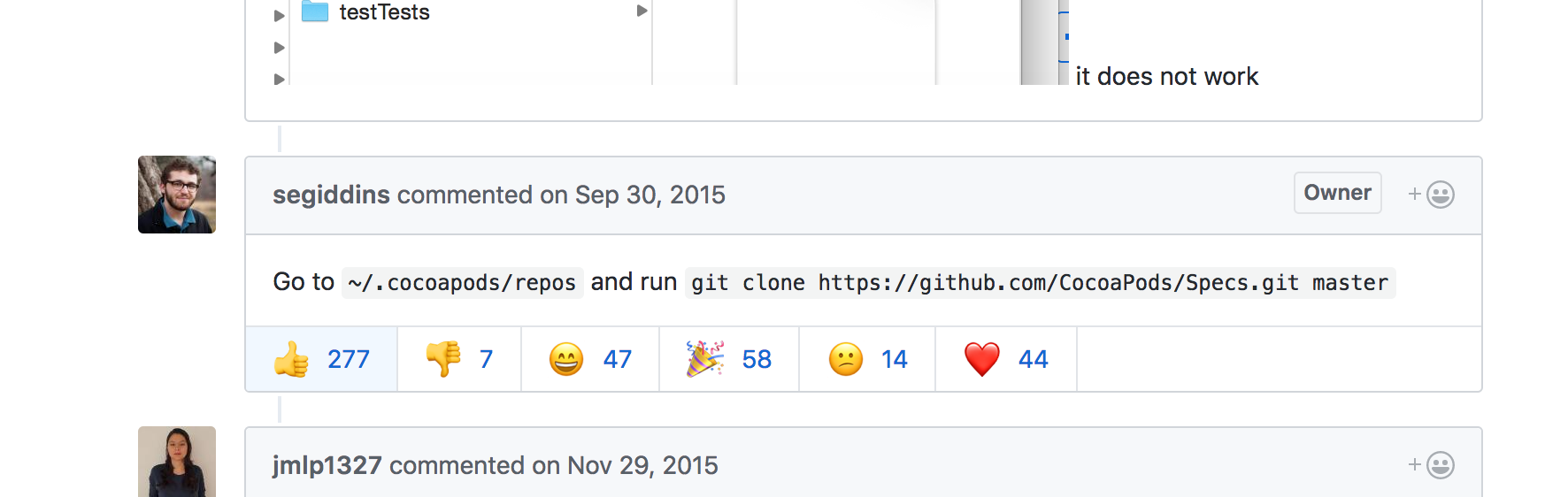

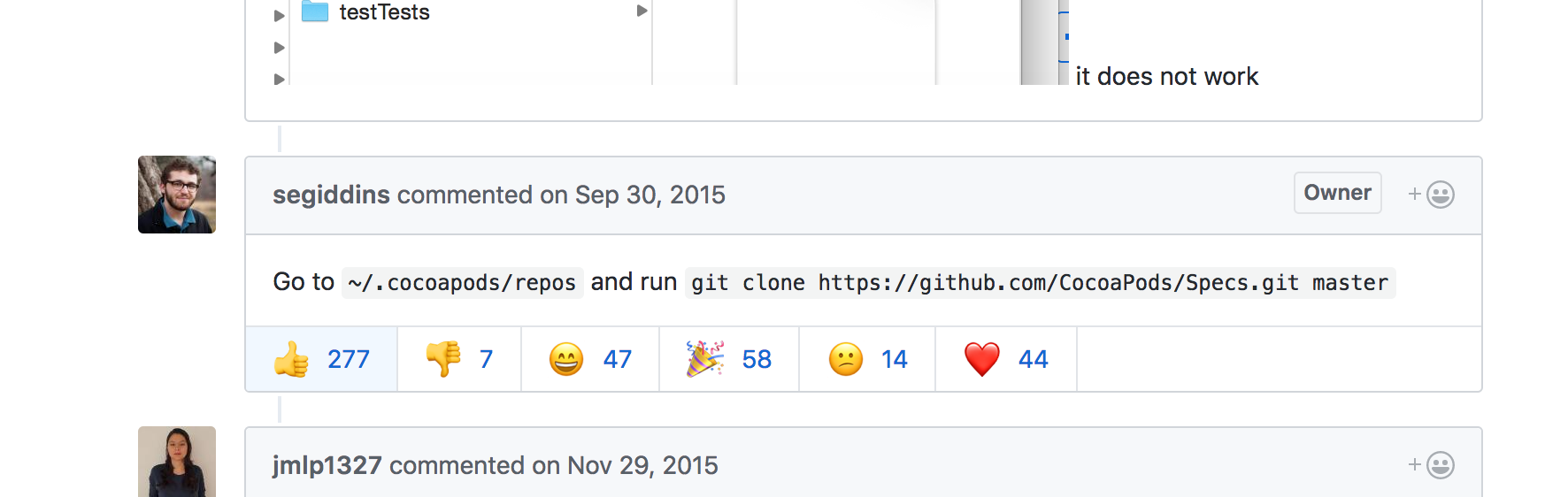

1) CocoaPods couldn’t initialize its specs repo by itself for some (????) reason, so when I tried to build in Unity, it would get stuck on the C++ part and eventually yell at me:

“iOS framework addition failed due to a Cocoapods installation failure. This will likely result in an non-functional Xcode project…

Analyzing dependencies

Setting up CocoaPods master repo

[!] Unable to add a source with url `https://github.com/CocoaPods/Specs.git` named `master-1`.

You can try adding it manually in `~/.cocoapods/repos` or via `pod repo add`.”

To fix it, you need to git clone the Specs repo into `~/.cocoapods/repos` yourself, and maybe also add the line `export LANG=en_US.UTF-8` to your `~/.profile` file.

ALSO, I think they’ve changed something in the past 3 years, because my repos folder already had a master directory and git started throwing fatal error messages at me. You need to clone into “master-1”, not “master”, which matches the name in the error message that Unity gives you.

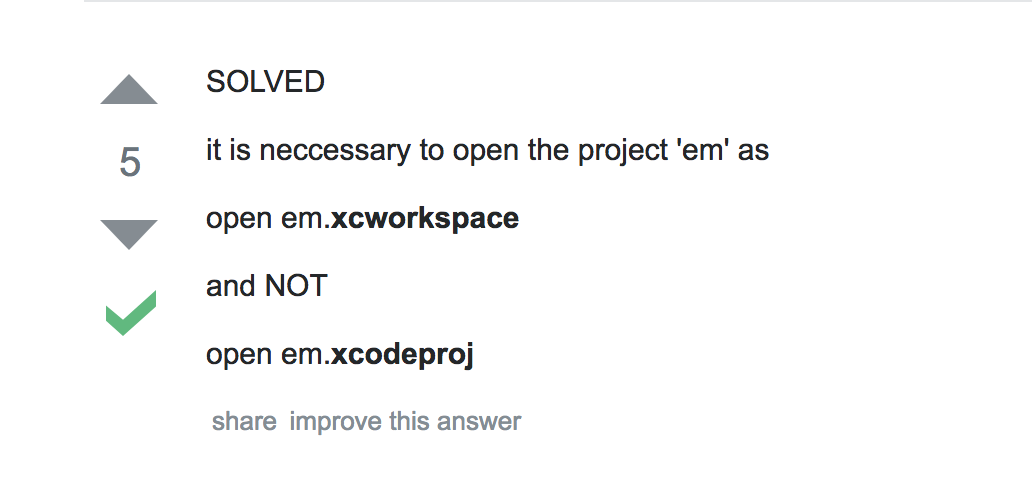

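2) ALSO, in Xcode, you have to open the workspace (the .xcworkspace file), NOT the project, for it to compile. CocoaPods generates the workspace, and it’s the one that pulls in the Pods project alongside the app.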

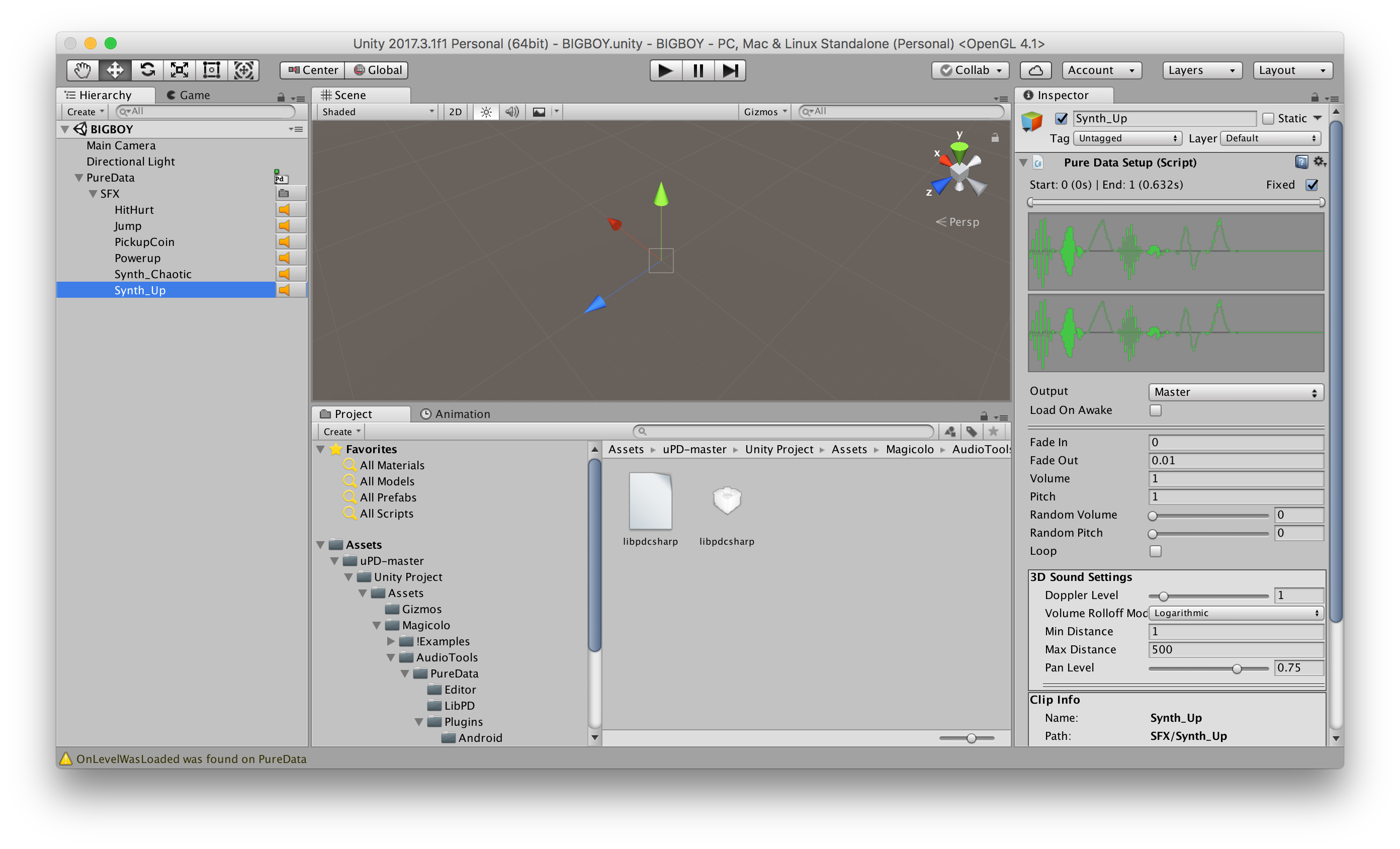
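The two fixes above can be sketched as a small Python helper. This is only an illustration under assumptions, not an official CocoaPods or Unity procedure. The repo URL, the `master-1` name, the `~/.cocoapods/repos` location and the `LANG` line come from the error message and notes above; the function names are invented here, and `Unity-iPhone` is assumed as the name Unity gives its Xcode build output.

```python
import subprocess
import tempfile
from pathlib import Path

SPECS_URL = "https://github.com/CocoaPods/Specs.git"
LANG_LINE = "export LANG=en_US.UTF-8"


def specs_clone_command(repos_dir, name="master-1"):
    """Build the git command that clones the Specs repo into repos_dir/name."""
    return ["git", "clone", SPECS_URL, str(Path(repos_dir) / name)]


def clone_specs(repos_dir, name="master-1"):
    """Run the clone unless the target directory already exists."""
    target = Path(repos_dir) / name
    if target.exists():
        return False
    Path(repos_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(specs_clone_command(repos_dir, name), check=True)
    return True


def ensure_lang_line(profile_path):
    """Append the LANG export to the profile file if it is not already there."""
    profile = Path(profile_path)
    text = profile.read_text() if profile.exists() else ""
    if LANG_LINE in text.splitlines():
        return False
    if text and not text.endswith("\n"):
        text += "\n"
    profile.write_text(text + LANG_LINE + "\n")
    return True


def xcode_target(build_dir):
    """Prefer the .xcworkspace in a build folder; fall back to the .xcodeproj."""
    build = Path(build_dir)
    workspaces = sorted(build.glob("*.xcworkspace"))
    if workspaces:
        return workspaces[0]
    projects = sorted(build.glob("*.xcodeproj"))
    return projects[0] if projects else None


if __name__ == "__main__":
    # Only print the clone command here; clone_specs() would actually run it.
    print(" ".join(specs_clone_command(Path.home() / ".cocoapods" / "repos")))
    with tempfile.TemporaryDirectory() as tmp:
        build = Path(tmp)
        (build / "Unity-iPhone.xcodeproj").mkdir()
        (build / "Unity-iPhone.xcworkspace").mkdir()
        print(xcode_target(build).name)
```

Calling `clone_specs(Path.home() / ".cocoapods" / "repos")` performs the real clone, and it skips the work if a `master-1` folder is already there.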

Pure Data: An open source visual programming language useful for sound synthesis and analysis:
