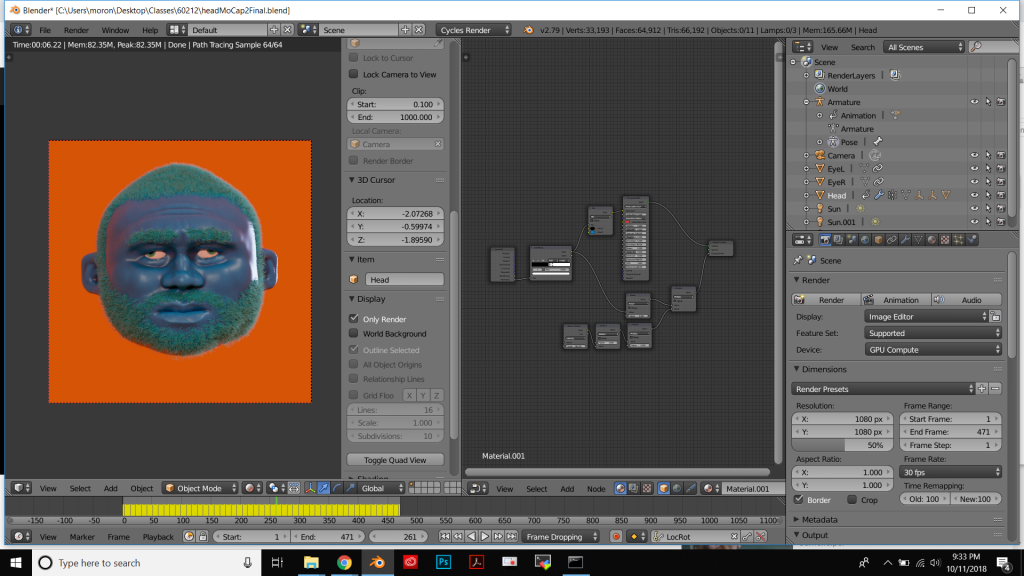

This project takes the motion of an entire human body and maps it onto a face. Hands control the eyes, the head controls the brow, the hips control the jaw, and the feet control the lips. To build it, I first recorded the motion with the Brekel software for the Kinect, which outputs a BVH file. I then imported the MoCap data into Blender, which creates an animated armature. Using bpy, the Blender Python library, I read the locations of the armature bones as input and used them to animate the location, rotation, and shape keys of the face model.
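
To make the "bone locations as input" step concrete, here is a minimal sketch of the hip-relative normalization the script performs, written as plain Python so it runs outside Blender. The function name `hip_relative_offsets` and the two-bone sample data are made up for illustration; only the arithmetic follows the script.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def hip_relative_offsets(
    current: Dict[str, Vec3],
    rest: Dict[str, Vec3],
    root: str = "Hips",
) -> Dict[str, Vec3]:
    """Return, per bone, how far it moved relative to the root bone
    compared with the rest pose.

    The root bone is a special case: its x and y come out as zero, and
    its z is replaced by its absolute vertical displacement.
    """
    cur_root = current[root]
    rest_root = rest[root]
    offsets: Dict[str, Vec3] = {}
    for name, pos in current.items():
        x, y, z = (
            (pos[i] - cur_root[i]) - (rest[name][i] - rest_root[i])
            for i in range(3)
        )
        if name == root:
            # The hips cannot move relative to themselves, so use
            # their world-space height change instead.
            z = cur_root[2] - rest_root[2]
        offsets[name] = (x, y, z)
    return offsets


# Hypothetical two-bone skeleton: the performer crouches (hips drop
# by 0.25) while raising the left hand.
rest_pose = {"Hips": (0.0, 0.0, 1.0), "LeftHand": (1.0, 0.0, 2.0)}
frame_pose = {"Hips": (0.5, 0.0, 0.75), "LeftHand": (1.5, 0.0, 2.25)}

offsets = hip_relative_offsets(frame_pose, rest_pose)
print(offsets["LeftHand"])
print(offsets["Hips"])
```

Subtracting the hip position first means that walking around the capture space does not move the eyes or lips. Only motion relative to the body does.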

While the bulk of the work for this project was writing the code that hooks the input motion up to the output motion, the most important work, the work that I think gives this project some personality, was everything else. Modelling the face, constructing the extreme poses, and of course performing the MoCap "dance" ultimately have the most impact on the piece. Overall, I'm happy with it, but it would have been nice to implement a real-time version. I would have done so if I could have found a real-time MoCap library for Python to connect to Blender, but most of the real-time MoCap tooling is in JavaScript.

Here are some images/GIFs/sketches:

Here's the code I wrote in Blender's Python interpreter:

    import bpy

    # World-space rest-pose location of each bone, used as the baseline.
    defaultBoneLocs = {
        'Spine1': (0.7207083106040955, 9.648646354675293, 4.532780170440674),
        'LeftUpLeg': (0.9253001809120178, 9.532548904418945, 2.795626401901245),
        'LeftLeg': (1.0876638889312744, 9.551751136779785, 1.751688838005066),
        'LeftHand': (1.816838026046753, 8.849924087524414, 3.9350945949554443),
        'Head': (0.7248507738113403, 9.63467788696289, 4.774600028991699),
        'Spine': (0.7061706185340881, 9.661049842834473, 3.7947590351104736),
        'RightArm': (0.17774519324302673, 9.660733222961426, 4.388589382171631),
        'LeftArm': (1.259391188621521, 9.625649452209473, 4.377967834472656),
        'RightUpLeg': (0.42640626430511475, 9.538918495178223, 2.812265634536743),
        'LeftForeArm': (1.7386583089828491, 9.596687316894531, 3.683629274368286),
        'RightShoulder': (0.6663911938667297, 9.649639129638672, 4.518487453460693),
        'Hips': (0.6839468479156494, 9.647804260253906, 2.792393445968628),
        'LeftShoulder': (0.7744865417480469, 9.646313667297363, 4.5172953605651855),
        'LeftFoot': (1.2495332956314087, 9.810073852539062, 0.5447696447372437),
        'RightForeArm': (-0.3234933316707611, 9.588683128356934, 3.855139970779419),
        'RightFoot': (0.08095724135637283, 9.851096153259277, 0.5348520874977112),
        'RightLeg': (0.23801341652870178, 9.571942329406738, 1.788295030593872),
        'RightHand': (-0.3675239682197571, 8.814794540405273, 4.040530681610107),
        'Neck': (0.7212125062942505, 9.647202491760254, 4.556796550750732),
    }

    boneLocs = {}
    normBoneLocs = {}

    # Sample every third frame of the capture.
    for frame in range(30,1491,3):
        if frame%90 == 0:
            print(frame/1491*100)  # rough progress readout

        bpy.context.scene.frame_set(frame)

        # Read the world-space location of every pose bone.
        for arm in bpy.data.armatures[:]:
            obj = bpy.data.objects[arm.name]
            for poseBone in obj.pose.bones[:]:
                # Blender 2.7x syntax; Blender 2.80+ uses `@` for matrix multiplication.
                finalMatrix = obj.matrix_world * poseBone.matrix
                global_location = (finalMatrix[0][3],finalMatrix[1][3],finalMatrix[2][3])
                boneLocs[poseBone.name] = global_location

        # Express each bone as its offset from the hips, relative to the rest pose.
        for key in boneLocs:
            x = (boneLocs[key][0] - boneLocs['Hips'][0]) - (defaultBoneLocs[key][0] - defaultBoneLocs['Hips'][0])
            y = (boneLocs[key][1] - boneLocs['Hips'][1]) - (defaultBoneLocs[key][1] - defaultBoneLocs['Hips'][1])
            z = (boneLocs[key][2] - boneLocs['Hips'][2]) - (defaultBoneLocs[key][2] - defaultBoneLocs['Hips'][2])
            if key == 'Hips':
                # The hips use their absolute height change instead.
                z = boneLocs[key][2] - defaultBoneLocs[key][2]
            normBoneLocs[key] = (x,y,z)

        # Hips (vertical) drive the jaw.
        val = -0.6*(normBoneLocs['Hips'][2])
        bpy.data.meshes['Head'].shape_keys.key_blocks['JawOpen'].value = val
        bpy.data.meshes['Head'].shape_keys.key_blocks['JawOpen'].keyframe_insert("value")

        val = 0.6*(normBoneLocs['Hips'][2])
        bpy.data.meshes['Head'].shape_keys.key_blocks['JawUp'].value = val
        bpy.data.meshes['Head'].shape_keys.key_blocks['JawUp'].keyframe_insert("value")

        # Feet (sideways) drive the corners of the mouth.
        val = (normBoneLocs['LeftFoot'][0])
        bpy.data.meshes['Head'].shape_keys.key_blocks['MouthLOut'].value = val
        bpy.data.meshes['Head'].shape_keys.key_blocks['MouthLOut'].keyframe_insert("value")

        val = -(normBoneLocs['LeftFoot'][0])
        bpy.data.meshes['Head'].shape_keys.key_blocks['MouthLIn'].value = val
        bpy.data.meshes['Head'].shape_keys.key_blocks['MouthLIn'].keyframe_insert("value")

        val = -(normBoneLocs['RightFoot'][0])
        bpy.data.meshes['Head'].shape_keys.key_blocks['MouthROut'].value = val
        bpy.data.meshes['Head'].shape_keys.key_blocks['MouthROut'].keyframe_insert("value")

        val = (normBoneLocs['RightFoot'][0])
        bpy.data.meshes['Head'].shape_keys.key_blocks['MouthRIn'].value = val
        bpy.data.meshes['Head'].shape_keys.key_blocks['MouthRIn'].keyframe_insert("value")

        # Head (vertical) drives the brow.
        val = -(normBoneLocs['Head'][2])
        bpy.data.meshes['Head'].shape_keys.key_blocks['BrowDown'].value = val
        bpy.data.meshes['Head'].shape_keys.key_blocks['BrowDown'].keyframe_insert("value")

        val = (normBoneLocs['Head'][2])
        bpy.data.meshes['Head'].shape_keys.key_blocks['BrowUp'].value = val
        bpy.data.meshes['Head'].shape_keys.key_blocks['BrowUp'].keyframe_insert("value")

        # Hands drive the eye-tracking targets.
        bpy.data.objects['EyeRTrack'].location.z = 0.294833 + normBoneLocs['RightHand'][2]
        bpy.data.objects['EyeRTrack'].location.x = -0.314635 + normBoneLocs['RightHand'][0]
        bpy.data.objects["EyeRTrack"].keyframe_insert(data_path='location')

        bpy.data.objects['EyeLTrack'].location.z = 0.294833 + normBoneLocs['LeftHand'][2]
        bpy.data.objects['EyeLTrack'].location.x = 0.314635 + normBoneLocs['LeftHand'][0]
        bpy.data.objects["EyeLTrack"].keyframe_insert(data_path='location')

        # The spine's rotation, offset and damped, tilts the whole head.
        bpy.data.objects['Head'].rotation_euler = bpy.data.objects['Armature'].pose.bones['Spine'].matrix.to_euler()
        bpy.data.objects["Head"].rotation_euler.x -= 1.7
        bpy.data.objects["Head"].rotation_euler.x *= 0.3
        bpy.data.objects["Head"].keyframe_insert(data_path='rotation_euler')
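
The script drives shape keys in opposing pairs (`JawOpen`/`JawUp`, `BrowUp`/`BrowDown`, and so on) by assigning the same signed value with opposite signs. Here is a small sketch of that idea in plain Python. The helper name `split_signed` is hypothetical, and the explicit clamp to the 0 to 1 range is my assumption: the original assigns the raw value and presumably relies on Blender limiting each shape key to its slider range.

```python
from typing import Tuple


def split_signed(
    value: float,
    gain: float = 1.0,
    lo: float = 0.0,
    hi: float = 1.0,
) -> Tuple[float, float]:
    """Split one signed input into weights for two opposing shape keys.

    Returns (positive_weight, negative_weight). At most one of the two
    is non-zero, so the opposing poses never fight each other.
    """
    scaled = gain * value

    def clamp(v: float) -> float:
        # Assumed stand-in for the shape key's slider range.
        return max(lo, min(hi, v))

    return clamp(scaled), clamp(-scaled)


# Hips dropped by 0.5 units: the jaw opens and "JawUp" stays at zero.
jaw_up, jaw_open = split_signed(-0.5, gain=0.6)
print(jaw_up, jaw_open)

# Large sideways foot motion saturates at the top of the range.
mouth_out, mouth_in = split_signed(2.0)
print(mouth_out, mouth_in)
```

In Blender, each returned weight would be assigned to the matching `key_blocks[...]` entry's `value` and keyframed, as in the script above.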

Also, here's a bonus GIF I made using a walk cycle from CMU's Motion Capture Database: