A day in the life of Me, Myself, and I

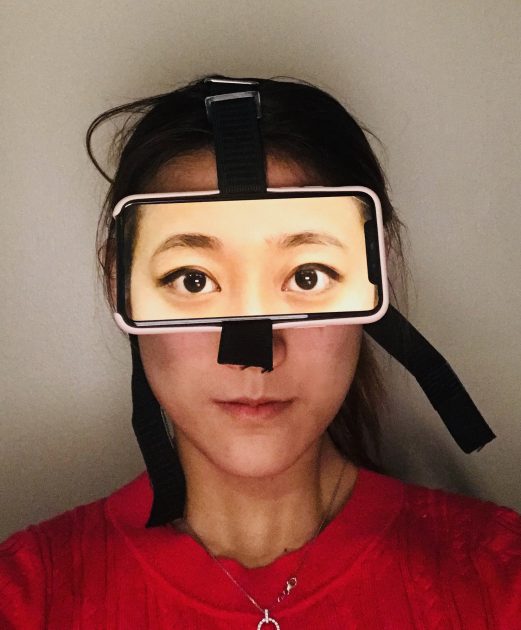

Concept: I wear a mask that replaces everyone’s face with my own (pass-through VR in a modified Google Cardboard).

One personal driver for this assignment: yes, the prompt is a “digital mask” that we perform with, but how do we take it out of the screen? I wanted to push myself to take advantage of the real-time aspect of this medium (face-tracking software), ideally doing something that couldn’t be done better through post-processing in video software, even though the deliverable is a video performance.

My personal critique, though, is that I got so caught up in these thoughts, and in how to make the experience of performing compelling, that I neglected how it would come across as a performance to others. The merit and allure of the end idea (an egocentric world where you see yourself in everyone) get lost when the viewer is watching someone else immersed in their own “bubble”. What the performer sees (everyone wearing their face) is appealing to them personally, but visually uninteresting to anyone else.

Where to begin?

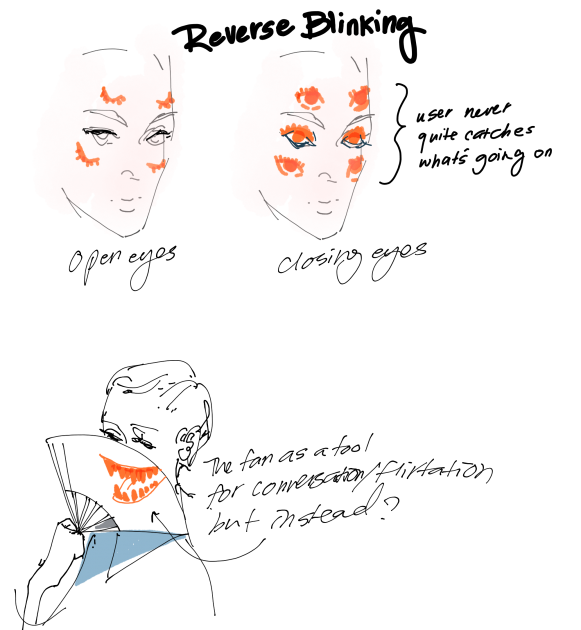

Brain dump into the sketchbook. Go down the wonderful rabbit hole of links Golan provides, and start assessing the available tools and techniques.

Some light-projection ideas (light projection has an allure in the quality of its light that isn’t easily recreated in post-processing. Projecting a face made of light onto a face has a surreal quality, because it blurs the digital with the physical.)

And in case a performance doesn’t have time to be coordinated:

Pick an idea —

I’ll spend a day wearing a Google Cardboard with my phone running pass-through VR, masking everyone I see by replacing their face with my own. A world of Me, Myself, and I. Talk about mirror neurons!

Some of the resources gathered:

openFrameworks ARKit add-on, along with a sample project using ARKit face tracking (which doesn’t work, or at least I couldn’t figure out a null-vertex issue)

openFrameworks iOS face tracking without ARKit, an add-on by Kyle McDonald

^ The above needs an alternative approach to OpenCV: the openFrameworks CV add-on

OK, so ideally it’d be nice to track multiple faces at once, and Tatyana found a beautiful SDK that does just that.

CreateJS is a nice suite of libraries that handles animation, canvas rendering, and preloading in HTML5.

Start —

— with a to-do list.

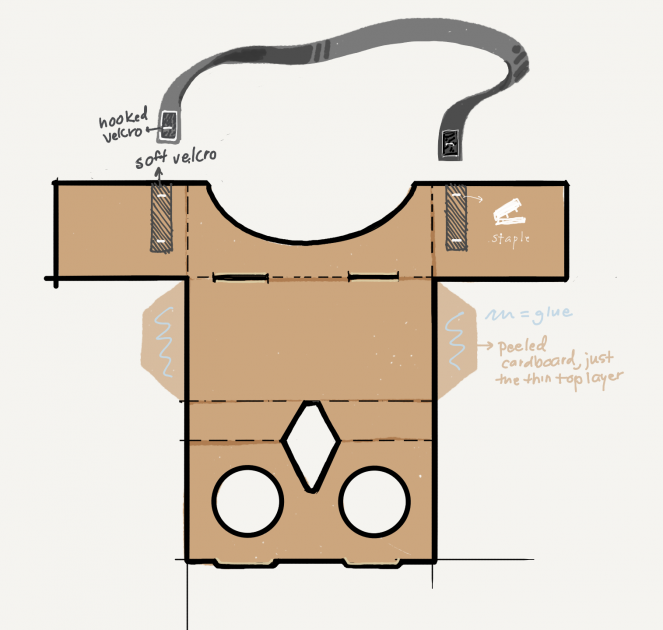

- Make or get a Cardboard headset to wear

- Brainstorm technical execution

- Audit software/libraries to pick out how to execute

- Get cracking:

- Face detection on the REAR-facing camera (not the TrueDepth front-facing one), ideally in iOS

- Get landmark detection for rigging the mask to features

- Ideally, get estimated lighting… and see if the TrueDepth front-facing face mask can be estimated from the rear camera

- Either just replace the 2D texture of the estimated 3D mask from #3 with my face, OR… shit… if only #2 is achieved… make a textured 3D model of my face and try to anchor it in AR to the estimated pose of the detected face

- Make sure to render in the Cardboard’s split-screen stereo view (figure out how to do that)
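The last item mostly comes down to drawing the same camera frame twice, once per eye, side by side. Here is a minimal sketch of just the viewport math, assuming a landscape canvas split down the middle and ignoring the lens-distortion correction a real Cardboard renderer also applies; `stereoViewports` and `centerCrop` are hypothetical helper names, not part of any SDK:

```typescript
// A rectangle in pixels.
interface Viewport {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Split a landscape canvas into left-eye and right-eye halves.
// Hypothetical helper: no lens-distortion correction is applied.
function stereoViewports(canvasWidth: number, canvasHeight: number): [Viewport, Viewport] {
  const half = Math.floor(canvasWidth / 2);
  const left: Viewport = { x: 0, y: 0, width: half, height: canvasHeight };
  // The right half absorbs the extra pixel when the width is odd.
  const right: Viewport = { x: half, y: 0, width: canvasWidth - half, height: canvasHeight };
  return [left, right];
}

// Pick the region of the source frame to show in one eye so the
// frame keeps its aspect ratio (crop around the centre, no stretching).
function centerCrop(srcW: number, srcH: number, dst: Viewport): Viewport {
  const dstAspect = dst.width / dst.height;
  const srcAspect = srcW / srcH;
  if (srcAspect > dstAspect) {
    // Source is wider than the eye viewport: trim the sides.
    const w = srcH * dstAspect;
    return { x: (srcW - w) / 2, y: 0, width: w, height: srcH };
  }
  // Source is taller than the eye viewport: trim top and bottom.
  const h = srcW / dstAspect;
  return { x: 0, y: (srcH - h) / 2, width: srcW, height: h };
}
```

With a 2D canvas, each eye can then be drawn with the nine-argument `drawImage(video, sx, sy, sw, sh, dx, dy, dw, dh)`, using the crop as the source rectangle and the viewport as the destination. Pass-through from a single phone camera is monoscopic anyway, so both eyes get the same image.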

Re-evaluate life, because the to-do list was wrong and Murphy’s law strikes again —

Lol. Maybe. Hopefully it doesn’t come to this part. But I have a bad feeling… that I’ll have to shift back to JavaScript.

Converting a JPG to base64 and using it as a texture… but only the eyebrows came in… lol… how to do life

OK, so it turns out my conversion to base64 was off. I should just stick with an online converter, like so:
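For reference, the conversion itself is small enough to do in code. This is a minimal sketch of standard base64 (RFC 4648 alphabet, `=` padding) plus the `data:` URI prefix a browser expects for an image source. `toBase64` and `toDataUri` are hypothetical names, and this is not a diagnosis of what went wrong in my original conversion. In a browser, `FileReader.readAsDataURL` or `canvas.toDataURL` will also produce the data URI for you.

```typescript
const ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Encode raw bytes as standard base64: every 3 bytes become 4 characters.
function toBase64(bytes: Uint8Array): string {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const b0 = bytes[i];
    const b1 = i + 1 < bytes.length ? bytes[i + 1] : 0;
    const b2 = i + 2 < bytes.length ? bytes[i + 2] : 0;
    const triple = (b0 << 16) | (b1 << 8) | b2;
    out += ALPHABET[(triple >> 18) & 63];
    out += ALPHABET[(triple >> 12) & 63];
    // A final group of only 1 or 2 bytes is padded with "=".
    out += i + 1 < bytes.length ? ALPHABET[(triple >> 6) & 63] : "=";
    out += i + 2 < bytes.length ? ALPHABET[triple & 63] : "=";
  }
  return out;
}

// Wrap the encoded bytes in a data URI usable as an <img> or texture source.
function toDataUri(bytes: Uint8Array, mimeType: string): string {
  return `data:${mimeType};base64,${toBase64(bytes)}`;
}
```

A quick sanity check: every JPEG starts with the bytes `FF D8 FF`, which encode to `/9j/`. If a "base64 JPEG" doesn't start with `/9j/`, the conversion went wrong somewhere.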

Still, this method of UV-wrapping a 2D texture isn’t particularly satisfying, despite lots of trial and error with incremental tweaks. The craft is still low enough that it distracts from the experience, so either I get it high enough that it doesn’t detract, or I do something else entirely…
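To make "UV-wrapping" concrete: each landmark detected on a live face becomes a mesh vertex, and that vertex's texture coordinate is where the same landmark sits in a photo of my face. Here is a minimal sketch, assuming both detectors report landmarks in the same order, in pixel coordinates with a top-left origin. Whether `v` needs flipping depends on the renderer (for example, whether it flips textures on upload), so that is a parameter. `landmarksToUVs` is a hypothetical name.

```typescript
interface Point {
  x: number;
  y: number;
}

interface UV {
  u: number;
  v: number;
}

// Map landmarks found in the reference photo (pixels, top-left origin)
// to texture coordinates in [0, 1]. Index i of the result pairs with
// landmark i of the live face mesh, so the landmark order must match.
function landmarksToUVs(
  photoLandmarks: Point[],
  photoWidth: number,
  photoHeight: number,
  flipV: boolean,
): UV[] {
  return photoLandmarks.map(({ x, y }) => {
    const u = x / photoWidth;
    const v = y / photoHeight;
    // Some renderers put v = 0 at the bottom of the texture.
    return { u, v: flipV ? 1 - v : v };
  });
}
```

The UVs are computed once from the photo. Only the vertex positions change from frame to frame, which is also why any jitter in the live landmarks shows up directly as a wobbling mask.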

Two roads diverge…

Option 1: Bring the craft up higher…how?

- Sample the video feed for ‘brightness’, namely the whites of the eyes in a detected face. Use that as a normalizing point to adapt the brightness of the image texture currently being overlaid

- Replace entire face with a 3D model of my own face? (where does one go to get a quick and dirty but riggable model of one’s face?)

- … set proportions? Maybe keep it from being quite so adaptable? The fluctuations very easily break the illusion

- Make a smoother overlay by image cloning! Thank you again, Kyle, for introducing me to this technique: it combines the high frequencies of the overlay with the low frequencies (a blurred version) of the bottom layer in a fragment shader. He and his friend Arturo made

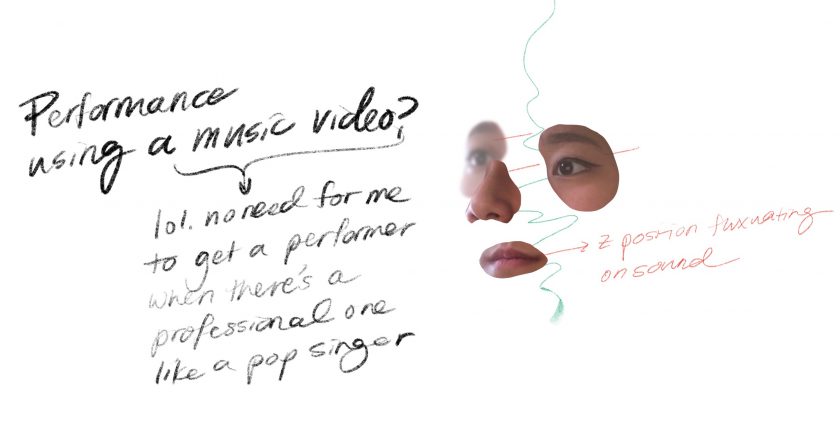
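Here is the cloning idea from the last bullet as a formula: output = overlay − blur(overlay) + blur(frame). The overlay contributes only its fine detail, and the live frame contributes the broad shading, which also covers the brightness-matching problem from the first bullet. The sketch below is deliberately simplified. It runs on the CPU, uses grayscale, and has no face mask. A real implementation would likely run as a shader and keep the blur from mixing in pixels outside the face. `Gray`, `boxBlur`, and `cloneBlend` are hypothetical names.

```typescript
// Grayscale image, row-major, values roughly in [0, 1].
interface Gray {
  width: number;
  height: number;
  data: Float64Array;
}

// Box blur with clamped edges: each output pixel is the mean of its
// (2r+1) x (2r+1) neighbourhood. Naive O(w*h*r^2), fine for a sketch.
function boxBlur(img: Gray, r: number): Gray {
  const { width: w, height: h, data } = img;
  const out = new Float64Array(w * h);
  for (let y = 0; y < h; y++) {
    for (let x = 0; x < w; x++) {
      let sum = 0;
      let n = 0;
      for (let dy = -r; dy <= r; dy++) {
        for (let dx = -r; dx <= r; dx++) {
          const sx = Math.min(w - 1, Math.max(0, x + dx));
          const sy = Math.min(h - 1, Math.max(0, y + dy));
          sum += data[sy * w + sx];
          n++;
        }
      }
      out[y * w + x] = sum / n;
    }
  }
  return { width: w, height: h, data: out };
}

// Keep the overlay's high frequencies, take the low frequencies
// (overall lighting and shading) from the live camera frame.
function cloneBlend(overlay: Gray, frame: Gray, r: number): Gray {
  if (overlay.width !== frame.width || overlay.height !== frame.height) {
    throw new Error("overlay and frame must be the same size");
  }
  const blurredOverlay = boxBlur(overlay, r);
  const blurredFrame = boxBlur(frame, r);
  const out = new Float64Array(overlay.data.length);
  for (let i = 0; i < out.length; i++) {
    out[i] = overlay.data[i] - blurredOverlay.data[i] + blurredFrame.data[i];
  }
  return { width: overlay.width, height: overlay.height, data: out };
}
```

The blur radius is the main tuning knob. A small radius keeps more of the overlay's own shading, while a large one hands more of the lighting over to the scene.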

Option 2: Something new:

I was inspired by Tatyana’s idea of making a ‘Skype’ add-on feature that would shift the caller’s eyes so they always appear to be looking directly at the ‘camera’ (aka the person on the other end of the call)… only in this case, I’d have a “sleeping mask” strapped to my face to disguise my sleepy state as wide awake and attentive. The front-facing camera would run face detection on its view and move my eyes to ‘look’ like I’m staring directly at the nearest face in my field of view. When there’s no face, I could use computer-vision “optical flow” (inspired by Eugene’s use of it for last week’s assignment) to get the eyes to ‘track’ movement and ‘assumed’ points of interest. The interesting bit would be negotiating what I should be looking at: at what size does a face take priority over movement? Or vice versa?
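The face-versus-movement question could start out as a simple priority rule. Here is a minimal sketch, assuming the face detector returns bounding boxes and the optical flow has already been summarized into one centroid and magnitude. The largest face stands in for the nearest one. Every name and threshold here (`chooseGazeTarget`, `minFaceFraction`, `minMotion`) is a hypothetical tuning knob, not something from an existing library.

```typescript
interface Face {
  cx: number;
  cy: number;
  width: number;
  height: number;
}

// Optical flow summarized as where the motion is and how strong it is.
interface Motion {
  cx: number;
  cy: number;
  magnitude: number;
}

type Target = { kind: "face" | "motion" | "rest"; x: number; y: number };

// Faces win once they fill enough of the frame (i.e. are close enough);
// otherwise follow strong motion; otherwise look straight ahead.
function chooseGazeTarget(
  faces: Face[],
  motion: Motion | null,
  frameW: number,
  frameH: number,
  minFaceFraction = 0.02,
  minMotion = 1.0,
): Target {
  let best: Face | null = null;
  for (const f of faces) {
    if (best === null || f.width * f.height > best.width * best.height) best = f;
  }
  if (best !== null && (best.width * best.height) / (frameW * frameH) >= minFaceFraction) {
    return { kind: "face", x: best.cx, y: best.cy };
  }
  if (motion !== null && motion.magnitude >= minMotion) {
    return { kind: "motion", x: motion.cx, y: motion.cy };
  }
  return { kind: "rest", x: frameW / 2, y: frameH / 2 };
}
```

A hard switch like this would probably make the eyes snap between targets. Easing the painted pupils toward whichever target wins would likely read as more natural.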

And the performance could be so much fun! I could just go and fall asleep in a public place, with my phone running the app to display the reactive eyes while the front-facing camera records people’s reactions. Bwahahhaha, there’s something really appealing about crowdsourcing the performance from everyone else’s reactions, making unintentional performers out of the people around me.

And I choose the original path

A Japanese researcher has already made a wonderful iteration of the aforementioned sleeping-mask eyes.

Thank you Kyle McDonald for telling me about this!

And then regret it —

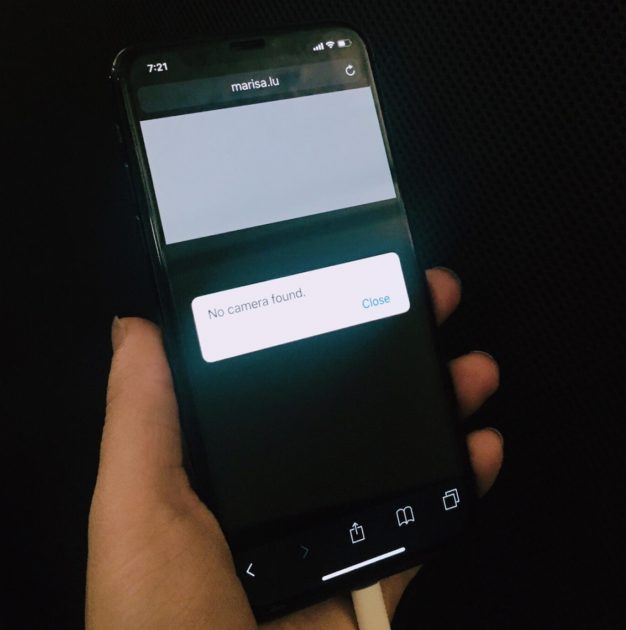

Switching from previewing on localhost on desktop to a mobile browser turned up some differences. An hour later, it turned out to be because I was serving the page over plain HTTP instead of encrypted HTTPS. No camera feed for the insecure!
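That also explains why desktop worked. Browsers only expose `navigator.mediaDevices.getUserMedia` in secure contexts, and `localhost` counts as one even over plain HTTP. A phone loading the page from the laptop's LAN IP address does not. In the page itself, `window.isSecureContext` tells you directly. The sketch below is a simplified, hypothetical re-implementation of the rule, just to make it concrete (the real spec covers more cases). `isLikelySecureContext` is a made-up name.

```typescript
// Simplified take on the "potentially trustworthy origin" rule:
// HTTPS anywhere, or loopback hosts even over plain HTTP.
function isLikelySecureContext(pageUrl: string): boolean {
  const { protocol, hostname } = new URL(pageUrl);
  if (protocol === "https:" || protocol === "wss:") return true;
  return (
    hostname === "localhost" ||
    hostname.endsWith(".localhost") ||
    hostname === "[::1]" ||
    /^127(\.\d{1,3}){3}$/.test(hostname)
  );
}
```

So `http://localhost:8080` gets the camera, while `http://192.168.x.x:8080` on a phone does not. Either serve the dev build over HTTPS or tunnel it through an HTTPS URL.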

Next step: switching over to the rear camera instead of the default front-facing one!

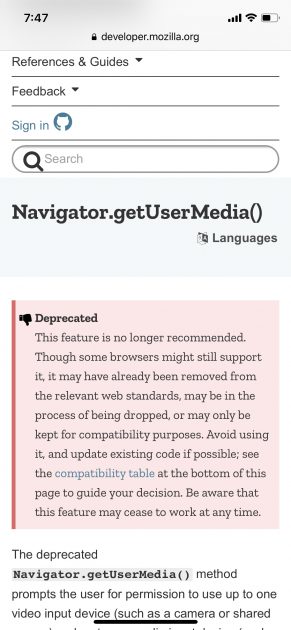

Read up on how to access different cameras on different devices, and on best practices for streaming camera content! In my case, I was trying to get the rear-facing camera on mobile, which turned out to be rather problematic… it often caused the page to reload “because a problem occurred”, with nothing showing up in the console… hmmm…
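I can't say for sure what caused those reloads, but here is a minimal sketch of the usual approach. It requests the rear camera with `facingMode: "environment"` as an `ideal` rather than an `exact` constraint, so a desktop without a rear camera falls back instead of throwing. It also stops the old stream's tracks before asking for another camera, because some mobile browsers may refuse to open a second camera while the first is still live. The small local interfaces stand in for the DOM types so the sketch is self-contained. In a browser you would pass `navigator.mediaDevices` as `devices`. The function names are hypothetical.

```typescript
// Minimal stand-ins for MediaStreamTrack / MediaStream / MediaDevices.
interface TrackLike {
  stop(): void;
}
interface StreamLike {
  getTracks(): TrackLike[];
}
interface CameraConstraints {
  audio: boolean;
  video: {
    facingMode: { ideal: string };
    width: { ideal: number };
    height: { ideal: number };
  };
}
interface MediaDevicesLike {
  getUserMedia(constraints: CameraConstraints): Promise<StreamLike>;
}

// "environment" means the rear camera; `ideal` lets the browser fall back.
function rearCameraConstraints(width = 1280, height = 720): CameraConstraints {
  return {
    audio: false,
    video: { facingMode: { ideal: "environment" }, width: { ideal: width }, height: { ideal: height } },
  };
}

// Release the current camera before requesting the rear one.
async function switchToRearCamera(devices: MediaDevicesLike, current: StreamLike | null): Promise<StreamLike> {
  if (current !== null) {
    for (const track of current.getTracks()) track.stop();
  }
  return devices.getUserMedia(rearCameraConstraints());
}
```

On iPhone Safari, the `<video>` element showing the stream also needs the `playsinline` attribute (plus `muted` and `autoplay` to start without a tap), or it tries to take over the screen in fullscreen playback.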

hmmm….

I swear it works ok on desktop. What am I doing wrong with mobile?

Anywhoooooooooo — eventually things sort themselves out, but next time? Definitely going to try and go native.