Contents

- Appetizers: Stable Diffusion Image Variations (announced yesterday and only up for a limited time)

- Sharing of exercises.

- Discussion: Do titles matter?

- Discussion: This is fun, but is this authorship?

- Discussion: Automated plagiarism?

- Google Colab notebooks (e.g. giant list; demo; Chinese text-to-image etc.)

- More like this please (Runway)

- Train a teachable machine

Discussion: Do prompts/titles matter?

What is the relationship of text to image in the works you have created with MidJourney? Under what circumstances is the prompt necessary to appreciate the image?

Discussion: This is fun, but is this authorship?

This article shows how one might develop expertise at prompt engineering. For many of us (visual artists), synthesizing images this way is giddy fun—an enjoyable pastime for a holiday weekend. But: do you feel like (A) “you” are (B) “making” (C) “art”?

A MidJourney user won a state fair art prize for their AI-generated image (NYTimes). [PDF]

Discussion: Automated Plagiarism?

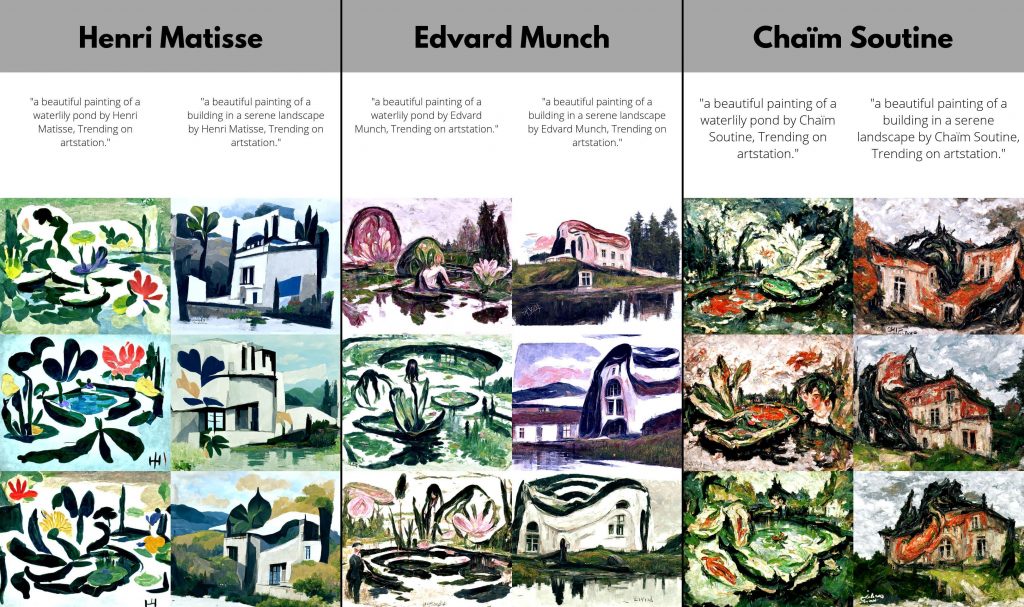

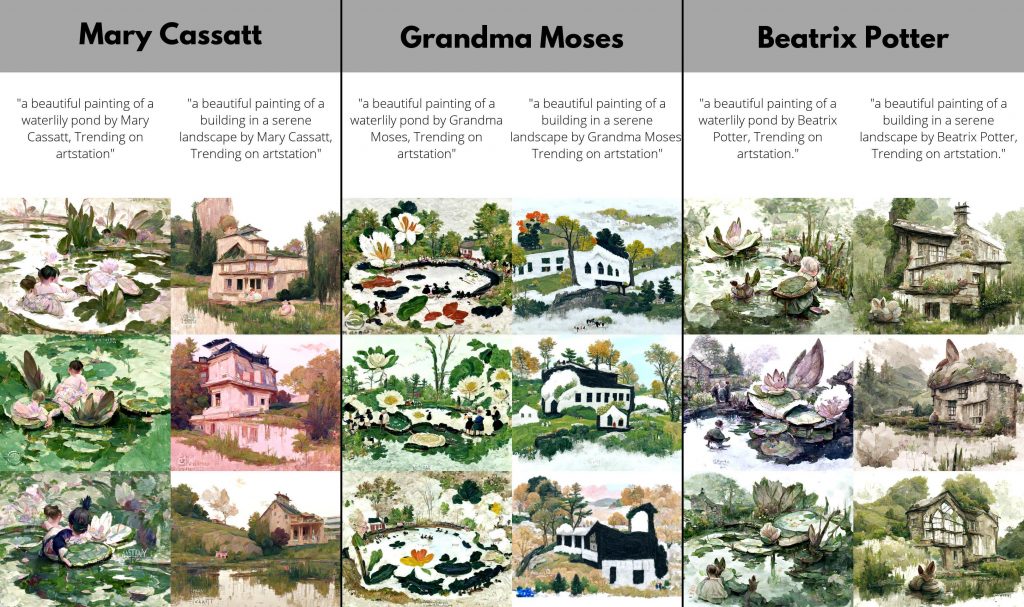

The large models used in MidJourney, Dall-E, etc. are trained on hundreds of millions of images vacuumed up from the Internet. When this happens, images by actual artists, both living and dead, get blended into the training data. It becomes possible to generate images that appear stylistically close to the work of these artists, as demonstrated by this site.

Thoughts by Nick Cave

From “Music, Feeling, and Transcendence: Nick Cave on AI, Awe, and the Splendor of Our Human Limitations”:

It is perfectly conceivable that AI could produce a song as good as Nirvana’s “Smells Like Teen Spirit,” for example, and that it ticked all the boxes required to make us feel what a song like that should make us feel — in this case, excited and rebellious, let’s say. It is also feasible that AI could produce a song that makes us feel these same feelings, but more intensely than any human songwriter could do.

But, I don’t feel that when we listen to “Smells Like Teen Spirit” it is only the song that we are listening to. It feels to me, that what we are actually listening to is a withdrawn and alienated young man’s journey out of the small American town of Aberdeen — a young man who by any measure was a walking bundle of dysfunction and human limitation — a young man who had the temerity to howl his particular pain into a microphone and in doing so, by way of the heavens, reach into the hearts of a generation. We are listening to Beethoven compose the Ninth Symphony while almost totally deaf. We are listening to Prince, that tiny cluster of purple atoms, singing in the pouring rain at the Super Bowl and blowing everyone’s minds. We are listening to Nina Simone stuff all her rage and disappointment into the most tender of love songs. We are listening to Paganini continue to play his Stradivarius as the strings snapped.

What we are actually listening to is human limitation and the audacity to transcend it. Artificial Intelligence, for all its unlimited potential, simply doesn’t have this capacity. How could it? And this is the essence of transcendence. If we have limitless potential then what is there to transcend? The awe and wonder we feel is in the desperate temerity of the reach, not just the outcome. Where is the transcendent splendour in unlimited potential? AI would have the capacity to write a good song, but not a great one. It lacks the nerve.

Can artists use AI to transcend human limitations?

Teachable Machine

Google’s Teachable Machine tool allows you to train a (neural-network-based) image recognition system, in the browser, without code. (Note that its models can also be used with many coding toolkits, like p5.js.)
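An image classifier like this typically reports a confidence score for each class it was trained on, and it is up to your own code to decide what to do with those scores. Here is a minimal sketch of that decision step in Python. The function name, the class labels, and the 0.8 threshold are all hypothetical choices made for illustration; they are not part of Teachable Machine itself.

```python
# Hypothetical helper (not part of Teachable Machine): turn a classifier's
# per-class confidence scores into a single decision.

def top_prediction(scores, threshold=0.8):
    """Return (label, confidence) for the highest-scoring class.

    If the best confidence is below `threshold`, the label is None,
    meaning "not sure enough to act".
    """
    if not scores:
        raise ValueError("scores must contain at least one class")
    # Pick the class whose confidence is highest.
    label = max(scores, key=scores.get)
    confidence = scores[label]
    if confidence < threshold:
        return None, confidence
    return label, confidence


# Made-up scores for three made-up classes (assumed, for illustration only).
example_scores = {"not ready": 0.07, "ready to flip": 0.91, "no pancake": 0.02}
label, confidence = top_prediction(example_scores)
print(label, confidence)
```

Using a threshold is a design choice: it lets a project do nothing when the classifier is unsure, rather than acting on a weak guess.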

Contributors to the project include recent CMU architecture alumna Irene Alvarado and undergrad Design alums Lucas Ochoa and Gautam Bose. Gautam and Lucas have made an absurd cereal sorter:

Here’s another project breakdown by Gautam and Lucas, on making a system that tells you when your pancakes are ready to flip: