Machine-Assisted Creativity

This unit has 5 parts, A through E (Part E includes a short preparatory step), as follows:

- #1A-LookingOutwards (30 minutes, due 9/6)

- #1B-Pix2Pix (10 minutes, due 9/6)

- #1C-TextSynthesis (10 minutes, due 9/6)

- #1D-PromptEngineering (60-90 minutes, due 9/6)

- #1E-Prep-for-AppliedAI (10 minutes, due 9/8)

- #1E-AppliedAI (main project, 6 hours, due 9/13)

NOTE:

- Parts A, B, C, and D have a recommended due date of 9/6.

- Part E is the primary project and has a firm due date of 9/13; you will present it in class. You should also be prepared to discuss your project-in-process on 9/8.

1A. Looking Outwards #01: Machine Learning Arts

(30 minutes) Spend about 30 minutes browsing the following online showcases of projects that make use of machine learning and ‘AI’ techniques. Look beyond the first page of results; hundreds of projects are indexed across these sites.

- MLArt.co Gallery (a collection of Machine Learning experiments curated by Emil Wallner).

- AI Art Gallery (online exhibition of the NeurIPS Workshop on Machine Learning for Creativity and Design; note that this site also hosts several years’ worth of annual exhibitions).

- Chrome Experiments: AI Collection (a showcase of experiments, commissioned by Google, that explore machine learning through pictures, drawings, language, and music).

Now:

- After viewing at least 5 projects, select one to feature in a Looking Outwards post.

- Create a post in the Discord channel, #1A-LookingOutwards.

- Include an image of the project you selected, link to information about it, and write a couple of sentences describing the project and why you found it interesting.

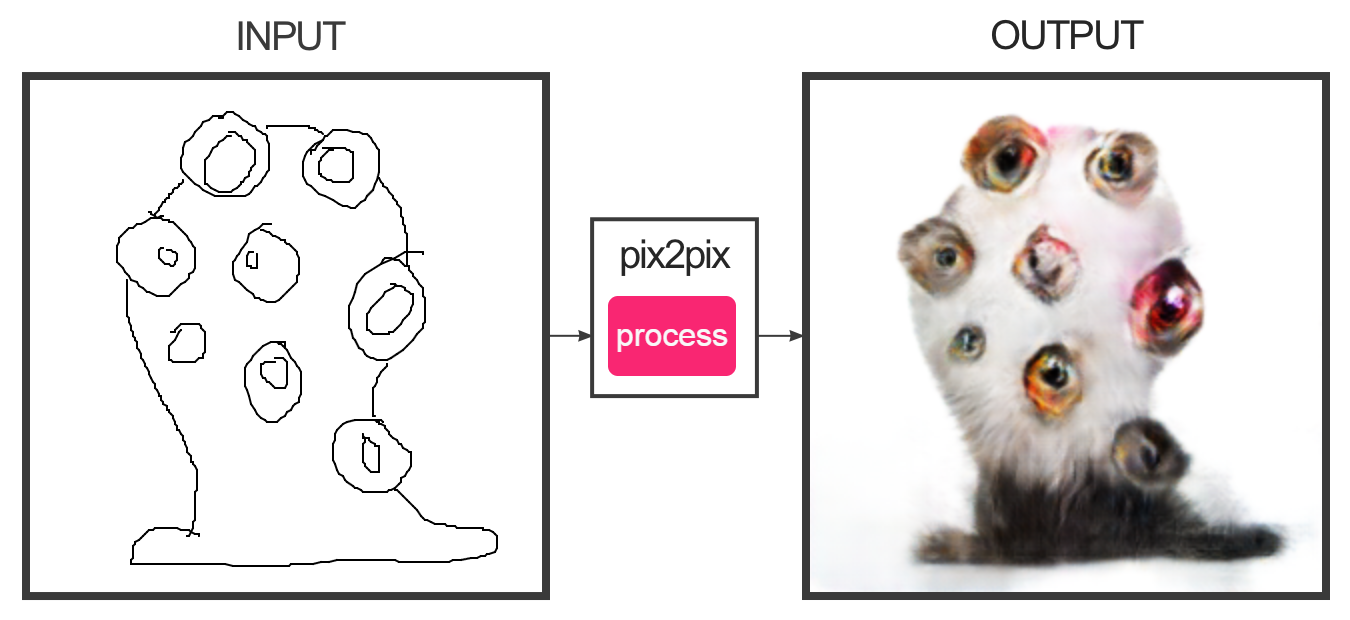

1B. Image-to-Image Translation: Pix2Pix

(10 minutes) Spend some time with the Image-to-Image (Pix2Pix) demonstration page by Christopher Hesse. Experiment with edges2cats and some of the other interactive demonstrations (such as facades, edges2shoes, etc.). You are asked to:

- Create a post in the Discord channel, #1B-Pix2Pix.

- Create several different designs. Screenshot your work so as to show both your input and the system’s output. Embed your favorite result into the Discord post.

- In the post, write a reflective sentence about your experience using this tool.

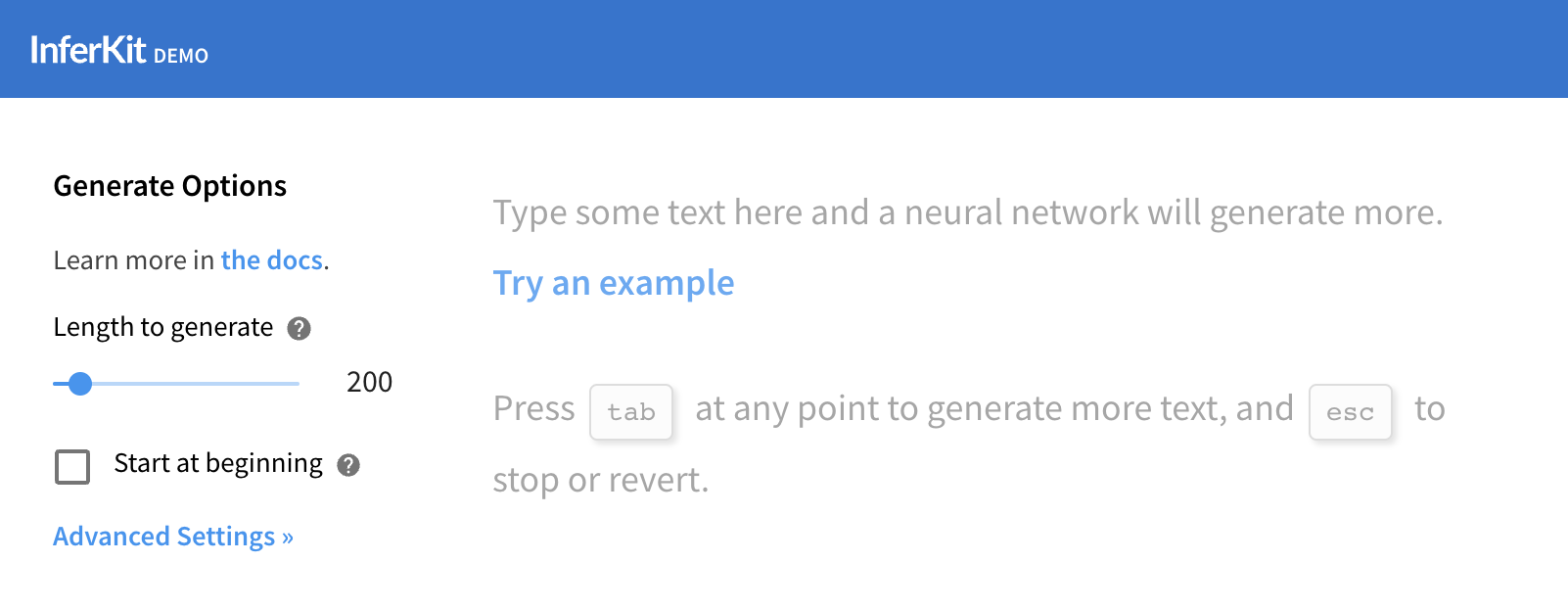

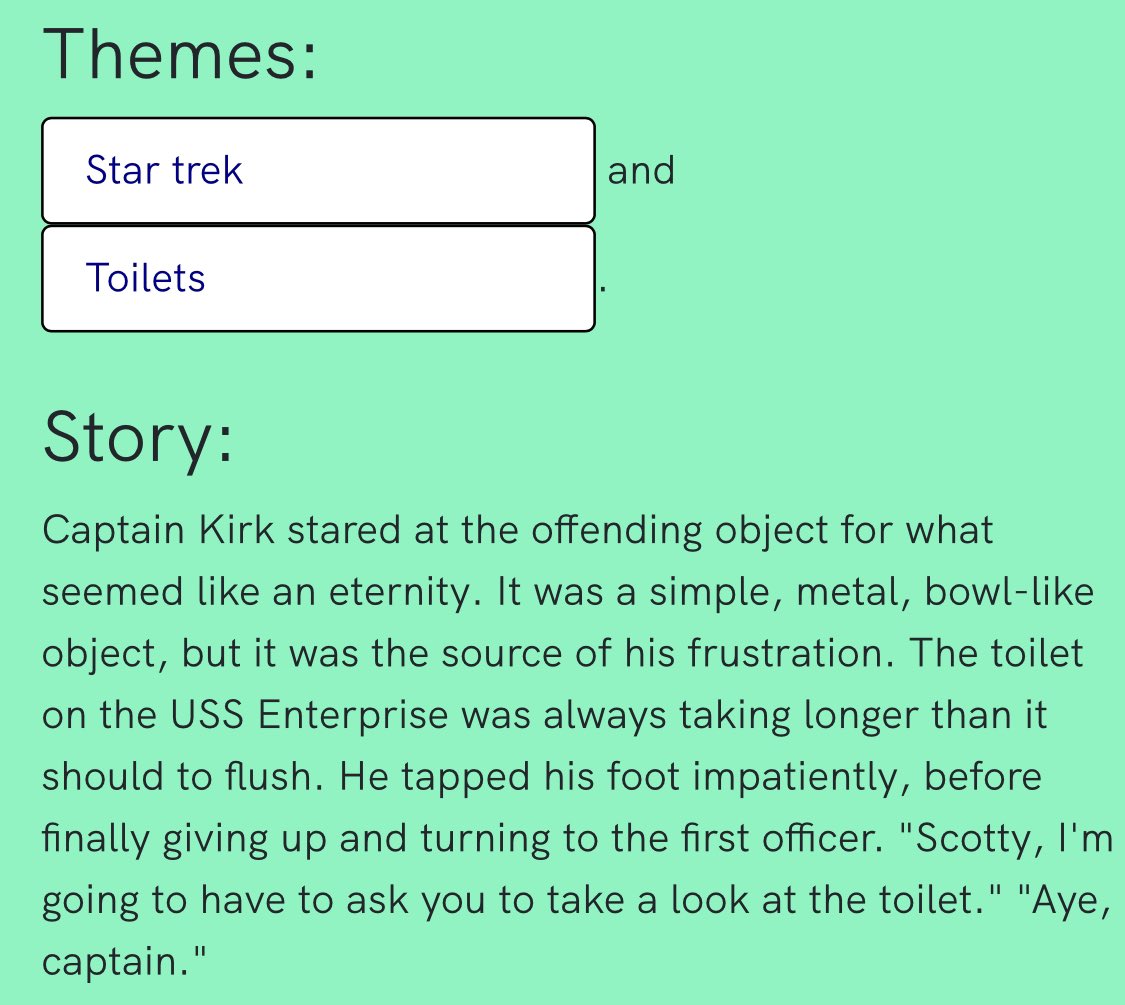

1C. Text Synthesis with GPT-3

(10 minutes) In this exercise, you will use some browser-based interfaces to OpenAI’s GPT-3 language model to generate some text that intrigues you.

In particular, experiment with each of these tools until you produce a text fragment that you find interesting:

- Try the InferKit tool by Adam King

- Try the Narrative Device by Rodolfo Ocampo (you’ll need to register for a free token)

Now:

- Create a Discord post in the channel #1C-TextSynthesis.

- Embed your text experiment in the post. Use boldface to indicate the words that you provided as inputs to the system.

- Also in your post, write a reflective sentence or two about your experience using these tools.

1D. Text-Based Image Synthesis (Prompt Engineering)

(60-90 minutes) Use machine learning systems that have been trained on millions of images and billions of words to generate some images that intrigue you.

You may wish to browse the following Twitter accounts to see how others have done this: @weirddalle, @images_ai, @RiversHaveWings, @advadnoun, @0xCrung, and @quasimondo. If you like this work a lot, you may also wish to check out @unltd_dream_co, @Somnai_dreams, @thelemuet, and @aicrumb. You can also browse uncurated examples in the Midjourney Discord.

The following services all generate images from textual descriptions using similar algorithms, but they differ in image quality, speed, user interface controls, and interestingness. Most have a free tier that you may quickly exhaust; paid accounts have been provided for Midjourney and ArtBreeder. My recommendation is to browse the Midjourney Discord and start there.

- Midjourney (recommended; check the Discord for paid account details)

- ArtBreeder (recommended; check the Discord for paid account details)

- Craiyon

- HuggingFace StableDiffusion

- DreamStudio.ai

- Pixray Text2Image

- SimpleStable

- MindsEye

Now:

- Create a Discord post in the channel #1D-PromptEngineering.

- Generate a half-dozen or so final images, and present them in the Discord post.

- Write a few sentences of reflective commentary.

Note: You may find it helpful to use what Kate Compton has called “seasonings” (discussed in this Twitter thread): extra phrases and hashtags (like “trending on ArtStation”) that hint and inflect the image synthesis in interesting ways.
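If you want to try seasonings systematically, here is a minimal Python sketch that builds prompt variations to paste into whichever service you are using. It does not call any image service. The function names (`season`, `variations`), the choice to join phrases with commas, and every example phrase other than “trending on ArtStation” are assumptions made up for illustration, not anything prescribed by the tools above.

```python
from itertools import product


def season(base_prompt, seasonings):
    """Append seasoning phrases to a base prompt, separated by commas.

    Joining with commas is an assumption; services may treat
    punctuation differently.
    """
    parts = [base_prompt.strip()] + [s.strip() for s in seasonings if s.strip()]
    return ", ".join(parts)


def variations(base_prompt, *seasoning_groups):
    """Yield one prompt per combination, taking one phrase from each group."""
    for combo in product(*seasoning_groups):
        yield season(base_prompt, combo)


# Hypothetical example phrases; swap in your own.
styles = ["trending on ArtStation", "35mm film photograph"]
moods = ["foggy morning light", "#surreal"]

prompts = list(variations("a lighthouse made of bread", styles, moods))
for p in prompts:
    print(p)
```

Running the same base prompt through each variation makes it easier to see what a given seasoning actually contributes to the result.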

The following additional resources may be helpful:

- Prompt-Engineering Tip

- Kate Compton on “Seasonings”

- Kevin Slavin on virtual photography

- AI Art Is Challenging the Boundaries of Curation

- Dirt: Everything Dall-e

- Thread on Image-Gen & Copyright

- How Photography Became an Art-Form

- Into prompt engineering for A.I.

1E. Applied AI

The main project, due Tuesday 9/13, is to use one or more of the above techniques to create something interesting to you.

For Thursday, 9/8, make an entry in the Discord channel, #1e-prep-for-appliedai, and write a couple of sentences about what you’re planning (and add a quickie sketch image if possible). For example, here are some possible things you might create (this list is not exhaustive):

- a ‘zine

- an abecedarium (alphabet book, e.g. “A is for Apple”, etc.)

- an illustrated children’s book

- a (brief!) animation

- an illustrated slide lecture presentation

- a storyboard

- a comic strip

- a cookbook

- a coffee-table travel book for an imaginary place (e.g. Hades)

- an Alien Skymall catalogue

- a fashion line

- a collection of temporary tattoos or stickers

- a paper theater

- a prank; etc. etc.

In addition to the tools we’ve already used, many additional AI-powered tools can be found listed in this Twitter thread (a great one is cleanup.pictures). And here’s another massive list of free tools. I challenge or encourage you to try combining multiple tools (as Karen Cheng demonstrates in this recent thread), but this isn’t a strict requirement. Now:

- Don’t be too ambitious. You only have a week to make this thing, you’ve got other classes, and it’s hard to be human. Make something good, but make something small.

- Be prepared to discuss your informal project proposal in class on 9/8.

- Create a Discord post, in the #1E-AppliedAI channel.

- Document your project in the post. Include a link to your video, book, etc., as appropriate.

- Be prepared to present your project in class on 9/13. You’ll present the project from your Discord post, so make sure it’s uploaded before class.