Portrait — Ghost Eater

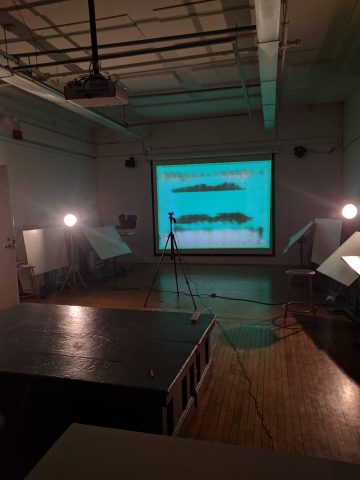

I captured the ghost of Ngdon. Here it is being called upon, during Open Studio 2016.

How

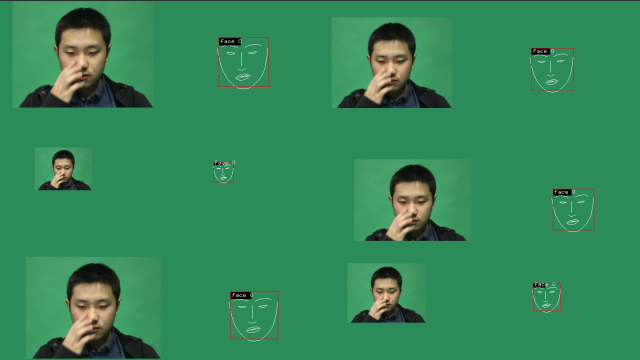

I trained a pix2pix convolutional neural network (a variant of a conditional GAN, or cGAN) to map facetracker debug images to Ngdon’s face. The training data was extracted from two interviews conducted with Ngdon about his memories of his life. I built a short openFrameworks application that takes the input video, splits it into frames, and runs and draws the face tracker on each one. For each frame, the application produces 32 copies with varying scales and offsets. This data augmentation massively increases the quality and diversity of the final face-mapping.
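To give a rough idea of what the augmentation step does, here is a minimal sketch in plain Python. It is not the openFrameworks code: the function names, the 0.8 to 1.2 scale range, and the ±10% offset range are all assumptions made for illustration, and images are represented as simple 2D lists.

```python
import random

def augmentation_params(n_copies=32, scale_range=(0.8, 1.2), max_offset=0.1, seed=0):
    """Return n_copies (scale, dx, dy) tuples.

    dx and dy are offsets expressed as a fraction of the image size.
    The ranges are illustrative guesses, not the values used in the project.
    """
    rng = random.Random(seed)
    params = []
    for _ in range(n_copies):
        scale = rng.uniform(*scale_range)
        dx = rng.uniform(-max_offset, max_offset)
        dy = rng.uniform(-max_offset, max_offset)
        params.append((scale, dx, dy))
    return params

def transform(image, scale, dx, dy, fill=0):
    """Scale a 2D-list image about its centre, then shift it.

    Uses nearest-neighbour sampling; pixels that fall outside the
    source image are set to `fill`.
    """
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Map each output pixel back to the source pixel it came from.
            sx = round((x - cx - dx * w) / scale + cx)
            sy = round((y - cy - dy * h) / scale + cy)
            inside = 0 <= sx < w and 0 <= sy < h
            row.append(image[sy][sx] if inside else fill)
        out.append(row)
    return out

def augment(image, **kwargs):
    """Produce one transformed copy of `image` per parameter set."""
    return [transform(image, s, dx, dy) for s, dx, dy in augmentation_params(**kwargs)]
```

Calling `augment(frame)` returns 32 copies of `frame`. If the tracker drawing and the face photo are stored as separate images, the same `(scale, dx, dy)` would need to be applied to both so that each pair stays aligned.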

For instance, here are 6 variations of one of the input frames:

These replicated frames are then fed into phillipi/pix2pix. The neural network learns to map the right half of each frame to the left half. I trained the network for roughly 6 to 10 hours on a GTX 980.
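The side-by-side layout can be sketched as follows. This is a hedged illustration rather than pix2pix's own loader: `make_pair` and `split_pair` are hypothetical helper names, images are plain 2D lists, and placing the face on the left and the tracker image on the right is inferred from the right-to-left mapping described above.

```python
def make_pair(target, source):
    """Join two equal-size images side by side.

    The target (the face) goes on the left and the source
    (the tracker debug image) goes on the right.
    """
    same_height = len(target) == len(source)
    if not same_height or any(len(t) != len(s) for t, s in zip(target, source)):
        raise ValueError("images must have the same dimensions")
    return [t_row + s_row for t_row, s_row in zip(target, source)]

def split_pair(pair):
    """Split a side-by-side frame into (network_input, expected_output)."""
    half = len(pair[0]) // 2
    left = [row[:half] for row in pair]
    right = [row[half:] for row in pair]
    # The network reads the right half and is trained to produce the left half.
    return right, left
```

For example, `split_pair(make_pair(face, tracker))` gives back `(tracker, face)`, which is the input and target order the training step would consume.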

At run-time, a small openFrameworks application takes webcam input from a PS3 Eye, processes it with the dlib facetracker, and sends the debug image over ZMQ to a server running the neural network, which then sends back its image of Ngdon’s face. With a GTX 980, and on CMU wifi, it runs at ~12fps.

The mapping works really well with opening and closing your mouth and eyes and with varying head orientations, although the above video only explores this minimally.

The source code is available here: aman-tiwari/ghost-eater.

Here is a gif for when Vimeo breaks: