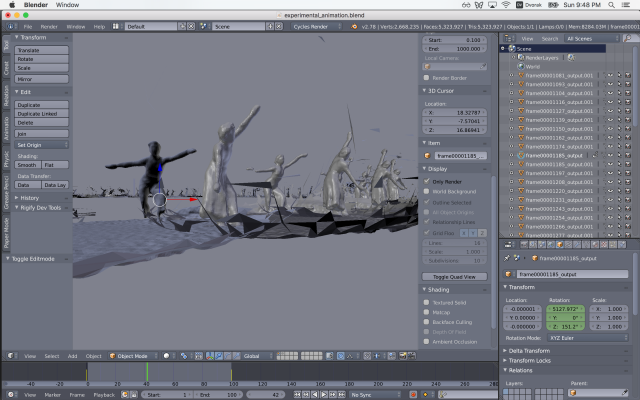

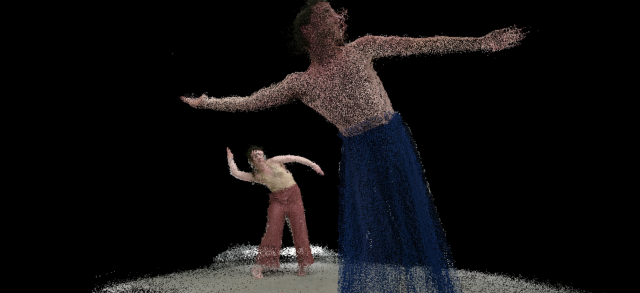

I am still working on looping, and will experiment tomorrow with rotoscoping and looping around a 360 video.

After talking with other students, I was inspired to work on a project combining strobes and continuous light. I would also like to play with focus blur in combination with this effect, but I need more hands to do that.

Flash/continuous mixing is not a new technique. Combining continuous light and flash is a necessary tool for photographers who shoot with flash outdoors. A flash pulse is much shorter than the exposure, so (up to the camera's flash sync speed) the flash output is not affected by the shutter speed, while continuous light keeps building up for as long as the shutter stays open. A photographer can therefore change the shutter speed to get independent, in-camera control over the balance between flash and continuous light. It's a very powerful (and difficult) tool for photographers to master.
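The shutter-speed balance can be put into rough numbers. The sketch below is a simplified model, assuming a fixed aperture and ISO, a shutter speed at or below the flash sync speed, and a flash pulse that falls entirely inside the exposure. The light levels (`ambient_rate`, `flash_energy`) are hypothetical values in arbitrary linear units, not measurements from my setup. Ambient exposure scales with shutter time while the flash contributes a fixed amount, so each doubling of the shutter time adds one stop of continuous light relative to the flash.

```python
import math


def exposure_mix(shutter_s, ambient_rate, flash_energy):
    """Return (ambient, flash) exposure in arbitrary linear units.

    Simplified, hypothetical model: aperture and ISO are fixed, the shutter
    speed is at or below flash sync, and the whole flash pulse lands
    inside the exposure.
    """
    # Continuous light accumulates for as long as the shutter is open.
    ambient = ambient_rate * shutter_s
    # The flash pulse is shorter than the exposure, so its contribution
    # does not depend on the shutter speed.
    flash = flash_energy
    return ambient, flash


def ambient_to_flash_stops(shutter_s, ambient_rate, flash_energy):
    """Brightness of continuous light relative to flash, in stops (log base 2)."""
    ambient, flash = exposure_mix(shutter_s, ambient_rate, flash_energy)
    return math.log2(ambient / flash)


if __name__ == "__main__":
    # Hypothetical scene where the two lights match at 1/60 s.
    for label, shutter in (("1/250", 1 / 250), ("1/60", 1 / 60), ("1/15", 1 / 15), ("1/4", 1 / 4)):
        stops = ambient_to_flash_stops(shutter, ambient_rate=1.0, flash_energy=1 / 60)
        print(f"{label:>6} s: continuous light is {stops:+.1f} stops relative to flash")
```

With these made-up numbers, going from 1/60 s to 1/15 s quadruples the continuous-light exposure (two stops) while the flash stays put, which is the independent control described above.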

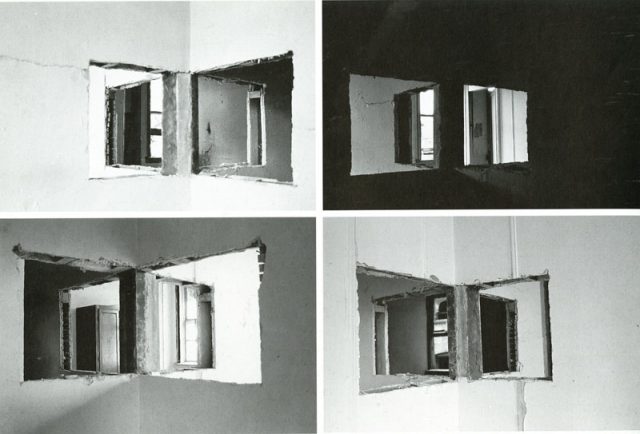

I have used this before for creative ends: in the image above, I shook my camera while firing my flash.

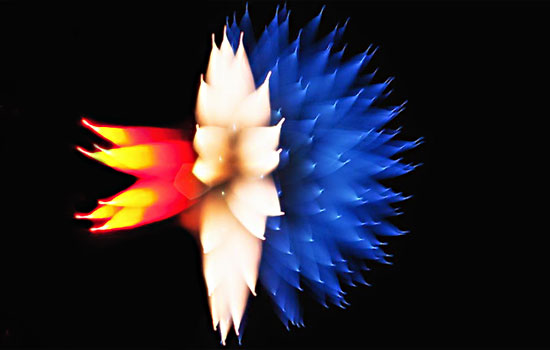

I set up a quick studio: a black backdrop, a continuous LED light (right), and a flash (top left, above the lamp) with a grid on it to control spill. The standing lamp (bright spot, left) was not used during the exposure.

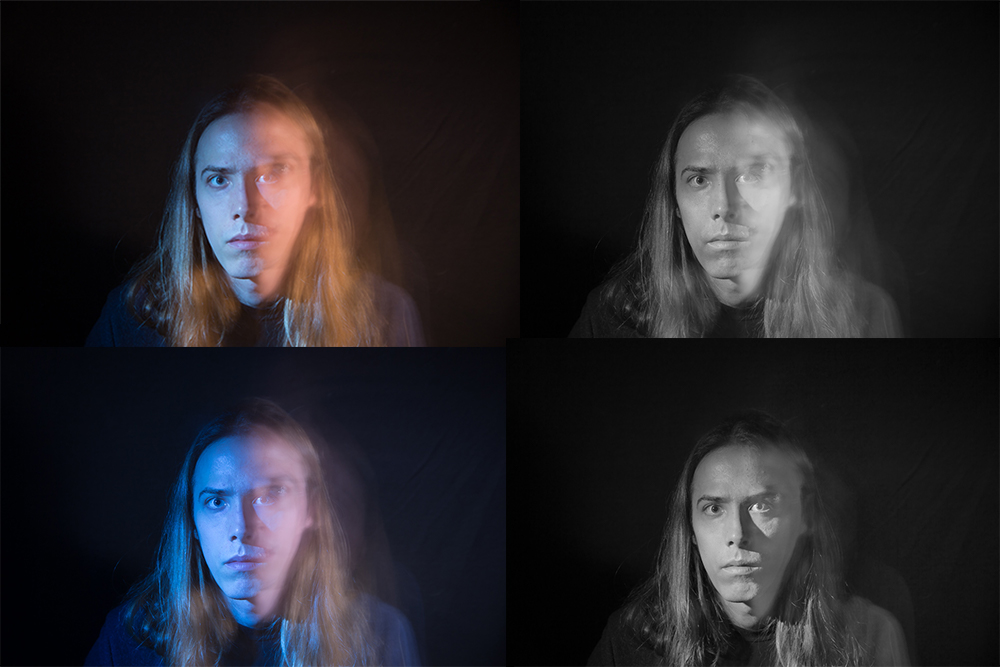

There is one clever thing I did: I put a warm filter over my continuous light and a cooling gel over my flash. By shooting RAW and adjusting the white balance, I had independent control over both lights, and I could push their colors further toward blue and orange. I then converted the image to black and white. In the black-and-white mix, I can adjust the white balance and the brightness of my blue and orange channels.

This way, I could fine-tune the balance after the fact and edit my image with much more precision and control over the perceived lighting and shadows than masks or dodging and burning would have given me.
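A simplified way to see why the gels make this work: when each light has its own color, a black-and-white conversion that weights the color channels differently brightens one light's contribution and darkens the other's. The sketch below models a pixel as a linear mix of a warm-gelled continuous light and a cool-gelled flash, treats white balance as a per-channel gain, and treats the black-and-white mix as a weighted sum of R, G, and B. The gel colors, gains, and weights are made-up illustrative values. Raw editors usually expose hue-based sliders (orange, blue, and so on) rather than raw RGB weights, but the principle is the same.

```python
# Hypothetical gel colors as linear RGB (illustrative values, not measurements).
WARM_GEL = (1.0, 0.6, 0.3)  # continuous LED under the warm filter
COOL_GEL = (0.3, 0.6, 1.0)  # flash under the cooling gel


def render_pixel(continuous, flash):
    """Linear RGB of a pixel receiving `continuous` and `flash` amounts of each light."""
    return tuple(continuous * w + flash * c for w, c in zip(WARM_GEL, COOL_GEL))


def white_balance(rgb, gains):
    """White balance modeled as a simple per-channel gain."""
    return tuple(v * g for v, g in zip(rgb, gains))


def bw_mix(rgb, weights):
    """Black-and-white channel mix: a weighted sum of R, G and B."""
    return sum(v * w for v, w in zip(rgb, weights))


if __name__ == "__main__":
    lit_by_continuous = render_pixel(1.0, 0.0)  # area only the LED reaches
    lit_by_flash = render_pixel(0.0, 1.0)       # area only the gridded flash reaches
    mixes = {
        "neutral": (1 / 3, 1 / 3, 1 / 3),
        "favor warm": (0.6, 0.3, 0.1),
        "favor cool": (0.1, 0.3, 0.6),
    }
    for name, weights in mixes.items():
        print(f"{name:>10}: continuous {bw_mix(lit_by_continuous, weights):.2f}, "
              f"flash {bw_mix(lit_by_flash, weights):.2f}")

    # A warmer white balance (hypothetical gains) also shifts the balance,
    # even with neutral mix weights.
    warm_wb = (1.2, 1.0, 0.8)
    neutral = mixes["neutral"]
    print(f"warmer WB: continuous {bw_mix(white_balance(lit_by_continuous, warm_wb), neutral):.2f}, "
          f"flash {bw_mix(white_balance(lit_by_flash, warm_wb), neutral):.2f}")
```

With these symmetric made-up gel colors, the neutral weights render both areas to the same gray. Shifting weight toward red or blue brightens the area lit by the matching light and darkens the other, which is the after-the-fact control over light and shadow described above.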

These are all from the same source image. The left images are adjustments of white balance, and the right images include white balance and black/white mix adjustments to get different looks. Notice the shadows on the image-right side of my face in the BW photos above.

Moving around during the exposure produced the standard “multi-exposure,” “two-face” look, which is pretty cliché. Heisler nailed it; I see no reason to make a bad imitation.

Attempting to stay still, however, proved more interesting. Uncanny, you might say.

Next is the direction I may actually take for my final event project. For this image, I removed the color filters from both lights. The color version still looks like junk, so I converted to black and white here (okay, with some split toning to bring back my blue/orange palette in spirit), but for a final project I may shoot in color or go for a more documentarian feel (read: ‘non-artsy’, ‘point-and-shoot’, ‘just captured a slice of life’).

I light different parts of the scene with continuous and strobe light. The continuous light records blur, which gives a sense of movement and captures an event, while the strobe lets me highlight one part of that event (a face) with clarity, all in-camera. In this case, the event is drinking a beer. The continuous light barely reaches my face, so the beer disappears when I raise it. You can see me fidgeting, crossing and uncrossing my legs, moving the beer, and so on. Note: this image is intentionally underexposed (I pulled the exposure down in post).

Again, this image was created in-camera; colors, tones, and acne were edited in post for fun and out of habit.

If I pursue this technique for my final project, I would get a model so I could focus on the photography side of things. I'd like to capture individuals performing different actions, pushing motion further than would be possible without a flash.

For my event project, I am largely interested in investigating things that happen underwater. I have two possible methods for capture: