Echolocation Depth Map of the Electrical Engineering Lab

We experience and understand environments primarily using the sense of sight. But what if we could see using sound? I was inspired by bats, which navigate using high-frequency sound waves to sense their surroundings even in the dark. I was also inspired by Ben Snell’s LIDAR project. LIDAR is a technology that measures distance by illuminating its target with laser light and measuring the reflection. In a way, I’m attempting to build my own echolocation-based version of LIDAR. The location I’ve chosen is the Electrical Engineering lab at CMU, which is very personal to me because I’ve spent many long hours there.

Turns out, there’s this thing called an Ultrasonic Sensor:

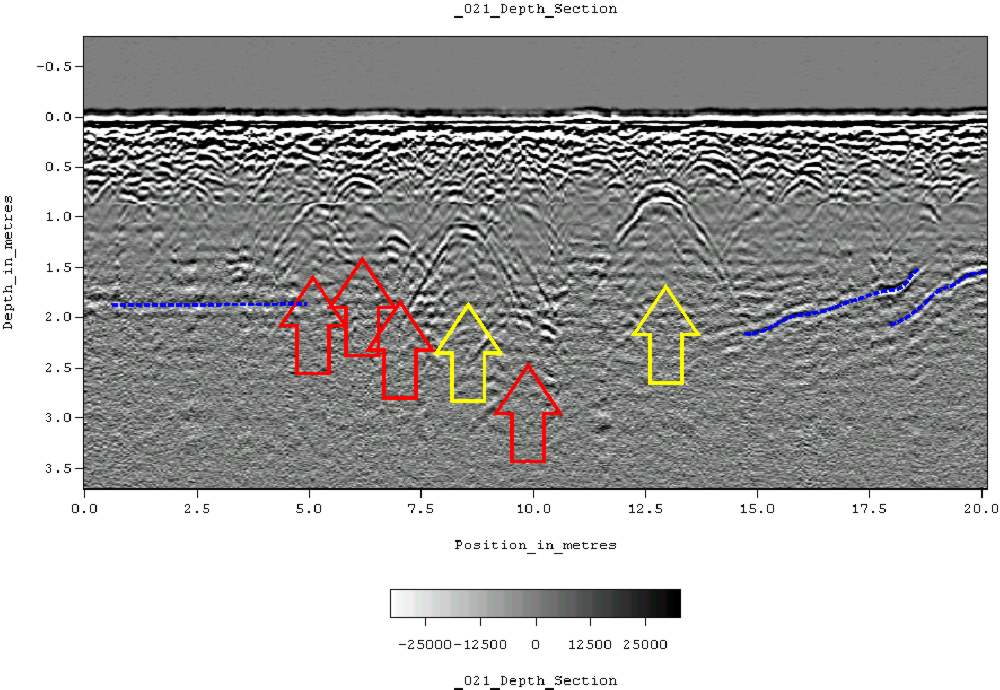

It emits a high-frequency pulse of sound and then waits for the echo to come back. From how long the echo takes to return, it can tell how far away something is.
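The ranging step boils down to one formula. The sound travels out and back, so the distance is half of speed times round-trip time. Here is a minimal Python sketch. It assumes the sensor reports the round-trip echo time. Hobby modules such as the HC-SR04 do this through the width of an echo pulse, but the post doesn't name a model. It also uses the common approximation for the speed of sound in dry air, about 331.3 + 0.606·T m/s at T °C. The function names are hypothetical.

```python
def speed_of_sound_m_per_s(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_time_to_distance_m(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Convert a round-trip echo time (seconds) into a one-way distance (meters).

    The pulse travels to the object and back, so we halve speed * time.
    """
    if echo_time_s < 0:
        raise ValueError("echo time cannot be negative")
    return speed_of_sound_m_per_s(temp_c) * echo_time_s / 2.0


# A 10 ms round trip at room temperature means the object is roughly 1.7 m away.
print(round(echo_time_to_distance_m(0.010), 2))
```

Temperature only shifts the result by a few percent across normal indoor conditions, so a fixed 20 °C is probably fine for a rough depth map.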

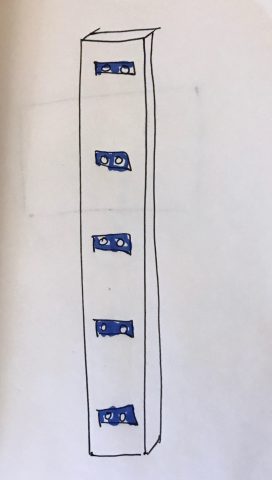

Giant 2×4 covered with Ultrasonic Sensors

I hypothesize that by covering a big stick with Ultrasonic Sensors, I can construct a rough depth map of the EE lab. I want to do this with the lights off.

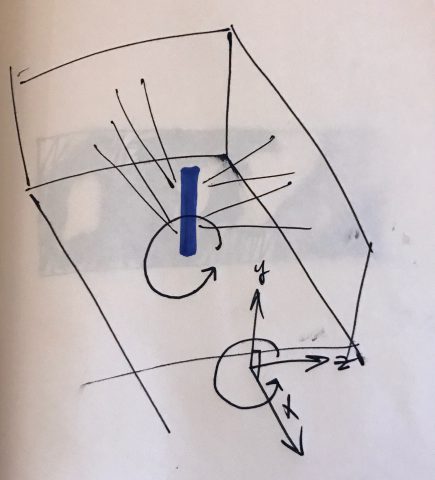

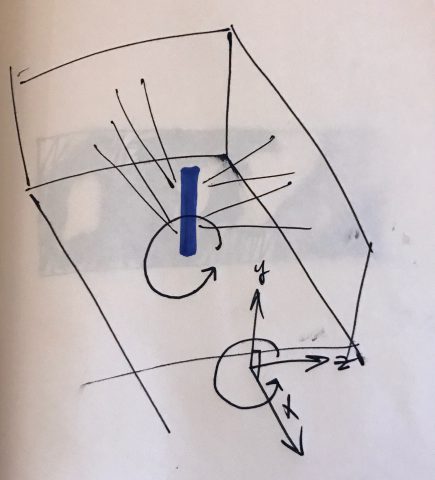

By placing the sensors at regular intervals, I know each sensor’s height (y). If I stand in one spot with the pole, I know where I am on the floor (x). The only remaining question is “how far away is everything else?” (z).
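The coordinate bookkeeping above fits in a few lines of Python. This sketch turns one snapshot of the pole into (x, y, z) points. The bottom-sensor height and the spacing between sensors are hypothetical placeholders, not measurements from the actual 2×4.

```python
from typing import List, Tuple

# Hypothetical pole layout: placeholder values, not measured from the project.
BOTTOM_SENSOR_HEIGHT_M = 0.10  # height of the lowest sensor above the floor
SENSOR_SPACING_M = 0.15        # vertical gap between neighboring sensors


def column_to_points(standing_x_m: float,
                     depths_m: List[float]) -> List[Tuple[float, float, float]]:
    """Turn one snapshot of the pole into (x, y, z) points.

    x: where I'm standing on the floor (fixed while I hold the pole in one spot)
    y: sensor height, known from its index along the pole
    z: distance reported by that sensor
    """
    points = []
    for index, depth in enumerate(depths_m):
        y = BOTTOM_SENSOR_HEIGHT_M + index * SENSOR_SPACING_M
        points.append((standing_x_m, y, depth))
    return points


print(column_to_points(2.0, [1.0, 1.5, 3.0]))
```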

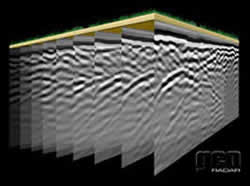

I think that if I spin around in a circle and time how long it takes, I’ll be able to create a 180-degree image of the lab (basically a cylinder). I bet there’s a way to do this more precisely with motors, but honestly I’ll probably just wind up spinning around in a circle.
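Timing the spin works only if elapsed time can stand in for angle. This Python sketch assumes I turn at a roughly constant speed. Under that assumption, a reading’s timestamp maps linearly to an angle and to a column of the unwrapped cylinder image. The 180-degree sweep comes from the plan above. The function names are hypothetical.

```python
def _sweep_fraction(timestamp_s: float, sweep_duration_s: float) -> float:
    """How far through the sweep a reading was taken, clamped to [0, 1]."""
    if sweep_duration_s <= 0:
        raise ValueError("sweep duration must be positive")
    return min(max(timestamp_s / sweep_duration_s, 0.0), 1.0)


def timestamp_to_angle_deg(timestamp_s: float, sweep_duration_s: float,
                           sweep_angle_deg: float = 180.0) -> float:
    """Estimate which way I was facing, assuming a constant turning speed."""
    return _sweep_fraction(timestamp_s, sweep_duration_s) * sweep_angle_deg


def timestamp_to_column(timestamp_s: float, sweep_duration_s: float,
                        image_width_px: int) -> int:
    """Map a reading to a column of the unwrapped (flattened cylinder) image."""
    fraction = _sweep_fraction(timestamp_s, sweep_duration_s)
    return round(fraction * (image_width_px - 1))


# Halfway through a 4-second spin, I'm facing about 90 degrees.
print(timestamp_to_angle_deg(2.0, 4.0))
```

Any speed-up or slow-down during the spin shows up as horizontal stretching or squashing in the image. That is the imprecision a motor would remove.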

But won’t the sensors interfere with each other? No, because I’m going to do the math and make sure that doesn’t happen.
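One common way to stop neighboring sensors from hearing each other’s pings is to fire them one at a time. The next sensor waits until the previous sensor’s echo could no longer arrive. The post doesn’t say which math it will use, so treat this as one possible approach. The Python sketch below assumes a 4 m maximum range, which is typical of hobby modules but is an assumption here. It also assumes 343 m/s for the speed of sound and a hypothetical 1.5× safety margin for stray reflections.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at about 20 °C


def min_ping_gap_s(max_range_m: float, margin: float = 1.5) -> float:
    """Shortest wait between pings from different sensors.

    An echo from an object at max_range_m needs 2 * max_range_m / speed of sound
    to return; the (hypothetical) margin leaves extra time for reflections to fade.
    """
    round_trip_s = 2.0 * max_range_m / SPEED_OF_SOUND_M_PER_S
    return round_trip_s * margin


def firing_schedule(num_sensors: int, max_range_m: float,
                    margin: float = 1.5) -> list:
    """Start times (seconds) for firing each sensor once, one after another."""
    gap = min_ping_gap_s(max_range_m, margin)
    return [i * gap for i in range(num_sensors)]


def full_pass_s(num_sensors: int, max_range_m: float, margin: float = 1.5) -> float:
    """Time to read every sensor once; this limits how fast I can spin."""
    return num_sensors * min_ping_gap_s(max_range_m, margin)


print(firing_schedule(4, 4.0))
print(full_pass_s(10, 4.0))
```

The trade-off is speed. Under these assumptions, a 10-sensor pole needs about a third of a second per full pass, so spinning slowly gives more columns in the final image.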

Data Visualization

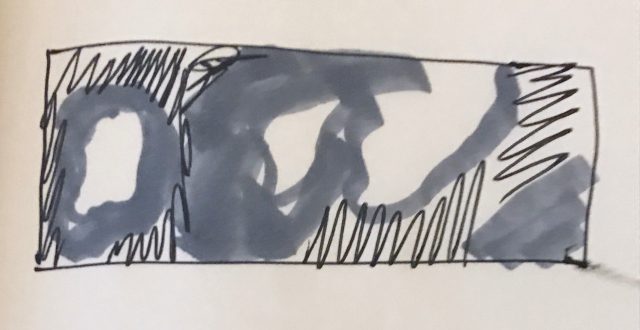

I’ll have all of this data about the room, but it will still be sparse because the sensors are spaced far apart. I’ll write Processing code to interpolate between the sensor values so I get a smooth depth map. It won’t be hyper-accurate, but it’ll give a rough sense of the space.
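The post doesn’t say which interpolation it will use, and bilinear interpolation is one simple option. This Python sketch is a port of the idea, not the planned Processing code. It assumes the readings are stored as a grid with one row per sensor (height) and one column per angle sample. It resamples that grid to a finer size by blending the four nearest readings.

```python
from typing import List

Grid = List[List[float]]


def upsample_bilinear(depths: Grid, out_rows: int, out_cols: int) -> Grid:
    """Resample a coarse depth grid (rows = sensors, columns = angles)
    to out_rows x out_cols using bilinear interpolation."""
    in_rows, in_cols = len(depths), len(depths[0])
    result = []
    for r in range(out_rows):
        # Position of this output row measured in input-row units.
        src_r = r * (in_rows - 1) / (out_rows - 1) if out_rows > 1 else 0.0
        r0 = int(src_r)
        r1 = min(r0 + 1, in_rows - 1)
        fr = src_r - r0  # how far we are between row r0 and row r1
        row = []
        for c in range(out_cols):
            src_c = c * (in_cols - 1) / (out_cols - 1) if out_cols > 1 else 0.0
            c0 = int(src_c)
            c1 = min(c0 + 1, in_cols - 1)
            fc = src_c - c0
            # Blend horizontally along the two neighboring rows, then vertically.
            top = depths[r0][c0] * (1 - fc) + depths[r0][c1] * fc
            bottom = depths[r1][c0] * (1 - fc) + depths[r1][c1] * fc
            row.append(top * (1 - fr) + bottom * fr)
        result.append(row)
    return result


coarse = [[1.0, 3.0],
          [5.0, 7.0]]
for row in upsample_bilinear(coarse, 3, 3):
    print(row)
```

Bilinear blending can’t recover detail between sensors. It only fills the gaps smoothly, which matches the goal of a rough rather than hyper-accurate map.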

Ultimately, I’d like to put the 180-degree photo in a Google Cardboard or some other viewer, so people can have a “physical” experience of the room created entirely with sound.