Portrait Plan:

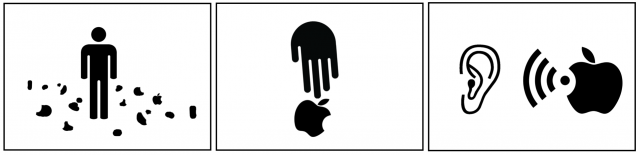

I will collect everything my subject (a) throws away over a period of time, along with voice recordings in which the subject shares whatever thoughts are on his mind at the moment he throws away each piece of trash.

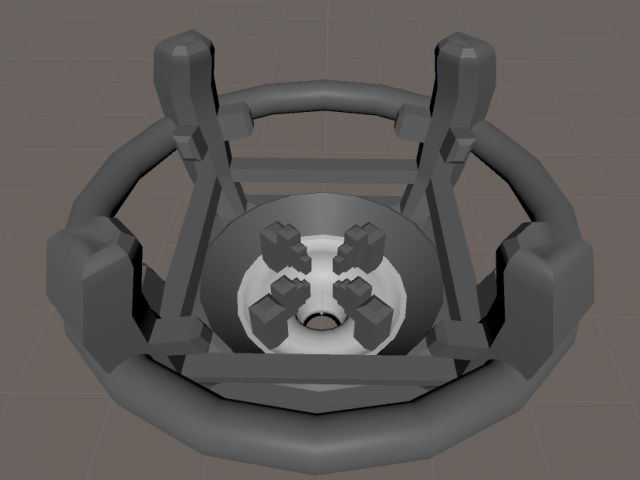

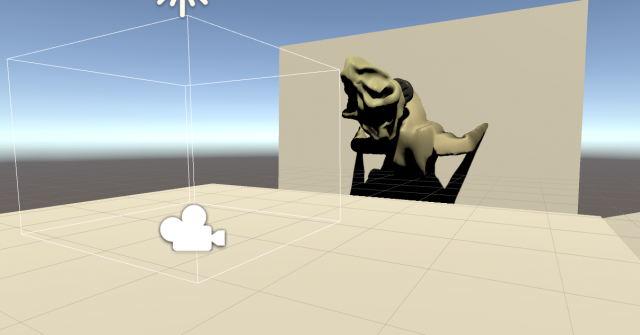

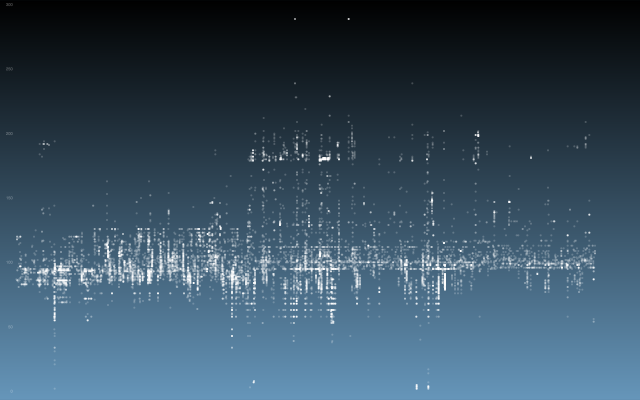

I will then make a photogrammetric scan of each piece of trash and place the models in a virtual 3D world where the user can wander around, pick them up, and listen to the corresponding voice recordings (diagram below).

The voice recordings can be as simple as “This is a half-eaten apple. I’m throwing it away because it tastes awful.” or “It’s Tuesday. I’m so happy.”, or any random thought that pops into the subject’s mind at that very moment.

I’m thinking of the trash as pieces of the subject’s life he left behind, and the voice as a frozen fragment of the subject’s ideas and values. Together they become a trail of clues that we can follow to catch a glimpse of the subject as a being.

I chose photogrammetry to record the trash because I feel that photogrammetry models have an intrinsically crappy, trash-like quality, which will probably work in the project’s favor.

I’ve been thinking about ways to make the virtual world immersive. The trash could be scattered across a vast landscape, or it could all float down an endless river that the user travels along by boat. I will probably build it in Unity.

I’m also thinking about a method to systematically process all the trash and recordings, so that everything can be done efficiently, assembly-line style, and new trash and recordings can easily be added to the collection.
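One possible starting point for the assembly line is a naming convention plus a small script that pairs each scanned model with its recording and writes a manifest the Unity scene could load. The sketch below is in Python and rests entirely on assumptions: a hypothetical ID such as `trash_0001` shared by `trash_0001.obj` (the exported scan) and `trash_0001.wav` (the recording) in a single intake folder, and a hypothetical `manifest.json` layout. It does not cover the scanning or recording steps themselves.

```python
import json
from pathlib import Path

# Hypothetical convention: each piece of trash gets an ID like "trash_0001";
# its exported photogrammetry model is trash_0001.obj and its voice
# recording is trash_0001.wav, both in the same intake folder.
MODEL_EXT = ".obj"
AUDIO_EXT = ".wav"


def build_manifest(intake_dir):
    """Pair each model with its recording by shared file stem.

    Returns (entries, unpaired): entries is a list of dicts sorted by ID;
    unpaired lists the IDs that are missing either a model or a recording.
    """
    intake = Path(intake_dir)
    models = {p.stem: p for p in intake.glob("*" + MODEL_EXT)}
    audio = {p.stem: p for p in intake.glob("*" + AUDIO_EXT)}

    entries = []
    # Only IDs that have both a model and a recording go into the world.
    for stem in sorted(models.keys() & audio.keys()):
        entries.append({
            "id": stem,
            "model": models[stem].name,
            "audio": audio[stem].name,
        })
    # Symmetric difference: IDs present on one side only.
    unpaired = sorted(models.keys() ^ audio.keys())
    return entries, unpaired


def write_manifest(intake_dir, out_name="manifest.json"):
    """Rebuild the manifest from scratch; rerun whenever new items arrive."""
    entries, unpaired = build_manifest(intake_dir)
    out_path = Path(intake_dir) / out_name
    # The list is wrapped in an object rather than written as a bare array.
    out_path.write_text(json.dumps({"items": entries}, indent=2))
    return out_path, unpaired
```

Adding a new piece of trash would then mean dropping its two files into the folder and rerunning `write_manifest`; the `unpaired` list flags items still waiting for a scan or a recording. The list is wrapped in an `"items"` object because Unity's `JsonUtility` does not parse a bare top-level JSON array. The Unity-side loading code is not shown here.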