Stereo video, in Slitscanning and Time/Space Remapped views

Slitscanning as a visual effect has always fascinated me. Applied to a standard video, slitscanning lets us view motion and stillness across time in a completely new way. Moving objects lose their true shape and instead take on a shape dictated by their movement in time. Artists have a long history of using this effect to create both still and moving works, but for my own explorations, I wanted to see the possibilities of slitscanning in stereo.

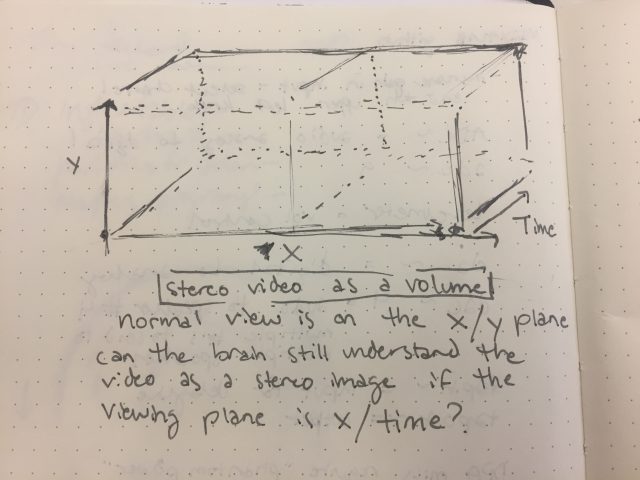

As an additional experiment, I processed the video not through a slitscanning effect, but through a time/space remapping effect. What happens when a video, taken as a volume, is viewed not along the XY plane (the normal viewing method), but along the plane represented by X and Time? This is a curious effect, but could it hold up in stereo video?

Stereo Slitscanning

Using the Sony Bloggie 3D camera, I captured a variety of shots. For Cdslls, I chose to isolate her in front of a black background, for simplicity’s sake. I first ran the video through Processing code that creates a traditional slitscanning effect. For the slitscanned video to hold up as a viewable stereo image, the slit needed to run along the X axis, so that the same pixel slices were taken from each side of the stereo video. I then brought this slitscanned video into After Effects, where I composited it with a regular stereo video. *1
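To make the "same slices from each side" point concrete, here is a minimal sketch in plain Python. It is not my Processing code. It assumes one common form of slitscanning (each row is delayed in time by its row index) and a side-by-side stereo frame stored as a list of rows. The names `slitscan_rows` and `split_stereo` are made up for this illustration.

```python
def slitscan_rows(frames, delay=1):
    """Row-wise slit scan (assumed variant): output row y at time t
    is copied from input frame t - y * delay, clamped to the first frame."""
    height = len(frames[0])
    out = []
    for t in range(len(frames)):
        frame = []
        for y in range(height):
            src = max(0, t - y * delay)  # no frames exist before frame 0
            # The whole row is copied, so the left-eye and right-eye halves
            # of a side-by-side frame always come from the same moment.
            frame.append(list(frames[src][y]))
        out.append(frame)
    return out


def split_stereo(frame):
    """Split a side-by-side stereo frame into (left, right) halves."""
    half = len(frame[0]) // 2
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right
```

Because the slit is a full-width row, the time offset depends only on Y, and both eyes stay in sync. A vertical slit would give each column a different offset, so matching points in the left and right halves would generally come from different moments.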

Time Remapping

With a process for regular slitscanning in stereo achieved, I began to wonder about the possibilities for stereo video processed in other ways. Video, taken as a volume, is traditionally viewed along the X/Y plane where TIME acts as the Z dimension; every frame of the video is a step back in the Z dimension. But do we have to view from the X/Y plane all the time? How does a video appear when viewed along a different plane in this volume? Here I explore a stereographic video volume as viewed from the TOP, that is, along the X/TIME plane. *2
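Viewing the volume "from the top" amounts to swapping the TIME and Y axes. The sketch below shows the idea in plain Python, not my Processing code, and assumes the clip is already loaded as a nested list indexed `frames[t][y][x]`. The function name `view_from_top` is hypothetical.

```python
def view_from_top(frames):
    """Swap the time and Y axes of a video volume.

    Input is indexed frames[t][y][x]; the result is indexed out[y][t][x].
    Each output frame is one X/TIME plane: row t of output frame y
    is row y of input frame t.
    """
    n_frames = len(frames)
    height = len(frames[0])
    return [
        [list(frames[t][y]) for t in range(n_frames)]
        for y in range(height)
    ]
```

The output has as many frames as the input had rows, and each output frame is as tall as the input was long in frames. The X axis is untouched, so the left and right halves of a side-by-side stereo frame stay aligned.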

Code

Processing code for both the slitscanning and time remapping processes, using a sample video from archive.org (Prelinger Archives)

Display

For display, I chose to present the video in the Google Cardboard VR viewer. Using an app called Mobile VR Station, the two halves of the images are distorted in accordance with the Google Cardboard lenses, and fused into a single 3D image. The videos are also viewable on a normal computer screen, but this requires the viewer to cross their eyes and fuse the two halves themselves, which can be unpleasant and disorienting.

Thoughts

- I chose to do this kind of post production on the first video for a couple of reasons. The output of the slitscanning, while pleasing in terms of movement, did not really create a 3D volume to my eyes in the way that the non-slitscanned video did. The two halves of the image would fuse, but it appeared almost as if the moving body was a 2D object moving inside the 3D space that the still elements of the video created. By compositing the two videos together, the slitscanning creates a nice layer of movement deep back in 3D space, while the non-slitscanned portion acts as an understandable 3D volume in the foreground. I also refrained from applying the slitscanning effect to the tight portrait shot of Cdslls because I was hesitant to distort her features. While slitscanning does create nice movements, it sometimes has an unfortunate “funhouse mirror” effect on faces, which can look quite monstrous. This didn’t at all fit my impression of Cdslls, so I left her likeness unaltered on this layer.

- The way that my time remapping code currently operates, there are jump cuts occurring every 450 frames, which is the height of the video. This is due to the way that I am remapping time to the Y dimension: each frame of the output video displays a single slice of the input video volume, so the top output row of pixels is the beginning of the clip and the bottom output row of pixels is the end of the clip. Once the “bottom” of the video volume is reached, it moves to the top of the next section of the video, thus creating the cut.
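The jump cut can be seen in the index arithmetic alone. This is a sketch of the mapping described above, not my actual code. It assumes each section of the input is exactly as many frames long as the video is tall (450), and the helper name `source_of` is hypothetical.

```python
def source_of(out_frame, out_row, height=450):
    """Map an output pixel row back to its source in the input video.

    Assumes the input is cut into sections of `height` frames each.
    Returns (input_frame, input_row).
    """
    # Which section we are in, and how far "down" the volume we have moved.
    section, input_row = divmod(out_frame, height)
    # Within a section, output rows run from its first frame to its last.
    input_frame = section * height + out_row
    return input_frame, input_row


# Top row of the last output frame in section 0 comes from input frame 0...
print(source_of(449, 0))  # (0, 449)
# ...and one output frame later it comes from input frame 450: the cut.
print(source_of(450, 0))  # (450, 0)
```

Between output frames 449 and 450, every row jumps forward by a full section of input frames at once, which is what reads as a cut.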

Moving Forward

One of the richest discoveries of these experiments has been seeing how moving and still elements of the input videos react differently to the slitscanning and time remapping processes. The “remapped time experiment 1” video shows this particularly well: still elements in the background were rendered as vertical lines, deep back in 3D space. This allowed a pleasing separation between these still elements and the motion of the figure, which formed a distinguishable 3D form in the foreground. I would thus like to continue to film in larger environments, especially outdoors, that contain interest in both the foreground and the background.

I would also like to further refine the display method for these videos. Moving forward, I’ll embed the stereo metadata into the video file so that anyone with Google Cardboard or a similar device will be able to view the videos directly on YouTube.