Underwater RGBD Capture with Kinect V2 and DepthKit

Can I capture a 3D image of myself exhaling and blowing bubbles?

For my event project, I explored a few possibilities for underwater 3D capture. After briefly considering sonar, structured light, and underwater photogrammetry, I settled on Scatter’s DepthKit software. DepthKit is built in openFrameworks and combines a depth sensor such as a Kinect (I used the Kinect V2, an infrared “time of flight” sensor) with a standard RGB digital camera (I used the Kinect’s built-in color camera) to produce an “RGBD” video, that is, a video that contains depth as well as color information.
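DepthKit performs this combination internally. As a rough illustration of what “combining depth and color” means geometrically, here is a minimal Python sketch (not DepthKit’s actual code) that back-projects each depth pixel into 3D with a pinhole camera model and attaches the matching color. It assumes the depth image is in metres with 0 marking missing data, that the color image has already been registered to the depth image, and that `fx`, `fy`, `cx`, `cy` are the depth camera’s intrinsics; the function name and the toy values are hypothetical.

```python
import numpy as np

def depth_to_colored_points(depth_m, rgb, fx, fy, cx, cy):
    """Back-project a depth image into a colored point cloud (pinhole model).

    depth_m: (H, W) depth in metres, 0 where the sensor returned nothing.
    rgb:     (H, W, 3) color image assumed to be registered to depth_m.
    fx, fy, cx, cy: hypothetical depth-camera intrinsics in pixels.
    """
    h, w = depth_m.shape
    # u = column index, v = row index, both shaped (H, W)
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth_m > 0                # skip pixels with no depth return
    z = depth_m[valid]
    x = (u[valid] - cx) * z / fx       # push each pixel out along its ray
    y = (v[valid] - cy) * z / fy
    points = np.stack([x, y, z], axis=1)   # (N, 3) positions in metres
    colors = rgb[valid]                    # (N, 3) color for each point
    return points, colors

# Toy 2x2 frame: only two pixels have depth.
depth = np.zeros((2, 2))
depth[0, 0] = 1.0
depth[1, 1] = 2.0
rgb = np.zeros((2, 2, 3), dtype=np.uint8)
rgb[1, 1] = [255, 0, 0]
pts, cols = depth_to_colored_points(depth, rgb, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(pts)
print(cols)
```

Every point is placed along its pixel’s viewing ray at the measured depth, so any error in the depth values or in the depth-to-color registration shows up directly in the resulting 3D image.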

In the past, James George and Alexander Porter produced “The Drowning of Echo” using an earlier version of DepthKit and the Kinect V1. They filmed from above the water, and though many of their shots are abstract, they were able to see slightly below the surface and also captured some interesting characteristics of the water surface itself. In some shots, it is as if the ripples of the water are transmitted onto the skin of the actress. Another DepthKit project I find satisfying is the Aaronetrope, which I appreciate chiefly for its interactivity: it displays several RGBD videos interactively on the web using three.js, a WebGL library. http://www.aaronkoblin.com/Aaronetrope/

Along the way, I encountered a few complications, chiefly due to the properties of water itself. Obviously, I would need a watertight box to house the Kinect. After much research into optics, I found that near-infrared light around 920 nm, the wavelength the Kinect uses, is strongly attenuated by water. This means that much of the signal the Kinect emits would simply be absorbed or scattered rather than returning to the sensor as expected.
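How quickly the signal fades can be estimated with the Beer-Lambert law, I/I0 = exp(-mu * path). For a time-of-flight sensor the light travels out to the subject and back, so the path is twice the distance. A minimal sketch, where `MU` is a hypothetical absorption coefficient chosen only for illustration (not a measured value for water at the Kinect’s wavelength), and scattering, target reflectivity and beam spread are ignored:

```python
import math

def round_trip_fraction(mu_per_m: float, distance_m: float) -> float:
    """Fraction of emitted light surviving absorption on the way out and back.

    Beer-Lambert: I / I0 = exp(-mu * path_length); for time of flight the
    path length is 2 * distance. Scattering and beam spread are ignored.
    """
    return math.exp(-2.0 * mu_per_m * distance_m)

# Hypothetical placeholder coefficient, in 1/m; not a measured value.
MU = 5.0
for d in (0.1, 0.25, 0.5, 1.0):
    print(f"{d:.2f} m -> {round_trip_fraction(MU, d):.3%} of the signal returns")
```

Because the loss is exponential in distance, doubling the distance to the subject squares the returned fraction, which is why the usable range collapses so quickly once the sensor is looking through water.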

Some of the papers that informed my decisions on this project are: “Underwater reconstruction using depth sensors”; “Absorption and attenuation of visible and near-infrared light in water: dependence on temperature and salinity”; and “Using a Time of Flight method for underwater 3-dimensional depth measurements and point cloud imaging”.

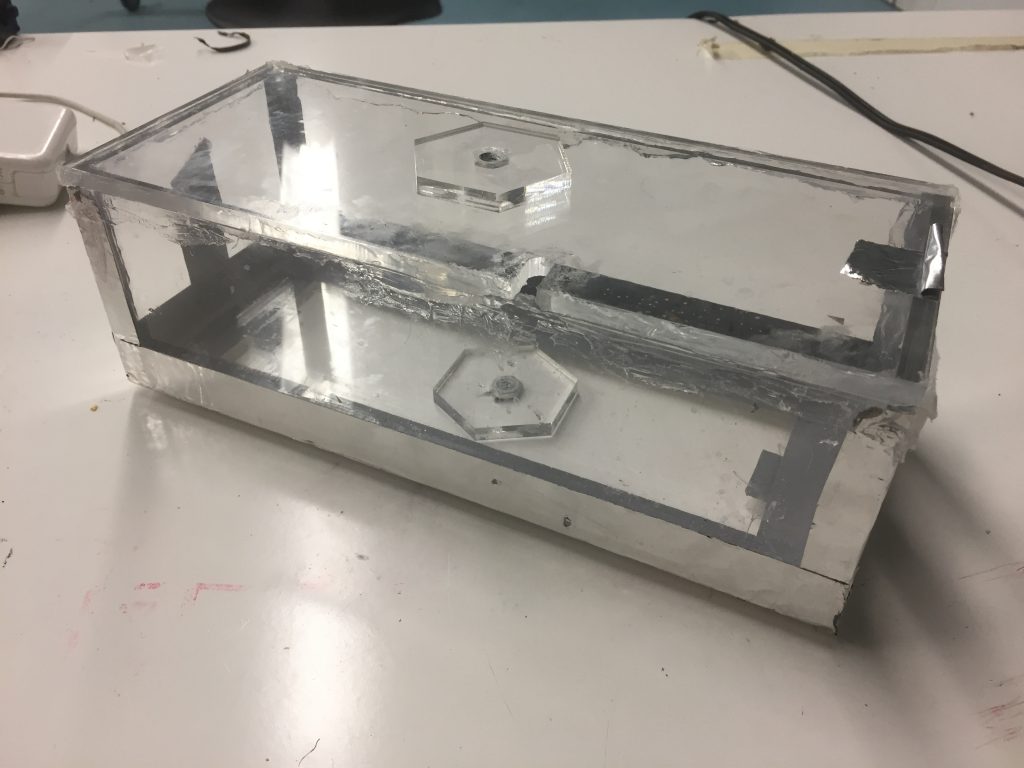

With the challenges of underwater optics in mind, I constructed an IR-transparent watertight housing from 1/4 in cast acrylic. This material is exceptionally transparent and did not noticeably reflect or attenuate either the IR signal or the color camera feed. I also attached a 1/4-20 threaded bolt to the exterior of the box to make it compatible with a magic arm.

I carried out initial testing right in my bathtub, covering three scenarios: the Kinect above the water capturing the water’s surface; the Kinect above the water trying to capture detail beneath the surface; and the Kinect underwater capturing a submerged scene.

It was immediately clear that the Kinect could not see through the surface of the water, so to capture depth information below the water I definitely needed to submerge it. To my surprise, when I first placed the housing underwater, it (sort of) worked! The IR was indeed absorbed to a large degree, and the area in which I could capture both video and depth data together proved to be very small. Even so, an RGBD capture emerged.
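One way to put a number on how small that usable area is: count the pixels that carry both a plausible depth reading and a non-black color value. A minimal sketch, assuming the color frame has already been registered to the depth frame. The 0.5 to 4.5 m window follows the commonly quoted Kinect V2 operating range, the darkness threshold is an arbitrary placeholder, and the function and parameter names are hypothetical.

```python
import numpy as np

def usable_fraction(depth_m, rgb, min_depth_m=0.5, max_depth_m=4.5, dark_threshold=10):
    """Fraction of pixels with both an in-range depth and a visible color.

    depth_m: (H, W) depth in metres (0 = no return).
    rgb:     (H, W, 3) uint8 color frame, assumed registered to depth_m.
    All thresholds are illustrative assumptions, not DepthKit settings.
    """
    depth_ok = (depth_m >= min_depth_m) & (depth_m <= max_depth_m)
    color_ok = rgb.max(axis=2) > dark_threshold   # brightest channel above threshold
    both = depth_ok & color_ok
    return float(both.mean())                     # mean of a boolean mask = fraction True

# Toy frame: one missing depth, one out-of-range depth, two usable pixels.
depth = np.array([[0.0, 1.0], [1.0, 6.0]])
rgb = np.full((2, 2, 3), 100, dtype=np.uint8)
print(usable_fraction(depth, rgb))
```

Logging this fraction per frame would give a simple way to compare housing positions or distances, rather than judging coverage by eye.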

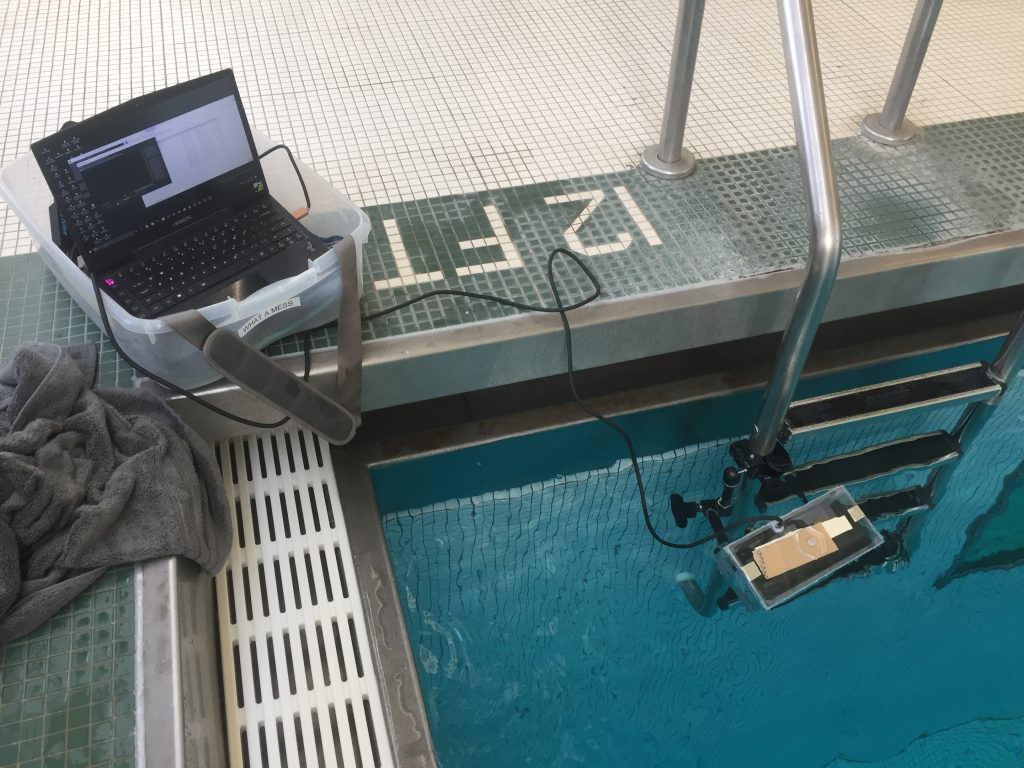

Into the Pool

The CMU pool is available for project testing by special appointment, so I took the plunge! The results were basically what I expected: the captures are more low-relief than full RGBD, and the depth data itself is quite noisy. I also discovered that the difference in how light refracts in water compared with air throws the calibration of the depth and RGB images way out of whack. Manual recalibration was necessary, and even then the two images were difficult to align. That said, I did make some great discoveries at this stage. Bubbles are visible! I was able to capture air exiting my mouth, as well as bubbles created by me splashing around.
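This is not DepthKit’s own calibration procedure, but as a hedged sketch of what a manual recalibration can boil down to, the functions below fit a 2D affine map from depth-image pixels to color-image pixels by least squares, using point pairs picked by hand on features visible in both views (for example, the corners of a pool tile). The function names are hypothetical, and treating the misalignment as affine is an assumption.

```python
import numpy as np

def fit_affine(depth_pts, rgb_pts):
    """Least-squares 2D affine map from depth pixels to RGB pixels.

    depth_pts, rgb_pts: (N, 2) matching (x, y) pixel pairs, N >= 3,
    picked by hand on features visible in both images.
    Returns a 2x3 matrix A such that rgb ~= A @ [x, y, 1].
    """
    depth_pts = np.asarray(depth_pts, dtype=float)
    rgb_pts = np.asarray(rgb_pts, dtype=float)
    X = np.hstack([depth_pts, np.ones((len(depth_pts), 1))])  # (N, 3) homogeneous
    # Solve X @ A.T ~= rgb_pts for A.T, which has shape (3, 2).
    A_T, *_ = np.linalg.lstsq(X, rgb_pts, rcond=None)
    return A_T.T

def apply_affine(A, pts):
    """Map (N, 2) depth-image pixels into the RGB image with a 2x3 affine A."""
    pts = np.asarray(pts, dtype=float)
    return pts @ A[:, :2].T + A[:, 2]

# Synthetic check: recover a known scale-and-shift misalignment.
true_A = np.array([[1.33, 0.0, 10.0], [0.0, 1.33, -5.0]])
depth_pts = [[0, 0], [100, 0], [0, 100], [50, 50]]
rgb_pts = apply_affine(true_A, depth_pts)
A = fit_affine(depth_pts, rgb_pts)
print(np.round(A, 3))
```

An affine map cannot model the depth-dependent parallax between the two lenses or any non-linear distortion introduced by looking through a flat acrylic window into water, so it only approximates the alignment over a limited range of depths.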

Lastly, here is an example of where the capture totally went wrong, but the result is still a bit cinematic:

Anonymous feedback from group crit, 4/13/2017:

Underwater Kinect using V2: sank a homemade watertight box into the pool. Great experimentation. Found out what was necessary to achieve your desired results. Body in water makes it feel much closer to animation. Really beautiful

This is a highly well-researched, well-executed, well-explained project. The aesthetics are terrific. However, I wish the results were more conceptually cohesive than just “Yep, here’s me, swimming underwater”. In my opinion, the most compelling story would be if you said something like, “So, I wanted to CAPTURE A BUBBLE.” Even if doing so involved a bit of backforming the story of your project, a sharp focus on a magical goal like that would transform this from a great study into an incredible one.

Great documentation

^ Good presentation of context surrounding underwater depth capture

Great explanation on the technology +

It’s very sensual ++

This is incredible. I have absolutely no negative comments to make: great presentation, great documentation, beautiful final

very visually beautiful

These things are in 3d right? Can we see some movement around the 3d objects? +

As a fellow fish I really appreciate this project – I’ve always wanted to film underwater

^relatable content

I wonder what famous film scenes that use underwater shots would look like with depth

Give me Titanic with DepthKit +

It would be cool if you included some rotating camera shots to show that it is in 3d +