I’m working with hizlik on this portrait project. Since we are both photographers, we decided to share a single process that records our photography style over time, split into two visualizations: lighting preferences and subject preferences. Photographers' styles evolve over time, and we wanted to see how ours have changed.

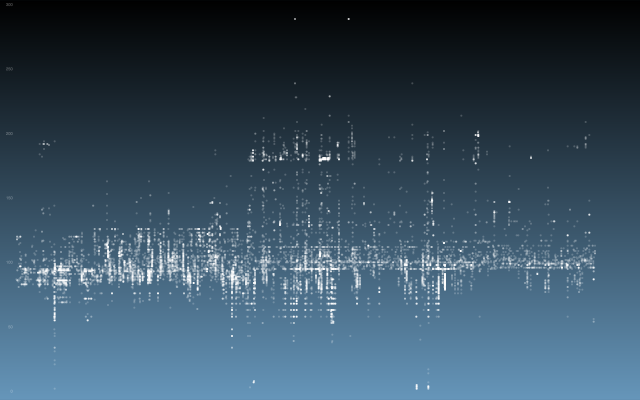

For the lighting portrait, we grabbed the EXIF data for every photo we’ve taken, combined each photo's ISO, shutter speed, and aperture into a single value, and plotted that value against the photo's timestamp on a chart over time. The image above shows an example.
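As a rough sketch of how the three settings could be folded into one number: the text above doesn't pin down the formula, so this assumes the standard ISO-100-normalized exposure value, where a higher number means a brighter scene. The `Exposure` record and its field names are made up for illustration and are not EXIF tag names.

```python
import math
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Exposure:
    # Hypothetical record; fill it from whatever EXIF reader you use.
    taken_at: datetime
    iso: float
    shutter_s: float  # exposure time in seconds, e.g. 1/250
    f_number: float   # aperture as an f-number, e.g. 2.8


def light_value(e: Exposure) -> float:
    """ISO-100-normalized exposure value (assumed aggregate, see lead-in)."""
    return math.log2(e.f_number ** 2 / e.shutter_s) - math.log2(e.iso / 100)


def time_series(photos):
    """Return (timestamp, light value) pairs in chronological order."""
    ordered = sorted(photos, key=lambda p: p.taken_at)
    return [(p.taken_at, light_value(p)) for p in ordered]
```

The pairs returned by `time_series` can go straight into any plotting tool, with the timestamp on the x-axis and the light value on the y-axis.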

The subject (categorical) portrait will require us to run all of our photographs through Google Cloud Vision, a computer vision API that produces keywords from photos. We will use these keywords to get a general sense of what we took pictures of.
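Once the keywords are back from the API, turning them into subject preferences is mostly counting. Here is a minimal sketch, assuming the keywords have already been fetched and stored as `(year, keywords)` pairs. That input shape and the function name `top_keywords_by_year` are our own placeholders, not anything the API provides.

```python
from collections import Counter, defaultdict


def top_keywords_by_year(photos, n=3):
    """photos: iterable of (year, keywords) pairs.

    Returns {year: [(keyword, count), ...]} with the n most common
    keywords for each year, years in ascending order.
    """
    counts = defaultdict(Counter)
    for year, keywords in photos:
        # Lowercase so "Tree" and "tree" count as the same subject.
        counts[year].update(k.lower() for k in keywords)
    return {year: c.most_common(n) for year, c in sorted(counts.items())}
```

Grouping by year is only one choice; a finer bucket such as month would work the same way if there are enough photos per bucket.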