Shawn Sims - Realtime Audio + Reactive Metaball - Project 2

I knew when this project began that I was interested in collecting real-time data. I quickly became interested in live audio and all the ways one can collect information from an audio stream or input. I began by piecing together a fairly simple pitch detector with the help of Dan (thanks, Dan!) and from there passed that information along to Processing in an OSC packet. This saved me from having to code some fairly intense algorithms in Processing; instead I simply send over a single value. At that point I began to investigate FFT and the Minim library for Processing. Intense stuff if you aren’t a sound guy.
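An OSC packet like the one the Pd patch sends is just bytes on a socket: an address string, a type-tag string, then the arguments. Each string is null-terminated and padded to a multiple of four bytes, and numbers are stored big-endian. The plain-Java sketch below encodes such a message by hand, assuming a single int32 argument (the `"i"` type tag the Processing code checks for) and the address `/processing/sigmund1` taken from that code. `OscEncodeSketch` and `encodeIntMessage` are hypothetical names, not part of oscP5 or Pure Data; in practice oscP5 does this parsing for you.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class OscEncodeSketch {

    // Append an OSC-string: ASCII bytes, at least one null terminator,
    // then more nulls until the total length is a multiple of 4.
    static void writeOscString(ByteArrayOutputStream out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        out.write(bytes, 0, bytes.length);
        int pad = 4 - (bytes.length % 4); // 1 to 4 null bytes
        for (int i = 0; i < pad; i++) {
            out.write(0);
        }
    }

    // Encode a message with one int32 argument: address, ",i" type tag, value.
    static byte[] encodeIntMessage(String address, int value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeOscString(out, address);
        writeOscString(out, ",i");
        // ByteBuffer is big-endian by default, which is what OSC expects
        byte[] arg = ByteBuffer.allocate(4).putInt(value).array();
        out.write(arg, 0, arg.length);
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] packet = encodeIntMessage("/processing/sigmund1", 440);
        System.out.println(packet.length); // 32
    }
}
```

The 20-character address pads to 24 bytes, `",i"` pads to 4, and the int adds 4 more, giving 32 bytes. Sending it would then mean putting those bytes in a UDP datagram aimed at port 3000, the `OSC_port` the sketch listens on.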

The video below shows the reactive metaballs in real time. Because of the screen-capture software it lags a bit; it runs much more smoothly in a live demo.

The data extracted from the audio gives us pitch, average frequency (over a specified time window), beat detection, and the fast Fourier transform (FFT) spectrum. Together these elements can provide unique inputs to generate and control visuals live in response to the audio. I used pitch, average frequency, and FFT to control the variables that govern metaball behavior in 2D.
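To make "FFT averages" concrete: the spectrum is a list of frequency bins, and an average collapses a run of neighbouring bins into one number per band. The sketch below is plain Java and assumes equal-width (linear) bands; it uses a naive O(N²) DFT for brevity where a real library would use an FFT. The krister.Ess library used in the code below may scale or group bins differently in its own `averages()`, and the class and method names here are hypothetical.

```java
public class FftAveragesSketch {

    // Magnitude spectrum via a naive DFT (O(n^2), for illustration only).
    // Returns n / 2 bins covering 0 Hz up to just below Nyquist.
    static double[] magnitudeSpectrum(double[] samples) {
        int n = samples.length;
        double[] mags = new double[n / 2];
        for (int k = 0; k < n / 2; k++) {
            double re = 0, im = 0;
            for (int t = 0; t < n; t++) {
                double angle = 2 * Math.PI * k * t / n;
                re += samples[t] * Math.cos(angle);
                im -= samples[t] * Math.sin(angle);
            }
            mags[k] = Math.sqrt(re * re + im * im) / n;
        }
        return mags;
    }

    // Collapse the bins into numAverages equal-width bands (linear averages).
    static double[] linearAverages(double[] mags, int numAverages) {
        int binsPerBand = mags.length / numAverages;
        double[] avgs = new double[numAverages];
        for (int b = 0; b < numAverages; b++) {
            double sum = 0;
            for (int k = b * binsPerBand; k < (b + 1) * binsPerBand; k++) {
                sum += mags[k];
            }
            avgs[b] = sum / binsPerBand;
        }
        return avgs;
    }

    public static void main(String[] args) {
        int n = 512;
        double[] samples = new double[n];
        for (int t = 0; t < n; t++) {
            samples[t] = Math.sin(2 * Math.PI * 40 * t / n); // all energy in bin 40
        }
        double[] avgs = linearAverages(magnitudeSpectrum(samples), 32);
        int loudest = 0;
        for (int b = 1; b < avgs.length; b++) {
            if (avgs[b] > avgs[loudest]) {
                loudest = b;
            }
        }
        // 256 bins / 32 bands = 8 bins per band, so bin 40 falls in band 5
        System.out.println(loudest); // 5
    }
}
```

Using 32 bands mirrors `numAverages = 32` in the sketch; the trade-off is that each band then blends several neighbouring frequencies into a single control value.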

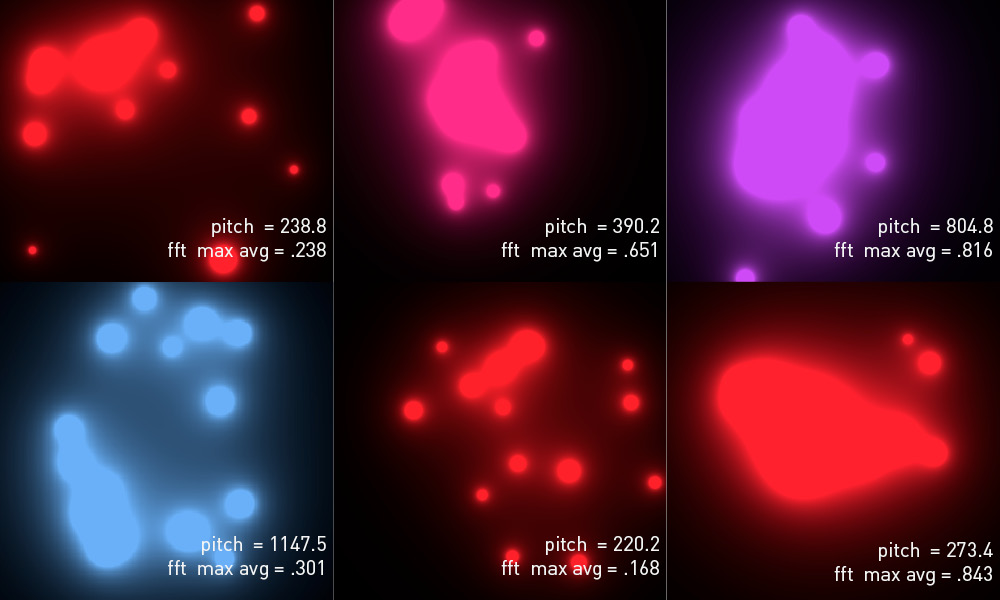

The specific data used is pitch and the FFT’s maximum average. The pitch produces the color spectrum of the metaballs, and the FFT maximum average changes the physics of the system. When the FFT max average is high, the metaball system tends to move closer to the center of the image.
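Processing’s `map()` is a linear remap: value → start2 + (stop2 − start2) · (value − start1) / (stop1 − start1). In the code below, pitch is not turned into a color directly. Instead the mapped values become the maximum ranges passed to `colorMode(HSB, ...)`, so the fixed `color(360, 100, ...)` is reinterpreted as the pitch changes. The Java sketch below reproduces that hue mapping with the same ranges (0 to 1000 Hz onto a hue range of 350 to 500). It assumes Processing clamps a component that exceeds the colorMode maximum, and `PitchMappingSketch` and `hueFraction` are hypothetical names.

```java
public class PitchMappingSketch {

    // Linear remap using the same formula as Processing's map().
    static float map(float value, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // Fraction of the hue circle that color(360, ...) lands on once
    // colorMode(HSB, hueRange, ...) is set. Assumes a hue above the
    // mode's maximum is clamped to that maximum.
    static float hueFraction(float pitchHz) {
        float hueRange = map(pitchHz, 0, 1000, 350, 500);
        return Math.min(360f, hueRange) / hueRange;
    }

    public static void main(String[] args) {
        System.out.println(map(500, 0, 1000, 350, 500)); // 425.0
        System.out.println(hueFraction(0));    // 1.0 (hue clamped to the range)
        System.out.println(hueFraction(1000)); // 0.72
    }
}
```

Because the hue range grows with pitch, higher notes push the same nominal hue further around the color wheel. `map()` does not clamp, so pitches above 1000 Hz keep extending the range.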

There are a few lessons I learned from working with live audio data: 1. it’s super tough, and 2. I need to brush up on my physics. There are a dozen variations of each method of frequency detection, and each will give you different results. I do not possess the knowledge to figure out exactly what those differences are, so I left it at that. By the end of Project 2 I had gained an understanding of how difficult dealing with live audio is.
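As one example of how much the method matters, here is an approach much simpler than Pure Data’s sigmund~ object: time-domain autocorrelation. It picks the lag at which the signal best matches a shifted copy of itself and reports sampleRate / lag. This is a comparison sketch, not what the patch uses; the 50 to 2000 Hz search range and the names are assumptions. On real instruments and full mixes it is prone to octave errors, one reason so many detector variants exist.

```java
public class AutocorrelationPitchSketch {

    // Estimate the fundamental of a mono buffer: try every lag whose
    // frequency lies in [minHz, maxHz] and keep the lag with the largest
    // (unnormalized) autocorrelation sum.
    static double detectPitch(double[] buf, double sampleRate, double minHz, double maxHz) {
        int minLag = (int) Math.floor(sampleRate / maxHz);
        int maxLag = Math.min(buf.length - 1, (int) Math.ceil(sampleRate / minHz));
        int bestLag = minLag;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int lag = minLag; lag <= maxLag; lag++) {
            double score = 0;
            for (int n = 0; n + lag < buf.length; n++) {
                score += buf[n] * buf[n + lag];
            }
            if (score > bestScore) {
                bestScore = score;
                bestLag = lag;
            }
        }
        return sampleRate / bestLag;
    }

    public static void main(String[] args) {
        double sampleRate = 44100;
        double[] buf = new double[2048];
        for (int n = 0; n < buf.length; n++) {
            // 441 Hz at 44.1 kHz repeats exactly every 100 samples
            buf[n] = Math.sin(2 * Math.PI * 441 * n / sampleRate);
        }
        System.out.println(detectPitch(buf, sampleRate, 50, 2000));
    }
}
```

For this clean test tone the strongest lag is at or next to 100 samples, so the estimate lands at or very near 441 Hz. The result is quantized to whole-sample lags, which is one of the details that separates the many variants.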

The code uses the built-in pitch detection of the sigmund~ object in Pure Data. The OSC patch provides a bridge to Processing, where the OpenGL, netP5, oscP5, and krister.Ess libraries are used. The metaball behavior is controlled by a few variables that determine movement along vectors, attractor strength, repel strength, and the tendency to float toward a specified point. Below is the source code.

```
// shawn sims
// metaball+sound v1.1
// IACD Spring 2011

/////////////// libraries ///////////////////
//import ddf.minim.*;        // load the Minim library
import processing.opengl.*;  // load the OpenGL library
import netP5.*;              // load the network library
import oscP5.*;              // load the OSC library
import krister.Ess.*;        // load the FFT library

////// metaball variable declaration ////////
int metaCount = 8;
PVector[] metaPosition = new PVector[metaCount];
PVector[] metaVelocity = new PVector[metaCount];
float[] metaRadius = new float[metaCount];
float constant = 0.0;
int minRadius = 65;
int maxRadius = 200;
final float metaFriction = .999;
float metaRepel;
float maxVelocity = 15;
float metaAttract = .08;
PVector standard = new PVector();
PVector regMove = new PVector();
PVector centreMove = new PVector();
PVector repel = new PVector();
float metaDistance;
PVector centre = new PVector(0, 0);
float hue1;
float sat1;
float bright1;

//////// sound variable declaration /////////
FFT myFFT;
//Minim minim;
AudioInput myInput;
OscP5 oscP5;
int OSC_port = 3000;
float pitchValue = 0;
float pitchConstrain = 0;
int bufferSize;
int steps;
float limitDiff;
int numAverages = 32;
float myDamp = .1f;
float maxLimit, minLimit;
float interpFFT;

void setup() {
  size(500, 500, P2D);
  Ess.start(this);
  bufferSize = 512;
  myInput = new AudioInput(bufferSize);

  // set up our FFT
  myFFT = new FFT(bufferSize * 2);
  myFFT.equalizer(true);

  // set up our FFT normalization/dampening
  minLimit = .005;
  maxLimit = .05;
  myFFT.limits(minLimit, maxLimit);
  myFFT.damp(myDamp);
  myFFT.averages(numAverages);

  // get the number of bins per average
  steps = bufferSize / numAverages;

  // get the distance of travel between minimum and maximum limits
  limitDiff = maxLimit - minLimit;

  myInput.start();

  // arguments: parent sketch, port to listen on
  oscP5 = new OscP5(this, OSC_port);

  for (int i = 0; i < metaCount; i++) {
    metaPosition[i] = new PVector(random(0, width), random(0, height));
    metaVelocity[i] = new PVector(random(-1, 1), random(-1, 1));
    metaRadius[i] = random(minRadius, maxRadius);
  }
  frameRate(2000);
}

void draw() {
  //println(pitchValue);
  // pitch sets the HSB ranges, which shifts the final pixel colors
  hue1 = map(pitchValue, 0, 1000, 350, 500);
  sat1 = map(pitchValue, 0, 1000, 85, 125);
  bright1 = map(pitchValue, 0, 1900, 400, 500);
  colorMode(HSB, hue1, sat1, bright1);

  // read our FFT averages
  for (int i = 0; i < numAverages; i++) {
    fill(255);
    interpFFT = myFFT.maxAverages[i];
    //println(interpFFT);
    float mapVelocity = map(interpFFT, 0, 1, 200, 1000);
    // after the loop metaRepel holds the last band's value;
    // the physics below does not read it yet
    metaRepel = mapVelocity;
  }

  // find the centre of mass; apply friction and the speed limit
  centre.set(0, 0, 0);
  for (int i = 0; i < metaCount; i++) {
    centre.add(metaPosition[i]);
    metaVelocity[i].mult(metaFriction);
    metaVelocity[i].limit(maxVelocity);
  }
  centre.div(metaCount);

  // pull each ball toward the centre, move it, and bounce off the edges
  for (int i = 0; i < metaCount; i++) {
    centreMove = PVector.sub(centre, metaPosition[i]);
    centreMove.normalize();
    centreMove.mult(metaAttract);
    metaVelocity[i].add(centreMove);
    metaPosition[i].add(metaVelocity[i]);

    if (metaPosition[i].x > width) {
      metaPosition[i].x = width;
      metaVelocity[i].x *= -1.0;
    }
    if (metaPosition[i].x < 0) {
      metaPosition[i].x = 0;
      metaVelocity[i].x *= -1.0;
    }
    if (metaPosition[i].y > height) {
      metaPosition[i].y = height;
      metaVelocity[i].y *= -1.0;
    }
    if (metaPosition[i].y < 0) {
      metaPosition[i].y = 0;
      metaVelocity[i].y *= -1.0;
    }
  }

  background(0);

  // evaluate the metaball field (sum of radius / distance) at every pixel
  for (int i = 0; i < width; i++) {
    for (int j = 0; j < height; j++) {
      constant = 0;
      for (int k = 0; k < metaCount; k++) {
        constant += metaRadius[k] / sqrt(sq(i - metaPosition[k].x) + sq(j - metaPosition[k].y));
      }
      set(i, j, color(360, 100, (constant * constant * constant) / 3));
    }
  }
}

// Ess calls this when a new input buffer is ready
public void audioInputData(AudioInput theInput) {
  myFFT.getSpectrum(myInput);
}

// incoming OSC messages are forwarded to the oscEvent method
void oscEvent(OscMessage theOscMessage) {
  // do something when I receive a message to "/processing/sigmund1"
  if (theOscMessage.checkAddrPattern("/processing/sigmund1") == true) {
    if (theOscMessage.checkTypetag("i")) {
      // store the incoming pitch value
      pitchValue = theOscMessage.get(0).intValue();
    }
  }
}
```
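To make the physics in `draw()` easier to follow, here is the same update pulled out into plain Java with no Processing dependency. It is a sketch that mirrors the loop above (friction, speed limit, a constant-size pull toward the centroid, wall bounce) plus the radius / distance field sum evaluated for each pixel. The constants copy `metaFriction`, `maxVelocity`, and `metaAttract`; `MetaballStepSketch`, `step`, and `field` are hypothetical names. Like the loop above, it applies no separate repel force.

```java
public class MetaballStepSketch {

    static final float FRICTION = 0.999f;  // metaFriction
    static final float MAX_VELOCITY = 15f; // maxVelocity
    static final float ATTRACT = 0.08f;    // metaAttract

    // One update in the same order as draw(): friction and speed limit,
    // a fixed-size pull toward the centroid, then move and bounce off walls.
    static void step(float[] px, float[] py, float[] vx, float[] vy, float width, float height) {
        int count = px.length;
        float cx = 0, cy = 0;
        for (int i = 0; i < count; i++) {
            cx += px[i];
            cy += py[i];
            vx[i] *= FRICTION;
            vy[i] *= FRICTION;
            float speed = (float) Math.sqrt(vx[i] * vx[i] + vy[i] * vy[i]);
            if (speed > MAX_VELOCITY) { // rescale to the limit, like PVector.limit()
                vx[i] *= MAX_VELOCITY / speed;
                vy[i] *= MAX_VELOCITY / speed;
            }
        }
        cx /= count;
        cy /= count;
        for (int i = 0; i < count; i++) {
            float dx = cx - px[i];
            float dy = cy - py[i];
            float dist = (float) Math.sqrt(dx * dx + dy * dy);
            if (dist > 0) { // no pull for a ball sitting exactly on the centroid
                vx[i] += dx / dist * ATTRACT;
                vy[i] += dy / dist * ATTRACT;
            }
            px[i] += vx[i];
            py[i] += vy[i];
            if (px[i] > width)  { px[i] = width;  vx[i] = -vx[i]; }
            if (px[i] < 0)      { px[i] = 0;      vx[i] = -vx[i]; }
            if (py[i] > height) { py[i] = height; vy[i] = -vy[i]; }
            if (py[i] < 0)      { py[i] = 0;      vy[i] = -vy[i]; }
        }
    }

    // Field value at (x, y): sum of radius / distance over all balls.
    // As in the original, this is infinite exactly at a ball's centre.
    static float field(float x, float y, float[] px, float[] py, float[] radius) {
        float sum = 0;
        for (int k = 0; k < px.length; k++) {
            float dx = x - px[k];
            float dy = y - py[k];
            sum += radius[k] / (float) Math.sqrt(dx * dx + dy * dy);
        }
        return sum;
    }

    public static void main(String[] args) {
        float[] px = {100f, 400f};
        float[] py = {250f, 250f};
        float[] vx = {0f, 0f};
        float[] vy = {0f, 0f};
        float[] radius = {100f, 100f};
        for (int frame = 0; frame < 10; frame++) {
            step(px, py, vx, vy, 500f, 500f);
        }
        System.out.println(px[0] + ", " + px[1]);
        System.out.println(field(250f, 250f, px, py, radius));
    }
}
```

Because the centroid pull has a fixed magnitude, every ball accelerates toward the group at the same rate regardless of distance. That helps explain the slow, lava-lamp drift several commenters noticed.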

Comments from PiratePad A:

Soundflower for internal audio routing, so you don’t need a microphone

averaging for values

More interesting when you play with it using your voice instead of music

Definitely interesting. Very cool. I think it would be better if you could plot something over time. For example, once a few pieces of music have been plotted, it would be interesting to see how they differ across genres and so on.

What I’ve learned about music visualizers—people will think anything is visualizing sound as long as it’s moving fast enough. You could make the real-time aspect more convincing by making it move faster. Cheap, but effective.

The music is very fast but that is not visible in the lava lamp.

What does the color mean?

How is the movement affected by the music?

The “blur” provides an interesting visual result.

Lava lamp! (I agree) Me too!

SUPER COOL. Nice whistling. If you were to display the pitch on the screen so that we could see how it impacts the metaballs, it would be easier to understand the relation between the metaball size and the pitch quality.

I immediately “get” the visualization, even though I don’t actually understand what’s mapping to what. I notice the color immediately, and because I see that as working well, I search for other parts of the visualization to match up a little more explicitly. Perhaps represent beat, volume, etc. Also, would creating a visualization of the song over time be interesting? I don’t know.

I like the idea, and the metaballs look good. I wish they moved faster. The Kanye track visualized better. “Runaway” from his new album would have been good for that too, because it has these discrete monotone beeps for a long time in the intro.

Pretty looking, which is always nice. I would love to see a more direct link to the physics, as other people have said, but it’s somewhat detached, which also gives it an interesting feel. Internal audio would be nice, and probably more efficient than going through the mic.

Interesting use of physics. It’s your own Winamp-style visualizer.

Originally I thought the color change looked like it followed the tempo, so that’s an interesting side effect of what you did.

The movement and color are nice, but I had a hard time seeing how it related back to the music, or was affected by the sound input. Is there a way to make the response more drastic? Overall a nice exploration.

Nice looking, but it’s hard to see at times how the audio affects the visualization.

Works well as an ambient visualization.

Comments from PiratePad B:

The music sounds really cool, but a simpler track might have illustrated the physics more clearly, especially one with more variation in pitch.

The metaball visualization is nice with the glowing edge. Which parameters map to each ‘physical’ phenomenon?

The Kanye West track shows off your demo a little better than the Daft Punk one… Agreed. Agreed.

Looks great. Could possibly benefit from a stronger correlation between the audio properties and the metaball behavior. Agreed.

I would have liked to be able to compare the different instruments used, but pretty cool nonetheless. You set yourself a high bar, I think, as music visualization is something we have so much experience with.

This project is very successful ‘technically’ — in terms of your success in connecting data from PD over OSC to P5 in order to control metaballs. But aesthetically, or design-wise, I think this lava lamp is not succeeding. The application of a pitch detector to techno-music is a poor choice that reveals either a poor understanding of the nature of musical sound (i.e. there is no one isolatable pitch) or a poor selection of inputs for your demo.

Check out http://roberthodgin.com/solar/ . He does a pretty good job of picking out each layer of the music and representing it visually. Also, the separation/attraction seems pseudo-random; it’s hard to tell what it’s reacting to.

Shawn,

Please use the instructions here to format your code better:

http://golancourses.net/2011spring/instructions/code-embedding/

Thanks,

Golan