P3 final blog post

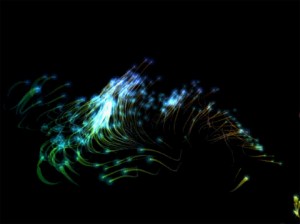

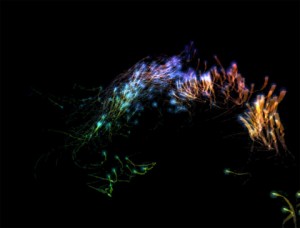

So, Alex & I decided to create a flocking effect with the Kinect, inspired by Robert Hodgin’s previous work in this area.

For this project, I handled the lower-level technical side: getting the Kinect up and running and working out how to get the user data from the API. We spent a fair amount of time figuring out how to get the Kinect working on a PC with a library that would give us both depth and RGB data for the detected user. After trying a Processing library that ultimately did not work, we opted for OpenNI and NITE. Since we both had PC laptops, this was arguably our best bet. Getting the project to work under Visual Basic was a hurdle, since the OpenNI library was poorly documented, especially when it came to getting it up and running in VB.
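The "user data" we were after comes down to two images that line up pixel for pixel: a depth map and a user label map. Below is a minimal Python sketch of pulling one user's pixels out of them. It is not the wrapper class we actually wrote, and it assumes both buffers arrive as flat, row-major lists of the same size, that a label of 0 means background, and that a depth of 0 means no reading. `UserPoint`, `extract_user_points`, and the `step` parameter are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UserPoint:
    x: int         # column in the depth image
    y: int         # row in the depth image
    depth_mm: int  # distance from the sensor (assumed to be millimetres)


def extract_user_points(depth: List[int], labels: List[int], width: int,
                        user_id: int, step: int = 1) -> List[UserPoint]:
    """Return the pixels that belong to one tracked user.

    depth and labels are assumed to be flat, row-major buffers of the same
    size: labels[i] is the ID of the user at pixel i (0 = background) and
    depth[i] is that pixel's depth (0 = no reading). step subsamples the
    image so the particle system has fewer targets to chase.
    """
    if len(depth) != len(labels):
        raise ValueError("depth and label buffers must be the same size")
    if width <= 0 or len(depth) % width != 0:
        raise ValueError("buffer length must be a multiple of width")
    height = len(depth) // width
    points = []
    for y in range(0, height, step):
        for x in range(0, width, step):
            i = y * width + x
            # Keep only pixels labelled as this user that have a valid depth.
            if labels[i] == user_id and depth[i] > 0:
                points.append(UserPoint(x, y, depth[i]))
    return points


# Tiny 3x2 frame: user 1 covers four pixels, two of which have no depth reading.
depth = [0, 1200, 1300,
         900,   0, 1500]
labels = [1, 1, 0,
          1, 1, 2]
print(extract_user_points(depth, labels, width=3, user_id=1))
# [UserPoint(x=1, y=0, depth_mm=1200), UserPoint(x=0, y=1, depth_mm=900)]
```

Handing the particle system a short list of (x, y, depth) points, rather than the raw buffers, keeps the simulation code independent of whichever Kinect library produced them.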

Once we had it running, I wrote a wrapper class to detect users, calibrate, and then parse the user and depth pixels so that Alex could easily access them from her particle simulator. We iterated on the code and looked for ways to improve the flocking algorithm so that it would look more realistic and work better. We also spent a lot of time tweaking parameters and working out how to represent the user's depth as seen from the camera. When we first implemented the flocking in 3D, it didn't look quite as nice, so we decided to use color to help represent the depth data.
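For anyone unfamiliar with flocking, here is a minimal Python sketch of the general idea. It uses Craig Reynolds' classic boids rules (separation, alignment, cohesion) plus a seek force that pulls each boid toward a target point, such as a pixel on the user, along with a simple depth-to-color function. This is not Alex's particle simulator: the 2D setup, every weight and radius, the near/far range, and the red-to-blue color ramp are hypothetical choices for illustration, as are the names `Boid`, `flock_step`, and `depth_to_color`.

```python
import math
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def _limit(vx, vy, max_len):
    """Scale (vx, vy) down so its length is at most max_len."""
    length = math.hypot(vx, vy)
    if length > max_len:
        scale = max_len / length
        return vx * scale, vy * scale
    return vx, vy


def _steer(dx, dy, boid, max_speed, max_force):
    """Reynolds-style steering: desired velocity along (dx, dy) minus current velocity."""
    length = math.hypot(dx, dy)
    if length == 0:
        return 0.0, 0.0
    desired_x, desired_y = dx / length * max_speed, dy / length * max_speed
    return _limit(desired_x - boid.vx, desired_y - boid.vy, max_force)


def flock_step(boids, targets, radius=30.0, sep_dist=10.0, w_sep=1.5, w_ali=1.0,
               w_coh=1.0, w_seek=0.8, max_speed=4.0, max_force=0.2):
    """Advance all boids one step in place (all parameters are hypothetical).

    targets[i] is an (x, y) point, e.g. a user pixel, that boid i is drawn
    toward, or None if it has no target.
    """
    if len(targets) != len(boids):
        raise ValueError("need exactly one target (or None) per boid")
    accelerations = []
    for boid, target in zip(boids, targets):
        sep_x = sep_y = ali_x = ali_y = coh_x = coh_y = 0.0
        neighbours = 0
        for other in boids:
            dx, dy = boid.x - other.x, boid.y - other.y
            d = math.hypot(dx, dy)
            if other is boid or not 0 < d < radius:
                continue
            neighbours += 1
            ali_x, ali_y = ali_x + other.vx, ali_y + other.vy  # alignment: match heading
            coh_x, coh_y = coh_x + other.x, coh_y + other.y    # cohesion: head to centre
            if d < sep_dist:  # separation: push away, harder when closer
                sep_x, sep_y = sep_x + dx / d**2, sep_y + dy / d**2
        forces = []
        if neighbours:
            n = neighbours
            forces.append((w_sep, _steer(sep_x, sep_y, boid, max_speed, max_force)))
            forces.append((w_ali, _steer(ali_x / n, ali_y / n, boid, max_speed, max_force)))
            forces.append((w_coh, _steer(coh_x / n - boid.x, coh_y / n - boid.y,
                                         boid, max_speed, max_force)))
        if target is not None:
            forces.append((w_seek, _steer(target[0] - boid.x, target[1] - boid.y,
                                          boid, max_speed, max_force)))
        accelerations.append((sum(w * f[0] for w, f in forces),
                              sum(w * f[1] for w, f in forces)))
    # Apply after computing every force so all boids react to the same snapshot.
    for boid, (ax, ay) in zip(boids, accelerations):
        boid.vx, boid.vy = _limit(boid.vx + ax, boid.vy + ay, max_speed)
        boid.x += boid.vx
        boid.y += boid.vy


def depth_to_color(depth_mm, near_mm=500, far_mm=4000):
    """Hypothetical ramp: near pixels red, far pixels blue, as 0-255 RGB."""
    t = min(max((depth_mm - near_mm) / (far_mm - near_mm), 0.0), 1.0)
    return round(255 * (1 - t)), 0, round(255 * t)


a, b = Boid(0.0, 0.0), Boid(5.0, 0.0)
flock_step([a, b], [None, None])
print(b.x - a.x)  # a little over 5: separation outweighs cohesion at this range
print(depth_to_color(1200), depth_to_color(3500))
```

Drawing each boid in the color of its target's depth keeps the simulation flat while still showing how far each part of the user is from the camera, which is the kind of trade-off we ended up making.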

And this is what we ended up with: