| build houses |

| go on space missions |

| do housework |

| make dinner |

| fly planes |

| act as a personal assistant |

| be independent beings who can make decisions and act as citizens of the world |

| be able to express some sort of feeling |

| be free-thinking and helpful beings |

| be able to take on any physical job that is needed by the workforce |

| have feelings |

| take over jobs that are usually about manual labor |

| ruin a country |

| do the biological things |

| be appearing in all stages of life |

| control all man made activity |

| enter politics |

| do all things that a computer programmer can do |

| help rule the world |

| mingle in our life |

| conquer the world |

| work in most of the industries |

| start to work in governmental organizations |

| start to think like humans |

| have households of their own |

| be involved in the government |

| be driving |

| be able to fly people around |

| cook for families |

| be in all households |

| be competent enough to challenge humans |

| be independent thinkers facilitating all aspects of human life |

| try to overcome humans |

| bring complete change in travel and tourism |

| be at par with humans |

| be a part of our daily life |

| be able to communicate without humans knowing they are robots |

| be everywhere |

| be able to bake |

| do all the housework |

| complete complex original tasks |

| be able to navigate across a room without stepping on pets |

| be more advanced than we could ever imagine |

| be destroyed |

| begin to have artificial intelligence |

| be slightly feared |

| take over the world |

| be a part of every day life |

| be so commonplace to humans that they do not give them a second thought, such as the way we look at automobiles today |

| become commonplace in medical treatment |

| serve as police and soldiers to perform dangerous tasks |

| be able to help human beings in daily life activities |

| perform surgeries without the aid of human control |

| drive our cars without need for human control |

| be roaming this earth |

| be able to do extraordinary things to protect humans from danger |

| not exist because people won’t trust them |

| be able to be of good service for the world (should only be used for wars or other necessary actions of protection) |

| be more advanced |

| only be invented if they are strictly used to fight in wars (this is the only use for something that can potentially be harmful) |

| be serving many of our needs |

| provide both services and companionship, and health and medical needs |

| become more integrated into our daily lives |

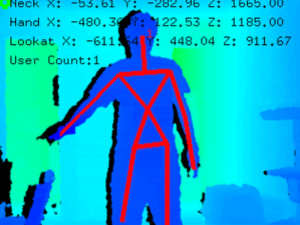

| be able to read our gestures and anticipate what we want |

| be fully integrated into our lives |

| be nearly invisible, part of the background, like a microwave or a couch |

| be designed to make sure they are not harmful to humans |

| be a common sight in everyone’s home |

| have taken over human kind |

| have advanced dramatically in capabilities from today |

| be used for hard labor jobs and tasks |

| be able to adequately serve human beings |

| be used for certain work that takes humans a lot of time |

| be used to earn money |

| be used for major operations |

| be used as fighting machines |

| have emotions in them |

| be used for jobs like cooking, driving, gardening, etc. |

| be sent on space expeditions and actually be able to think like us humans |

| be used as a means of social entertainment |

| overcome the huge problems |

| take most of the human brain |

| predict the needs of humans |

| believe in life |

| carry humans |

| follow the crowd |

| be in most households in some form or another (ex: smart vacuum cleaner) |

| be commonplace |

| be able to self improve/diagnose |

| be able to accomplish a lot more than we can imagine right now (technology is growing exponentially) |

| be rapidly taking jobs previously done by people, like self serve at the grocery store but large scale |

| know what we need before we even tell them. They will analyze, remember, and predict behavior |

| be equal to humans under the law |

| help humans with complex tasks |

| want to have rights |

| serve the humans for domestic tasks |

| be a common property |

| improve their human aspect |

| be professors |

| be night watchmen and also local police |

| be a doctor |

| be in anti terrorist forces |

| be friends with people |

| be in the military |

| take rest in their depressed mode |

| be thoughtful like wise people |

| become famous for their outstanding contribution |

| think as human beings can |

| take part in scientific research |

| be able to do our household activities |

| help human beings |

| be equal to humans |

| dominate human beings |

| rock |

| occupy the whole world |

| do all work without following human instructions |

| have baby robots |

| be assistants |

| work like most managers |

| work in companies |

| replace some humans |

| be more like humans |

| be already spread as commercial goods |

| be helpful to men |

| rule the world |

| think and analyze |

| talk |

| be able to do things independently |

| be able to reason out good and bad commands |

| be able to talk |

| be everywhere |

| be cheap enough for humans to purchase and use in their houses |

| be doing all jobs that humans can |

| be cheaper and more available |

| be doing house work in homes |

| be able to serve people at restaurants |

| be able to drive cars |

| be able to have conversations with people |

| replace 90% of the human workforce |

| no longer be considered our friends |

| attain consciousness |

| be more widely used than they are today |

| be used for running elevators in shopping malls |

| be free |

| be self aware |

| be able to think freely and have basic emotions |

| be nearly indistinguishable from humans |

| be able to understand many languages |

| be able to express emotion and feelings |

| be able to communicate with us |

| not do anything more advanced |

| do the dishes and put them away |

| help protect rich people from poor people |

| do the laundry and put it away |

| replace some doctors for poor people |

| replace some caregivers for disabled people |

| bring back the greenery |

| replace all fuel |

| be there to help humans in all natural calamities |

| learn how to grow trees |

| be functioning only on solar energy |

| be using sunscreen lotions due to UV rays |

| be more or less human in many aspects, able to have real conversations and interactions and act very human |

| dress and mimic humans in many ways |

| be visible all over the country to the point of being normal and accepted as something to interact with |

| be babysitting our children and working as communicative maids and domestic help |

| be working in offices, doing clerical and customer service type of positions, thereby lessening the number of humans performing these functions |

| be able to do household chores for those who can afford one |

| take care of basic house work |

| be smaller than the eye can see |

| be able to think for themselves |

| be self aware |

| be able to anticipate the needs of their owners |

| be doing jobs that require learning and quick thinking |

| be like a human |

| do autonomous work but it should not control the human |

| be in all hazardous applications |

| be like humans (we can’t identify humans from robots) |

| think like a man automatically |

| do automatic work |

| destroy the entire world |

| be capable of going to space and work there by themselves |

| rule humans |

| be capable of doing risky work on battlefields, in atomic plants, etc. |

| overcome human beings in some field |

| work with man in every field |

| still be our slaves |

| clean the environment |

| clean your house |

| fight wars |

| be able to act, think and learn on their own |

| be able to sort and fold your laundry |

| conduct polling collections |

| destroy everything |

| increase workplace safety (workers are moved to supervisory roles, so they no longer have to perform dangerous applications in hazardous settings) |

| be God |

| rule the World |

| be masters and people should be slaves |

| rule the world |

| drive the cars |

| replace all the workers |

| engage in wars |

| drive the cars |

| start doing some menial office jobs |

| be personal tutors |

| have valuable places in industry and the military |

| wash dishes |

| have the ability to perform household tasks |

| take the place of infantry |

| have more tasks to perform in industry |

| behave like man |

| drive cars |

| be our parents |

| have their own brains |

| be our partners |

| be our friends |

| be able to speak exactly like humans |

| take over the planet so humans can watch TV all day |

| be able to speak with human voices |

| go to work instead of humans |

| be replacing humans in the work force |

| start driving cars |

| improve quality of life for humans |

| be able to take over the world |

| be able to replace soldiers |

| be able to talk like a human |

| become a part of human life |

| do all the work of human beings |

| become more helpful to human beings |

| be the new generation or kingdom of human life |

| become the leaders of human beings |

| progress their growth |

| be ruling the humans |

| realize earth is going to be destroyed and go to the moon |

| be as intelligent as humans |

| be able to form a government |

| land on the moon |

| be able to do all mechanical tasks |

| save the human race |

| be more energy efficient |

| evolve into life and reproduce |

| become cheaper |

| do jobs a human will do, like be a receptionist |

| perform surgery |

| do some work in a factory |

| be equal to humans in doing anything |

| assist us and help us in house keeping |

| share the work burden of humans |

| do a lot of work and drive a car or even fly a plane |

| be very helpful to humans |

| be on par with human intelligence |

| go through gravity tests |

| learn how to fly airplanes |

| be sent to other planets for exploration |

| be on board in a space shuttle |

| fly an airplane |

| be considered very dangerous! |

| be able to be considered ‘companions’ |

| be virtually indistinguishable from humans |

| be able to provide emotional comfort |

| be able to adapt mentally and emotionally to a situation |

| feel human-like emotions |

| go to a different galaxy |

| go back to where they came from |

| be extremely successful on Earth |

| prepare to dominate the world |

| be renewed |

| die out |

| start doing cooking |

| dispense fuel at fuel stations |

| do the job of a chauffeur |

| be a car mechanic |

| be a gunman |

| start doing the job of a watchman |

| refill ATMs |

| wash cars |

| be a prestigious thing for humans like TVs in the olden days |

| start a robot rights commission |

| start to blame human beings |

| start to create a robot language and speak accordingly |

| think like human beings and have the capacity to verify their thoughts |

| be used for protection purposes |

| should be protected from their vigorous activities |

| should be reduced in number |

| start to control men |

| be controlled |

| kill other robots |

| create other robots for themselves |

| begin to do things like being your personal assistant |

| become more commonplace for routine chores |

| be available to people who are hugely wealthy and eccentric |

| be limited so they don’t infringe on our rights |

| be available to many different households |

| be making life somewhat easier but in limited ways |

| perform surgery |

| pick up garbage |

| drive cars |

| assist with medical procedures |

| clean house |

| do housework |

| reproduce without human interaction |

| take the place of stupid people |

| be smarter than Earthlings |

| have brains |

| rule the world |

| be readily available to do housework and chores |

| be able to do all kinds of things |

| be strictly supervised |

| affect the society |

| work the same as us |

| be part of our life |

| be able to help people |

| be more efficient than most people |

| be surpassing our expectations in most areas |

| be breaking new ground |

| be able to fly planes |

| be able to drive cars |

| be able to prepare meals |

| be a common household item |

| be able to be ordered off the internet |

| work better and cost less |

| be able to perform surgery in an operating room from a doctor’s office |

| be able to cut the grass |

| wake you up in the morning, make your coffee and make your bed |

| kill all humans |

| be able to *think* |

| be capable of performing various tasks reliably |

| program humans to work for them |

| be way more advanced than humans |

| work instead of humans |

| be doing more involved tasks such as driving our cars |

| look more human and blend into society |

| be able to assist with domestic chores |

| be more advanced and able to handle more tasks that minimum wage people would handle previously |

| take over simple tasks in society |

| be more evolved with better technology |

| be the leaders of the world |

| give advice to the rulers of the country |

| be the rulers of the country |

| acquire the ability to have emotional life |

| be the soldiers in the border areas of the country |

| be able to grasp human language |

| look like humans |

| perform like a person |

| take over all forms of government and run them efficiently |

| become human companions |

| take over humanity and create a peaceful world |

| take the place of humans in dangerous jobs |

| learn how to reproduce |

| be capable of performing surgery |

| be able to prepare food and drinks |

| move heavy items from different places |

| fix things that are broken |

| be able to clean a house without making a mess of their own |

| take the place of some actors in movies |

| be able to drive a car |

| become more useful in our everyday lives |

| completely eliminate humans working as cashiers |

| be humanlike and in every home |

| make homeowner’s lives easier (they should be commonplace) |

| replace humans in many facets |

| be used within every home to complete various household tasks |

| start destroying nature |

| do social service for free |

| start killing |

| start planting trees |

| rule the world |

| help people |

| be carefully monitored by actual humans |

| be useful to professionals in many fields |

| perform surgeries |

| be intuitive |

| recognize human speech |

| be available for average people to purchase |

| drive cars for us |

| serve as our military |

| go food shopping for us |

| be able to have emotions and converse with humans perfectly |

| become common household items |

| serve as janitors |

| guide the world |

| decrease the greenhouse effect |

| participate in social affairs |

| heal the world |

| take part widely in several inventions |

| be able to communicate |

| fully run our home automatically |

| be considered a higher life form |

| take over our working factories |

| be more highly advanced and more intelligent |

| run the world more or less |

| be advanced for assistance in the medical field |

| be readily available to carry out care taking work in day care, elder care and other types of facilities |

| be widely used in businesses for routine tasks |

| be companions to handicapped and elderly people |

| be readily available at reasonable prices for individuals and families |

| be able to do routine mechanics, such as repairing automobiles and completing some home construction tasks |

| be able to complete simple household chores |

| overtake human beings |

| not allowed to be so |

| start thinking like human beings |

| be competitive with human beings |

| not be allowed to think, they should always obey human orders |

| replace the jobs of many construction workers |

| exist in the classrooms as assistant teachers |

| assist in more homes as housekeepers |

| be environmentally friendly |

| exist in more homes |

| be voice activated |

| be building buildings and developing land |

| be working in airports as baggage handlers |

| be more innovative in completing aesthetic tasks, like cutting the grass of homes and companies |

| be able to analyze personalities |

| be commonplace in our lives |

| guide our interactions with others |

| be familiar to many people |

| be used to further medical research |

| be able to do things we have never imagined |

| damage someone |

| stop |

| entertain the world |

| create some problem |

| be the nuisance of the world |

| rule the world |

| be considered very dangerous! |

| be able to adapt physically and emotionally, just as humans do |

| be virtually indistinguishable from humans |

| be able to feel emotions and react accordingly |

| mimic human beings and possibly substitute as ‘companions’ |

| be kept as pets |

| perform chores |

| take on more complex tasks |

| smile |

| be dependable |

| be stewardesses/stewards |

| be friendly |

| have a place in society |

| be smaller in size, but bigger in the amount of abilities they have |

| be driving cars and flying airplanes |

| be more advanced |

| be interacting with you in public |

| be doing the jobs that others do today |

| build the perfect human |

| take over the world |

| rule the Earth |

| have a reset button |

| be human |

| take over human jobs |

| be used wisely |

| have to be controlled |

| demolish the human life |

| be a part of human life |

| take the place of humans in the defense forces |

| take the place of humans in factories |

| resemble the human |

| replace humans in some work fields |

| undergo slight changes |

| be more in activities |

| talk like humans |

| make a statement |

| be one of the working members in most families |

| be the General Manager of multinational companies |

| become familiar faces in industry |

| become an employee in all departments and industries |

| become just like a man |

| be able to manage some of the work done by human beings |

| be able to think |

| be in every household |

| be very life like |

| be available for industrial use |

| be performing everyday duties |

| be able to communicate |

| travel to mars |

| fly airplanes |

| fight in wars |

| help in home making |

| perform heart surgery |

| drive cars |

| be ubiquitous in the home, the office, and industry |

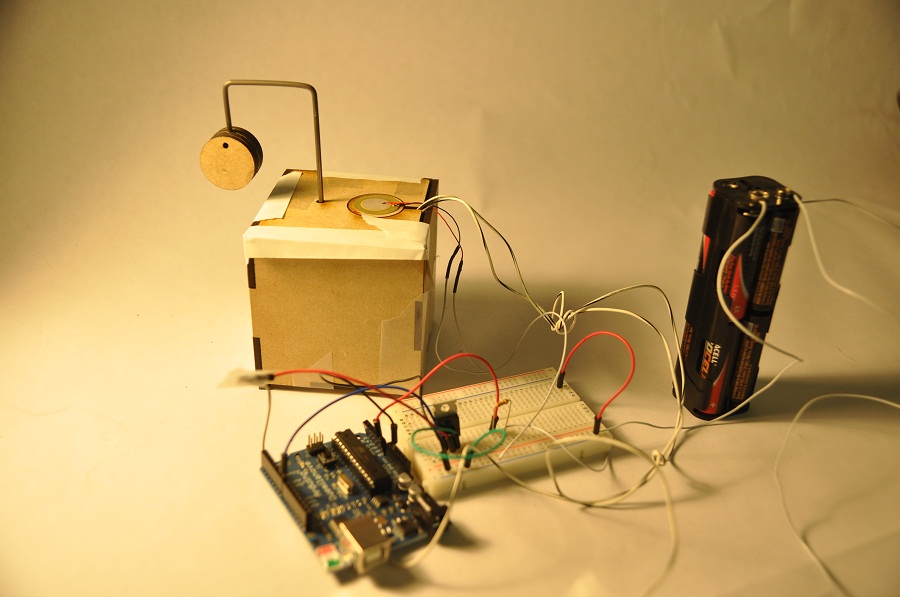

| perform many useful functions just existing as a box |

| kill |

| be capable of performing any human function |

| have undergone a major shift in the workforce as robots take over most jobs |

| assume most routine lab duties |

| ease out many jobs |

| do most of the work |

| be very very special |

| do most of the work that humans do in an organization |

| rule the world |

| replace managers and high post officers |

| make humans dependent |

| marry |

| do all of mankind's work |

| walk along the streets |

| reproduce |

| take over the world |

| cook food |

| be in all offices |

| be available in the market |

| conquer the world |

| be able to do our homework |

| be so advanced that they can do anything |

| be able to know what we are thinking |

| be really advanced |

| be able to drive |

| be able to do some of our daily duties |

| be able to translate from one language to another |

| remain subservient to their human owners |

| wear clothes |

| remain quiet while completing their tasks |

| try to write books |

| have specific warranties for their parts |

| be used in rescue situations |

| be used in place of humans to explore deep space |

| dominate assembly line factory work |

| be able to clean our ovens, just as the Roomba vacuums our floors now |

| be performing remote construction work |

| be used to assist physicians as microscopic robot-cameras in exploratory surgery |

| be defunct |

| be as sophisticated and as close to human functioning as possible |

| be moving around the world |

| be able to help the elderly live a normal life as much as possible |

| be treated as obsolete |

| be useful medically |

| blend in with society |

| take away a lot of jobs |

| live in most homes |

| gain emotions |

| make it so humans have to do no work whatsoever |

| take anthropomorphic forms |

| drive cars |

| cry |

| replace children |

| be unplugged |

| be going to work in place of their human owners |

| be able to take over the world |

| be able to play the violin well |

| be indistinguishable from humans |

| be able to hold a conversation with a human |

| get older like humans |

| take decisions |

| look like humans |

| understand nature |

| play guitar |

| achieve success |

| not talk to you but will get everything of you if you come in front |

| judge music and dance shows |

| check the intelligence of humans by their face, movement, etc. |

| anchor dance and music shows |

| be sitting in the judge's seat |

| be used in war |

| be more intelligent than humans |

| be functioning with humans |

| not be utilized for negative purposes |

| be obsolete |

| exist |

| be friends of the environment |

| marry people |

| improvise with dancers in popular concerts all around the world |

| prepare everything in this world without the help of humans |

| fly planes and do unimaginable things by themselves |

| rule the World |

| influence humans and persuade them to think that they cannot live without robots |

| make other robots |

| become more powerful |

| become very smart, find us useless and take over the world |

| learn and think |

| walk and talk like real humans |

| be humanoid and perform actions like human beings |

| have emotions and feelings |

| be able to express |

| perform heart surgeries |

| land on Mars |

| be cab drivers |

| perform surgery |

| run the government |

| start a war |

| provide better personal assistance with greater mobility capabilities |

| have full artificial intelligence and unlimited battery usage |

| communicate better |

| process paper work |

| determine what’s wrong with someone at a hospital |

| drive cars |

| be brain controlled artificial limbs |

| be a high resolution artificial eye implant for humans |

| do voice recognition in houses and commonplaces |

| be flying cars |

| be taxis |

| clone |

| function as lost limbs in a fully functional manner with a person’s motor skills |

| replace humans that operate public transportation (buses, trains, and taxi cabs) |

| do basic surgeries revolutionizing the health care industry |

| be able to work |

| be able to think |

| react against opposition |

| merge with humans |

| help in homes |

| drive our vehicles |

| control all vehicles (no need for drivers) |

| turn against the human race |

| be surrogates representing humans |

| replace blood cells, working thousands of times more efficiently |

| be able to work as replacements for most of the vital organs of human beings |

| provide companionship to their owners |

| work on architecture-related tasks, doing the most repetitive jobs like drilling or checking the stability of buildings |

| help doctors and surgeons |

| support everything from rescue teams to spaceships to hospitals |

| build a building |

| automate all easy tasks inside our homes |

| work as implants in human bodies |

| eliminate the need for human soldiers |

| do simple, non-invasive surgery |

| drive vehicles to their destination without human intervention |

| perform surgery on a human being with little assistance from humans |

| drive city buses down city streets shared by passenger vehicles, pick up passengers from bus stops and collect fare |

| be able to move cargo from docked ships to trucks without constant supervision from humans |

| be a fully functioning, true-to-life imitation of an actual human being, making it difficult to tell humans and robots apart |

| be able to work as fully functional limbs, stronger than their organic counterparts |

| be capable of advanced thought processes, such as technical problem solving skills, on their own |

| be humanoid and suitable for companionship |

| be tiny and go in the bloodstream performing tasks like delivering medicine and attacking cancer cells |

| be a bionic arm that can be attached to nerves in the body, so that a person can control it and feel it like a normal hand |

| make the world dependent upon them |

| be like human beings |

| rock |

| be very intelligent |

| help humans without any commands |