Blase’s Final Project: Gestures of In-Kindness

Title: Gestures of In-Kindness

Maker: Blase Ur (bur at cmu.edu)

Expanded Academic Paper: Available Here

Description: ‘Gestures of In-Kindness’ is a suite of household devices and appliances that respond in-kind to gestures the observer makes. For instance, the project includes a fan that can only be “blown” on; when the observer blows air at the fan, the fan responds by blowing air at the observer. Once the observer stops blowing his or her own air, the fan also stops. Similarly, there’s an oven that can only be operated by applying heat, such as the flame from a lighter. Only then will it apply heat to your food. A lamp that only turns on when light is applied and a blender that only spins its blades when the observer spins his or her body in circles round out the suite of devices. All devices operate over wi-fi, and all of the electronics, which I custom made for this project, are hidden inside the devices.

The interesting aspect of this project is that it leads observers to rethink their relationship with physical devices. In a sense, each device is a magnifying glass, amplifying the observer’s actions. This idea is highlighted by the devices’ quick response time; once the observer ceases to make the appropriate gesture, the device also turns off. This synchronicity, paired with a divergence in scale, draws the observer’s attention to the relationship between gesture and reaction. Oh, and the blender is really fun. Seriously, try the blender. You spin around and it blends. How cool is that?!

Process: On a conceptual level, my project draws inspiration from a number of other artists’ projects that have used household devices in an interactive way. One of my most direct influences is Scott Snibbe’s Blow Up, which is a wall of fans that is controlled by human breath. The idea of using a human characteristic, breath, to control devices that project the same behavior back at the observer on a larger scale was the starting point for my concept. While I really like Snibbe’s project, I preferred to have a one-to-one relationship between gesture (blowing into one anemometer) and response (one fan blowing back). I also preferred not to have an option to “play back” past actions since I wanted a real-time gesture-and-response behavior. However, I thought the idea of using breath to control a fan perfectly captured the relationship between observer and device, so I stole this idea to power my own fan and kickstart my own thought process. I then created a series of other devices with analogous relationships between gesture and device reaction.

James Chambers and Tom Judd’s The Attenborough Design Group, which is a series of devices that exhibit survival behavior, is an additional influence. For instance, a halogen lamp leans away from the observer so that it’s not touched; the oils from human hands shorten its life. Similarly, a radio blasts air (“sneezing”) to avoid the build-up of dust. In some sense, Chambers and Judd’s project explores the opposite end of the spectrum from mine. Whereas their devices avoid humans to humorous effect, my devices respond in-kind to humans in a more empathetic manner. I want the observer not to laugh, but to think about their relationship with these devices on a gestural, magnifying level.

Kelly Dobson’s Blendie is, of course, also an influence. Her project, in which a blender responds to a human yelling by turning on and trying to match the pitch of the yelling, captures an interesting dynamic between human and object. I really liked the noise and chaos a blender causes, which led me to include a blender in my own project. However, while her blender responded to a human’s gesture in a divergent, yet really interesting way, I wanted to have a tighter relationship between the observer’s action and device’s reaction. Therefore, with the conceptual help of my classmate Sarah, I decided to have the blender controlled by human spinning.

In class, I also found a few new influences. My classmate Deren created a strong project about devices that respond to actions, such as a cutting board that screams. For his final project, my classmate Kaushal made a fascinating camera that operates only through gesture; computer vision techniques detect when the participant makes a motion that looks like taking a photo with a camera, and a photo is then taken. These influences led me towards both the idea of using household devices in art and the idea of using appropriate gestures for control. Of course, Kaushal’s project is reminiscent of Alvaro Cassinelli’s project that lets an observer make the gesture of picking up a telephone using a banana instead of a telephone, and similarly use gestures on a pizza box as if it were a laptop. This idea of an appropriate gesture being used for control is echoed in the projects that both Kaushal and I created.

Technical Process:

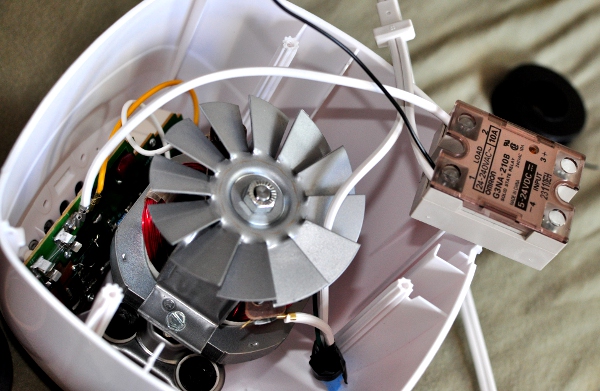

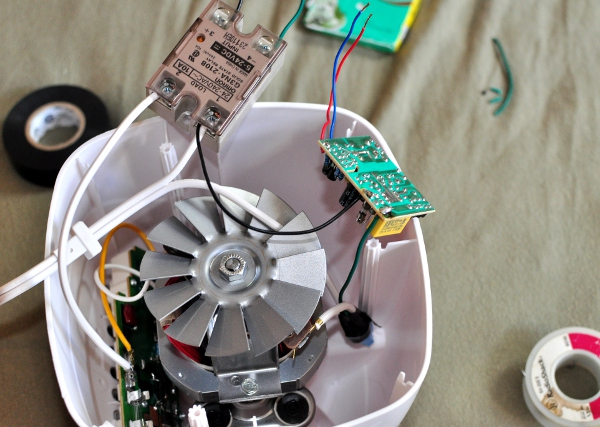

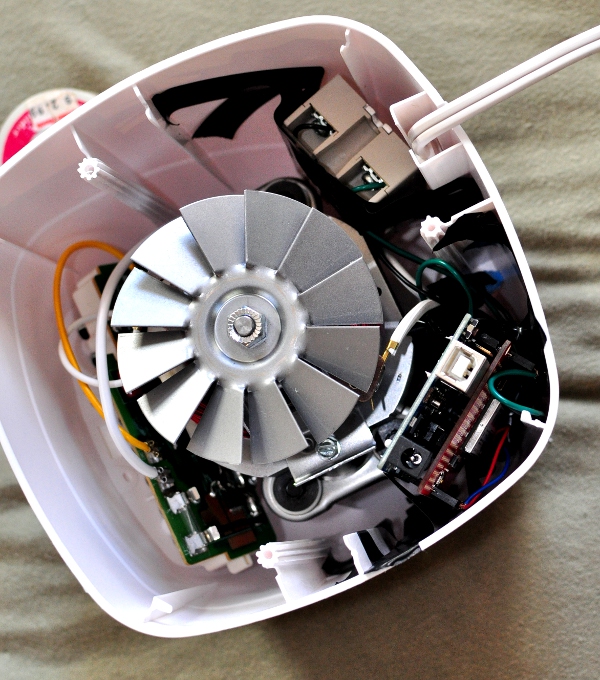

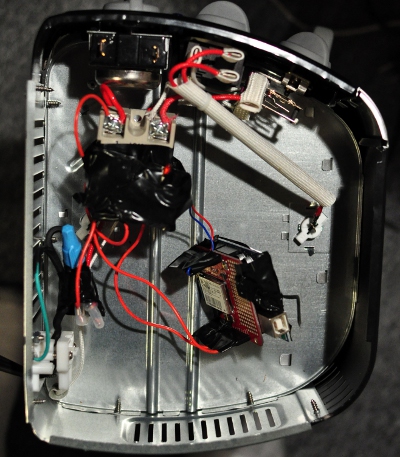

On a technical level, my project began with a project on end-user programming in smart homes that I’ve been a key member of for the last two years. As part of that research project, I taught myself to “hack” physical devices. However, I had never worked with ovens or blenders, two of the devices I hoped to use. In that project, I had also never worked with sensors, only with “outputs.” Therefore, a substantial amount of my time on this project was spent ripping apart devices and figuring out how they worked. Once I spent a few hours with a multimeter uncovering how the blender and oven functioned, as well as ripping apart a light and a fan, I had a good understanding of how I would control devices. This process of ripping apart the devices can be seen in the photographs below.

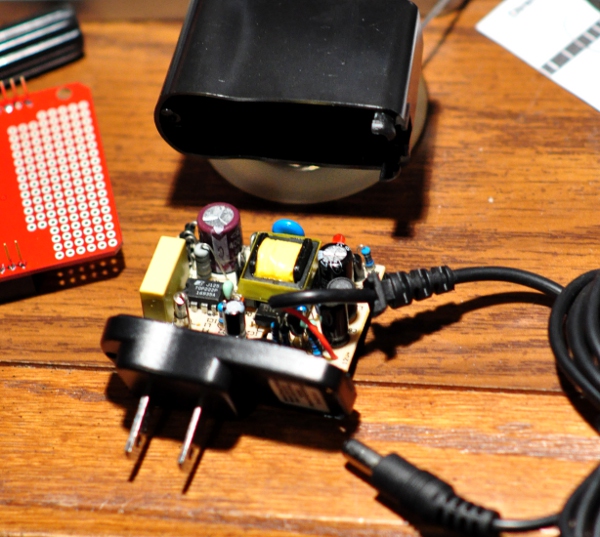

Inside each device, I’ve inserted quite a bit of electronics: power regulation, an Arduino microcontroller, a WiFly wi-fi adapter, and a solid-state relay. First, after opening up the devices, I isolated where power is sent to the device’s output (for instance, the oven’s heating elements or the blender’s motor) and cut the hot wire. Then, I started to insert electronics. Since power came from the wall, I inserted a DC power regulation circuit that I ripped from a 9V DC adapter that I purchased from SparkFun; I could now power an Arduino microcontroller off of the electricity the device already had flowing into it. Then, I inserted a relay into the device (a 20A Omron solid-state relay for the oven and blender, and slightly more reasonable 2A solid-state relays for the fan and lamp). An Arduino Uno R3 controls the relay, and a WiFly wi-fi adapter sits on the Arduino to provide wireless capability. I programmed these devices to connect to a wireless router, and all communication occurs over this wireless channel.

On the sensor side, I have a separate Arduino that reads the sensor inputs. For sensing breath, I used a surprisingly accurate (given its low cost) anemometer from Modern Device. For sensing temperature, I used a digital temperature sensor; for sensing light, a photocell did the trick. Finally, to sense spinning to control the blender, I used a triple-axis accelerometer from SparkFun. Since I wanted to avoid having a wire tangle with a spinning person, I connected this accelerometer to an Arduino Fio, which has a built-in port for XBee (802.15.4) chips. This rig was powered by a small lithium-ion battery. At my computer, I also had an XBee connected via USB listening for communication from the accelerometer.
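The write-up does not say how spinning was actually recognized from the accelerometer data, so the following is only one plausible approach, sketched in Python for illustration rather than in Arduino or Processing code. It assumes readings arrive in units of g, that a person at rest produces a total acceleration of about 1 g, and that spinning with the sensor held away from the body adds a sustained centripetal component. The `SpinDetector` class, its `threshold_g` value, and the window size are all hypothetical.

```python
import math
from collections import deque


class SpinDetector:
    """Hypothetical spin detector: flags sustained acceleration above rest.

    Assumes (ax, ay, az) are in units of g. At rest the magnitude is about
    1 g; spinning adds a centripetal term, raising the average magnitude.
    """

    def __init__(self, threshold_g=1.15, window=10):
        self.threshold_g = threshold_g        # assumed value, tune by hand
        self.samples = deque(maxlen=window)   # most recent magnitudes only

    def update(self, ax, ay, az):
        """Add one reading; return True if the person appears to be spinning."""
        self.samples.append(math.sqrt(ax * ax + ay * ay + az * az))
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough data yet to decide
        mean = sum(self.samples) / len(self.samples)
        return mean > self.threshold_g


if __name__ == "__main__":
    detector = SpinDetector()
    for _ in range(10):
        spinning = detector.update(0.0, 0.0, 1.0)   # standing still
    print("at rest:", spinning)
    for _ in range(10):
        spinning = detector.update(0.8, 0.0, 1.0)   # made-up spinning reading
    print("spinning:", spinning)
```

Averaging over a short window, rather than reacting to a single sample, is meant to keep one jolt from switching the blender on, while still letting the blender stop soon after the person stops spinning.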

I wrote the code for all Arduinos (those inside the devices, the Fio for the accelerometer, and the final Arduino that connects to all the sensors), as well as Processing code to parse the messages from the sensor Arduino and accelerometer. In this Processing code, I was able to adjust the thresholds for the sensors on the fly without reprogramming the Arduinos. Furthermore, in Processing, I opened sockets to all of the devices, enabling quick communication over the wi-fi network.
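The host-side logic described above (parse a sensor message, compare it against an adjustable threshold, and tell the matching device to switch on or off) can be sketched roughly as follows. This is a minimal illustration in Python rather than the original Processing code, which is not shown here. The `name:value` message format, the sensor and device names, and the `send` callback are assumptions made for the example; in a real setup, `send` would write to the socket already opened to that device.

```python
from typing import Callable, Dict, Optional, Tuple

# Assumed pairing of each sensor with the device that responds in kind.
SENSOR_TO_DEVICE = {
    "breath": "fan",
    "heat": "oven",
    "light": "lamp",
    "spin": "blender",
}


def parse_message(line: str) -> Optional[Tuple[str, float]]:
    """Parse a hypothetical 'name:value' line; return None if malformed."""
    name, sep, value = line.strip().partition(":")
    if not sep:
        return None
    try:
        return name, float(value)
    except ValueError:
        return None


class InKindController:
    """Turns sensor readings into on/off commands, sent only on change."""

    def __init__(self, thresholds: Dict[str, float],
                 send: Callable[[str, bool], None]):
        self.thresholds = dict(thresholds)
        self.send = send                      # e.g. writes to a device socket
        self.state: Dict[str, bool] = {}      # last command sent per device

    def set_threshold(self, sensor: str, value: float) -> None:
        """Adjust a threshold on the fly, without touching the Arduinos."""
        self.thresholds[sensor] = value

    def handle(self, line: str) -> None:
        parsed = parse_message(line)
        if parsed is None:
            return
        sensor, reading = parsed
        if sensor not in self.thresholds or sensor not in SENSOR_TO_DEVICE:
            return
        device = SENSOR_TO_DEVICE[sensor]
        on = reading > self.thresholds[sensor]
        # The first reading always sends a command, which syncs the device.
        if self.state.get(device) != on:
            self.state[device] = on
            self.send(device, on)


if __name__ == "__main__":
    controller = InKindController(
        {"breath": 300.0},
        lambda device, on: print(device, "ON" if on else "OFF"),
    )
    for msg in ["breath:350", "breath:360", "breath:100"]:
        controller.handle(msg)
```

Sending a command only when the state changes keeps traffic on the wireless network low, which fits the goal of having each device react quickly when the gesture starts or stops.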

For additional information, please see my paper about this project.

Images: