Joint Operations from john gruen on Vimeo.

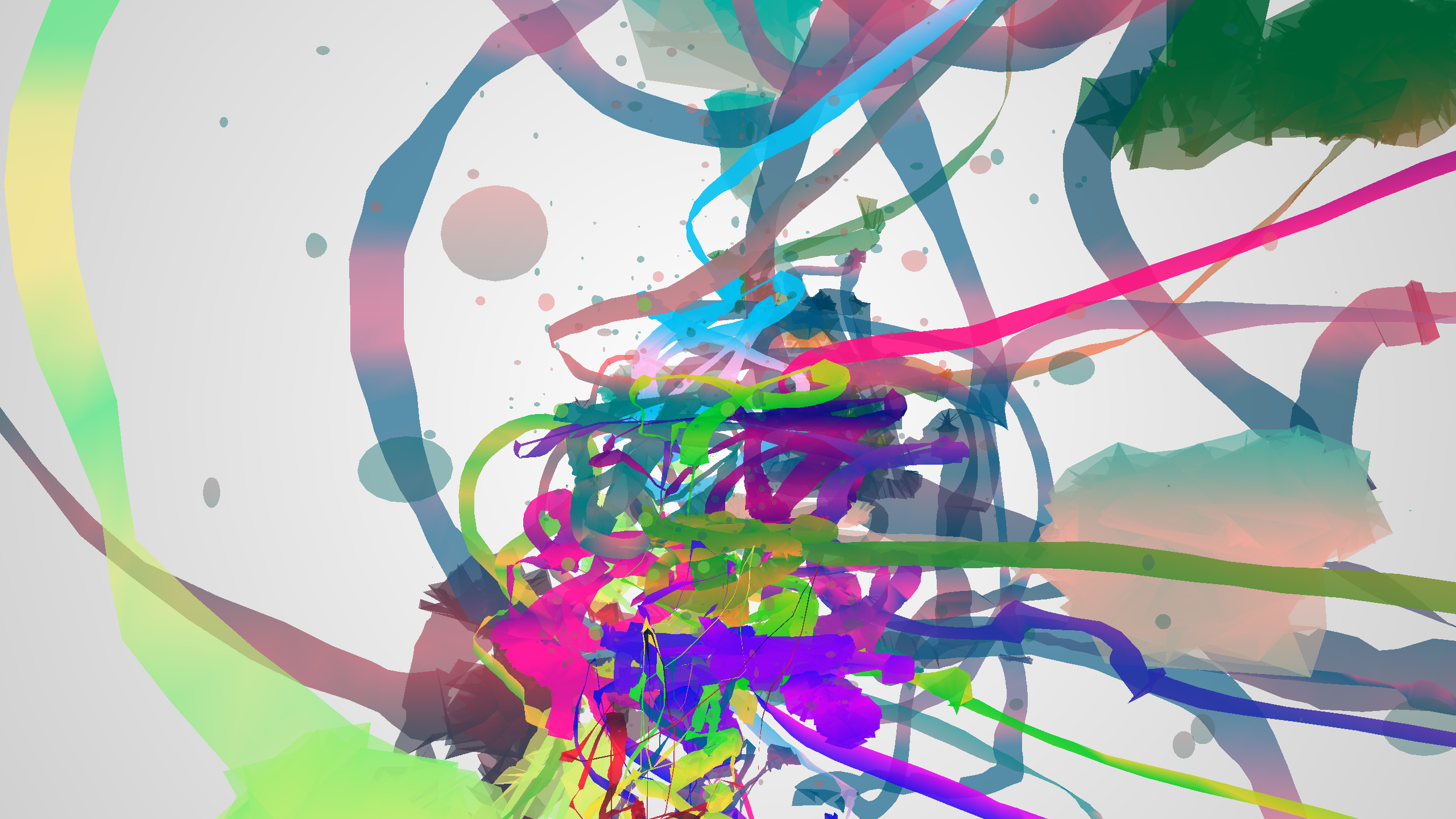

The Big Idea

Joint Operations grew out of my previous project, in which I explored drawing with gestures using a Microsoft Kinect. This project extends the functionality of my past work in several key ways to make it more fun and more responsive.

- It’s truly three-dimensional and makes use of the Kinect’s depth tracking capabilities.

- It makes use of the Kinect’s strengths and masks its weaknesses by affording free play and exploration over fine, detailed drawing.

- It allows up to 14 simultaneous meshes for drawing.

- It uses simple gestural controls not dependent on the user’s absolute position.

- It looks badass.

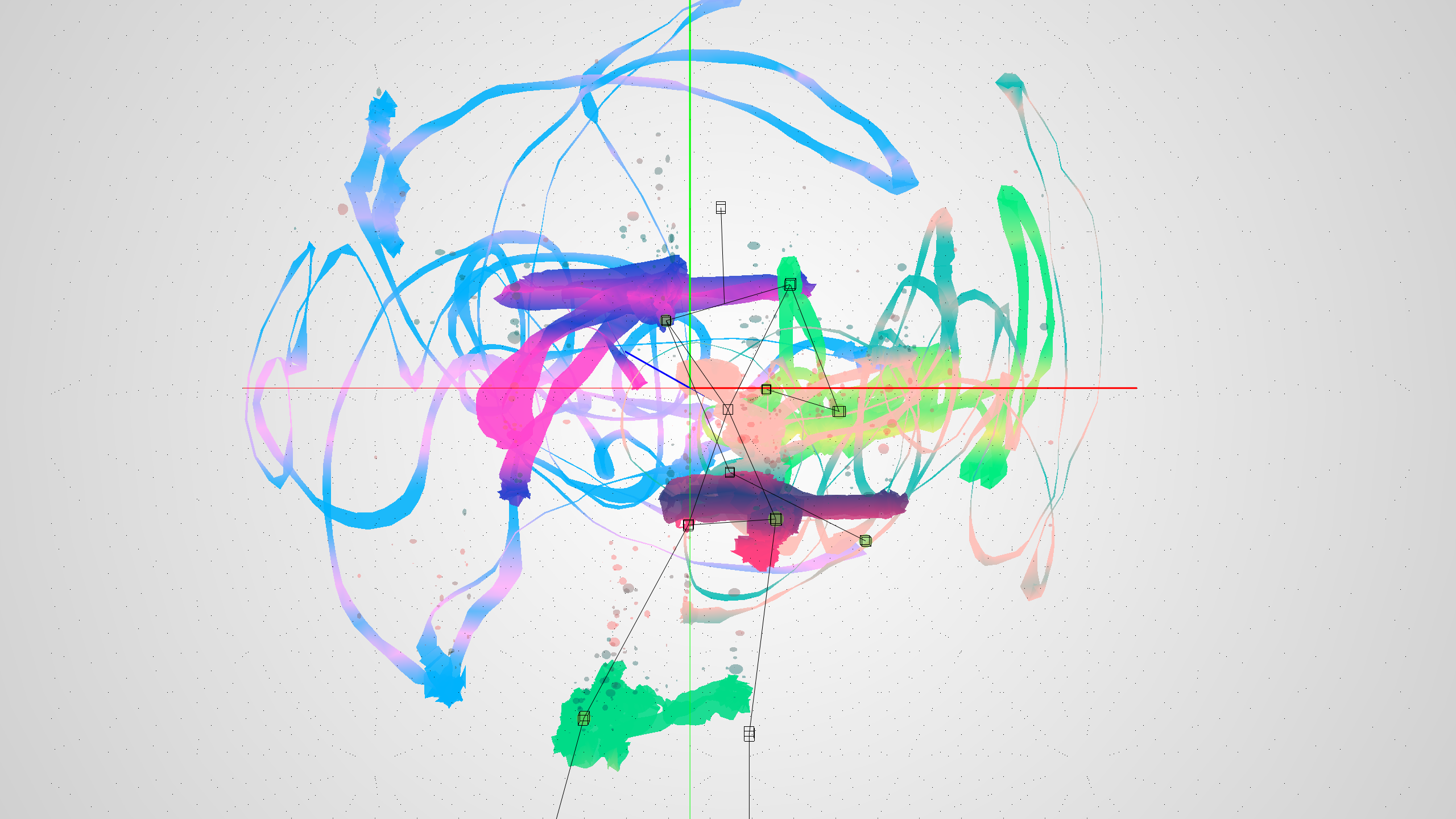

The system is built on openFrameworks and works by reading skeleton data sent from a Kinect through Synapse. I’ve used the synapseStreamer class to make this a bit easier. Raw skeleton data is eased to stabilize motion and then pushed into a mesh. There are fourteen meshes in total, one for each joint tracked by the Kinect.
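As a rough illustration of the easing step, here is a minimal sketch in plain C++ (no openFrameworks dependency). It assumes easing means moving each stored joint position a fixed fraction of the way toward the newest raw reading every frame, which is one common approach and not necessarily what the project does. The names `Vec3`, `easeToward`, `easeSkeleton`, and the `easing` parameter are all hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Minimal 3D point; a stand-in for whatever vector type the real project uses.
struct Vec3 { float x, y, z; };

constexpr std::size_t kJointCount = 14; // one eased position per tracked joint

// Move `eased` a fixed fraction of the way toward `raw`.
// `easing` must be in (0, 1]: smaller values smooth more but lag more.
// The right value is an assumption and would need tuning by hand.
inline Vec3 easeToward(const Vec3& eased, const Vec3& raw, float easing) {
    assert(easing > 0.f && easing <= 1.f);
    return { eased.x + (raw.x - eased.x) * easing,
             eased.y + (raw.y - eased.y) * easing,
             eased.z + (raw.z - eased.z) * easing };
}

// Ease every joint once per frame; the eased positions are what would be
// appended to the per-joint meshes.
inline void easeSkeleton(std::array<Vec3, kJointCount>& eased,
                         const std::array<Vec3, kJointCount>& raw,
                         float easing) {
    for (std::size_t i = 0; i < kJointCount; ++i) {
        eased[i] = easeToward(eased[i], raw[i], easing);
    }
}
```

With this kind of smoothing, a single jittery reading only nudges the stored position, while a sustained movement catches up over a few frames.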

Users are given control over the display of each mesh, and can set a virtual camera to any of the 14 eased joint positions. This affords two key features. Aesthetically, it allows a heightened sense of immersion, as users can explore their drawings as they are created in a virtual 3D environment. In more practical terms, it obviates any need to remap the raw Kinect joint data (a true pain in the ass) to account for the variable width of the sensor’s field of vision at different depths.
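To see why following a joint sidesteps remapping, consider expressing every mesh point relative to the camera joint: the joint itself always lands at the origin of the view, wherever it sits in the sensor's coordinate space. The sketch below shows only that translation, under the assumption that a plain offset is enough; a real openFrameworks camera would also handle orientation and projection. `Vec3`, `relativeTo`, and `toCameraSpace` are hypothetical names.

```cpp
#include <vector>

// Minimal 3D point; a stand-in for the project's real vector type.
struct Vec3 { float x, y, z; };

// Express `p` relative to the joint currently acting as the camera.
inline Vec3 relativeTo(const Vec3& p, const Vec3& cameraJoint) {
    return { p.x - cameraJoint.x, p.y - cameraJoint.y, p.z - cameraJoint.z };
}

// Translate a whole mesh so the camera joint sits at the origin of the view.
// No knowledge of the sensor's field of view or depth range is required.
inline std::vector<Vec3> toCameraSpace(const std::vector<Vec3>& meshPoints,
                                       const Vec3& cameraJoint) {
    std::vector<Vec3> out;
    out.reserve(meshPoints.size());
    for (const Vec3& p : meshPoints) {
        out.push_back(relativeTo(p, cameraJoint));
    }
    return out;
}
```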

Users are able to change and modify their pen and camera selections at any time. The system stores up to 100 previous meshes for display.
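A capped history like this could be kept in a bounded queue. The following is a sketch only: it assumes the oldest mesh is discarded once the cap is reached, which the description above does not actually specify. `Mesh` is a stand-in for the real mesh type, and `MeshHistory` is a hypothetical name.

```cpp
#include <cstddef>
#include <deque>
#include <utility>
#include <vector>

struct Vec3 { float x, y, z; };
using Mesh = std::vector<Vec3>; // stand-in for the project's mesh type

// Keeps at most `capacity` finished meshes, dropping the oldest first
// (the eviction policy is an assumption).
class MeshHistory {
public:
    explicit MeshHistory(std::size_t capacity) : capacity_(capacity) {}

    void push(Mesh finished) {
        if (capacity_ == 0) return;          // nothing can be stored
        if (meshes_.size() == capacity_) {
            meshes_.pop_front();             // evict the oldest mesh
        }
        meshes_.push_back(std::move(finished));
    }

    std::size_t size() const { return meshes_.size(); }
    const std::deque<Mesh>& meshes() const { return meshes_; }

private:
    std::size_t capacity_;
    std::deque<Mesh> meshes_;
};
```

Constructing it as `MeshHistory history(100);` would match the limit mentioned above.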

Controls and Details

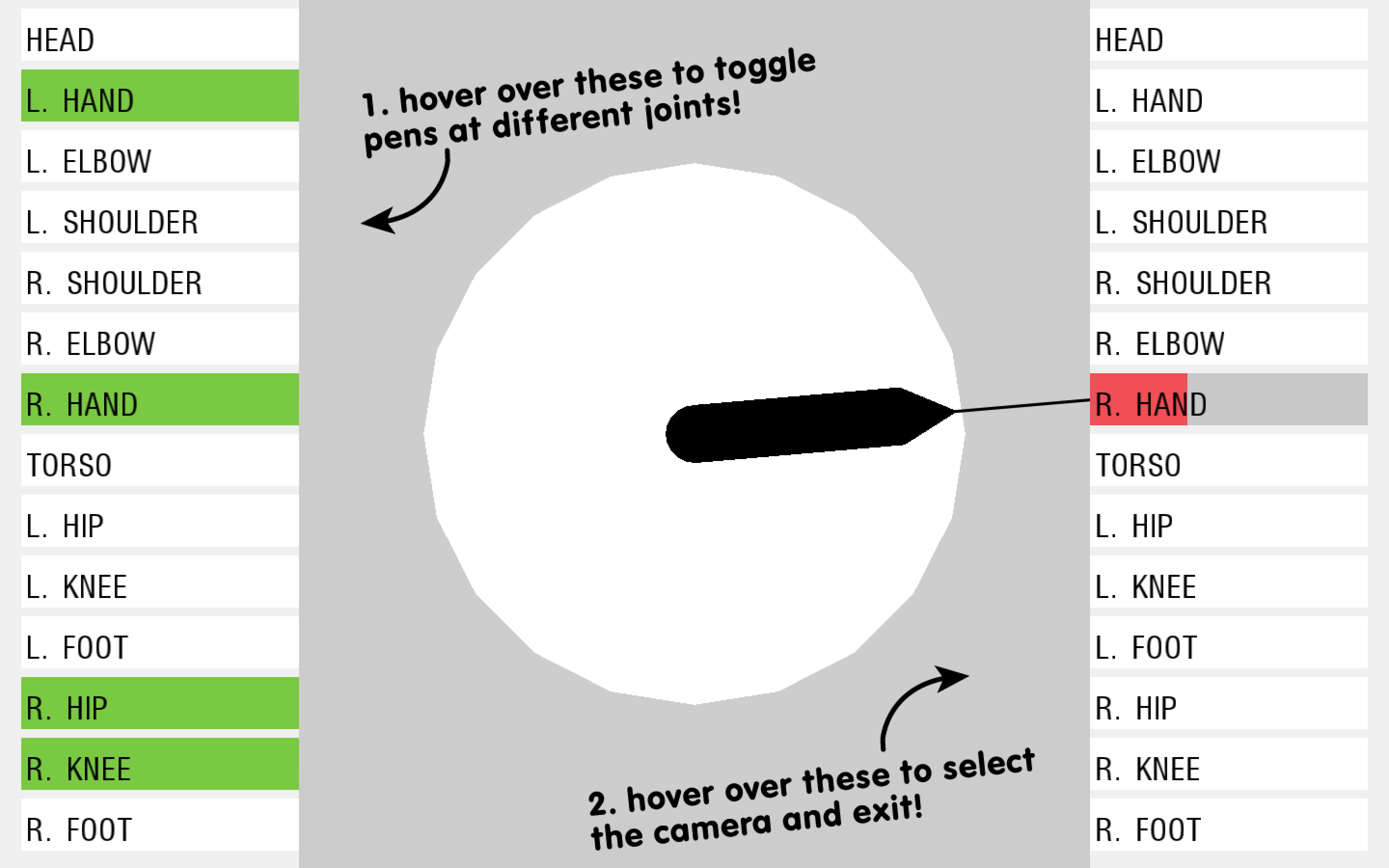

Joint Operations is made up of three primary screens:

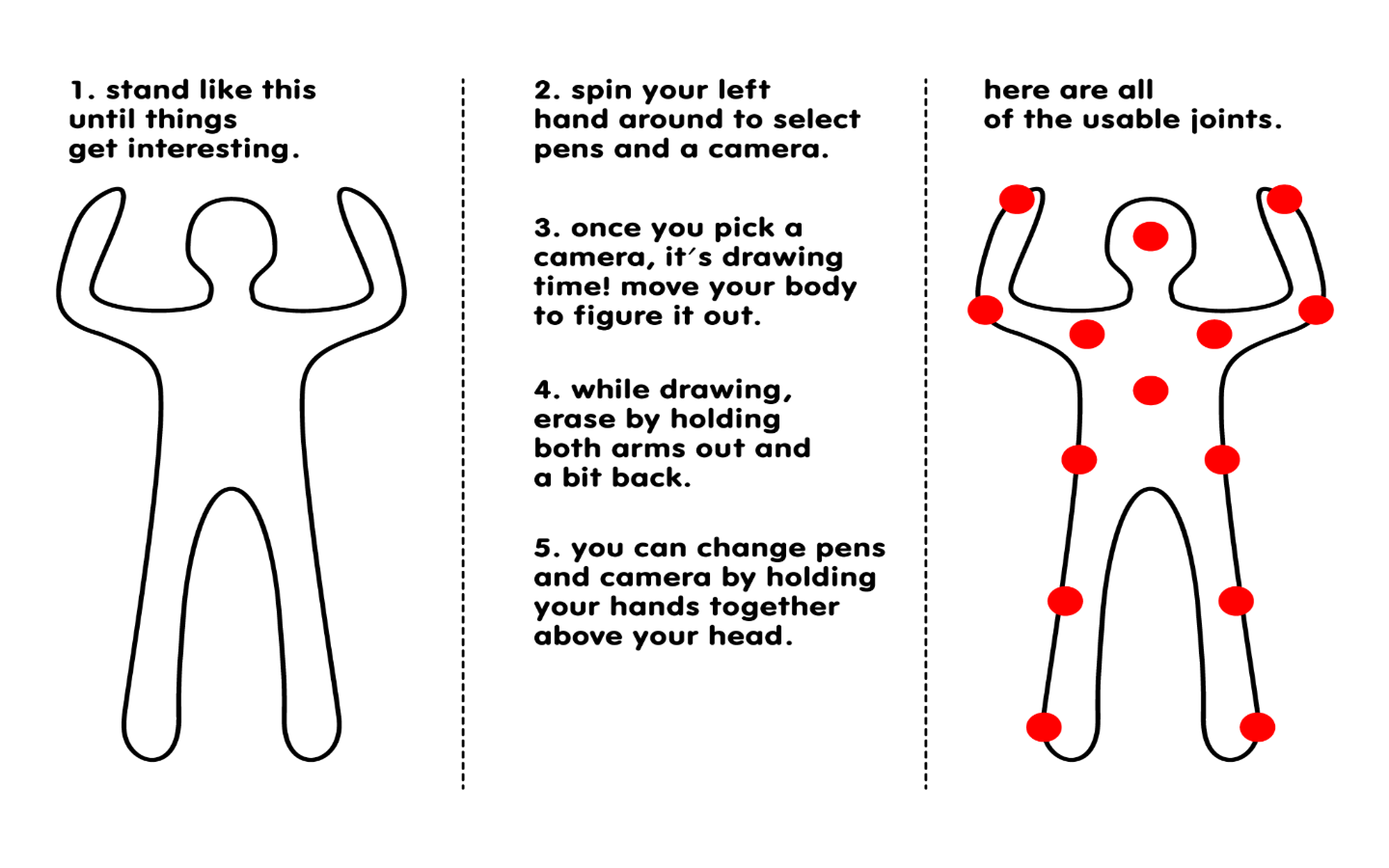

- Before Synapse recognizes the user, the system displays an instruction page.

- Once Synapse detects a skeleton, a control page is displayed to allow users to toggle pens on and off and select a camera.

- When a camera is selected, the user begins to draw, and the joint selected as camera will remain at the center of the view window.

One of the primary challenges in this project was figuring out how to handle a gestural UI for pen and camera selection. The system I implemented affords a degree of forgiveness for the necessarily coarse movements of users’ bodies. I’ve used a clock metaphor within which users can point with their left arm (because I’m left-handed and wanted to be slightly devious). Users point to the camera or pen they’d like to select and wait for the button to ‘fill up’ with color. To deselect, they have to ‘drain’ the button. Adding a time-based component to selection greatly reduces the likelihood of accidental selection.
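The clock-and-dwell idea can be sketched in two small pieces: mapping the arm's pointing direction to a clock position, and a button that fills or drains while it is pointed at. This is a guess at the mechanics, not the project's code. It assumes the direction is taken from shoulder to hand with y pointing up, that a button snaps back to its resting state when the user points away, and that the user must point away after a toggle before the button responds again. `clockSlot`, `DwellButton`, and `dwellSeconds` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>

// Map a pointing direction (dx, dy; y up) to the nearest of `slots` clock
// positions. Slot 0 is 12 o'clock and indices increase clockwise.
inline int clockSlot(float dx, float dy, int slots) {
    const float kTwoPi = 6.28318530718f;
    float angle = std::atan2(dx, dy);        // 0 at 12 o'clock, clockwise positive
    if (angle < 0.f) angle += kTwoPi;
    const int slot = static_cast<int>(std::floor(angle / kTwoPi * slots + 0.5f));
    return slot % slots;                     // wrap the last half-slot back to 0
}

// A button that toggles only after being pointed at for `dwellSeconds`.
struct DwellButton {
    float fill = 0.f;       // 0 = empty, 1 = full
    bool selected = false;
    bool armed = true;      // false right after a toggle, until the user points away

    void update(bool pointedAt, float dt, float dwellSeconds) {
        if (!pointedAt) {
            fill = selected ? 1.f : 0.f;     // a brief pass-over leaves no trace
            armed = true;
            return;
        }
        if (!armed) return;                  // avoid fill/drain oscillation
        const float step = dt / dwellSeconds;
        fill = selected ? std::max(0.f, fill - step)
                        : std::min(1.f, fill + step);
        if (!selected && fill >= 1.f) {
            selected = true;
            armed = false;
        } else if (selected && fill <= 0.f) {
            selected = false;
            armed = false;
        }
    }
};
```

Each frame, the button at `clockSlot(...)` would receive `update(true, dt, dwellSeconds)` and every other button `update(false, dt, dwellSeconds)`. A longer `dwellSeconds` is more forgiving of stray arm movements but slower to use.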

In addition to the clock control, there are a few other minor controls available.

- In draw, users can hold their hands together above their head to return to the selection menu.

- In draw, users can hold their arms out and back to clear their drawings.

- The B, S, and T keys toggle some debug settings, such as making joints and a registration grid visible.
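A pose such as holding the hands together above the head can be tested directly from joint positions. The sketch below is one plausible check, not the project's actual detector: it assumes y increases upward and that "together" means the hands are within some maximum gap, expressed in whatever units the joint data uses. `jointDistance`, `handsTogetherAboveHead`, and `maxHandGap` are hypothetical names, and a real version would likely also require the pose to be held for a short time.

```cpp
#include <cmath>

// Minimal 3D point; a stand-in for the project's real vector type.
struct Vec3 { float x, y, z; };

// Straight-line distance between two joints.
inline float jointDistance(const Vec3& a, const Vec3& b) {
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    const float dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// True when both hands are higher than the head and close to each other.
// Comparing joints to one another keeps the check independent of where the
// user stands in front of the sensor.
inline bool handsTogetherAboveHead(const Vec3& leftHand, const Vec3& rightHand,
                                   const Vec3& head, float maxHandGap) {
    return leftHand.y > head.y
        && rightHand.y > head.y
        && jointDistance(leftHand, rightHand) <= maxHandGap;
}
```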

Conclusions and Considerations

All in all, this was a really fun project, and I got great feedback while showing it. I’m still really interested in trying to figure out novel UI structures for the Kinect. For example, how might I make the system capable of lifting the pen (i.e., drawing only part of the time instead of continuously), and how might I make a more intuitive UI for such a new type of interaction?