Accessibility

You can play around with this project here:

http://www.contrib.andrew.cmu.edu/~dgurjar/faceframe/

Note that this project has only been tested with the latest version of Chrome. Other browsers will likely not support some of the features it uses.

Process

This idea grew out of an earlier one that involved using video in a Chrome extension. Unfortunately, at the time of writing, Chrome extensions don’t support video input. I found this out somewhat late, but decided to use what I had learned about HTML5 video processing to make something else.

I was inspired by Banjo-Kazooie, an N64 video game from my childhood, which had a video puzzle as a mini-game.

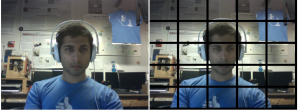

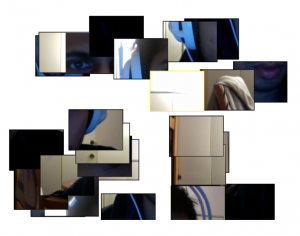

I started off by trying to split a video feed into a set of images.
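One way to do the splitting, sketched under assumptions rather than taken from the project's source: draw each webcam frame onto a canvas and treat every tile as a source rectangle within that frame. The helper `computeTiles` and its grid parameters are hypothetical; only the canvas `drawImage` call mentioned in the comment is a real browser API.

```javascript
// Hypothetical helper: divide a video frame of the given size into a
// cols x rows grid and return the source rectangle of each tile,
// in row-major order.
function computeTiles(frameWidth, frameHeight, cols, rows) {
  const tiles = [];
  for (let row = 0; row < rows; row++) {
    for (let col = 0; col < cols; col++) {
      // Integer edges so neighbouring tiles share a boundary with no gaps,
      // even when the frame size is not an exact multiple of the grid.
      const x0 = Math.floor((col * frameWidth) / cols);
      const x1 = Math.floor(((col + 1) * frameWidth) / cols);
      const y0 = Math.floor((row * frameHeight) / rows);
      const y1 = Math.floor(((row + 1) * frameHeight) / rows);
      tiles.push({ index: row * cols + col, x: x0, y: y0, w: x1 - x0, h: y1 - y0 });
    }
  }
  return tiles;
}

// In the browser, each tile could then be copied out of the current video
// frame on every animation tick with the 9-argument form of drawImage:
//   ctx.drawImage(video, t.x, t.y, t.w, t.h, dx, dy, t.w, t.h);
```

The rectangles only need recomputing when the grid size changes; the per-frame work is then one `drawImage` call per tile.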

Next, I randomized the initial placement of these pieces and added mouse hover interactions.
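The post does not say how the pieces were randomized. A standard choice is a Fisher-Yates shuffle over a board array, assumed here to store, for each slot, the index of the tile drawn there. `shuffledBoard` is a hypothetical name, and the optional `random` parameter exists only so the shuffle can be made deterministic for testing.

```javascript
// Hypothetical board model: board[slot] = index of the tile drawn in that slot.
// A solved board is [0, 1, 2, ..., n - 1].
function shuffledBoard(tileCount, random = Math.random) {
  const board = Array.from({ length: tileCount }, (_, i) => i);
  // Fisher-Yates: walk backwards, swapping each slot with a random slot at
  // or before it, which makes every permutation equally likely.
  for (let i = board.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [board[i], board[j]] = [board[j], board[i]];
  }
  return board;
}
```

A shuffle can occasionally return the solved order, so a game might simply reshuffle in that case.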

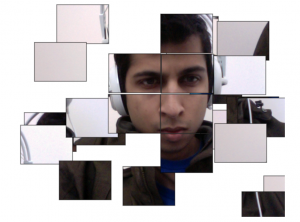

Hovering was followed by click and drag, which served as a temporary way for me to interact with my puzzle.
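A minimal sketch of click and drag, assuming the puzzle is drawn on a single canvas divided into a uniform `cols` x `rows` grid, and that dropping a tile swaps it with the tile in the target slot (the post does not describe the drop rule). The board is assumed to be an array holding, per slot, the index of the tile shown there. On `mousedown` the starting slot would come from `slotAt(event.offsetX, event.offsetY, ...)`, and on `mouseup` the board would be updated with `dropTile`; both helpers are hypothetical.

```javascript
// Hypothetical hit test: map a point in canvas pixels to the grid slot under it,
// or -1 if the point lies outside the canvas.
function slotAt(x, y, canvasWidth, canvasHeight, cols, rows) {
  if (x < 0 || y < 0 || x >= canvasWidth || y >= canvasHeight) return -1;
  const col = Math.floor((x * cols) / canvasWidth);
  const row = Math.floor((y * rows) / canvasHeight);
  return row * cols + col;
}

// Assumed drop rule: swap the dragged tile with whatever occupies the target
// slot. Returns a new board and leaves the original untouched.
function dropTile(board, fromSlot, toSlot) {
  if (fromSlot < 0 || toSlot < 0 || fromSlot === toSlot) return board;
  const next = board.slice();
  [next[fromSlot], next[toSlot]] = [next[toSlot], next[fromSlot]];
  return next;
}
```

Swapping keeps every tile on the board at all times, which avoids having to handle gaps or overlapping pieces.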

I then looked into hand gesture detection in JavaScript, something I wish I had done earlier. I wanted users to be able to interact with the puzzle by grabbing tiles with their hands. I came across this library, https://github.com/mtschirs/js-objectdetect, which seemed extremely promising. However, when I got around to using it, it simply didn’t work as advertised: the detection of my hand was too inconsistent for my purposes.

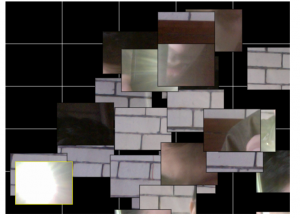

I decided to drop the idea and try brightness-based motion detection instead. I wrote code for it that worked, but after testing it on some friends, I found the interaction somewhat unnatural.
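The brightness-based code itself is not shown in the post. A common way to detect motion from brightness is frame differencing: compare each pixel's luma between consecutive frames and measure how much of a region changed. The sketch below assumes RGBA byte arrays such as the `data` field of the `ImageData` returned by `getImageData`; the rectangle shape `{ x, y, w, h }`, the helper names, and the default threshold of 32 are illustrative assumptions.

```javascript
// Rec. 601 luma approximation for the RGBA pixel starting at byte offset i.
function luma(data, i) {
  return 0.299 * data[i] + 0.587 * data[i + 1] + 0.114 * data[i + 2];
}

// Fraction of pixels inside `rect` whose brightness changed by more than
// `threshold` between two frames. `prev` and `curr` are RGBA byte arrays of a
// frame `width` pixels wide (e.g. ImageData.data).
function motionInRect(prev, curr, width, rect, threshold = 32) {
  let changed = 0;
  for (let y = rect.y; y < rect.y + rect.h; y++) {
    for (let x = rect.x; x < rect.x + rect.w; x++) {
      const i = (y * width + x) * 4;
      if (Math.abs(luma(curr, i) - luma(prev, i)) > threshold) changed++;
    }
  }
  return changed / (rect.w * rect.h);
}
```

A tile could then be treated as "touched" when its changed fraction exceeds some cutoff, which would need tuning against camera noise and lighting changes.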

The tests did reveal one thing: people playing with the tiles consistently moved their heads to refresh the images on them. Completing a face is easier than completing some random background image. I decided to combine this observation with the fact that JavaScript face-tracking libraries worked reasonably well; the one I had tried, headtrackr.js, was very well done.

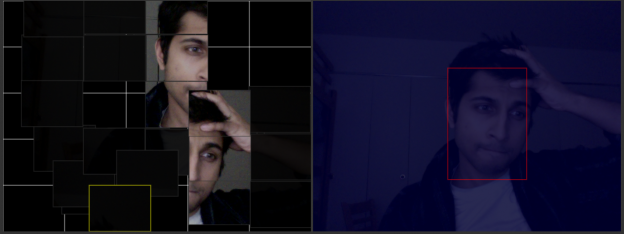

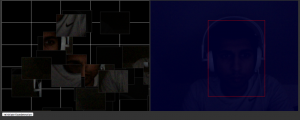

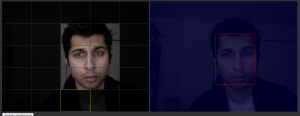

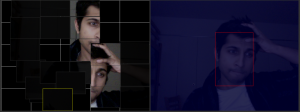

I started by using headtrackr to select pieces and found that this had an interesting effect. I evolved this into a puzzle that darkens tiles without a face in them and displays the tiles that do contain one normally. I wanted to force users to really use their faces.
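A sketch of the darkening rule, assuming the face is known as a center point plus a width and height in video-frame pixels. The post does not show how headtrackr.js's output was consumed, so the face is passed in as a plain object with hypothetical fields `cx`, `cy`, `width`, and `height` rather than as a headtrackr event. Each tile is described by the rectangle `{ x, y, w, h }` its pixels come from, so a tile stays bright whenever its video content contains the face, no matter where it has been moved on the board. The dim opacity of 0.15 is an arbitrary illustrative value.

```javascript
// Convert an assumed { cx, cy, width, height } face description to a rectangle.
function faceRect(face) {
  return { x: face.cx - face.width / 2, y: face.cy - face.height / 2, w: face.width, h: face.height };
}

// Axis-aligned rectangle intersection test (touching edges do not count).
function overlaps(a, b) {
  return a.x < b.x + b.w && b.x < a.x + a.w && a.y < b.y + b.h && b.y < a.y + a.h;
}

// Full opacity for tiles whose source region overlaps the face, dimmed otherwise.
// With no face detected (face === null) every tile is dimmed.
function tileOpacities(tiles, face, dim = 0.15) {
  const box = face ? faceRect(face) : null;
  return tiles.map((t) => (box && overlaps(t, box) ? 1 : dim));
}
```

When drawing, each value could be applied through the canvas context's `globalAlpha` property before that tile's `drawImage` call.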

Playing with opacity:

Pairing opacity and face detection:

Current State and Conclusions

At the moment, this project lets users interact with rectangular portions of their webcam’s video feed. These tiles can be rearranged however the user chooses. Tiles that don’t contain the user’s face are deliberately obscured to encourage users to use face location as an interaction.

I think a project like this has some fun appeal, and I am happy that I further exercised my web development skills in this course. I really regret two things about my implementation:

- The interaction technique was not thought out early enough, especially given that it was meant to be the crux of this project.

- Experimentation and reimplementation left less time for polish.

Hopefully I can learn from this in my future work.

Future Work / Expansion

- I really think video puzzles could be fun as a multiplayer game. Perhaps the task could be to complete someone else’s field of view.

- I also looked into things like microphone input. Maybe the area of visible squares around the user’s face could grow based on how loud the user is?

- I could buffer past video frames and have some tiles play video slowly or show video from the past. I’m not entirely sure how to make this interaction sensible, though.
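For the frame-buffering idea, one possible building block is a fixed-size ring buffer of past frames, so that each tile can be drawn from a frame some number of ticks old. The `FrameHistory` class below is hypothetical; frames could be `ImageData` objects captured with `getImageData`, but the buffer treats them as opaque values. Memory grows with capacity times frame size, so the capacity would need to stay modest.

```javascript
// Hypothetical fixed-size history of past frames, overwriting the oldest.
class FrameHistory {
  constructor(capacity) {
    this.capacity = capacity;
    this.frames = new Array(capacity);
    this.count = 0; // total number of frames pushed so far
  }

  // Store the newest frame, replacing the oldest once the buffer is full.
  push(frame) {
    this.frames[this.count % this.capacity] = frame;
    this.count++;
  }

  // Frame from `delay` ticks ago (0 = newest). Delays beyond what is stored
  // are clamped to the oldest frame still held; returns undefined if empty.
  get(delay) {
    if (this.count === 0) return undefined;
    const available = Math.min(this.count, this.capacity);
    const d = Math.min(Math.max(delay, 0), available - 1);
    return this.frames[(this.count - 1 - d) % this.capacity];
  }
}
```

A tile assigned a delay of `d` would draw `history.get(d)` instead of the live frame. Increasing a tile's delay by one every other tick would make it play at roughly half speed until the buffer runs out.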