Solar Stereo Interface Demonstration from Mike Taylor on Vimeo.

Introduction

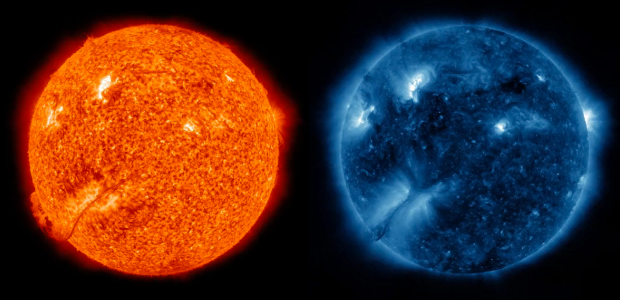

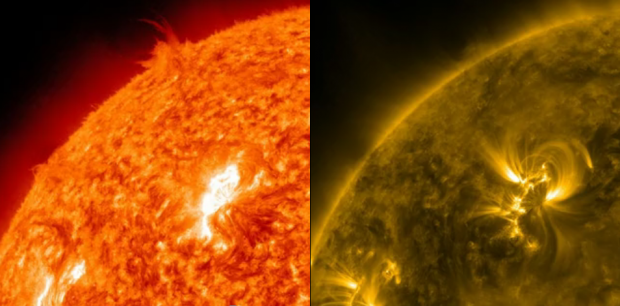

Through this project, I have attempted to create a new way of viewing high-resolution time-lapse imagery of the surface of the sun. The approach uses stereoscopic lenses to view two separate video streams from the Solar Dynamics Observatory (SDO). Stereoscopic imagery is typically used to make two-dimensional images appear three-dimensional. Here, I instead explore how two dissimilar images are combined in the mind into a single image when both eyes are open, yet separate into two images, one per video channel, when each eye is opened in turn. In the literature, the brain's behavior in situations like this is often referred to as “binocular rivalry.” For more information on binocular rivalry, see the Related Works section at the end of this post.

Implementation

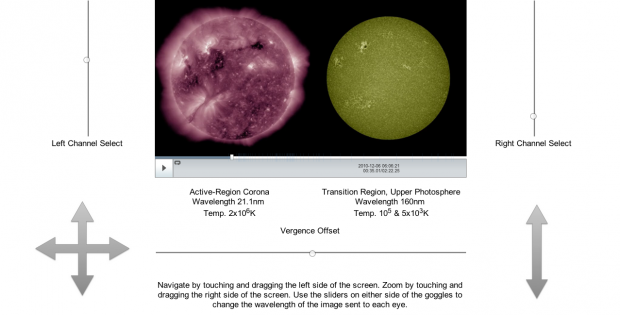

The dataset for this project consists of publicly available imagery from the Solar Dynamics Observatory. Because we are interested in exploring the imagery in great detail, we need to be able to pan, zoom, and move forward and backward in time. To this end, I use the GigaPan Time Machine API to embed zoomable time-lapse videos in an HTML5 document. One of the two viewers is designated as the master, and the second viewer's motions (x, y, zoom, play/pause, and discrete time changes) mirror those of the first. Each viewer has its own control for changing the wavelength being viewed, as described below.
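
The master/follower arrangement can be sketched as follows. This is a minimal illustration, not the project's code: the viewer objects are hypothetical in-memory stand-ins, and the method names (`getView`, `setView`, `getTime`, `seek`, `isPaused`, `play`, `pause`) and the `timeTolerance` parameter are assumptions rather than the actual GigaPan Time Machine API. Position, zoom, and play/pause state are copied on every call, while time is copied only when the two viewers drift apart by more than the tolerance, so that discrete jumps are mirrored without constantly re-seeking during playback.

```javascript
// Minimal sketch of master/follower mirroring between two zoomable viewers.
// ASSUMPTION: these viewers are hypothetical in-memory stand-ins; the method
// names are NOT the GigaPan Time Machine API.
function createMockViewer(name) {
  const state = { x: 0, y: 0, zoom: 1, time: 0, paused: true };
  return {
    name,
    getView: () => ({ x: state.x, y: state.y, zoom: state.zoom }),
    setView: (v) => { state.x = v.x; state.y = v.y; state.zoom = v.zoom; },
    getTime: () => state.time,
    seek: (t) => { state.time = t; },
    isPaused: () => state.paused,
    play: () => { state.paused = false; },
    pause: () => { state.paused = true; },
  };
}

// Copy x, y, zoom, and play/pause from master to follower on every call, but
// only seek when the times differ by more than timeTolerance (in the viewer's
// time units; the default is hypothetical), so discrete jumps are mirrored
// while normal playback is left alone. Wavelength is deliberately not copied.
function mirrorViewer(master, follower, timeTolerance = 0.5) {
  follower.setView(master.getView());
  if (master.isPaused() !== follower.isPaused()) {
    if (master.isPaused()) {
      follower.pause();
    } else {
      follower.play();
    }
  }
  if (Math.abs(follower.getTime() - master.getTime()) > timeTolerance) {
    follower.seek(master.getTime());
  }
}
```

In a browser, `mirrorViewer` would presumably be called whenever the master viewer reports a change (or on each animation frame); how that notification is exposed depends on the Time Machine API and is not shown here. The wavelength is left out on purpose, since each viewer selects its own layer.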

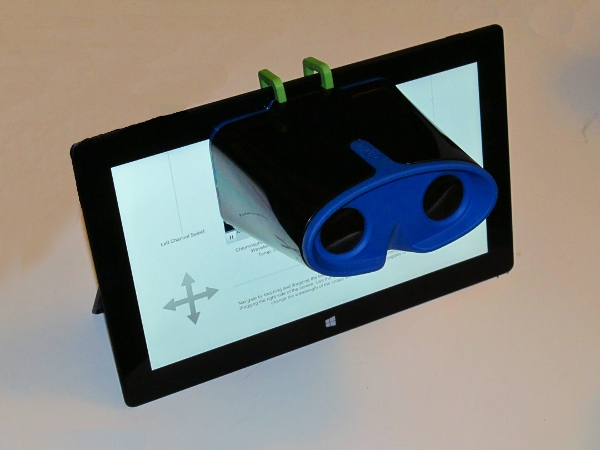

To demonstrate the proposed technique, I created a stereoscopic attachment and software interface for the Microsoft Surface Pro. The stereoscopic attachment is a modified Hasbro MY3D viewer, originally intended for use with dedicated apps on the iPod and iPhone. Removing the back cover of the MY3D and clipping two of its hinge tabs makes room for two custom rapid-prototyped brackets that mount the viewer on top of the Surface Pro. The STL files for these brackets are publicly available at https://github.com/SteelBonedMike/TMStereo.git.

Because eye spacing varies from person to person, and the images for each eye must converge properly, the interface needs an adjustable control for the vergence of the images. This amounts to changing the horizontal (x) offset, in pixels, between the images for the two eyes.
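
A vergence control of this kind might look like the following sketch. It assumes (hypothetically) that the two viewports sit side by side, each nominally centered in its own half of a landscape screen, and that vergence is applied by shifting each viewport by half the offset in opposite directions; the function name and the clamping rule are illustrative, not taken from the project.

```javascript
// Sketch of a vergence control for two side-by-side viewports.
// ASSUMPTIONS (hypothetical): each viewport is nominally centered in its half
// of the screen, and the vergence offset is split evenly between the two.
function viewportPositions(screenWidth, viewportWidth, vergenceOffsetPx) {
  const half = screenWidth / 2;
  if (viewportWidth > half) {
    throw new RangeError("each viewport must fit within half the screen");
  }
  // Largest offset that keeps both viewports on screen without overlapping.
  const limit = half - viewportWidth;
  const offset = Math.min(limit, Math.max(-limit, vergenceOffsetPx));
  // Nominal left edges with zero offset.
  const leftX0 = (half - viewportWidth) / 2;
  const rightX0 = half + leftX0;
  // Positive offsets move the images apart, negative offsets move them together.
  const shift = offset / 2;
  return { offset, leftX: leftX0 - shift, rightX: rightX0 + shift };
}
```

For example, on a 1920-pixel-wide landscape screen with 800-pixel viewports (an assumed size), an offset of 40 places the left viewport at x = 60 and the right one at x = 1060. Splitting the offset symmetrically keeps the pair centered on the screen, which seems preferable when the lenses are mounted at a fixed position.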

While the Time Machine interface supports panning and zooming with a cursor over the viewport, the MY3D unit sits over the screen and makes this difficult. To overcome this, I remapped the controls to regions of the touch screen. With the device held in landscape orientation, the bottom-left corner of the screen controls panning, and the bottom-right corner controls zooming in and out. At the top, immediately to the left and right of the viewer, are sliders mapped to the available wavelengths of solar imagery. Below each viewport, a short text describes the visible layer along with its temperature and wavelength.
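
The touch-region mapping can be expressed as a small hit-test function. The region boundaries below (bottom quarter for pan and zoom, outer 15% of the top half for the wavelength sliders) and the returned control names are assumptions made for illustration; the post does not give exact dimensions.

```javascript
// Hit-test a touch point against control regions on a landscape screen.
// ASSUMPTION: region sizes are illustrative fractions, not the project's layout.
function controlForTouch(x, y, width, height) {
  const fx = x / width;  // 0 = left edge, 1 = right edge
  const fy = y / height; // 0 = top edge, 1 = bottom edge
  if (fy >= 0.75) {
    // Bottom quarter: pan pad on the left, zoom pad on the right.
    if (fx < 0.25) return "pan";
    if (fx >= 0.75) return "zoom";
  } else if (fy < 0.5) {
    // Top half: wavelength sliders flank the goggles.
    if (fx < 0.15) return "leftWavelengthSlider";
    if (fx >= 0.85) return "rightWavelengthSlider";
  }
  return "none";
}
```

In a browser, the coordinates would come from a touch point's `clientX`/`clientY`, with `window.innerWidth` and `window.innerHeight` as the screen size.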

In the interest of cross-compatibility, the project is written in HTML5 and JavaScript, so most platforms with the Chrome web browser should be able to run the interface. On non-touch devices, the arrow keys pan the view, and the + and - keys zoom in and out, respectively.
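
The keyboard fallback amounts to a mapping from key values to viewer actions, sketched below. The key names are standard `KeyboardEvent.key` values; the pan step, zoom factor, action objects, and the extra `=` binding (on US layouts `+` shares a key with `=` and needs Shift) are assumptions, not details from the project.

```javascript
// Map KeyboardEvent.key values to viewer actions for non-touch devices.
// ASSUMPTIONS: step sizes and action objects are hypothetical; "=" is bound
// too because "+" usually requires Shift on the "=" key.
const PAN_STEP_PX = 50;
const ZOOM_STEP = 1.25;

function actionForKey(key) {
  switch (key) {
    case "ArrowLeft":
      return { type: "pan", dx: -PAN_STEP_PX, dy: 0 };
    case "ArrowRight":
      return { type: "pan", dx: PAN_STEP_PX, dy: 0 };
    case "ArrowUp":
      return { type: "pan", dx: 0, dy: -PAN_STEP_PX };
    case "ArrowDown":
      return { type: "pan", dx: 0, dy: PAN_STEP_PX };
    case "+":
    case "=":
      return { type: "zoom", factor: ZOOM_STEP };
    case "-":
      return { type: "zoom", factor: 1 / ZOOM_STEP };
    default:
      return null;
  }
}

// Browser wiring (not run here; applyAction is a hypothetical function that
// would forward the action to the master viewer):
// document.addEventListener("keydown", (event) => {
//   const action = actionForKey(event.key);
//   if (action) {
//     event.preventDefault();
//     applyAction(action);
//   }
// });
```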

Observations

Solar Stereography Use from Mike Taylor on Vimeo.

Experiences and reactions among viewers were much more varied than initially expected. For some, the images converged easily, while others had to adjust the vergence control significantly for the images to overlap properly. Separately, some viewers mainly saw only one of the two images even when the two were aligned. Many of these viewers did not even realize they were being presented with two separate images until told to close one eye and then the other. This appears to be related to two factors: the brightness of the wavelength being viewed, and whether the eye viewing it is strongly dominant. One technique for achieving the blending effect, though not always successful, is to swap which eye views which wavelength, so that the less dominant eye sees the brighter image. Certain wavelengths are also clearly more interesting than others, either by themselves or in combination. Wavelengths that brightly showed solar flares, the corona, and easily visible sunspots were often viewed longer than darker, more featureless ones.

Many viewers were convinced that the image was three-dimensional, even though no depth-enhancing stereoscopy was in effect. There is a slight distortion near the center of the viewer, most likely due to the curvature of the lenses in the MY3D device. In addition, there is a cultural expectation that stereoscopic attachments produce three-dimensional images, and because the sun is spherical and slowly rotating, it is easy to reconstruct mentally as a three-dimensional object.

Some users reported phenomena similar to those described in previous research. Specifically, several mentioned that the images appeared to flicker, even in the absence of motion. Others described sensations similar to “hypercolor,” in which seemingly impossible colors are perceived. Both effects can result from presenting the two eyes with similar images in opposing colors. At times, these sensations helped viewers identify surface features present in one or both of the images. Viewers could then determine which wavelength contained the features by alternately closing one eye and then the other.

Typical interaction times ranged from one to four minutes, with longer interactions generally coming from viewers who better understood the on-screen controls. Many viewers did not seem to realize that the image could pan, zoom, and change wavelengths until these features were explicitly pointed out to them. Most of these controls are labeled on the interface, but most people did not appear to read the labels.

The general response to the project was highly positive, with people describing the imagery as “amazing,” “beautiful,” and “surreal.” One user commented, “I never knew the sun moved like that.” Another said, “I felt big when I was in the goggles, but now I feel very small again.” To some degree, these reactions are a function of the SDO data itself, which is stunning in its own right. Another factor is likely the exploration in time and space made possible by the GigaPan Time Machine technology. A third factor, however, likely stems from the stereoscopic presentation, which (A) isolates the user from distractions while looking through the goggles and (B) reveals the interplay of various solar dynamics by presenting multiple views at once.

Conclusions

Throughout the initial exhibition, not a single user responded negatively to the project, though this alone does not show that the stereoscopic technique is universally effective. Users who saw only one image were likely still impressed by the ability to pan and zoom within the image they could see. That said, the number of people who perceived both images and continued to enjoy the experience suggests that this form of stereoscopy can be used effectively in at least some cases.

Several improvements should be made to the interface, and they generalize to similar experiments. First, the sliders should be made thicker, and thus harder to miss. Second, the instructions could be presented on an introductory screen, since people tended to go straight for the goggles without reading any of the on-screen text. Even after looking at the controls, they did not seem to read the labels, preferring instead to experiment with the sliders directly. Perhaps a tutorial mode of some sort is in order, though this may turn people away and may be difficult to implement in a gallery setting.

In retrospect, sliders were perhaps the wrong input type for changing wavelengths, since the data are not continuous. The wavelengths are ordered, but the spacing between them is uneven, and with only eight discrete wavelengths there is no intuitive mapping from slider position to wavelength. Because users tended to step through the wavelengths one at a time until reaching the imagery they wanted, simple up and down buttons may be the better input type for this interaction.
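
The proposed up/down buttons reduce to stepping through an ordered list with clamping, and a short calculation shows why a slider that is linear in wavelength maps poorly onto unevenly spaced values. The eight wavelengths below are real SDO AIA channels chosen for illustration only; the post does not say which eight layers the interface offered, and the function names are hypothetical.

```javascript
// Illustrative ordered layers in angstroms (SDO AIA channels); the actual
// eight layers used by the interface are not listed in the post.
const WAVELENGTHS_A = [94, 131, 171, 193, 211, 304, 335, 1600];

// Up/down button handler: move one layer at a time, clamped at both ends.
function stepWavelength(index, direction) {
  const next = index + (direction === "up" ? 1 : -1);
  return Math.min(WAVELENGTHS_A.length - 1, Math.max(0, next));
}

// Where a wavelength would sit on a slider that is linear in wavelength,
// as a fraction of the slider's travel (0 = left end, 1 = right end).
function linearSliderPosition(wavelength) {
  const lo = WAVELENGTHS_A[0];
  const hi = WAVELENGTHS_A[WAVELENGTHS_A.length - 1];
  return (wavelength - lo) / (hi - lo);
}
```

With these values, `linearSliderPosition(335)` is about 0.16, so seven of the eight layers would be crowded into the first sixth of a value-linear slider. A slider that snaps to the eight indices avoids the crowding but still implies an even spacing the data do not have, which is one argument for stepping buttons.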

The informational text for each wavelength might also receive more attention if placed beside each viewport rather than underneath it. Although the viewer does not obstruct the text below, people tended to pay less attention to that part of the screen. A bounding box highlighting the text might also help. Noticing and understanding this text is crucial to the goal of the project, which is to help people understand the interplay between solar dynamics at different wavelengths. Without the descriptions to provide context, much of this understanding can be lost.

The stereoscopic presentation described in this post shows promise for future installations. With the suggested improvements and more focus on interface design, dissimilar stereoscopy could offer a new, useful, and engaging way to explore solar data and similar imagery.

Acknowledgements

This work was completed in fulfillment of the requirements for Interactive Art and Computational Design, Spring 2013, taught by Professor Golan Levin in the Frank Ratchye STUDIO for Creative Inquiry. I would also like to acknowledge Paul Dille, the CREATE Lab, and the rest of the GigaPan Time Machine team for their help integrating Time Machine viewers into this project. Finally, this work was made possible by the publicly available data from the Solar Dynamics Observatory.

Related Works

- Alper, Basak, Tobias Hoellerer, JoAnn Kuchera-Morin, and Angus Forbes. “Stereoscopic Highlighting: 2D Graph Visualization on Stereo Displays.” IEEE Transactions on Visualization and Computer Graphics 17.12 (2011): 2325-2333. Print.

- Kovacs, Ilona, Thomas V. Papathomas, Ming Yang, and Akos Feher. “When the Brain Changes Its Mind: Interocular Grouping during Binocular Rivalry.” PNAS 93.26 (1996): 15508-15511. JSTOR. Web. 7 May 2013. <http://www.jstor.org/stable/40907>.

- Liu, Lei, Christopher W. Tyler, and Clifton M. Schor. “Failure of Rivalry at Low Contrast: Evidence of a Suprathreshold Binocular Summation Process.” Vision Research 32.8 (1992): 1471-1479. Print.

- Roth, Heidi L., Andrea N. Lora, and Kenneth M. Heilman. “Effects of Monocular Viewing and Eye Dominance on Spatial Attention.” Brain 125 (2002): 2023-2035. Print.

- “SDO.” GigaPan Time Machine. CREATE Lab, n.d. Web. 8 May 2013. <http://timemachine.gigapan.org/wiki/SDO>.

- “Solar Dynamics Observatory.” SDO. NASA, Goddard Space Flight Center, n.d. Web. 8 May 2013. <http://sdo.gsfc.nasa.gov/>.

- Zhang, Haimo, Xiang Cao, and Shengdong Zhao. “Beyond Stereo: An Exploration of Unconventional Binocular Presentation for Novel Visual Experience.” Proc. of CHI 2012, Austin, Texas. ACM, 2012. 2523-2526. Print.