Banner Design by Aderinsola Akintilo

Video:

Loop Findr from Collin Burger on Vimeo.

Tweet:

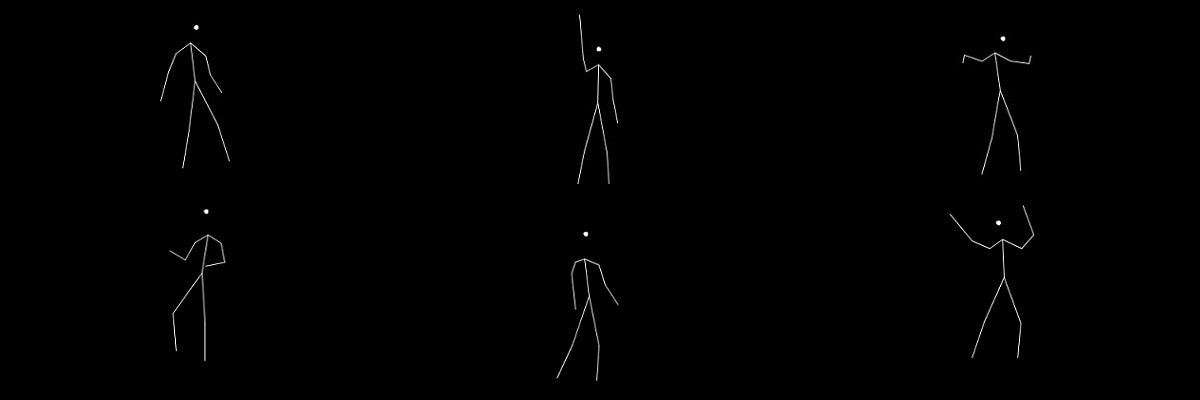

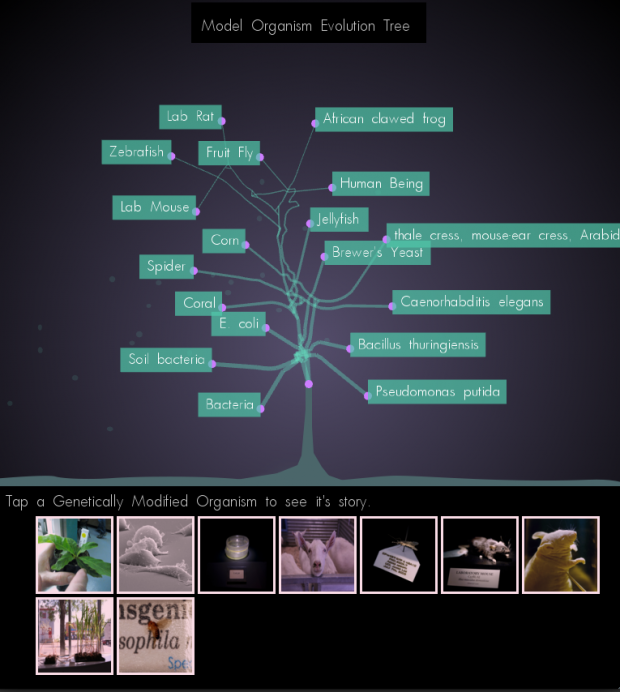

Loop Findr is a tool that automatically finds loops in videos so you can turn them into seamless gifs.

Blurb:

Since their creation in 1987, animated GIFs have become one of the most popular means of expression on the Internet. They have evolved into their own artistic medium due to their ability to capture a particular feeling and the format’s portable nature. Loop Findr seeks to usher in a new era of seamless GIFs created from loops found in the videos that populate the Internet. Loop Findr is a tool that automatically finds these loops so users can turn them into GIFs that can then be shared all over the Web.

Narrative:

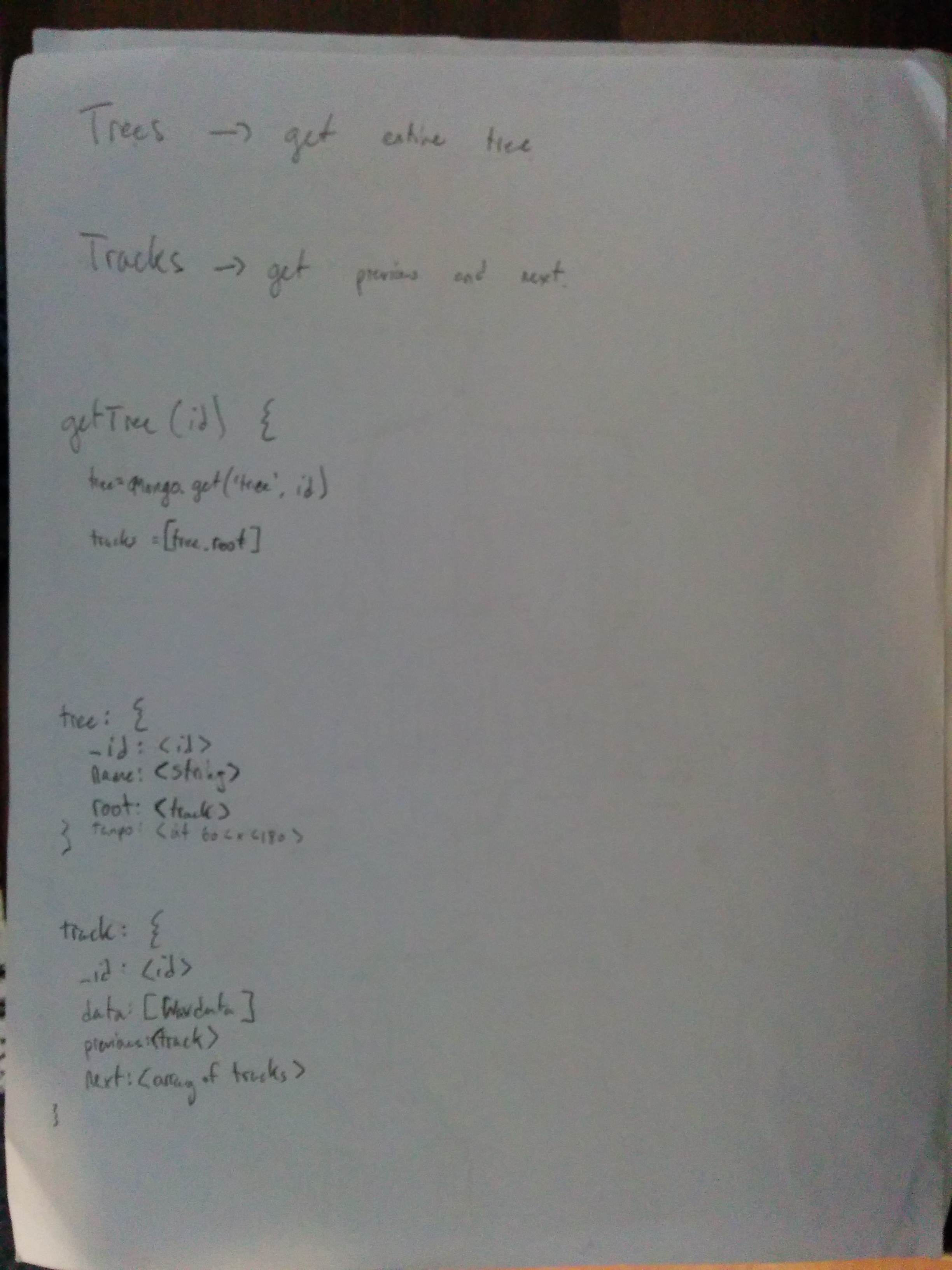

Inception:

The idea for Loop Findr came about during a conversation with Professor Golan Levin about research into pornographic video detection, in which the researchers analyzed the optical flow of videos in order to detect repetitive reciprocal motion. During this conversation, the idea emerged of using optical flow to detect and extract repetitive motion in videos, along with its potential for automatically retrieving nicely looped, seamless GIFs.

Research:

Professor Levin and I devised an algorithm for detecting loops based on finding periodicity in a sparse sampling of the optical flow of pixels in videos. After doing some research, I was inspired by the pixel difference compression method employed by the GIF file format specification. It became clear to me that for a GIF to appear to loop without any discontinuity, the pixel difference between the first and final frames must be relatively small.

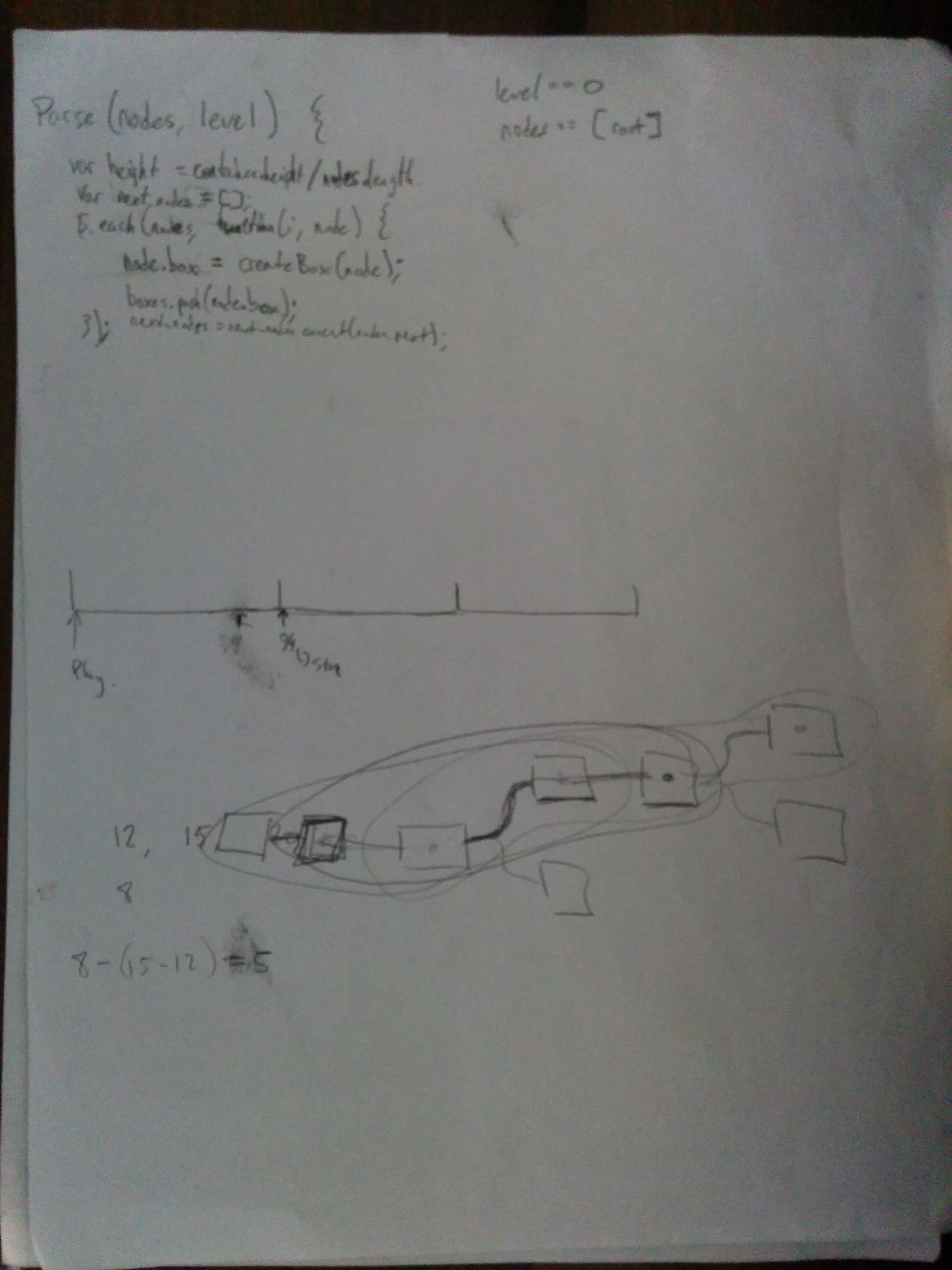

Algorithm:

After performing the research, I decided to implement the loop detection by analyzing the percent pixel difference between video frames. This is done by keeping a ring buffer filled with video frames that have been resized to sixty-four by sixty-four pixels and converted to greyscale. For each potential start of a loop, the percent pixel difference is calculated against every frame within the acceptable loop length range. Before the comparison, the mean intensity value of the starting frame is subtracted from both the starting frame and each of the potential ending frames. If the percent pixel difference is below the accuracy threshold specified by the user, then those frames constitute the beginning and end of a loop. If the percent pixel difference between the first frame of a new loop and the first frame of the previously found loop is within the accuracy threshold, then the one with the greater percent pixel difference is discarded.

Additionally, minimum and maximum movement thresholds can be activated and adjusted. The minimum threshold disregards video sequences without movement, such as title screens, and the maximum threshold disregards parts of the video with discontinuities, such as cuts or flashes. The metric used to estimate the amount of movement is similar to the one used to detect loops, but in this case the percent pixel difference is accumulated over all frames in the potential loop.
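The steps described above can be sketched in a few dozen lines. This is only an illustration under stated assumptions, not the code used in Loop Findr, which is an openFrameworks application; Python is used here for brevity. Frames are assumed to be already downscaled, greyscale, and flattened into lists of 0 to 255 intensities. The exact formula for the percent pixel difference is not given above, so the sketch assumes the mean absolute difference as a percentage of full intensity, with the mean subtraction clamped at zero (without clamping, the subtracted mean would simply cancel out of the difference). The movement metric is assumed to be the sum of differences between consecutive frames, and only the best-matching end frame is kept for each start. The names `percent_pixel_difference`, `cumulative_movement`, and `find_loops` are hypothetical.

```python
from collections import deque
from itertools import islice


def percent_pixel_difference(start, end):
    """Percent difference between two equal-sized greyscale frames.

    The start frame's mean is subtracted from both frames, clamped at
    zero (an assumption; the source does not spell out the arithmetic).
    """
    mean = sum(start) / len(start)
    total = 0.0
    for a, b in zip(start, end):
        total += abs(max(0.0, a - mean) - max(0.0, b - mean))
    return 100.0 * total / (255.0 * len(start))


def cumulative_movement(frames):
    """Sum of percent differences between consecutive frames (assumed metric)."""
    frames = list(frames)
    return sum(percent_pixel_difference(a, b) for a, b in zip(frames, frames[1:]))


def find_loops(frames, min_len, max_len, threshold,
               min_movement=None, max_movement=None):
    """Return a list of (start_index, end_index, difference) tuples."""
    source = iter(frames)
    window = deque()     # ring buffer, holds at most max_len + 1 frames
    loops = []
    prev_start = None    # start frame of the most recently kept loop
    start_index = 0
    while True:
        # Top the buffer up, if the video has enough frames left.
        window.extend(islice(source, max_len + 1 - len(window)))
        if len(window) <= min_len:
            break
        start = window[0]
        best = None
        for offset in range(min_len, len(window)):
            diff = percent_pixel_difference(start, window[offset])
            if diff >= threshold or (best is not None and diff >= best[2]):
                continue
            move = cumulative_movement(islice(window, offset + 1))
            if min_movement is not None and move < min_movement:
                continue  # too static, e.g. a title screen
            if max_movement is not None and move > max_movement:
                continue  # too abrupt, e.g. a cut or a flash
            best = (start_index, start_index + offset, diff)
        if best is not None:
            duplicate = (prev_start is not None and
                         percent_pixel_difference(prev_start, start) <= threshold)
            if not duplicate:
                loops.append(best)
                prev_start = start
            elif best[2] < loops[-1][2]:
                loops[-1] = best  # keep whichever loop closes more tightly
                prev_start = start
        window.popleft()
        start_index += 1
    return loops
```

Calling `find_loops(frames, min_len=2, max_len=5, threshold=1.0)` on a sequence of flattened frames returns the index pairs whose first and last frames nearly match. Passing `min_movement` or `max_movement` enables the corresponding movement filter.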

Development:

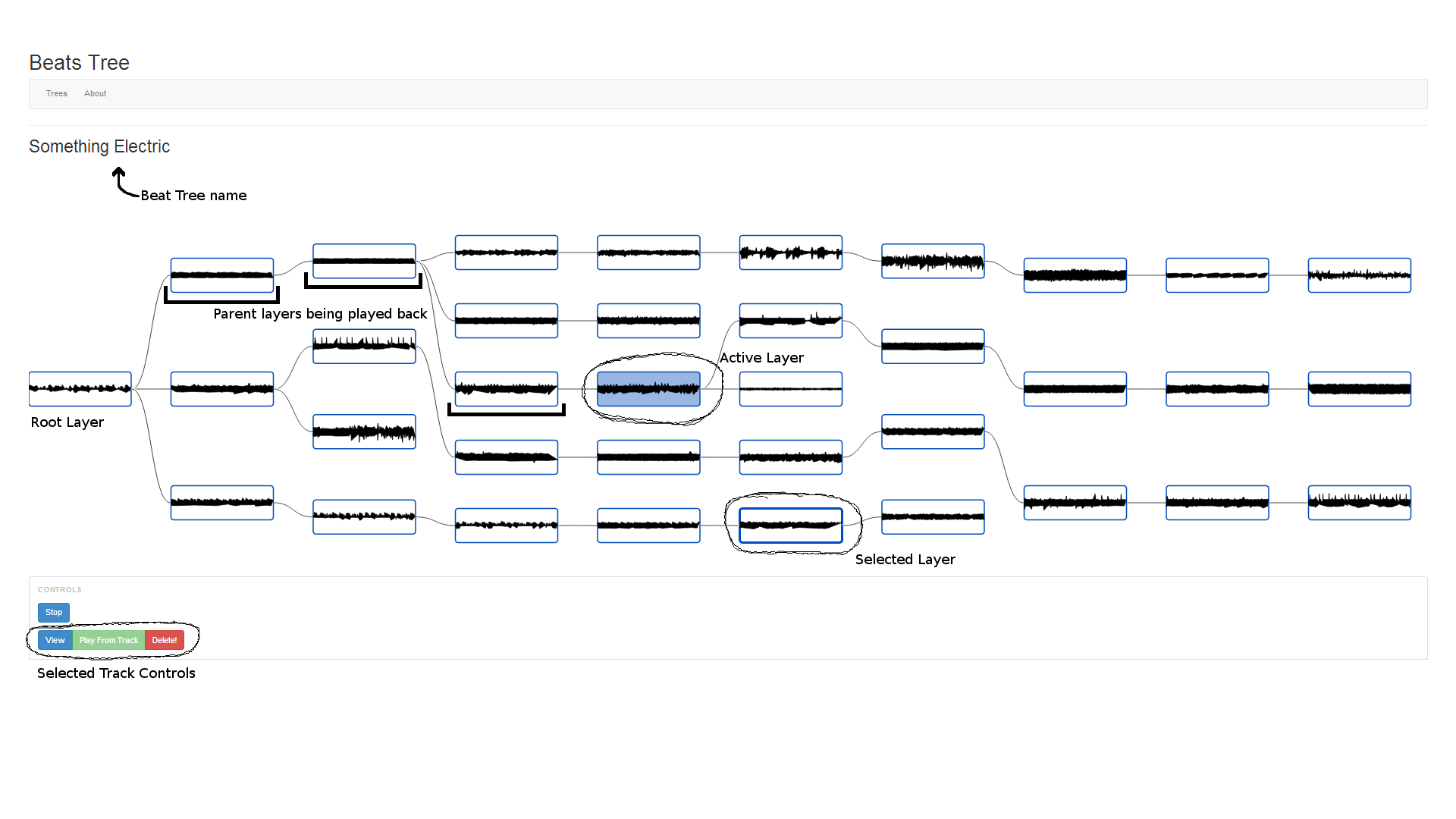

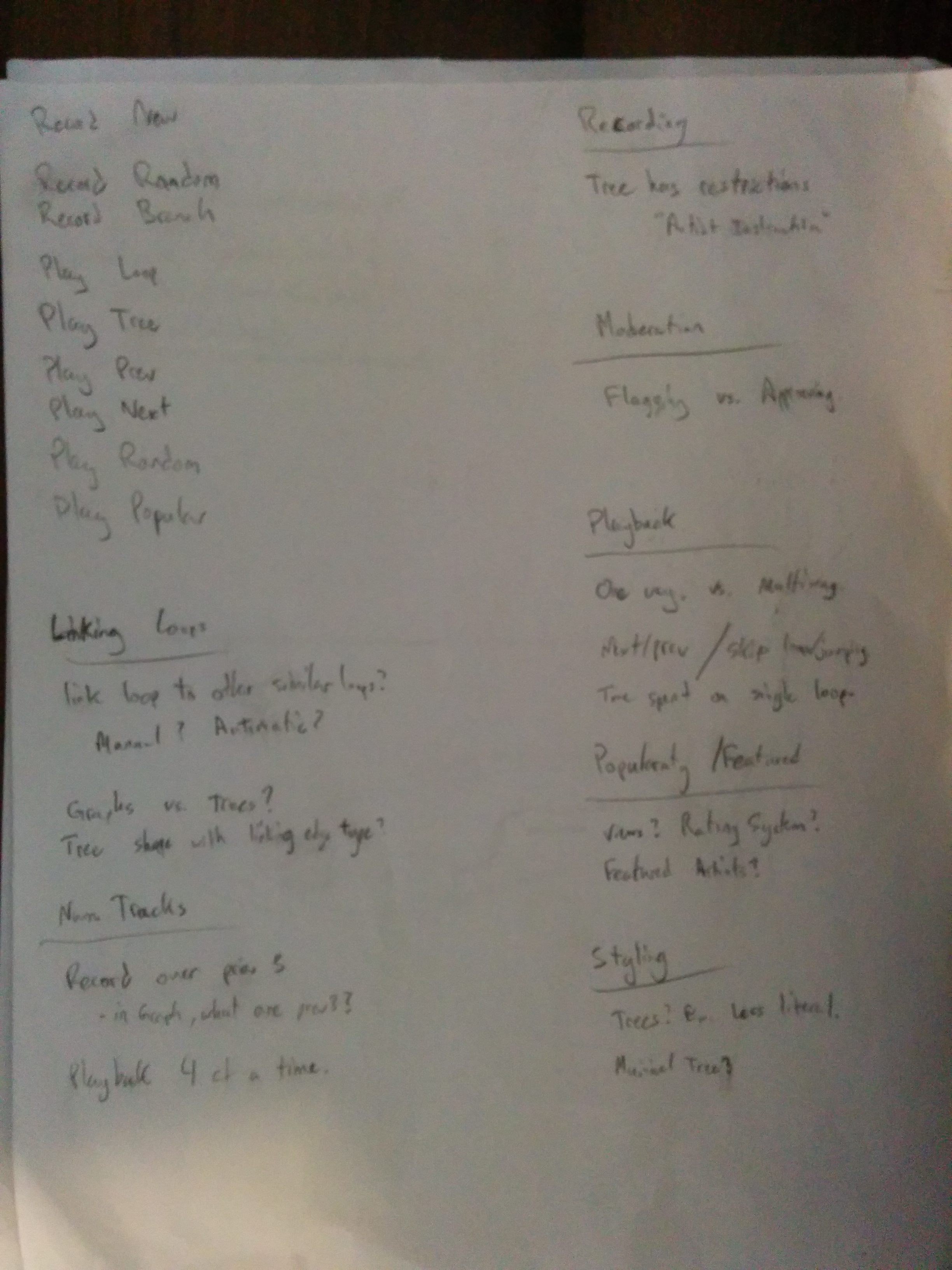

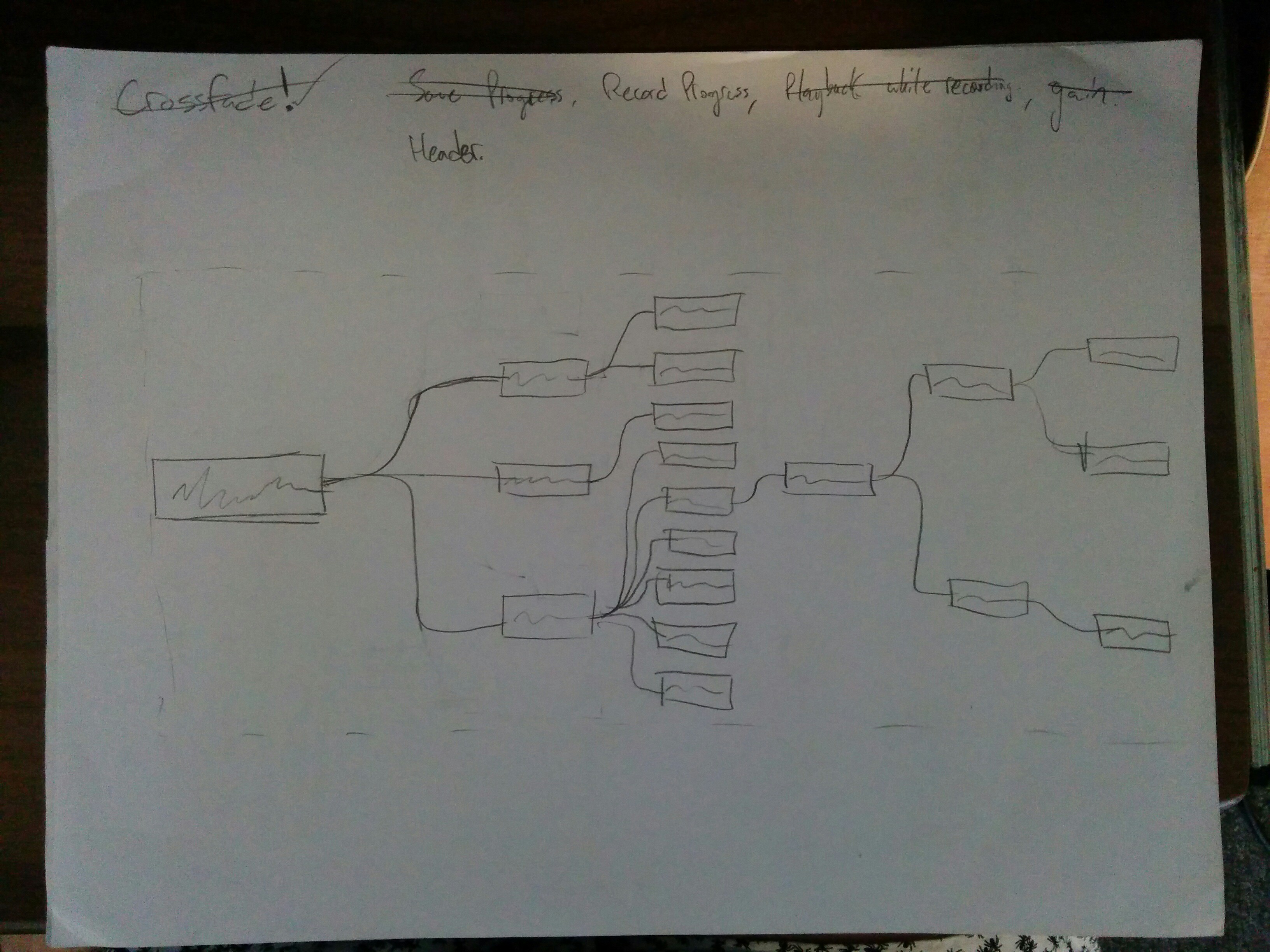

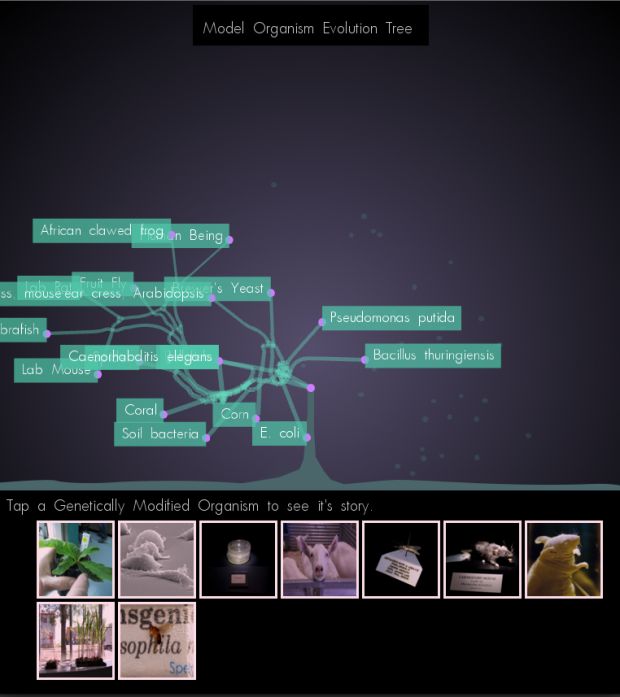

There was approximately a forty-eight-hour span between deciding to take on the project and having a functioning prototype with the basic loop detection algorithm in place. Therefore, the vast majority of the time spent on development was dedicated to optimization and creating a fully featured user interface. The galleries below show the progression of the user interface.

The first version of Loop Findr simply displayed the current frame that was being considered for the start of a loop. Any loops found were appended to the grid at the bottom right of the screen. Most of the major features were in place, including exporting GIFs.

- loopFindr v1 Screen 1

- loopFindr v1 Screen 2

The next iteration came with the addition of ofxTimeline and the ability to easily navigate to different parts of the video with the graphical interface. The other major addition was the ability to refine the loops found by moving the ends of the loops forward or backward frame by frame.

- loopFindr v2 Screen 1

- loopFindr v2 Screen 2

In the latest version, the biggest change came with moving the processing of the video frames to an additional thread. The advantage of this was that it kept the user interface responsive at all times. This version also cleaned up the display of the found loops by creating a paginated grid.

- loopFindr v3 Screen 1

- loopFindr v3 Screen 2
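The threading change in the latest version can be illustrated with a minimal sketch. It is not the openFrameworks code from Loop Findr; it only shows the general pattern, in Python, of a worker thread that analyzes frames and hands results back through a queue while the main loop keeps running. The names `process_frames`, `run_with_worker`, and `analyse` are hypothetical, and the `ui_ticks` counter stands in for drawing the interface.

```python
import queue
import threading


def process_frames(frames, analyse, results):
    """Worker: analyse each frame and hand the result to the main thread."""
    for index, frame in enumerate(frames):
        results.put((index, analyse(frame)))
    results.put(None)  # sentinel: no more frames


def run_with_worker(frames, analyse):
    """Run the analysis on a second thread while the main loop keeps ticking."""
    results = queue.Queue()
    worker = threading.Thread(
        target=process_frames, args=(frames, analyse, results), daemon=True
    )
    worker.start()

    collected = []
    ui_ticks = 0
    done = False
    while not done:
        ui_ticks += 1  # stands in for drawing one frame of the interface
        # Drain whatever results are ready without ever blocking the loop.
        while True:
            try:
                item = results.get_nowait()
            except queue.Empty:
                break
            if item is None:
                done = True
                break
            collected.append(item)
    worker.join()
    return collected, ui_ticks
```

Because the main loop only polls the queue with `get_nowait`, it never waits on the analysis, which is the property that keeps an interface responsive.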

Future Work:

Rather than focus on improving this openFrameworks implementation of Loop Findr, I will investigate the potential of implementing a web-based version so that it might reach as many people as possible. I envision a website where users can supply a YouTube link and have any potential loops extracted and given back to them. Additionally, I would like to combine the algorithm with some web crawling to find loops in video streams on the Internet, or perhaps just scrape popular video hosting websites for loops.