Tagline

ofxCorrectPerspective: Makes parallel lines parallel, an OF add-on for automatic 2D rectification

Abstract

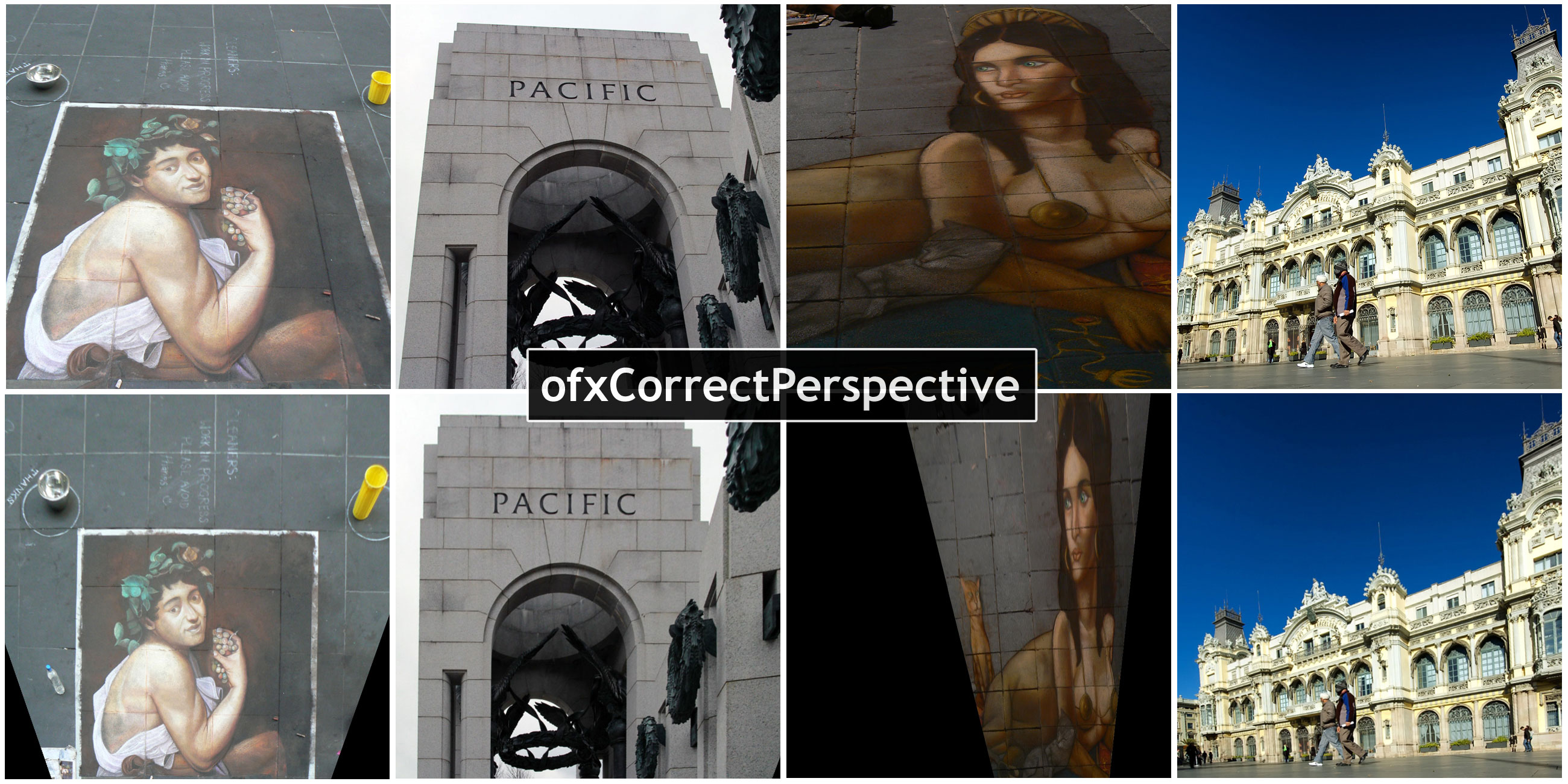

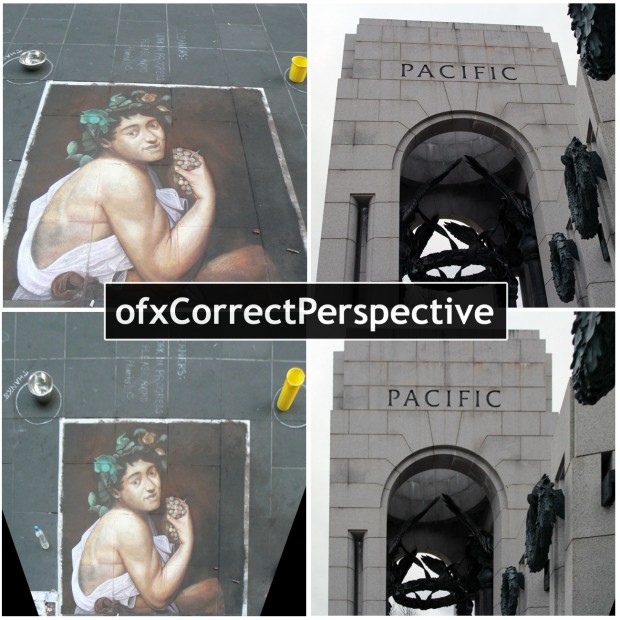

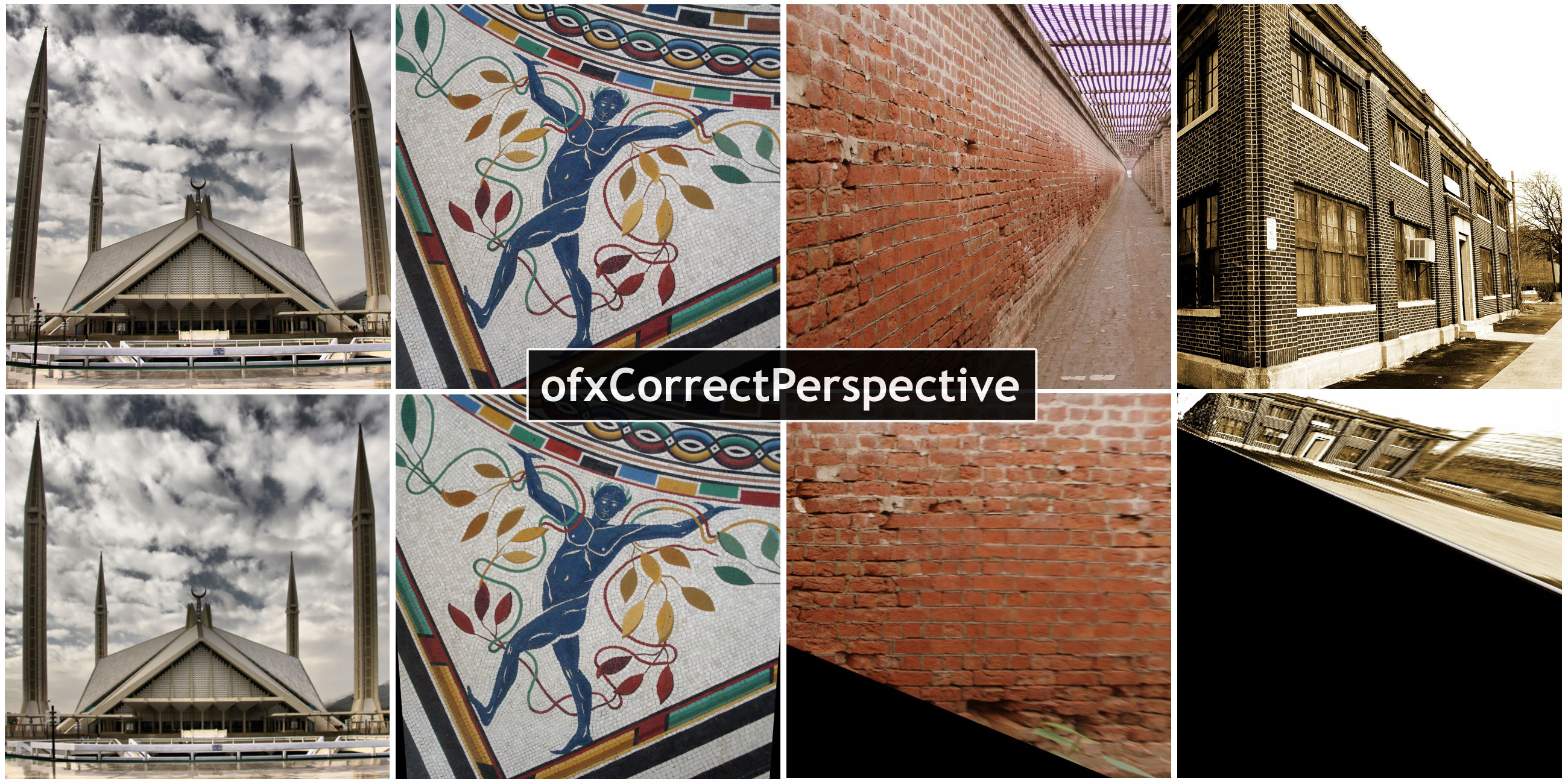

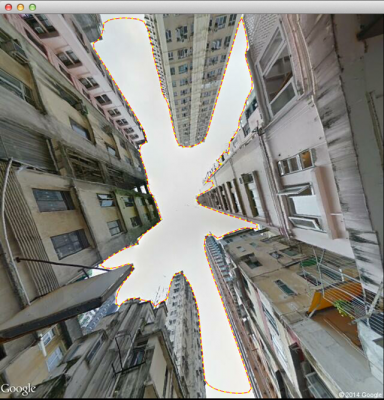

ofxCorrectPerspective is an openFrameworks add-on that performs automatic 2D rectification of images. It is based on “Shape from Angle Regularity” by Zaheer et al., ECCV 2012. Unlike earlier methods of perspective correction, it does not require any user input (provided the image has EXIF data). Instead, it relies on the geometric constraint of ‘angle regularity’, which leverages the fact that man-made designs are dominated by the 90-degree angle. It solves for the camera tilt and pan that maximize the number of right angles, which yields the fronto-parallel view of the most dominant plane in the image.

2D image rectification involves finding the homography that maps the current view of an image to its fronto-parallel view. It is usually required as an intermediate step in other applications, for example to create disparity maps from stereo camera images, or to project onto planes that are not orthogonal to the projector. Current techniques for 2D image rectification typically require the user either to manually input corresponding points between stereo images, or to adjust tilt and pan until the desired image is obtained. ofxCorrectPerspective aims to remove this manual step.
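For readers less familiar with the term, a homography acts on homogeneous pixel coordinates as a single 3×3 matrix multiplication followed by a division. The notation below ($H$, $h_{ij}$, $(x, y)$ for the input pixel and $(u, v)$ for the rectified pixel) is chosen here for illustration and is not taken from the add-on:

$$
\begin{pmatrix} x' \\ y' \\ w' \end{pmatrix}
=
\begin{pmatrix}
h_{11} & h_{12} & h_{13} \\
h_{21} & h_{22} & h_{23} \\
h_{31} & h_{32} & h_{33}
\end{pmatrix}
\begin{pmatrix} x \\ y \\ 1 \end{pmatrix},
\qquad
(u, v) = \left( \frac{x'}{w'}, \frac{y'}{w'} \right)
$$

Since $H$ is only defined up to scale, it has eight degrees of freedom in general. Rectification amounts to choosing $H$ so that $(u, v)$ are coordinates in the fronto-parallel view.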

How it Works

ofxCorrectPerspective automatically solves for the fronto-parallel view without requiring any user input (provided EXIF data is available for the focal length and camera model). Based on work by Zaheer et al., it uses ‘angle regularity’ to rectify images. Angle regularity is a geometric constraint which relies on the fact that in the structures around us (buildings, floors, furniture, etc.), straight lines tend to meet at a particular angle, predominantly 90 degrees. If we know which pairs of lines meet at this angle, we can use the ‘distortion of this angle under projection’ as a constraint to solve for the camera tilt and pan that result in the fronto-parallel view of the image.
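As a rough illustration of this constraint, the sketch below assumes a pinhole camera with focal length `f` in pixels and the principal point at the image origin, and models tilt and pan as rotations about the x and y axes. All function names and the sample values are hypothetical and do not come from the add-on. It distorts a right-angled corner with a known tilt and pan, then applies the inverse rotation as a homography of the form K R K⁻¹ and checks that the right angle comes back.

```python
import math

def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(a):
    """Transpose of a 3x3 matrix (the inverse, for a rotation)."""
    return [[a[j][i] for j in range(3)] for i in range(3)]

def rotation(tilt, pan):
    """Rx(tilt) * Ry(pan), angles in radians (an assumed convention)."""
    ct, st = math.cos(tilt), math.sin(tilt)
    cp, sp = math.cos(pan), math.sin(pan)
    rx = [[1.0, 0.0, 0.0], [0.0, ct, -st], [0.0, st, ct]]
    ry = [[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]]
    return matmul(rx, ry)

def rotation_homography(r, f):
    """H = K R K^-1 with K = diag(f, f, 1), principal point at the origin."""
    k = [[f, 0.0, 0.0], [0.0, f, 0.0], [0.0, 0.0, 1.0]]
    k_inv = [[1.0 / f, 0.0, 0.0], [0.0, 1.0 / f, 0.0], [0.0, 0.0, 1.0]]
    return matmul(matmul(k, r), k_inv)

def warp(h, pt):
    """Apply homography h to a 2D point, including the perspective divide."""
    x, y = pt
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (u / w, v / w)

def corner_angle_deg(a, corner, b):
    """Angle at `corner` between the rays corner->a and corner->b, in degrees."""
    v1 = (a[0] - corner[0], a[1] - corner[1])
    v2 = (b[0] - corner[0], b[1] - corner[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cos_angle = dot / (math.hypot(*v1) * math.hypot(*v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

f = 800.0  # hypothetical focal length in pixels
# A right-angled corner as it would appear in the fronto-parallel view.
a, corner, b = (100.0, 0.0), (0.0, 0.0), (0.0, 100.0)

# Simulate a camera tilted by 20 degrees and panned by -30 degrees.
r_cam = rotation(math.radians(20), math.radians(-30))
observed = [warp(rotation_homography(r_cam, f), p) for p in (a, corner, b)]

# Undo the rotation: the rectifying homography uses the inverse rotation.
h_rectify = rotation_homography(transpose(r_cam), f)
rectified = [warp(h_rectify, p) for p in observed]

observed_angle = corner_angle_deg(*observed)
rectified_angle = corner_angle_deg(*rectified)
print(round(observed_angle, 1), round(rectified_angle, 1))
```

In this toy setup the rotation is known in advance. In the rectification problem it is the unknown: a solver would look for the tilt and pan that bring as many candidate corners as possible close to 90 degrees.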

To find these pairs of lines, ofxCorrectPerspective starts by detecting line segments using LSD (Line Segment Detector, R. Grompone von Gioi et al.). It then extends these segments, for robustness against noise, and computes an adjacency matrix. This adjacency matrix tells us which pairs of lines to consider as ‘probably’ orthogonal to each other. After finding these probable pairs, ofxCorrectPerspective uses RANSAC to separate the inlying pairs from the outlying ones. For a candidate tilt and pan, an inlier pair is one whose angle ends up close to a right angle after rectification. Finally, the best RANSAC solution, the one supported by the most inliers, gives the tilt and pan required for rectification.
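The line-extension and adjacency step might look roughly like the sketch below. The extension fraction, the crossing test used to decide adjacency, and all function names are assumptions made for illustration; the add-on's actual criteria may differ. Segments are plain endpoint pairs, standing in for whatever the line detector returns.

```python
def extend(segment, fraction):
    """Lengthen a segment at both ends by `fraction` of its own length."""
    (x1, y1), (x2, y2) = segment
    dx, dy = (x2 - x1) * fraction, (y2 - y1) * fraction
    return ((x1 - dx, y1 - dy), (x2 + dx, y2 + dy))

def cross(o, a, b):
    """Z-component of (a - o) x (b - o); its sign tells which side b lies on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def segments_cross(s, t):
    """True if the two segments properly cross each other."""
    p, q = s
    r, u = t
    return (cross(p, q, r) * cross(p, q, u) < 0 and
            cross(r, u, p) * cross(r, u, q) < 0)

def adjacency(segments, fraction=0.2):
    """Symmetric boolean matrix: True where two extended segments cross."""
    extended = [extend(s, fraction) for s in segments]
    n = len(extended)
    adj = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if segments_cross(extended[i], extended[j]):
                adj[i][j] = adj[j][i] = True
    return adj

# Toy input: a horizontal edge, a vertical edge that just misses it,
# and a distant horizontal edge parallel to the first.
segments = [
    ((0.0, 0.0), (10.0, 0.0)),
    ((11.0, -1.0), (11.0, 9.0)),
    ((0.0, 50.0), (10.0, 50.0)),
]
adj = adjacency(segments)
adj_unextended = adjacency(segments, fraction=0.0)
print(adj[0][1], adj[0][2], adj_unextended[0][1])
```

The first two segments only become a candidate pair once they are extended, which is the kind of small gap that detection noise tends to produce. Parallel segments never cross, so they are excluded without a separate angle check.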

Possible Applications

ofxCorrectPerspective can be used on photos, similar to how you’d use a tilt-shift lens. It can compute the rectifying homography for a stereo image pair, to speed up the process of finding disparity maps. This homography can also be used to correct an image projected by a projector that is not orthogonal to the screen. ofxCorrectPerspective can robustly remove perspective from planar images, such as a sheet of paper photographed with a phone camera. It produces some interesting artifacts as well: for example, it renders a camera tilt or pan as a zoom (as shown in the demo video).

Limitations & Future Work

ofxCorrectPerspective works best on images that have a dominant plane with a set of lines or patterns on it. It also works on multi-planar images, but usually ends up rectifying only one of the visible planes, as ‘angle regularity’ is a local constraint. One way to control which plane is rectified would be to apply some form of segmentation to the image before running it through this add-on (as done by Zaheer et al.). Another would be to let the user select a particular area of the image as the plane to be rectified.

About the Author

Haris Usmani is a grad student in the M.S. Music & Technology program at Carnegie Mellon University. He did his undergrad in Electrical Engineering at LUMS, Pakistan. In his senior year at LUMS, he worked at the CV Lab, where he came across this publication.

www.harisusmani.com

Special Thanks To

Golan Levin

Aamer Zaheer

Muhammad Ahmed Riaz