Overview

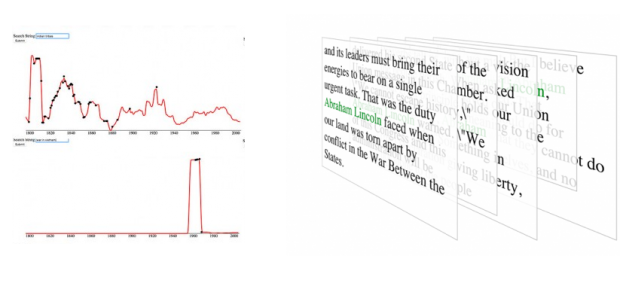

A Theatrical Device is an original play seen through the front-facing cameras of the laptops, desktops and cellphones used by the actors. These devices provide multiple, simultaneous perspectives on the same timeline across several rooms, following the progression of different characters. Audiences find their own way through the play by exploring it in a 3D virtual reality world (via the Oculus Rift).

Inspiration

It’s been fewer than five years since high-quality front-facing cameras became standard on cell phones and tablets, and it’s no secret that a lot of our private lives are spent in front of them. The widespread fear that hackers or the NSA might be spying on us through our webcams speaks to just how much you could learn about someone from the perspective of their devices. As a theatre artist interested in new media, I find this fascinating. I immediately wondered what kinds of stories one could tell with this very new and politically charged medium, and what opportunities arose from the fact that these cameras were attached to handheld consumer devices: props that would blend seamlessly into the interior of a scene and perfectly cloak numerous cameras capturing the same action concurrently. In conventional filmmaking, having many cameras inside a scene at once, none of them visible in the others’ shots, is an impossible dream. Modern devices, however, do this effortlessly. It raises the question: now that we have this platform, what can we actually do?

I decided I would have a short play seen from the perspectives of cell phones held by the characters, who would interact with them as their own personal devices. The phones would be pointed realistically rather than stylistically (for one, I’m not a cinematographer; I wouldn’t even know where to start pointing cameras). These shots would be concurrent and offer multiple views of the same timeline. Ideally, each perspective would reveal different information, so that audiences who watched the piece from different perspectives would walk away with different opinions on who the protagonist had been and what had motivated the characters. I also decided it would be interactive: audiences would be able to select and switch the device from which the story was being viewed.

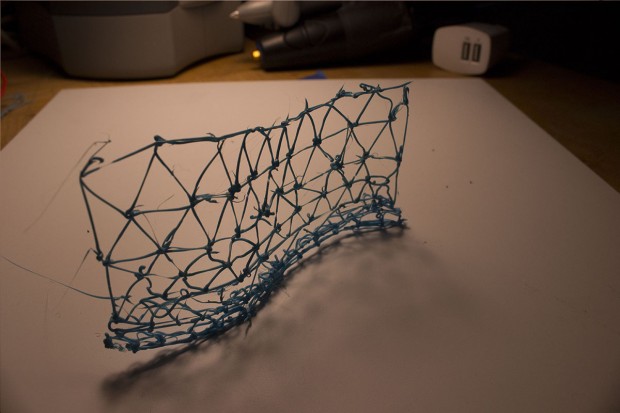

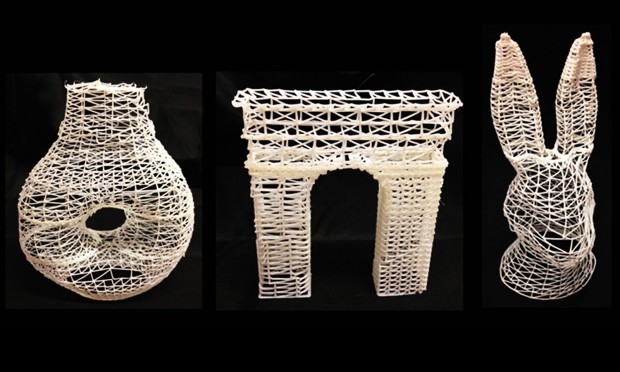

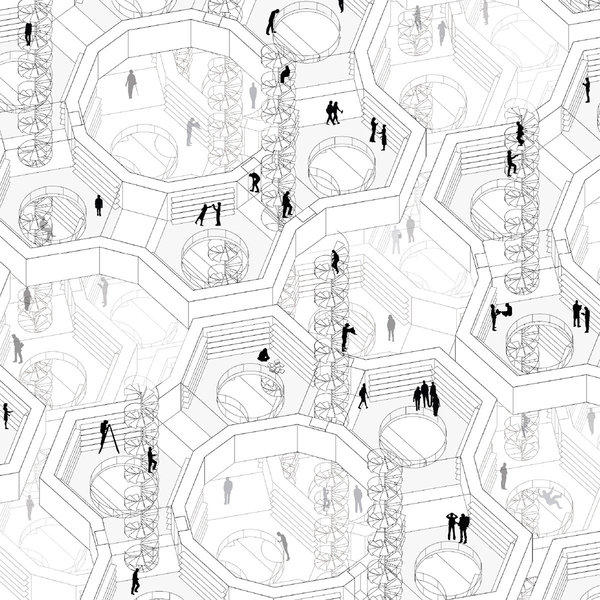

This, then, posed the challenge of how the audience would interact with these choices. To be honest, I was mostly inspired by the prospect of writing my first VR application. In any case, I decided that a virtual world would offer more versatility for designing an interface than the physical one. At its best, it would allow me to represent my footage in ways that would not be possible in the physical world. At the very least, it would give me the tools to create any kind of 3D installation I wanted without having to worry about practicality or expense. Naturally, this is something we never get to do in theatre, so that was exciting.

Finally, I decided the script would be an original one, written to be told across multiple rooms through the devices’ cameras. The project, then, had three components: writing the story, shooting the film, and creating a VR world to view it in.

Story

I ended up spending a lot of time deliberating over what sort of story would be aided by my platform. I wanted something where following different characters would lead to drastically different views of what the play had been about, yet the threads would tie together in a way that wouldn’t feel awkward. Furthermore, it needed a cast made up exclusively of college-age characters (it was unlikely I could find actors of any other age) and needed to be set somewhere accessible. Finally, there needed to be some core narrative, some takeaway that could demonstrate that the platform was allowing new kinds of stories to be told.

At the end of the day, playwriting is hard, and my attempt was amateur at best. But I think what I came up with could be a promising start:

The play’s action centers on a group of college friends who, after two years, are visiting the campus and the home of their friend and former classmate, Hattie. Hattie failed to graduate with the rest of them and still struggles with her classes following a severe emotional breakdown. Hattie, her former classmate David and her abrasive ex-boyfriend James all begin the play in the living room (two other characters, Jaz and Charlie, enter later). The action separates, however, when James makes a comment that “triggers” Hattie, and she feels compelled to retreat to a bedroom. An audience member watching Hattie might sympathize with her trauma as it is revealed and perhaps consider the end of the play a tragedy. From the vantage point of James or David, however, the play is more about dealing with an unwanted emotional outburst in a casual setting, what it means to be sensitive, and the challenges of understanding someone’s invisible anguish. James wonders whether his philosophies on sensitivity actually do him any good, while David struggles to balance conflicting philosophies about how to interact with women. Actors move between rooms, and generally four of the five characters are split between two active rooms. Each of these storylines then culminates in the final scene, back in the living room. Running time is approximately 20 minutes.

I tried to give the characters excuses to interact with their phones: Hattie uses hers as a mask for her tears, James is waiting for his girlfriend to text back, Jaz sends instructive texts to James, and Jaz, James and David all look things up on their phones to show to others.

All in all, this setup seemed like a decent way of introducing devices, movement and selective narrative into my scene. I liked how a tragedy from one character’s perspective might feel like a comedy from another’s (honestly, mental illness is an easy way to get this sort of thing across). I’d love to write another script where this dynamic is more extreme.

Shooting

In the end, my script called for five actors and was set in a home that was conveniently a dead ringer for my own. I initially hired five actors for a total of two or three rehearsals and a shooting day over the course of a week. During this time, one actor broke a leg, one dropped out, and one was sick enough that she fell asleep and missed the shoot. As a director, I’m supposed to anticipate these things, but in any case, it made the short production schedule a headache.

I thought a lot about how to direct the motion of the cameras. I decided on having the actors pilot them with some amount of verisimilitude, not making attempts to “get shots” or position the phones in front of faces. Sometimes, they’re pointed at the ceiling and only capture audio. This worked well for my tight rehearsal schedule, and also led to some interesting discoveries.

The main challenge of shooting with a bunch of devices (all of which were provided by either me or the cast) was balancing synchronization, battery life and memory. In a given take, actors in multiple rooms all had to get the content right, and in the final hours of shooting we’d frequently finish a great take only to discover that a device had run out of memory or battery. Memory was a problem I could have foreseen and accommodated better. In the end, we only had enough room across all our storage for a little over one take of each scene, which meant that whatever we ended up with had to be workable.

To some extent, having an actress get sick at the last minute was nice, because I got inserted into the scene and could watch its progress. Otherwise, I would have had to stay out of sight of the cameras, which is probably reasonable for a film director but, as a theatre director, weirded me out.

I was lucky enough to have a very easygoing, flexible cast, who were willing to undergo the trials and tribulations of working with a weird, crude platform. What they left me with at the end of the day was roughly one decent take of each of the four scenes, and synchronization editing to be done in post.

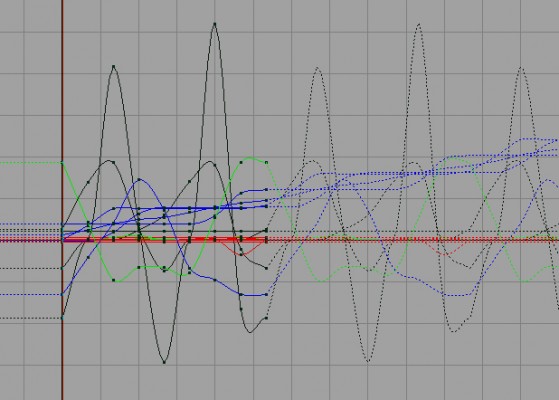

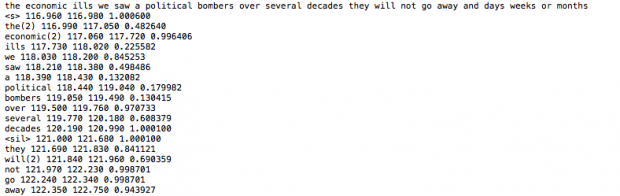

The editing to synchronize all the videos with each other was definitely a pain. It involved a lot of comparing pairs of videos from the same scene and making sure their audio tracks matched up. In one case, we were forced to film the two rooms of a scene separately, which was annoying to edit because the middle section of one recording ran longer than in the other. In the future, it’d be nice to build apps for these devices that synchronize their recordings against each other automatically.
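The alignment described above was done by hand. One common way to automate it, offered here only as a hedged illustration rather than the process used for this film, is to cross-correlate the audio of two recordings and pick the lag with the highest score. The Python sketch below assumes both tracks are already decoded to mono sample lists at the same sample rate; the function names `correlation_at` and `estimate_delay` are hypothetical.

```python
from typing import Sequence


def correlation_at(ref: Sequence[float], other: Sequence[float], lag: int) -> float:
    """Dot product of ref[i] with other[i + lag] over the samples where both exist."""
    total = 0.0
    for i, r in enumerate(ref):
        j = i + lag
        if 0 <= j < len(other):
            total += r * other[j]
    return total


def estimate_delay(ref: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Return the lag (in samples) at which `other` best matches `ref`.

    A positive result means the same sound occurs `lag` samples later in
    `other` than in `ref`, so trimming that many samples from the start of
    `other` lines the two recordings up. A negative result means `other`
    started recording later, and `ref` should be trimmed instead.
    """
    return max(range(-max_lag, max_lag + 1),
               key=lambda lag: correlation_at(ref, other, lag))


if __name__ == "__main__":
    import random

    rng = random.Random(0)
    room_a = [rng.gauss(0.0, 1.0) for _ in range(400)]  # stand-in for real audio samples
    room_b = [0.0] * 12 + room_a                       # same sound, 12 samples later
    print(estimate_delay(room_a, room_b, max_lag=50))   # 12
```

Dividing the returned lag by the sample rate gives the offset in seconds. This brute-force loop costs O(N × max_lag); for real recordings an FFT-based correlation is the usual faster choice. It also assumes the two clips run at the same speed throughout, so a scene whose middle section ran longer in one room would still need to be aligned piecewise.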

Virtual World

I’d never written a VR application before, and the freedom of having any set my mind could dream up was initially overwhelming; I struggled to decide what I wanted. I needed to figure out a way audiences could interface with multiple perspectives that would justify using VR, and when I asked myself what interfaces are possible in a VR world that can’t be done in the physical one, I came up empty. I think VR gives us a lot of potential to interface with media in ways that would not have been possible previously, and that its future holds a lot of interesting new interactions. However, a lack of either experience or imagination prevented me from coming up with something that seemed both truly novel and actually helpful.

I settled on modeling my world after a multi-room gallery, where each room represents a scene. Each room would have TV sets on the walls, one for each device’s footage, and giving focus to a screen would play its associated audio. The rooms differed, ranging from a cozy setup that modeled the play’s own living room to GIANT rooms full of towering TV sets (which ended up feeling genuinely monstrous, a cool effect).

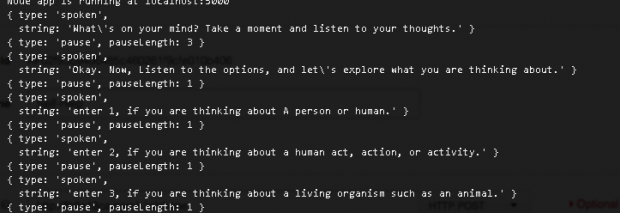

One major technical challenge was memory. Unity is notorious for using terrible black magic to play video. I was warned early in development that having more than five videos active at once might prove problematic, and I quickly discovered that turning on audio for a clip would cause it to stall for about five seconds after loading so it could be properly synced (this is, of course, because Unity videos have no way of seeking to a timestamp, because such features would be ridiculous). These presented some interesting performance challenges. I ended up playing video only in the room the user was in, restarting the scene whenever a new room was entered, which saved me from having to deal with so many videos at once. For audio selection, my solution was to preload all the audio and set each stream to mute; on devices that can’t handle this much media at once, I have a feature that preloads the audio during the selection process. While video memory was definitely a struggle at the beginning of the project, this setup caused me approximately no problems.
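The playback strategy above can be modeled independently of Unity. The Python below is a hypothetical sketch of that bookkeeping, not the project’s actual code: it only tracks flags, and the names `Stream`, `GalleryMedia`, `enter_room` and `select_screen` are invented for illustration. It encodes the two rules from the paragraph: only the current room’s videos play, restarting from the top when the room is entered, and every screen’s audio exists from the start and stays muted, so choosing a screen only flips mute flags.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Stream:
    """Playback state for one video or audio clip (no real media is loaded here)."""
    name: str
    playing: bool = False
    muted: bool = True
    position: float = 0.0  # seconds into the scene


class GalleryMedia:
    """Hypothetical model: one room's videos play at a time; all audio is preloaded and muted.

    Assumes screen names are unique across rooms.
    """

    def __init__(self, rooms: Dict[str, List[str]]) -> None:
        self.rooms = rooms  # room name -> screen names on its walls
        screens = [s for names in rooms.values() for s in names]
        self.video = {s: Stream(s) for s in screens}
        # Every screen's audio exists from the start, muted, so selection never loads anything.
        self.audio = {s: Stream(s) for s in screens}
        self.current_room: Optional[str] = None

    def enter_room(self, room: str) -> None:
        # Stop and mute everything belonging to the room being left.
        if self.current_room is not None:
            for s in self.rooms[self.current_room]:
                self.video[s].playing = False
                self.audio[s].playing = False
                self.audio[s].muted = True
        # Restart the new room's scene from the top so its streams share one clock.
        for s in self.rooms[room]:
            for stream in (self.video[s], self.audio[s]):
                stream.position = 0.0
                stream.playing = True
            self.audio[s].muted = True
        self.current_room = room

    def select_screen(self, screen: str) -> None:
        if self.current_room is None or screen not in self.rooms[self.current_room]:
            raise ValueError(f"{screen!r} is not in the current room")
        # Unmute the chosen screen's audio and mute the rest of the room.
        for s in self.rooms[self.current_room]:
            self.audio[s].muted = s != screen

    def playing_videos(self) -> List[str]:
        return [name for name, v in self.video.items() if v.playing]
```

In this model, selecting a screen never loads anything, which is the point of preloading: the loading cost is paid up front rather than at the moment the audience picks a screen, at the price of holding every audio stream in memory.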

The question of how audiences select video was an interesting one. I learned that a common method for VR apps is a cursor controlled by the user’s head. I chose to have a cursor that, when directed at a TV screen, would load that screen’s audio once the user had been pointing at it for more than a few seconds. This made it harder to select a video accidentally, and it also gave me time to preload audio when I needed to. All in all, I thought it was a good option.
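A dwell-time selector like the one described can be written as a small per-frame state machine. This Python sketch is an illustration under stated assumptions rather than the project’s code: `DwellSelector` and `update` are hypothetical names, the 2-second threshold is a placeholder for “a few seconds”, and a real Unity app would obtain the hit screen from a raycast along the head direction each frame.

```python
from typing import Optional


class DwellSelector:
    """Commits a selection once the head-gaze cursor has rested on one screen long enough.

    `dwell_seconds` (hypothetical default 2.0) stands in for "a few seconds".
    """

    def __init__(self, dwell_seconds: float = 2.0) -> None:
        self.dwell_seconds = dwell_seconds
        self.target: Optional[str] = None    # screen currently under the cursor
        self.elapsed = 0.0                   # time the cursor has stayed on that screen
        self.selected: Optional[str] = None  # last screen whose audio was chosen

    def update(self, hit: Optional[str], dt: float) -> Optional[str]:
        """Call once per frame with the screen the cursor ray hits (or None).

        Returns the screen name on the frame a new selection is made, else None.
        """
        if hit != self.target:
            # Cursor moved to a different screen (or off all screens): restart the timer.
            self.target = hit
            self.elapsed = 0.0
            return None
        if hit is None or hit == self.selected:
            return None  # nothing to select, or already playing this screen's audio
        self.elapsed += dt
        if self.elapsed >= self.dwell_seconds:
            self.selected = hit
            return hit
        return None
```

Resetting the timer whenever the cursor leaves a screen is what makes accidental selection hard: a passing glance never accumulates enough time. The same timer is also a natural place to begin preloading a screen’s audio before the selection commits.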

Developing with Unity was an interesting new challenge. I’d originally had no exposure to game engine programming, and learned a lot during this experience. I hope to continue to learn about what experiences and behaviors are possible in this space.

Future Work

At the end of the day, so much about this platform and process was new to me that I saw it very much as a first draft. Since it all went pretty well, I’d love to try it again with another iteration. First, I’d love to revise my script, integrating what I discovered about how the many narratives were communicated. Second, I’d love to better organize my shooting process to avoid the deadly expense of phones running out of memory or battery, as well as the time spent synchronizing in post. Finally, I’d love to create a less amateur VR world and use the technology at hand to create truly novel ways to experience media.

I wasn’t sure when I began how interesting or effective this platform would be. Now, I feel like it has a ton of power and potential. I’ll upload the final version of this iteration soon, but I hope to have something even better in the future.

Inspired by Jorge Luis Borges’ short story “