This was mostly an analytical project where I wrangled with much of the Teenie Harris archive and went from having a 1.5 TB unusable database to a manipulable database with some metadata.

Warmup

I received a 1.5 TB hard drive from the Carnegie Museum of Art with 60k digitized Teenie Harris images in tif format. Most were about 25MB in size – way too much to manipulate and do image processing on. My first task was to convert the database into a more manageable database.

I used the ImageMagick library to create 1024-, 640-, and 256-pixel PNG versions of the images for everyone else to use across a variety of use cases

Image Prep

With the lower-res images I ran two t-SNEs on a few “boxes” of Teenie Harris images that the museum had digitized. Apparently they digitized the older images first and preserved a naming convention that highlighted what images were stored together.

My first t-SNE was 6000×6000 pixels wide, comprising 60×60 images. Wordpress of course can’t load such an image:

Here, I found all the athletes grouped into a corner, as well as most of the portraits:

I also ran a second t-SNE 7000×7000 pixels wide and analyzes 70×70 images.

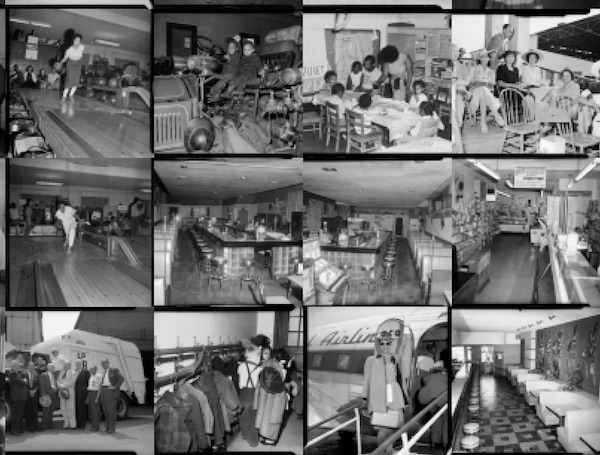

But you can see interesting clusters – all the quiet streets were groups together, as well as a subtle association of bowling alleys and empty diners.

Data Prep

For this project I wanted to test out a few computer vision libraries and see if I could add some metadata to the archive about objects and OCR tags in the text.

I used the Google Computer Vision library which offers text detection, and label detection if you run the label detection particularly on high resolution images around 640×640 pixels in size. It took me three days to collect the data (sending image data back and forth to google takes its time) but I ended up assembling it into a series of JSON files to be found here:

https://github.com/irealva/teenie-harris-archive-explorations/tree/master/image-data

Presentation

Finally, as an analysis tool I created a simple application that compiled all the tags across the 60,000 Teenie images and loads them efficiently into the browser. Below you will find a few examples of the sorts of tags and results that I compiled.

Unfortunately for now I will refrain from hosing a version of this app (with better UI) since that would require hosting all of the 60k images online as well. The repo for the code as well as a concatenated json with all of the data can be found here:

https://github.com/irealva/teenie-harris-archive-explorations/tree/master/visualize-images

As a next step, I’d like to explore the OCR data I collected – perhaps extracting all the image sections with text and running a tSNE on those particular image sections.

Example: searching for string instruments

A less accurate example of “film noir” label + an accurate example of “classic car” label.

Opening up the developer tools shows the image paths and names associated to each label.

Lessons learned

Any kind of data manipulation on 60k images or data points takes quite a while – even on powerful computers. It was useful to account for long hours and sometimes even days to process all the data.

I’d like to explore analyzing this data with other vision APIs to understand what kind of data each one might have been trained on. It’s not even clear how Google’s API might be different or similar to their Photos clustering service. On first notice, it seems like both services are using different models because the labels produced are quite different. Google Photos looks for similarities across photos – which might lead to better results. All in all, it’s a good reminder that all models have biases and one has to account for them when picking the right API/algorithm to tag your data.