I am interested in making a simple, poetic intervention (a kind of noise or corruption) in the transactional, capitalist systems of desire production embedded in our everyday (digital) communications.

Here are a few pieces of research I have found that explore this:

Rice describes this project, Drone Triptychs, as follows:

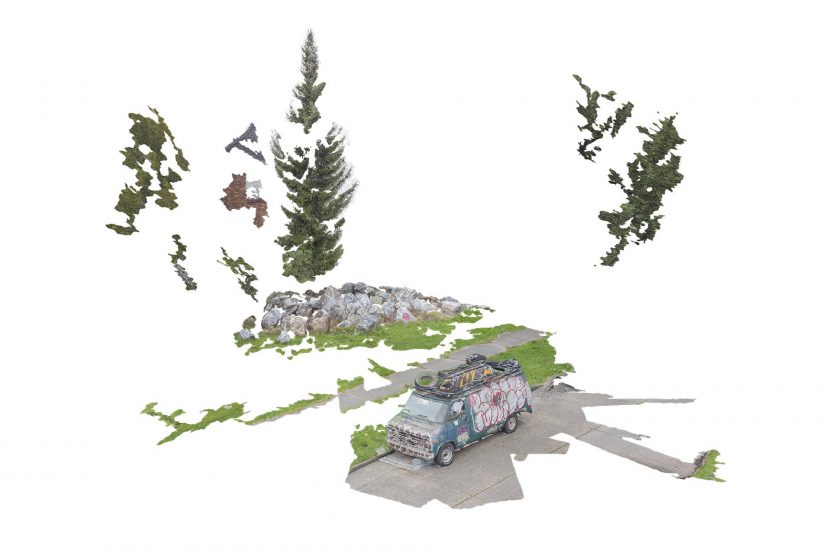

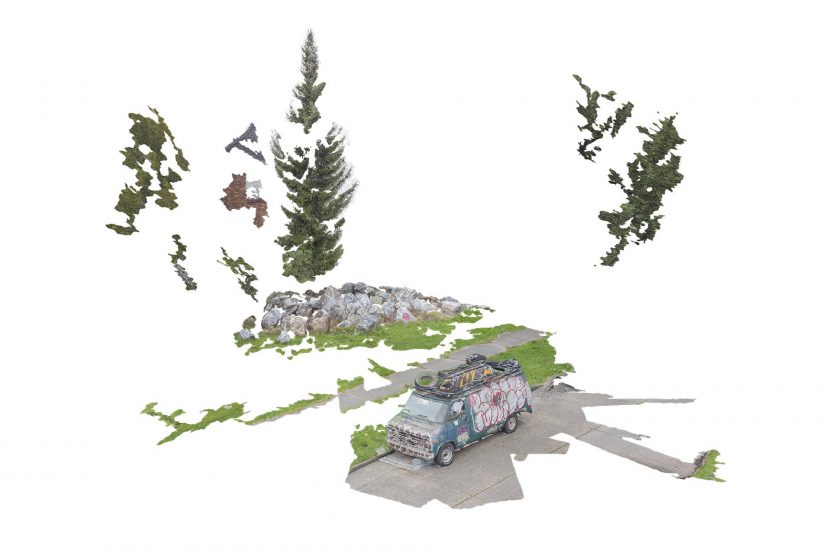

“These images and texts represent Rice’s studies of Seattle’s rapid change. As many sites and landscapes in the city disappear, a new kind of visuality emerges: one shaped by economic forces, the influx of tech, and developments that often favor these interests rather than those of the diverse communities that call Seattle home.

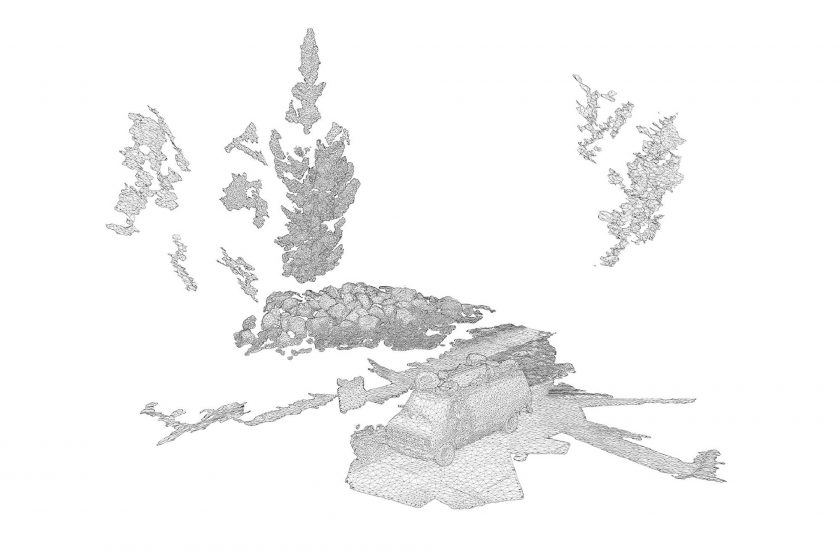

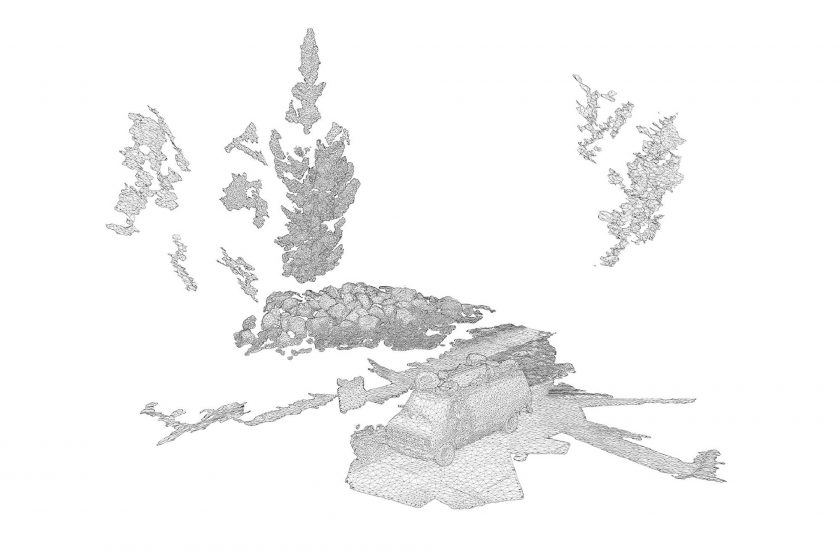

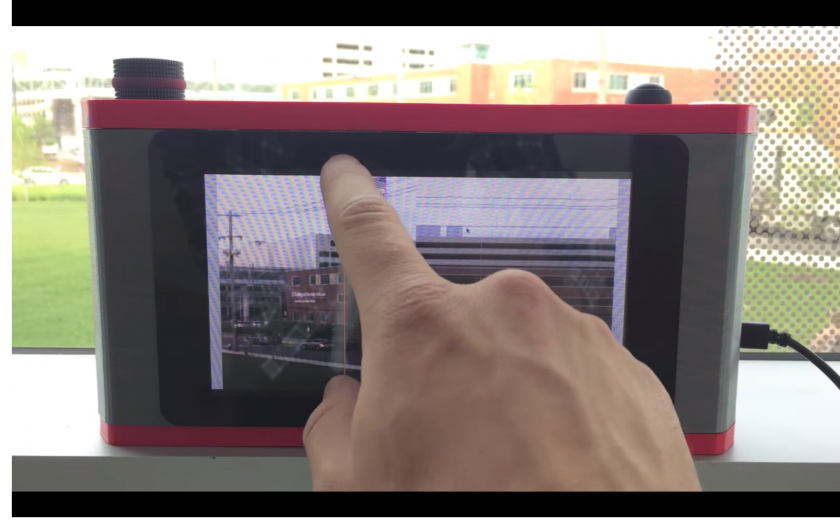

In Drone Triptychs, these scenes and locations are explored through a digital process – photogrammetry – which generates a virtual 3D model by analyzing hundreds of two-dimensional photos. In order to access all possible perspectives, many of the photos were captured using a drone, an airborne camera funded by 4Culture’s 2015 Tech Specific grant.

The models that result from photogrammetry can then be scaled, rotated, inverted, animated, textured, or rendered as a wireframe. This act of virtualizing a space, which often creates a glitchy, hollow, or flattened shell of the original site, seems similar to many of the large-scale image-making processes at work in the city: regrading, demolition, faux preservation, façadism.

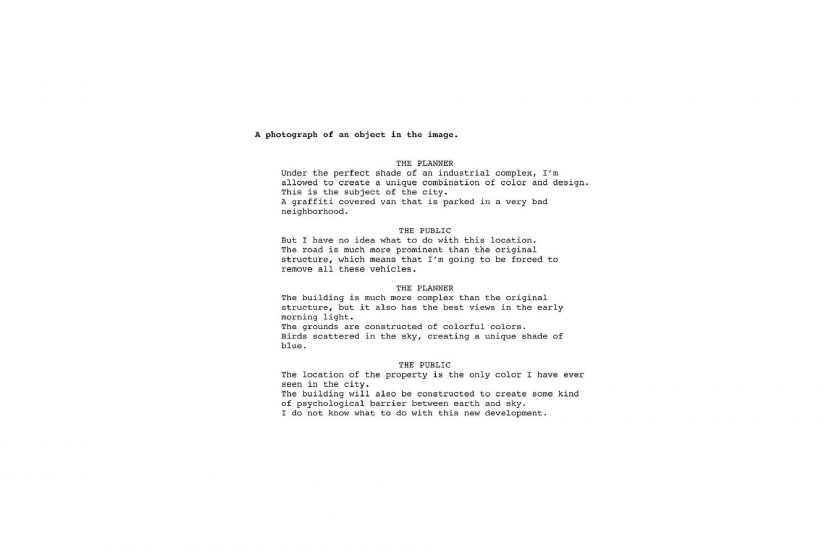

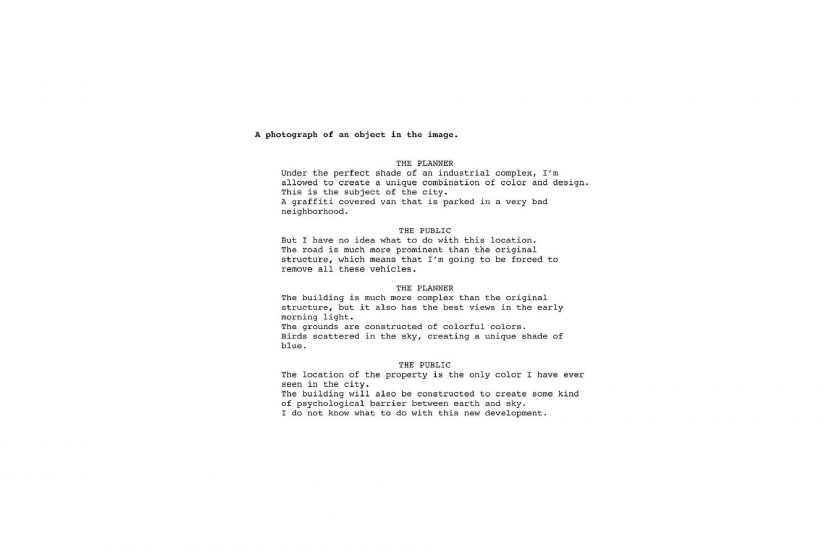

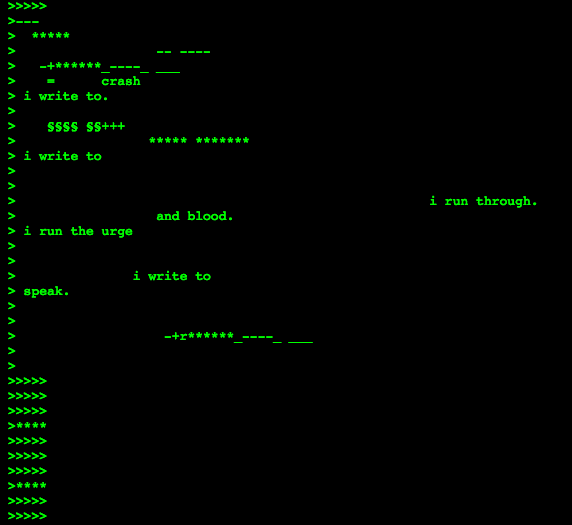

The accompanying texts further explore a virtual or uncanny representation of Seattle’s image. Working in collaboration with Google AMI – Artists and Machine Intelligence, a computer was trained to “speak” by analyzing over 250,000 documents from Seattle’s Department of Planning and Development. Ranging from design proposals and guidance, to public comments and protest, the vocabulary that resulted from this training was used by the software to automatically generate captions and short stories about each photo. In these stories, the “voices” of city planners and the public are put into a virtual dialogue (or argument) with each other as they describe each scene.”

What I enjoy about this project is how it pairs disappearing visual landscapes with a poetic reinterpretation of the very language that is driving their disappearance.

Fifteen Unconventional Uses of Voice Technology

This is an interesting article Golan showed me about a course exploring creative uses of voice technology. The GitHub repository and syllabus for the class are full of interesting resources.

–Objects summoned in VR by voice in Aidan Nelson’s “Paradise Blues”–

tell an optimistic and uplifting experience.

This gives them a videogame-like third-person perspective of themselves and the world. I find this idea very interesting because I often perceive myself differently in videos and memories than I do in the moment, and because people behave differently when they can see themselves (as in a mirror) than when they can’t. I’d be curious how people feel about wearing this, and how it affects their interactions with others (besides the obvious effects of walking around in a backpack and VR headset…).