Note: I HAVE CHANGED MY IDEA, but here is the Looking Outwards I wrote for my old one.

Sleeping Speech Idea

Telematic Artwork! If you’re having a good conversation, why let sleep stop the fun?

Potential tools:

- OpenAI's GPT-2 text generator (thank you, Gray, for the idea)

- iOS / Android Studio / web

- Firebase

#1 Dreaming like Mad

Dion McGregor was a prolific sleep talker whose nighttime musings were so complex and bizarre that his friend made it his lifelong project to record his sleep talking. Decades later, the tapes resurfaced in the form of a mixtape!

“Dion never actually intended the world to hear his sleep mumbles, instead they were recorded by his songwriting partner Michael Barr, who was fascinated by them. And now the album has now been re-released, 50 years on, by Torpor Vigil Records, along with more recordings of Dion’s sleep stories.” – Dan Wilkinson @ Vice

“When Milt Gabler of Decca was interviewed, he called the album ‘one of the biggest flops I ever put out!’” – Dan Wilkinson @ Vice

The idea of remixing sleep talking is related to how I want to take sleep movements and noises and remix them into a conversation. I’m inspired by the idea of finding meaning in meaningless speech. However, I understand why every song/album created from this sleep talk was a flop… it’s only compelling to a point.

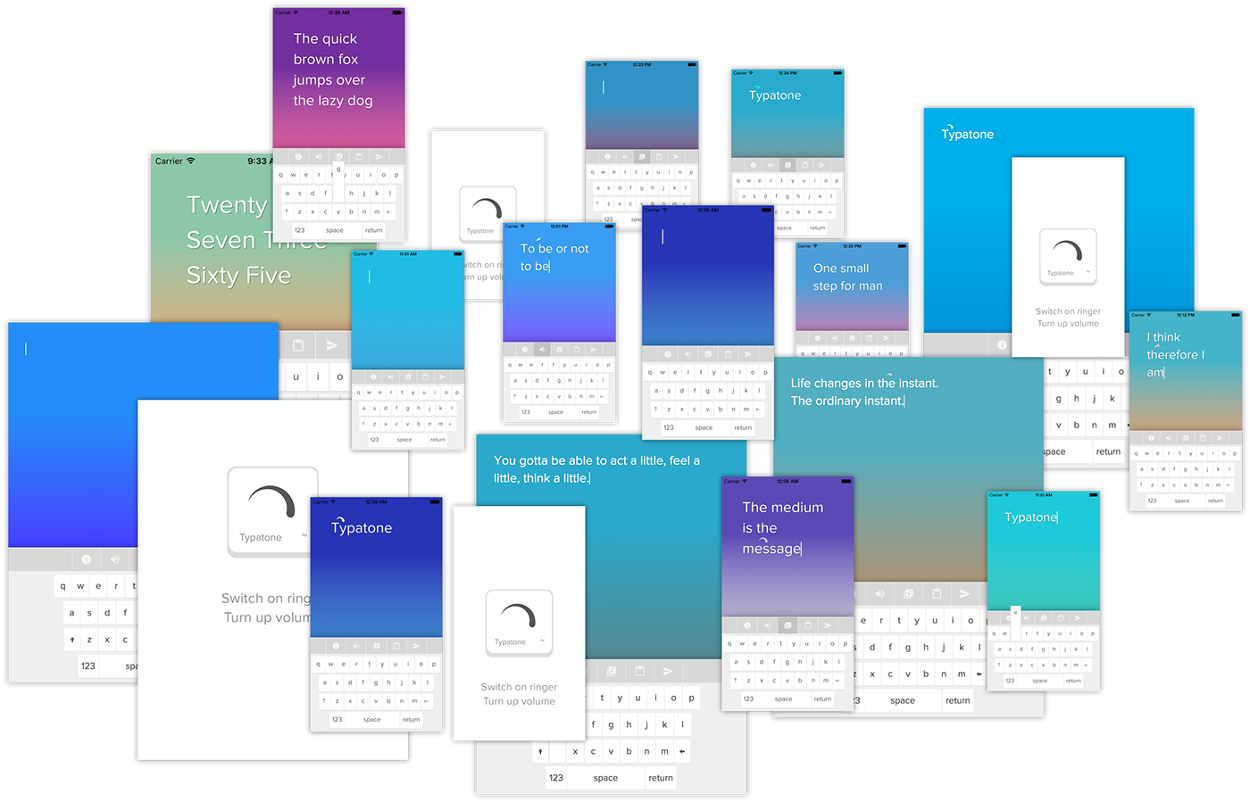

#2 Typatone: “Digital Typewriter that composes songs out of your writing”

“The act of writing has always been an art. Now, it can also be an act of music. Each letter you type corresponds to a specific musical note putting a new spin on your composition. Personalize your writing by choosing between six unique moods. Each mood changes speed, filter and color to each letter’s musical note. Easily import text written in other writing applications with a copy and paste interface. When you’ve finished writing, share it and download an audio version with a click of a button! Whether it’s a message, essay, story, or poem explore a new way of writing. Make music while you write.”

Not super related, but Typatone takes text and turns it into music. I’d like to do a little bit of the opposite: sound to text! I’m inspired by the simple interface and by how cleanly and naturally the concept is integrated into it. That said, I tried the demo in the article, and the interaction is rather one-dimensional and quick, so I doubt anyone would spend much time with the project.
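To get a feel for the "each letter corresponds to a musical note" idea, here is a minimal sketch in Python. This is NOT Typatone's actual mapping, which I don't know. The pentatonic scale, the starting pitch, and all of the function names are my own assumptions, chosen only to show how a letter could become a note.

```python
from typing import List, Optional

# Hypothetical mapping for illustration only; not how Typatone really works.
PENTATONIC_STEPS = [0, 2, 4, 7, 9]  # semitone offsets of a major pentatonic scale
BASE_MIDI_NOTE = 48                 # C3, an arbitrary starting pitch (assumption)


def letter_to_midi(letter: str) -> Optional[int]:
    """Return a MIDI note number for a-z (case-insensitive), or None otherwise."""
    ch = letter.lower()
    if len(ch) != 1 or not ("a" <= ch <= "z"):
        return None
    index = ord(ch) - ord("a")
    # Walk up the scale, moving one octave higher every five letters.
    octave, degree = divmod(index, len(PENTATONIC_STEPS))
    return BASE_MIDI_NOTE + 12 * octave + PENTATONIC_STEPS[degree]


def midi_to_frequency(note: int) -> float:
    """Convert a MIDI note number to Hz, using A4 = MIDI 69 = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def text_to_notes(text: str) -> List[int]:
    """Map every letter in the text to a note, skipping spaces and punctuation."""
    notes = (letter_to_midi(c) for c in text)
    return [n for n in notes if n is not None]
```

Calling `text_to_notes("Hi!")` gives one note per letter and drops the exclamation mark. Going the other way (sound to text), like I wanted to, would need a different kind of mapping entirely.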

It was really hard to find good art that was related to my idea. Does anyone have any suggestions?

![[cut output image]](https://im4.ezgif.com/tmp/ezgif-4-ea51c06dedeb.gif)