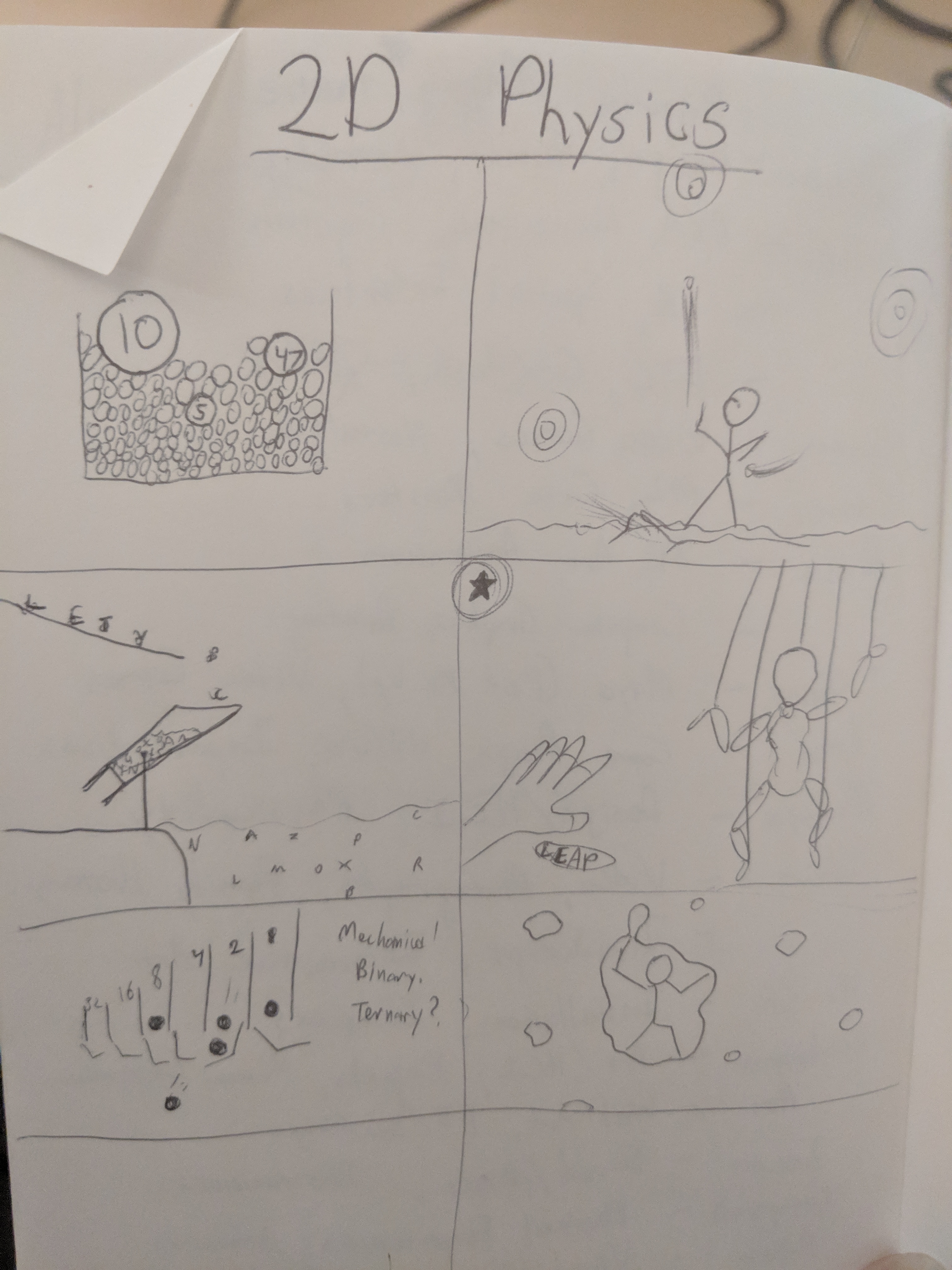

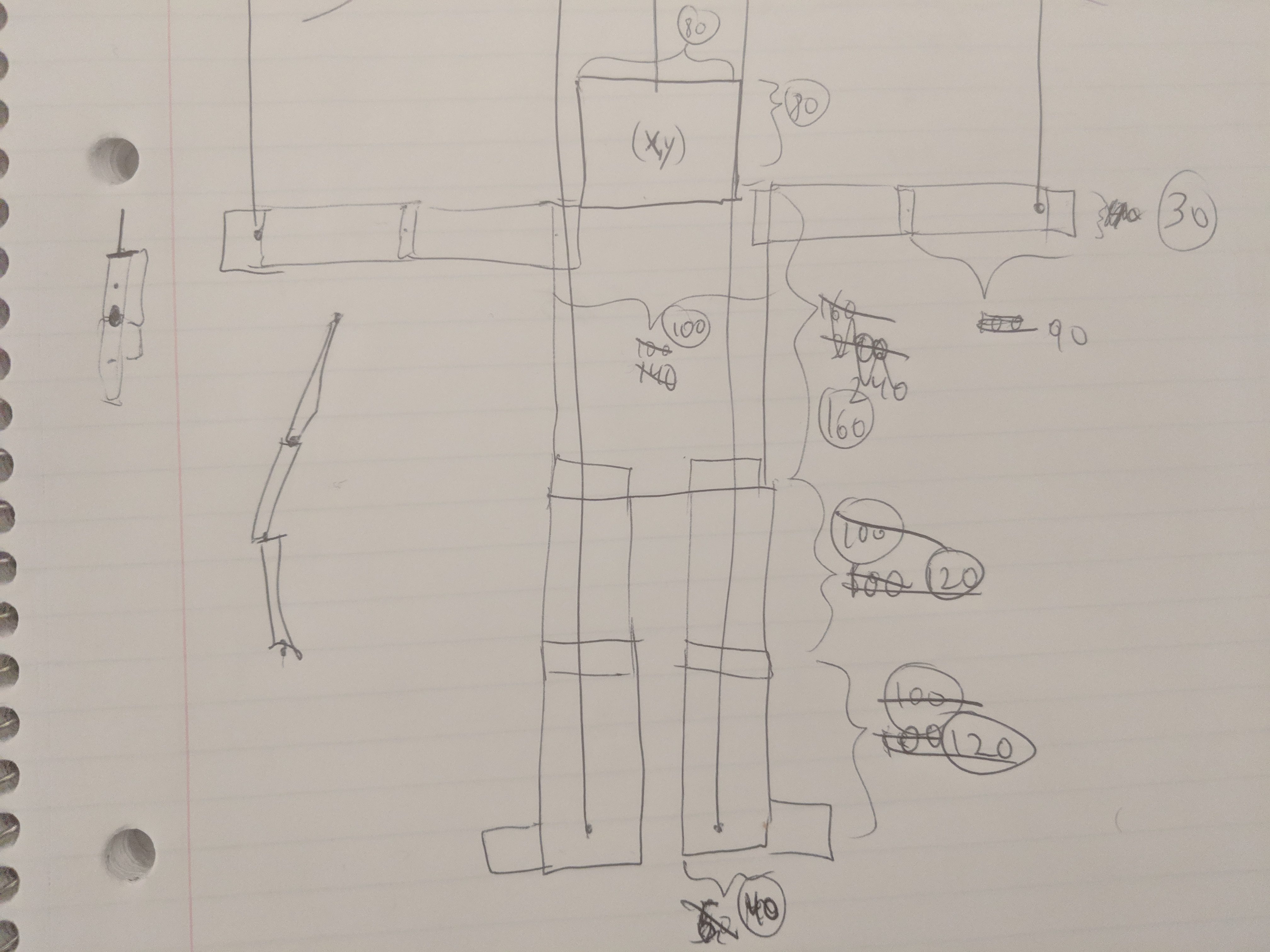

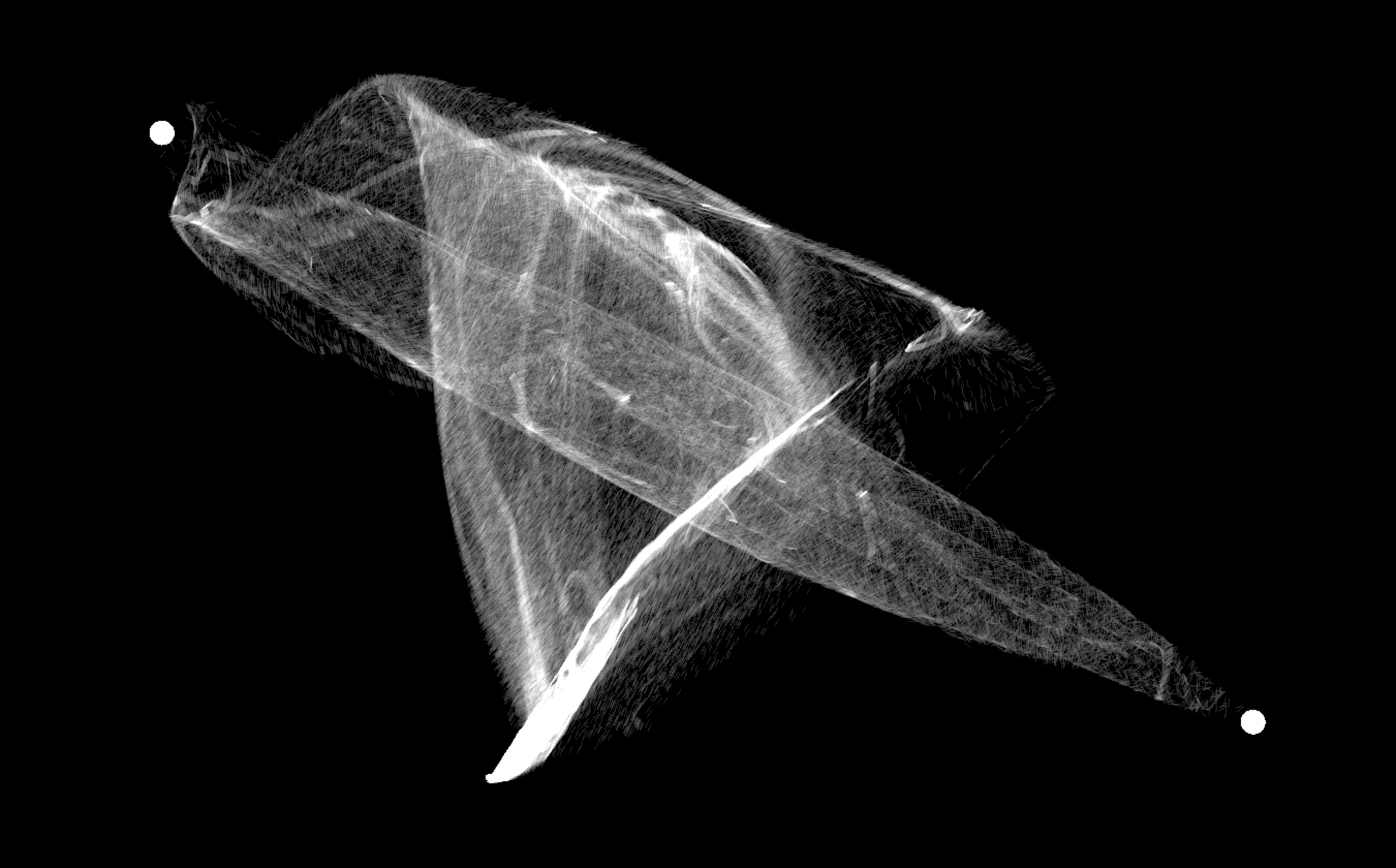

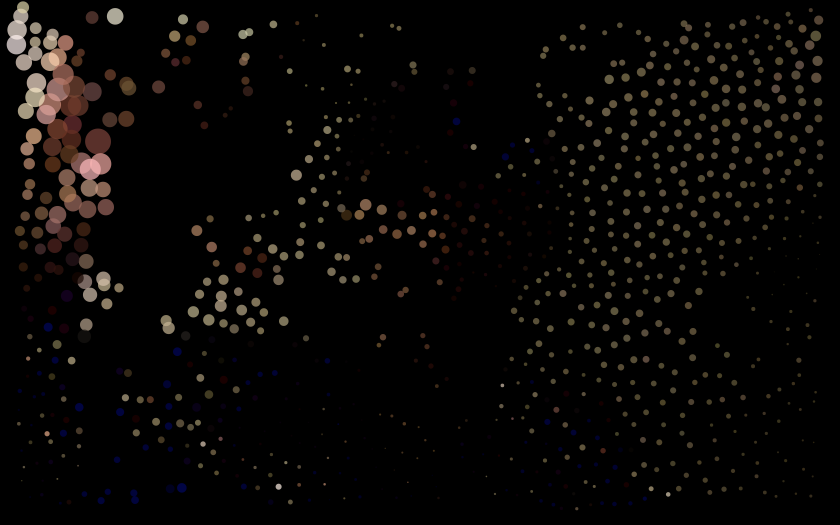

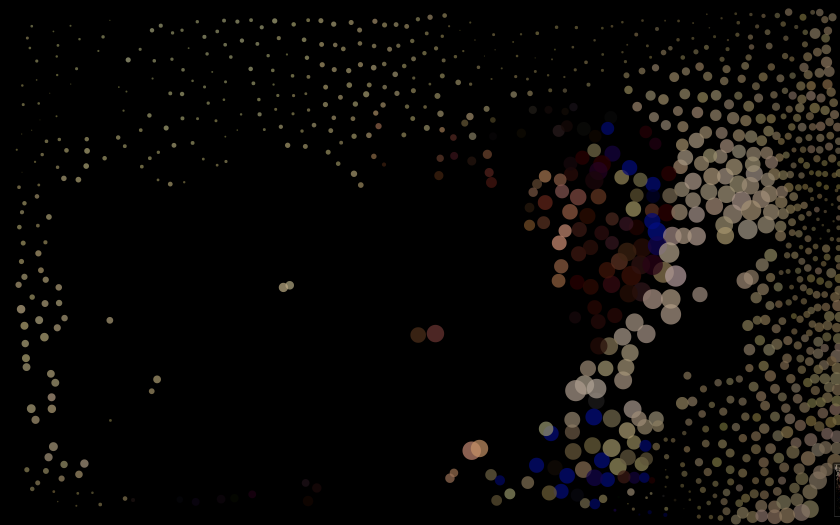

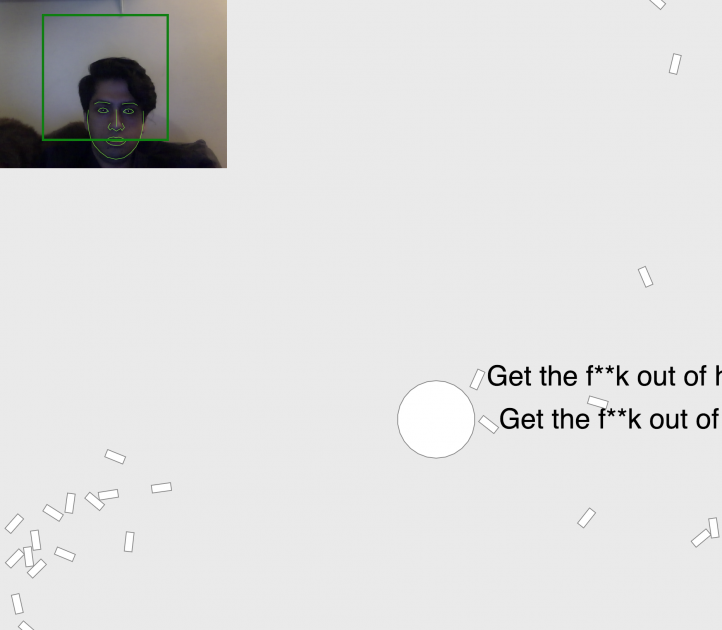

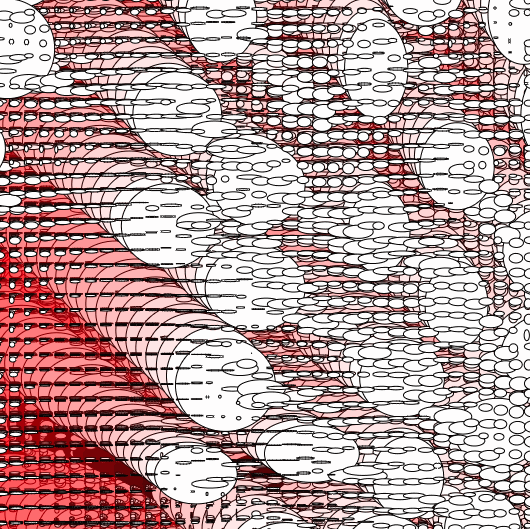

My goal for this project was to play with the concept of what 2D really is. Our eyes read cues such as size and shade as depth and 3D space, even though every image is ultimately reduced to a 2D image on the retina. This illusion of depth intrigued me, and I wanted to see how I could use those visual cues to make the viewer interact with a falsehood.

After a few iterations, I felt that the way the balls grew and shrank had a biological feel to it and seemed sinusoidal in nature, so I ended up spending hours finding the right pattern that would give a feeling of depth without seeming too rigid.
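As a rough illustration of the idea, here is a minimal sketch of a sinusoidal size cue. It is not the code used in the project: the function names, the base radius, the amplitude, the period, and the grey-level mapping are all assumptions chosen for the example.

```python
import math

def ball_radius(t, phase, base=20.0, amplitude=10.0, period=4.0):
    """Radius of one ball at time t (seconds).

    All parameters are illustrative assumptions, not values from the project.
    A different phase per ball keeps the motion from looking rigid.
    """
    return base + amplitude * math.sin(2 * math.pi * t / period + phase)

def ball_shade(radius, base=20.0, amplitude=10.0):
    """Map a radius to a 0..255 grey level so larger (nearer) balls are lighter."""
    nearness = (radius - (base - amplitude)) / (2 * amplitude)  # 0.0 .. 1.0
    return round(255 * nearness)

# Three balls, a third of a cycle apart, sampled at t = 1 second.
phases = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]
radii = [ball_radius(1.0, p) for p in phases]
shades = [ball_shade(r) for r in radii]
```

Offsetting each ball's phase means no two balls peak at the same moment, which is one way to get an organic rhythm from a single equation.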

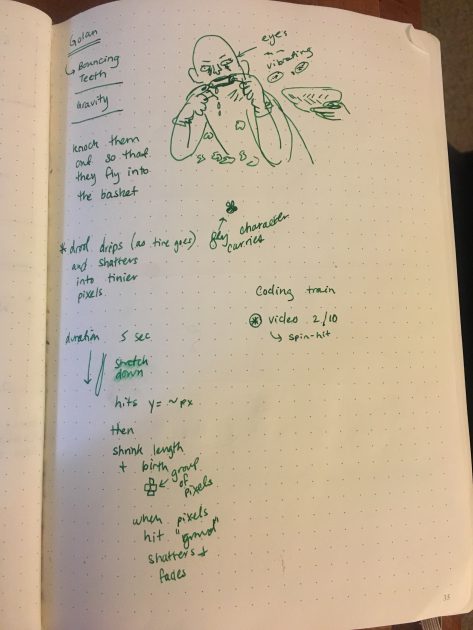

I tried to add another component: on a mouse click, the underlying sinusoidal function would be convolved with a Gaussian function to give an added level of interaction. However, constantly reapplying the convolution was too computationally heavy for my machine.
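To show what that convolution involves, here is a minimal sketch that smooths one period of a sampled sinusoid with a normalized Gaussian kernel. It is an assumption-laden illustration, not the project's implementation: the sample count, `sigma`, the kernel radius, and the wrap-around boundary handling are all hypothetical choices.

```python
import math

def gaussian_kernel(sigma, radius):
    """Discrete Gaussian weights for offsets -radius..radius, normalized to sum to 1."""
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def convolve_periodic(signal, kernel):
    """Convolve a periodic signal with a symmetric kernel, wrapping at the ends.

    Because the kernel is symmetric, flipping it (as convolution formally
    requires) would not change the result.
    """
    n = len(signal)
    radius = len(kernel) // 2
    return [
        sum(kernel[j] * signal[(i + j - radius) % n] for j in range(len(kernel)))
        for i in range(n)
    ]

samples = 64  # assumed number of samples in one period
signal = [math.sin(2 * math.pi * i / samples) for i in range(samples)]
kernel = gaussian_kernel(sigma=4.0, radius=12)
smoothed = convolve_periodic(signal, kernel)
```

Each output sample costs one multiply-add per kernel entry, so recomputing this every frame grows with both the signal length and the kernel width. Since the kernel only depends on `sigma`, building it once and reusing it is one possible way to cut the per-frame cost. For a pure sinusoid, Gaussian smoothing leaves the shape intact and only reduces the amplitude, so scaling the amplitude directly may be a cheaper alternative.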

This project was possible because of Dan Shiffman’s Box2D documentation.