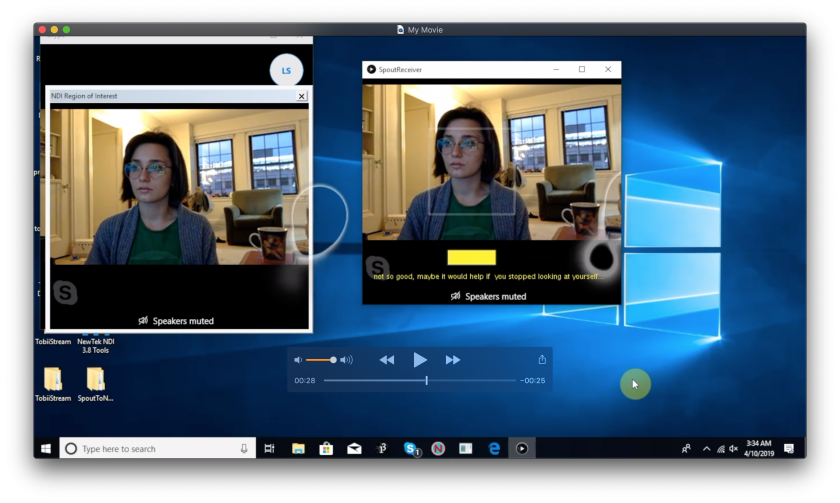

This project took quite a bit of reworking from the start, and in the end it made for a very effective challenge in learning how to navigate a difficult, poorly documented backend plumbing problem. The process involved learning how to rip pixels from a screen and send the data over a local server into a program that would then communicate them to Processing in a format it could both understand and replicate.
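To give a rough idea of what that plumbing looks like, here is a minimal Python sketch of one way to frame raw pixel data for a local socket. It is not the code I used: the header layout (width and height as 16-bit integers followed by raw RGB bytes) and the names `pack_frame`, `recv_exact`, and `recv_frame` are assumptions made for illustration, and the screen capture itself is left out, with plain bytes standing in for the grabbed pixels.

```python
import socket
import struct

# Hypothetical header: width and height as unsigned 16-bit big-endian integers.
HEADER = struct.Struct("!HH")


def pack_frame(width, height, rgb):
    """Prefix raw RGB bytes with a small header so the receiver knows the size."""
    if len(rgb) != width * height * 3:
        raise ValueError("rgb must hold exactly width * height * 3 bytes")
    return HEADER.pack(width, height) + rgb


def recv_exact(sock, n):
    """Read exactly n bytes; a single recv() may return fewer than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-frame")
        buf.extend(chunk)
    return bytes(buf)


def recv_frame(sock):
    """Read one frame: the header first, then the pixel payload it describes."""
    width, height = HEADER.unpack(recv_exact(sock, HEADER.size))
    return width, height, recv_exact(sock, width * height * 3)


if __name__ == "__main__":
    # A connected socket pair stands in for the local server and its client.
    sender, receiver = socket.socketpair()
    two_pixels = bytes([255, 0, 0, 0, 255, 0])  # one red pixel, one green pixel
    sender.sendall(pack_frame(2, 1, two_pixels))
    print(recv_frame(receiver))
    sender.close()
    receiver.close()
```

The point of the header is that the receiving side can rebuild an image of the right dimensions instead of guessing from a stream of bytes.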

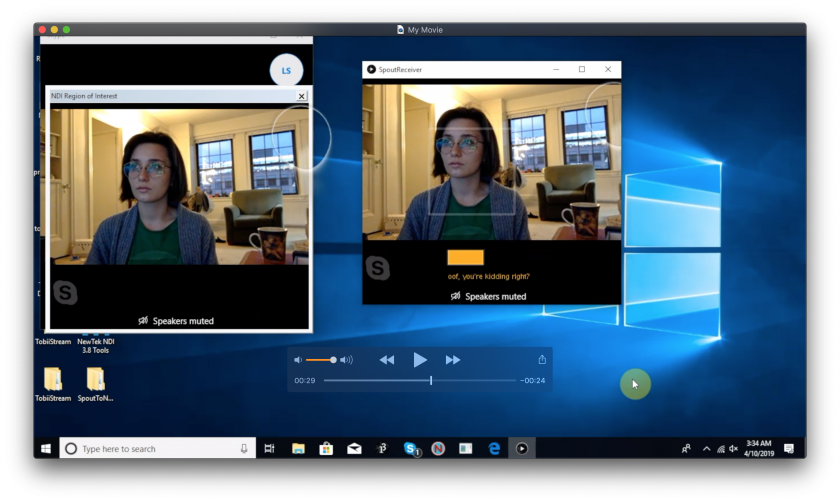

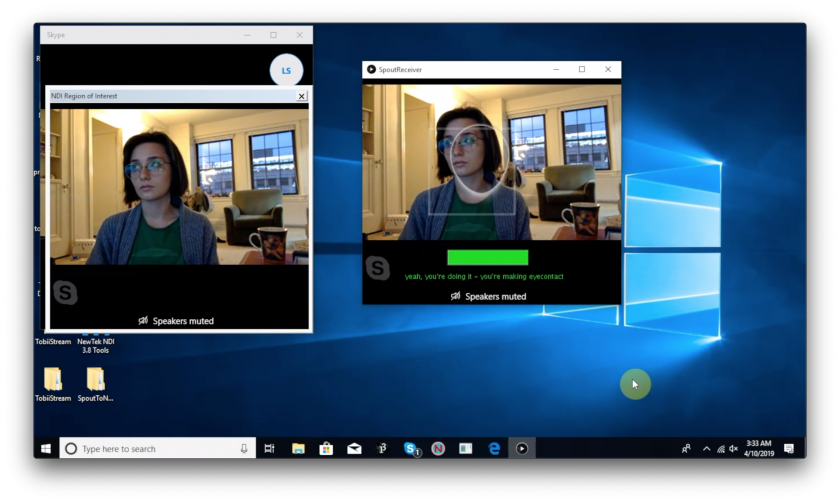

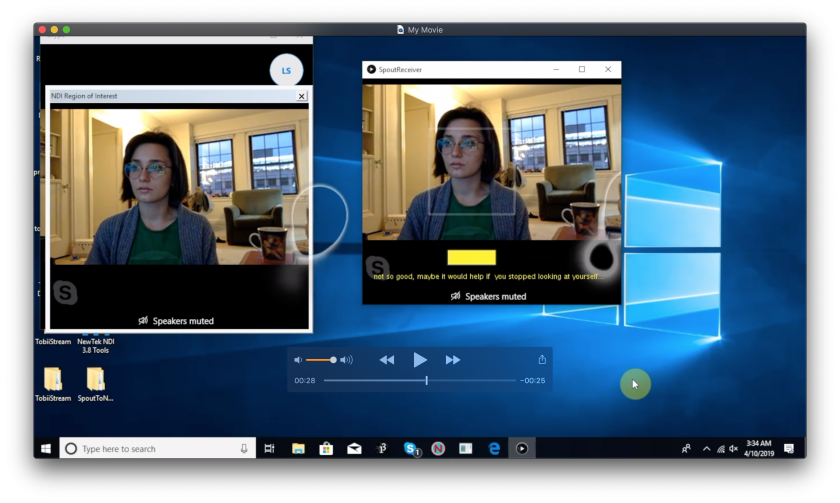

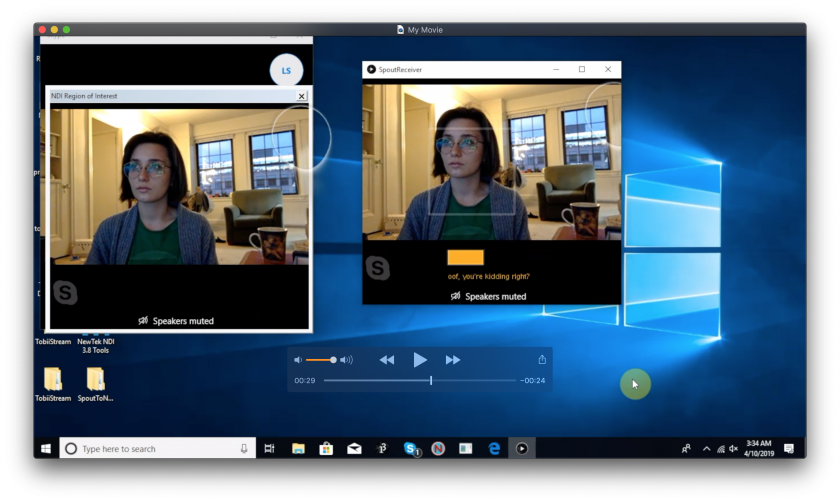

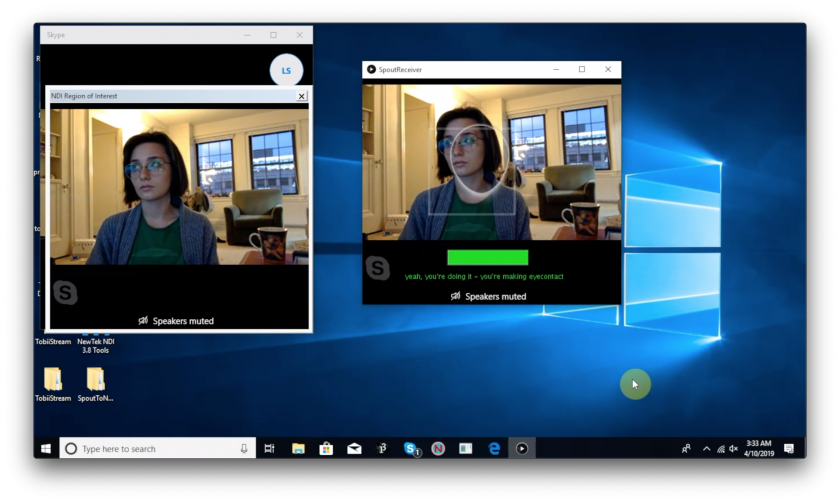

In the end, because I spent so much time learning and working through the problem of getting the video data into Processing, I had to Wizard of Oz the final interaction: for some reason the pixel data was not being recognized as a readable video or photo by the face-detection library available to Processing (OpenCV). Other than that small bug, the eye-tracking data from the Tobii 4C, the video feed from Skype, and the sound were all working perfectly well.

Beyond all of that, however, I was able to successfully construct an imagined future in which the potential for monitoring gaze during video conferencing was realized. It also allowed for a bit of play with the intervention I posed: a sort of meter that humorously rates the quality of eye contact you make with the form of the other person (and which would certainly work had it not been for the OpenCV issues…).
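As a sketch of how such a meter could be scored, assuming the face detection did work: take the recent gaze samples from the eye tracker, take the bounding box of the other person's face, and report the fraction of samples that land inside the box. Everything here is hypothetical, including the function names, the `(x, y, w, h)` box format, the thresholds, and the labels; it is one simple way to do it rather than what the prototype ran.

```python
def eye_contact_score(gaze_points, face_box):
    """Return the fraction of gaze samples that fall inside the face box.

    gaze_points: list of (x, y) screen coordinates from the eye tracker.
    face_box: (x, y, w, h) rectangle around the detected face (assumed format).
    """
    if not gaze_points:
        return 0.0
    x, y, w, h = face_box
    hits = sum(
        1 for gx, gy in gaze_points
        if x <= gx <= x + w and y <= gy <= y + h
    )
    return hits / len(gaze_points)


def meter_label(score):
    """Map a score in [0, 1] to a tongue-in-cheek rating (made-up thresholds)."""
    if score >= 0.7:
        return "soulful"
    if score >= 0.3:
        return "polite"
    return "checking email"


if __name__ == "__main__":
    samples = [(50, 50), (150, 150), (60, 70), (500, 10)]
    score = eye_contact_score(samples, (0, 0, 100, 100))
    print(score, meter_label(score))
```

A windowed fraction like this is forgiving of the odd glance away, which suits a meter meant to be playful rather than punitive.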

Much of the feedback I received during critique revolved around various other interventions I could include, and in every sense it was quite helpful in generating ideas for how to continue this project if I so please. I was glad to have it looked at in this somewhat unfinished state because the feedback stayed focused on conceptual questions of what the work can ask in and of the world, especially if it existed in a context outside of class.