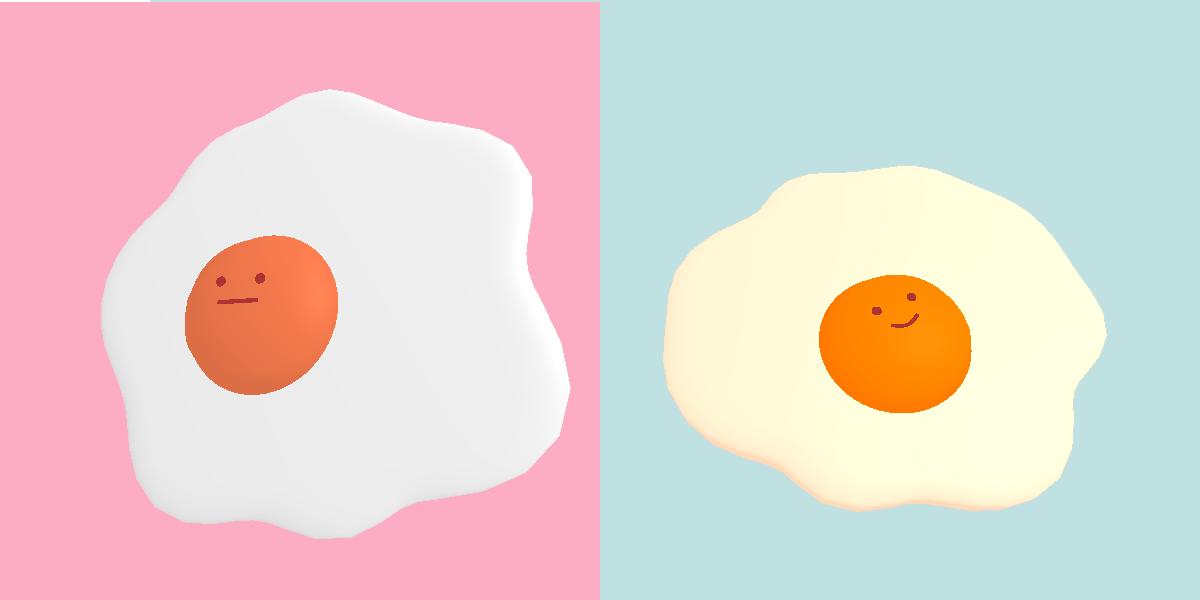

@good_egg_bot is a Twitter bot that makes eggs.

The good_egg_bot is a benevolent Twitter bot that will try its best to make the egg(s) you desire. Twitter users can tweet requests for particular colors, types, sizes, and numbers of eggs and get an image of eggs back in response. I made this project because I wanted a free and easily accessible way for people to get cute, customizable pictures of eggs. I was also excited by the prospect of having thousands of unique egg pictures at the end of it all.

I was inspired by numerous bots I have seen on Twitter that make generative artwork, such as the moth bot by Everest Pipkin and Loren Schmidt, and the Trainer Card Generator by xandjiji. I chose eggs as my subject matter because they are simple to model through code, and I like the way they look.

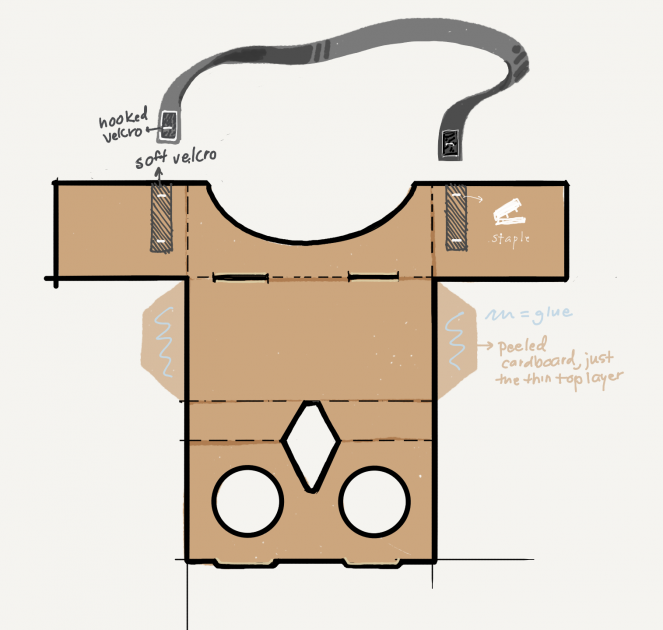

I used nlp-compromise to parse the request text, three.js to create the egg images, and the twitter Node package to respond to tweets. I used headless-gl to render the images without a browser. Figuring out how to render them was really tricky, and I ended up having to revert to older versions of some packages to get it to work. The program is hosted on AWS, so the egg bot is always awake. I found Dan Shiffman's Twitter tutorials really helpful, though some of the material is outdated because Twitter has since changed its API.
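
The post doesn't show how a tweet turns into egg parameters. Here is a rough TypeScript sketch of that parsing step. It assumes a request boils down to a count, a color, a size, and a type. This is not the bot's code: plain keyword matching stands in for nlp-compromise. The word lists, the defaults, the `MAX_EGGS` cap, and the `parseEggRequest` name are all hypothetical.

```typescript
// Hypothetical sketch: simple keyword matching stands in for nlp-compromise here.

interface EggRequest {
  count: number;
  color: string;
  size: string;
  type: string;
}

// Hypothetical vocabularies; the real bot's word lists are not described in the post.
const COLORS: string[] = ["white", "brown", "red", "orange", "yellow", "green", "blue", "purple", "pink", "black"];
const SIZES: string[] = ["tiny", "small", "medium", "big", "large", "huge"];
const TYPES: string[] = ["whole", "fried", "boiled", "scrambled"];
const NUMBER_WORDS: { [word: string]: number } = {
  one: 1, two: 2, three: 3, four: 4, five: 5,
  six: 6, seven: 7, eight: 8, nine: 9, ten: 10,
};

// Hypothetical cap so a single tweet cannot request an enormous image.
const MAX_EGGS = 50;

function parseEggRequest(text: string): EggRequest {
  // Lowercase, drop @mentions, and split on runs of non-alphanumeric characters.
  const tokens = text
    .toLowerCase()
    .replace(/@\w+/g, " ")
    .split(/[^a-z0-9]+/)
    .filter((t) => t.length > 0);

  // Defaults for any property the tweet does not mention.
  const request: EggRequest = { count: 1, color: "white", size: "medium", type: "whole" };

  // If a property is mentioned more than once, the last mention wins.
  for (const token of tokens) {
    if (/^\d+$/.test(token)) {
      // Numeric counts are clamped to the range 1..MAX_EGGS.
      request.count = Math.min(Math.max(parseInt(token, 10), 1), MAX_EGGS);
    } else if (Object.prototype.hasOwnProperty.call(NUMBER_WORDS, token)) {
      // hasOwnProperty avoids matching inherited keys such as "constructor".
      request.count = NUMBER_WORDS[token];
    } else if (COLORS.indexOf(token) !== -1) {
      request.color = token;
    } else if (SIZES.indexOf(token) !== -1) {
      request.size = token;
    } else if (TYPES.indexOf(token) !== -1) {
      request.type = token;
    }
  }
  return request;
}

// Example usage:
console.log(parseEggRequest("@good_egg_bot three big blue fried eggs please"));
```

Keyword matching like this misses phrasings such as "a dozen eggs". That gap is one reason a natural-language library like nlp-compromise suits free-form tweets better.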

This project has a lot of room for more features. Many people asked for poached eggs, which I'm not sure how to make yet, but maybe I'll figure it out. Another suggestion was animating the eggs, which I will probably try in the future. Since it took so much effort to figure out how to render a 3D image with headless-gl, I think I should take advantage of the 3D-ness for animations. Because the bot lives on Twitter, I have a record of how people interact with it, so I can see what features they want. In my mind, this project will always be unfinished, as there are so many ways for people to ask for eggs.

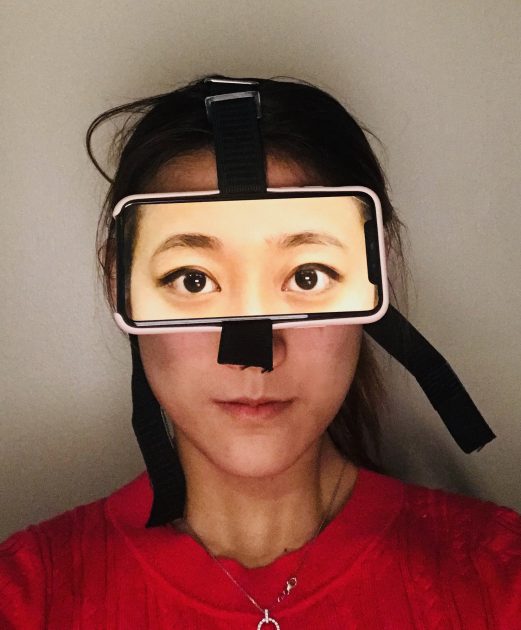

Here is a video tutorial on how to use the good_egg_bot:

, a novel which recounts the tales of the travels of Marco Polo, told to the emperor Kublai Khan. “The majority of the book consists of brief prose poems describing 55 fictitious cities that are narrated by Polo, many of which can be read as parables or meditations on culture, language, time, memory, death, or the general nature of human experience.” (Thanks, Wikipedia)