FINAL:

So. I didn’t end up liking any of my iterations or branches well enough to own up to them. I took a pass when my other deadlines came up, but I had a lot of fun during this process!

PROCESS

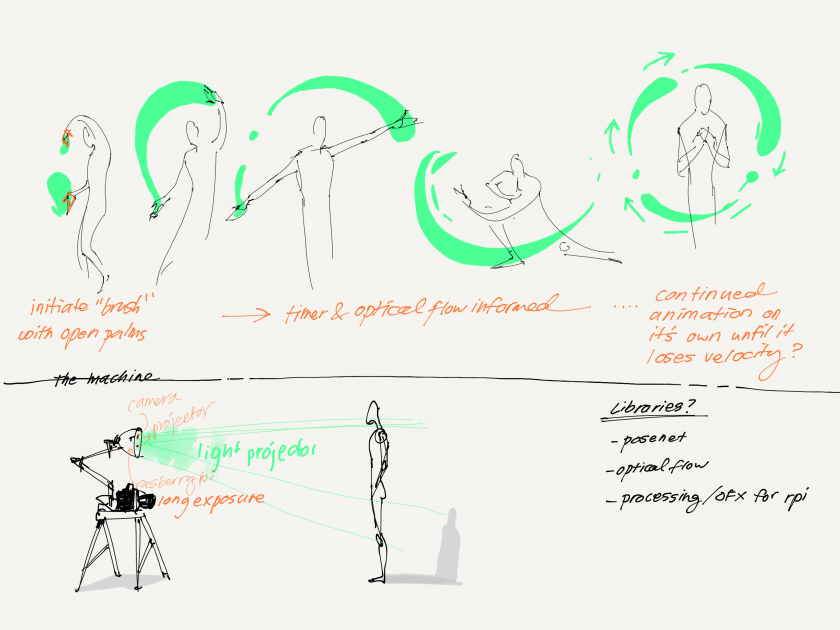

Some sketches —

Some resources —

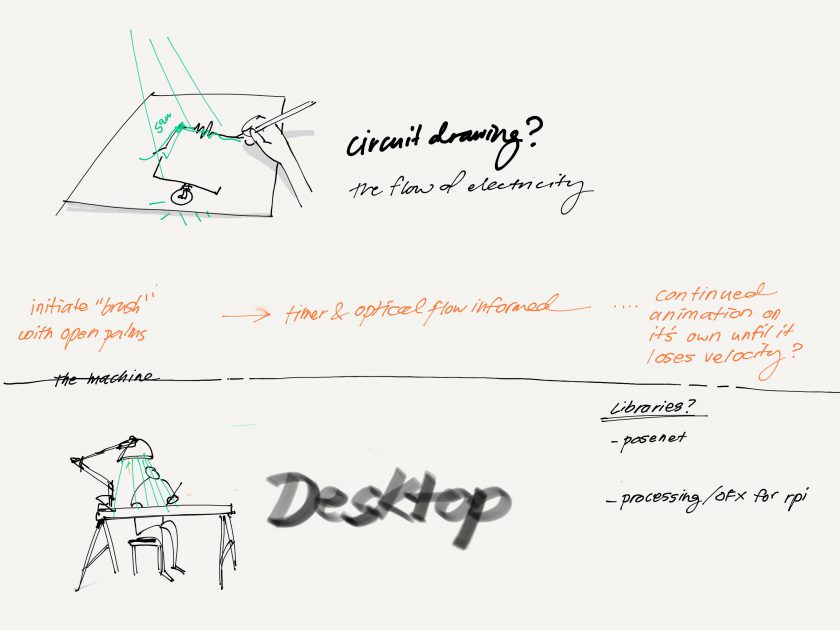

Potential physical machines…

1st prototype —

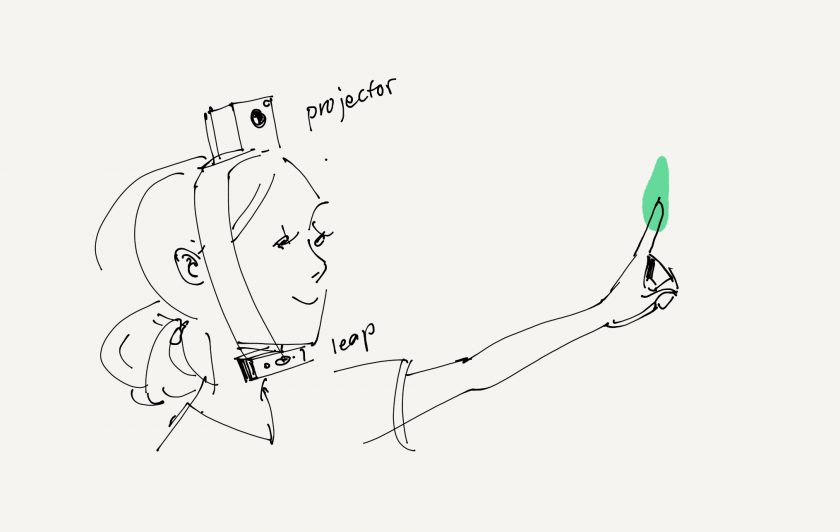

^ the above inspired by some MIT Media Lab work, including but not limited to —

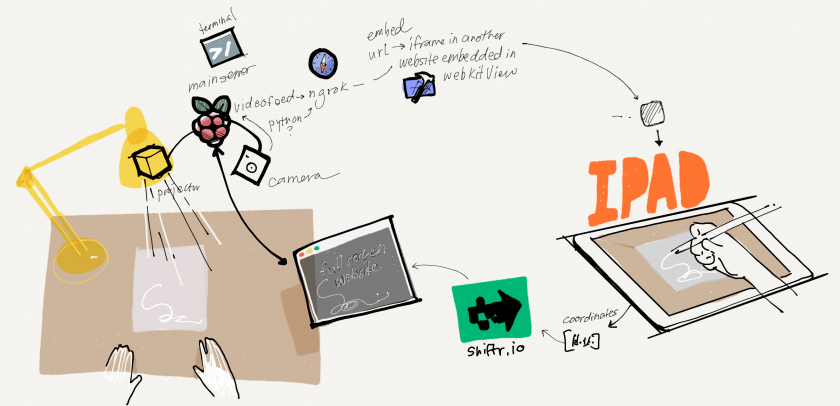

Some technical decisions made and remade:

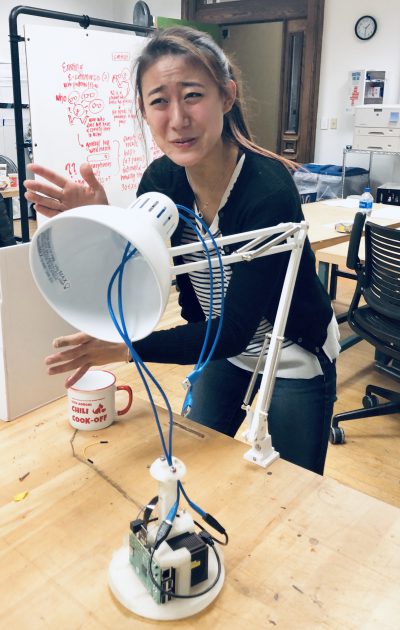

Welp. I really liked the self-contained nature of a CV-aided projector as my ‘machine’ for drawing, so I gathered all 20+ parts, printed some things, lost a lot of screws…and decided my first prototype was technically a little jank. I wanted something more robust, so I started looking for better libraries (WebRTC) and platforms. I ended up flashing Android Things (instead of Raspbian) onto the Pi. It's an OS that Google made specifically for IoT projects, with integration and control through an Android phone:

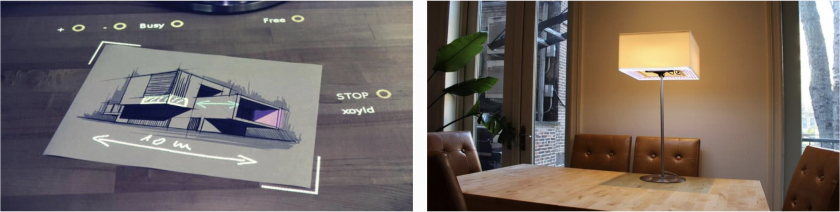

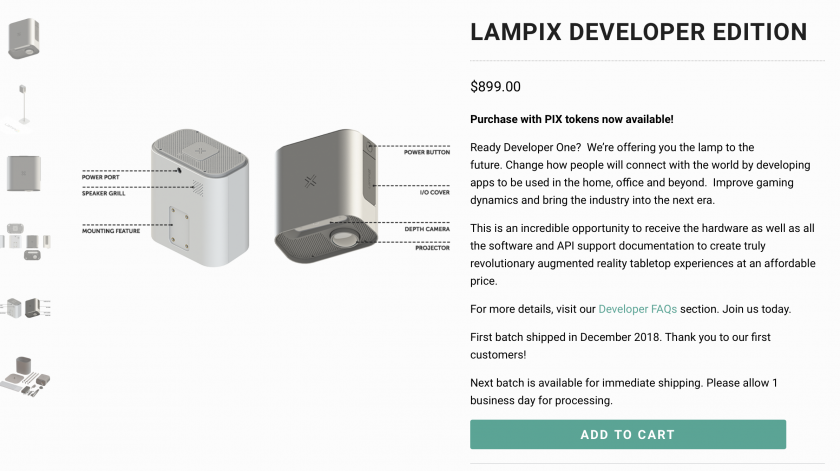

and then along the way I found a company that has already executed on the projection table lamp for productivity purposes —

LAMPIX — TABLE TOP AUGMENTED REALITY

^ turning point:

I had to really stop and think about what I hoped to achieve with this project because somewhere out in the world there was already a more robust system/product being produced. The idea wasn’t particularly novel even if I believed I could make some really good micro interactions and UX flows, so I wasn’t contributing to a collective imagination either. So what was left? The performance? But then I’d be relying on the artist’s drawing skills to provide merit to the performance, not my actual piece.

60 lumens from Marisa Lu on Vimeo.

…ok so it was back to the drawing board.

Some lessons learned:

- Worry about the hardware after the software interactions are MVP, UNLESS! Unless the hardware is specially made for a particular software purpose (e.g. the PixyCam, with its firmware and optimized on-device HSB detection)

ex: So. 60 lumens didn’t mean anything to me before I purchased all the parts for this project, but I learned that the big boy projector used in the Miller for exhibitions is 1500+ lumens. My tiny laser projector does very poorly in the lighting conditions that are optimal for OpenCV, so I might have misspent a lot of effort trying to make everything a cohesive self-contained machine…haha.

ex: PixyCam is hardware optimized for HSB object detection!

HSB colored object detection from Marisa Lu on Vimeo.

- Some other library explorations

ex: So back to the fan brush idea: testing some HSB detection and getting around to implementing a threshold-based region-growing algorithm to get the exact shape…

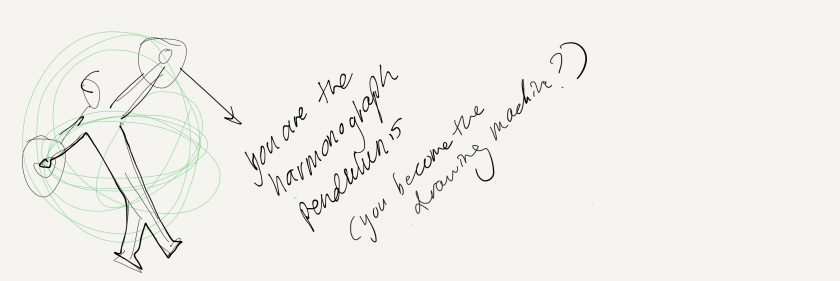
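For anyone curious what threshold-based region growing on HSB values looks like, here is a minimal sketch in plain Python. It is not the code I actually ran. The function names (`matches`, `grow_region`), the tolerance values, and the list-of-rows image format are all assumptions made for illustration. The idea: start from a seed pixel, then keep absorbing 4-connected neighbours whose hue, saturation, and brightness pass the threshold.

```python
import colorsys
from collections import deque


def hue_distance(h1, h2):
    """Circular distance between two hues in [0, 1); hue wraps around at red."""
    d = abs(h1 - h2)
    return min(d, 1.0 - d)


def matches(rgb, target_hue, hue_tol=0.05, min_sat=0.4, min_val=0.3):
    """True if an (r, g, b) pixel (0-255 each) is close enough to target_hue.

    The tolerances are illustrative defaults, not tuned values.
    """
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return s >= min_sat and v >= min_val and hue_distance(h, target_hue) <= hue_tol


def grow_region(image, seed, target_hue, **thresholds):
    """Grow a region outward from seed over 4-connected matching pixels.

    image: list of rows, each row a list of (r, g, b) tuples.
    seed: (row, col) starting pixel.
    Returns the set of (row, col) coordinates in the grown region.
    """
    rows, cols = len(image), len(image[0])
    if not matches(image[seed[0]][seed[1]], target_hue, **thresholds):
        return set()

    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region:
                if matches(image[nr][nc], target_hue, **thresholds):
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


if __name__ == "__main__":
    RED, GRAY = (255, 0, 0), (128, 128, 128)
    tiny = [
        [GRAY, GRAY, GRAY],
        [GRAY, RED, RED],
        [GRAY, RED, GRAY],
    ]
    print(sorted(grow_region(tiny, seed=(1, 1), target_hue=0.0)))
```

The nice part compared with a plain global threshold is that disconnected blobs of the same colour don't get lumped into the shape, since only pixels reachable from the seed are kept.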

- Some romancing with math and geometry again

Gray showed me some of his research papers from his undergrad! Wow, such inspiration! I was bouncing around ideas for the body as a harmonograph or cycloid machine, and he suggested prototyping formulaic mutations, parameters, and animation in GeoGebra. Life has been gucci ever since.
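The prototyping itself happened in GeoGebra, but the same kind of curve is easy to sketch in Python too. This is a rough illustration, assuming the standard harmonograph model where each axis is a sum of damped sine waves, $x(t) = \sum_i A_i \sin(f_i t + p_i)\, e^{-d_i t}$. The function names and every parameter value below are made up for the example.

```python
import math


def damped_sum(t, pendulums):
    """Sum of damped sinusoids at time t.

    pendulums: list of (amplitude, frequency, phase, damping) tuples.
    """
    return sum(a * math.sin(f * t + p) * math.exp(-d * t)
               for a, f, p, d in pendulums)


def harmonograph(x_pendulums, y_pendulums, steps=2000, dt=0.01):
    """Sample the curve as a list of (x, y) points at t = 0, dt, 2*dt, ..."""
    return [(damped_sum(i * dt, x_pendulums), damped_sum(i * dt, y_pendulums))
            for i in range(steps)]


if __name__ == "__main__":
    # Hypothetical parameters: a near 2:3 frequency ratio with slow decay.
    points = harmonograph(
        x_pendulums=[(1.0, 2.0, math.pi / 2, 0.02)],
        y_pendulums=[(1.0, 3.01, 0.0, 0.02)],
    )
    print(points[:3])
```

Nudging one frequency slightly off a clean ratio (3.01 instead of 3) is what makes the figure slowly rotate instead of retracing itself, which is the sort of parameter mutation that is fun to scrub through with sliders.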