For this assignment, I created a musical instrument using my face. Although the result is not strictly a visual mask, I liked the idea of an aural mask that augments audio input, transforming the human voice into something unrecognizable.

The final result is a performance in which I use the movements of my mouth to control the audio effects that manipulate the sounds coming from my mouth. An overall feedback delay is tied to the openness of my mouth, while tilting my head or smiling distorts the audio in different ways. I also mapped the orientation of my face to the stereo pan, making the audio mix move left and right.
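
As a rough sketch of this kind of mapping (not the code used in the performance), the snippet below turns two face measurements into effect parameters. Every name here is hypothetical: `mouthOpen` is assumed to be normalized to 0..1, `yaw` is assumed to be in radians, and the output ranges (feedback capped at 0.9, pan saturating at 45 degrees) are illustrative choices rather than the values I used.

```javascript
// Hypothetical helper names; none of these come from the original project.

// Clamp a value into [lo, hi].
function clamp(value, lo, hi) {
  return Math.min(hi, Math.max(lo, value));
}

// Linearly rescale value from [inLo, inHi] to [outLo, outHi], clamping at both ends.
function mapRange(value, inLo, inHi, outLo, outHi) {
  const t = clamp((value - inLo) / (inHi - inLo), 0, 1);
  return outLo + t * (outHi - outLo);
}

// Convert per-frame face measurements into effect parameters.
// Assumed inputs: mouthOpen in [0, 1], yaw in radians (negative = turned left).
function faceToParams(face) {
  return {
    // Wider mouth -> more delay feedback, capped below 1 so the delay cannot run away.
    delayFeedback: mapRange(face.mouthOpen, 0, 1, 0, 0.9),
    // Head turn -> stereo pan from -1 (left) to +1 (right), saturating at +/- 45 degrees.
    pan: mapRange(face.yaw, -Math.PI / 4, Math.PI / 4, -1, 1),
  };
}
```

Clamping matters here because face tracking is noisy: a landmark that jumps for a single frame should saturate a parameter rather than push it outside its valid range.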

One interesting characteristic of real-world instruments, as compared to purely digital ones, is the interdependence of their parameters. While an electronic performer can map individual features of a sound to independent knobs and control them separately, a piano player has overlapping control over the tonality of the notes: hitting the keys harder results in a brighter tone, but also in a louder sound overall. While this may seem like an unnecessary constraint, it often results in performances with more perceived expression, as musicians must take extreme care if they intend to play a note in a certain way. I wanted to mimic this interdependence in my performance, so I purposefully mapped multiple audio parameters to the same inputs on my face. Furthermore, the muscles in my face often affect one another, which further constrained the space of control I had for manipulating the sound. The end result is me contorting my face in some rather odd ways to get the sounds that I wanted.

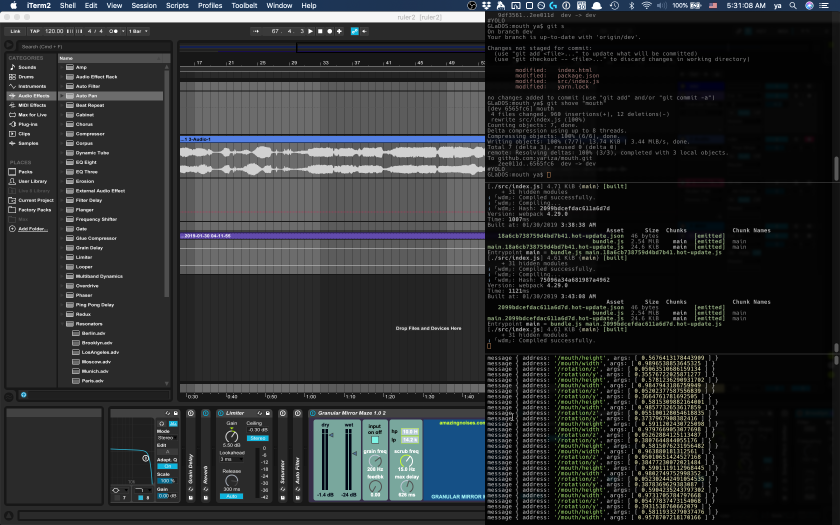

The performance setup combines hardware and software. First, I attached a contact microphone to my throat, using a choker to secure it in place. The sound input is then routed to Ableton Live, where I run my audio effects. A browser-based JavaScript environment uses handsfree.js to track and visualize the face from a webcam, and sends the facial expression parameters as OSC messages over WebSockets. Because Ableton Live can only receive UDP, however, a local server instance passes the WebSocket OSC data over to a UDP connection, which Ableton receives through custom Max for Live devices.
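
To make the message format concrete, here is a minimal sketch of how a single float parameter could be packed into an OSC 1.0 message by hand. This is an illustration under assumptions, not the code from my setup: in practice an OSC library would normally do this, and the function names and the `/face/mouth` address are made up for the example.

```javascript
// Encode an ASCII string as an OSC string:
// null-terminated, then zero-padded to a multiple of 4 bytes.
function oscString(text) {
  const paddedLength = Math.ceil((text.length + 1) / 4) * 4;
  const bytes = new Uint8Array(paddedLength); // typed arrays start zero-filled
  for (let i = 0; i < text.length; i++) {
    bytes[i] = text.charCodeAt(i) & 0x7f; // OSC strings are ASCII
  }
  return bytes;
}

// Build an OSC message carrying one float32 argument.
// Layout: address string, type tag string ",f", big-endian float32.
function encodeOscFloat(address, value) {
  const addr = oscString(address);
  const tags = oscString(",f");
  const packet = new Uint8Array(addr.length + tags.length + 4);
  packet.set(addr, 0);
  packet.set(tags, addr.length);
  // The third argument `false` selects big-endian, as OSC requires.
  new DataView(packet.buffer).setFloat32(addr.length + tags.length, value, false);
  return packet;
}

// Hypothetical address; the real parameter names are not listed in this post.
const packet = encodeOscFloat("/face/mouth", 0.5);
```

The resulting `Uint8Array` could be sent as a binary WebSocket frame from the browser, and a bridge process would then only need to forward the same bytes unchanged as a UDP datagram.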

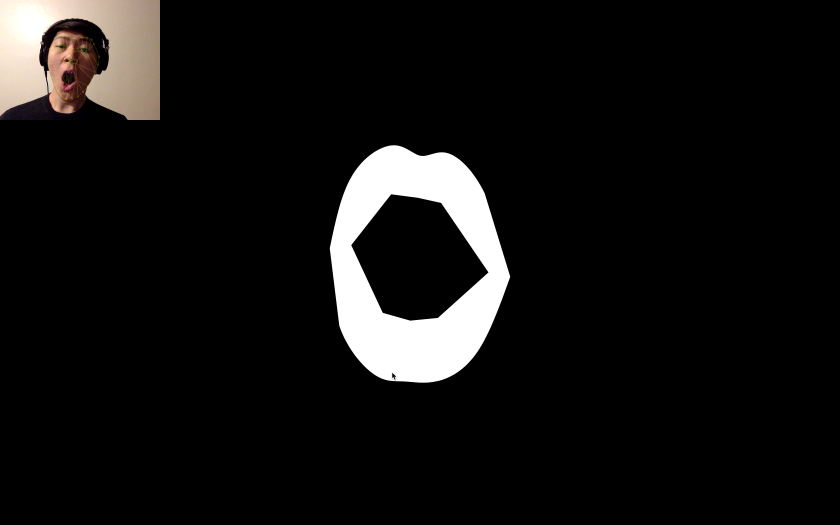

For the visuals of the piece, I wanted something simple that showed the mouth abstracted away and isolated from the rest of the face, as the mouth itself was the main instrument. I ended up using paper.js to draw smooth paths along the contours of my tracked lips, colored white and centered on a black background. For reference, I also included the webcam stream in the top corner; in a live setting, however, I would probably leave it out, as it is redundant.
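
The centering step can be sketched as a small pure function that recenters and uniformly scales the tracked lip points to fit the canvas. This is an assumed reconstruction for illustration only: the `{x, y}` point format, the function name, and the `fill` fraction are all hypothetical.

```javascript
// Center a set of {x, y} points on a width x height canvas, scaling them
// uniformly so their bounding box spans at most `fill` of each dimension.
function centerPoints(points, width, height, fill = 0.5) {
  const xs = points.map((p) => p.x);
  const ys = points.map((p) => p.y);
  const minX = Math.min(...xs);
  const maxX = Math.max(...xs);
  const minY = Math.min(...ys);
  const maxY = Math.max(...ys);
  // Fall back to 1 so a degenerate (flat) bounding box never divides by zero.
  const boxW = maxX - minX || 1;
  const boxH = maxY - minY || 1;
  const scale = Math.min((width * fill) / boxW, (height * fill) / boxH);
  const cx = (minX + maxX) / 2;
  const cy = (minY + maxY) / 2;
  return points.map((p) => ({
    x: width / 2 + (p.x - cx) * scale,
    y: height / 2 + (p.y - cy) * scale,
  }));
}
```

The centered points could then be used as the segments of a closed paper.js `Path`, with `path.smooth()` called on it to round off the contour.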