This assignment has two components, both due Wednesday 11/1 (revised deadline).

- Readings/Viewings (1 hour)

- Interactive Gesture Expander (11 hours)

Readings/Viewings

(45-60 minutes) Please watch or read the following media:

- Zach Lieberman, Más Que La Cara Overview (~12 minute read)

- Last Week Tonight: Face Recognition (21 minutes)

- Joy Buolamwini: How I’m fighting bias in algorithms (9 minutes)

In a brief Discord post in the #face-viewings channel, jot down an idea, fact, claim, or image that you came across in any of the above media that struck you. Write a sentence about why you found it interesting.

Interactive Gesture Expander

- Choice A: Costume/Puppet. An accompanying performance video is 40% of the grade.

- Choice B: Something other than a costume or puppet. An accompanying demonstration video is 20% of the grade.

(11 hours, due Wednesday 11/1, a revised deadline) For this project, you are asked to make and document software which creatively interprets, uses, or responds to the actions and gestures of the body. In other words: develop an interesting treatment of real-time data captured (with a camera) from a person’s face, hands, or other body parts.

Consider whether your treatment is a kind of entertainment, or whether it serves a ritual purpose, a practical purpose, or operates to some other end. You can create a game. Your project may be a musical instrument, or a drawing tool. It could be an abstract visual composition. It could be a vehicle that allows you to assume a new identity, inhabiting something nonhuman or even inanimate. It could interact with a simulated environment with articulated parts and dynamic behaviors. It may blur the line between self and others, or between self and not-self.

To share your project in documentation, you will record a short video in which you use it. Design your software for a specific “performance”, and plan your performance with your software in mind; be prepared to explain your creative decisions. Rehearse and record your performance.

Now:

- Create your project using p5.js. Some links to code for template projects are provided below. (Alternatively, if you would prefer to independently teach yourself GLSL shader programming, you may use ShaderBooth.)

- Enact a brief demonstration or performance that makes use of your software. Be deliberate about how you perform, demonstrate, or use your software. Consider how your demo-performance should be tailored to your software, and your software should be tailored to your performance. It may help to write a script for your performance or demo narration.

- Document your demo/performance with a video recording. Upload it as an Unlisted (not Private) video on YouTube or Vimeo.

- If you create a costume or puppet, your accompanying video should be a recording of a performance. It must be at least 30 seconds long, include sound, and have a beginning, middle, and end. The video will be 40% of your grade.

- If you create something other than a costume or puppet, your video should be a narrated demonstration that explains what your software is and how it is used. The video will be 20% of your grade.

- Write a post in the #gesture-expander channel on Discord. Include about 100-150 words that explain and evaluate your project.

- Include at least two screenshots; embed these in your Discord post.

- Link to your demo/performance video in the Discord post as well.

Code Templates

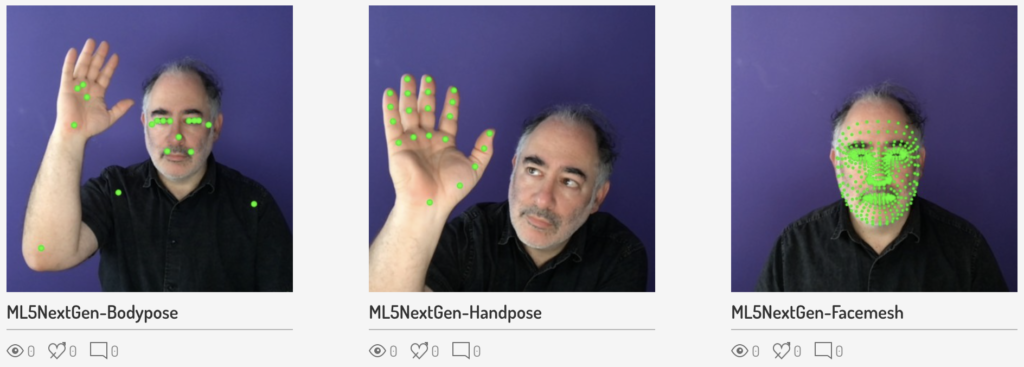

For this project, we will use the (very) new ML5-NextGen templates for face, body, and hand tracking. I have ported these to OpenProcessing so that you can easily work on your projects there. NOTE: If you need a template that provides multiple modalities (for example, face and hands together), talk to the professor.

- ML5-NextGen Bodypose: @OpenProcessing.org | @Editor.p5js.org

- ML5-NextGen Facemesh: @OpenProcessing.org | @Editor.p5js.org

- ML5-NextGen Handpose: @OpenProcessing.org | @Editor.p5js.org
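Trackers like the ones in these templates typically report a set of keypoints for each video frame. As a rough sketch of one way to turn such keypoints into a control value for your project, the plain-JavaScript helpers below compute a size-normalized distance between two fingertips and smooth it over time. The function names are hypothetical, and the keypoint shape (an object with numeric `x` and `y`) is an assumption; check the template you choose for the actual structure of its results.

```javascript
// Hypothetical helpers, not part of any template.
// Assumption: each keypoint is an object like { x: 120, y: 85 }.

// Euclidean distance between two 2D points.
function dist2d(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Thumb-to-index distance divided by a reference length on the same hand
// (for example, wrist to the base of the middle finger), so the value
// changes little as the hand moves toward or away from the camera.
function pinchAmount(thumbTip, indexTip, wrist, middleBase) {
  const ref = dist2d(wrist, middleBase);
  if (ref === 0) return 0; // degenerate input; avoid dividing by zero
  return dist2d(thumbTip, indexTip) / ref;
}

// Exponential smoothing: move a fraction `alpha` (0 to 1) of the way from
// the previous value toward the new one, which reduces frame-to-frame jitter.
function smooth(previous, current, alpha) {
  return previous + alpha * (current - previous);
}
```

In a p5.js sketch you might call `pinchAmount` once per frame inside `draw()`, pass the result through `smooth`, and map the smoothed value to a size, color, or sound parameter. A smaller `alpha` gives steadier but slower-responding motion.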

Also of possible interest:

- Pose, Hands, or Face with Metrics: 2023 MediaPipe+p5 (MediaPipe’s face metrics demo)

- Face+Hands+Body (Handsfree 2018+p5). Note: Handsfree is very slow to load.

- Face+Hands+Body (with Handsfree 2018+p5, Editor)

- Handpose Parts (ML5-NextGen) — imagine a game

- Gesture Recognition (Rock-Paper-Scissors with ML5-NextGen)

- Clown Face template (Handsfree 2018)

- Frog Face template (Handsfree 2018)

- FingerFingerNose template (Handsfree 2018)

- SimpleHandsFaceBody template (Handsfree 2018)

- HandPuppet template (Handsfree 2018)
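If your project reacts to a discrete gesture (a pinch, an open mouth, a raised arm), a single threshold on a noisy measurement tends to flicker on and off. One common remedy is hysteresis: use one threshold to switch on and a different one to switch off. The sketch below is a minimal illustration in plain JavaScript. The function name and threshold values are hypothetical, and it assumes you already have some per-frame number, such as a normalized fingertip distance.

```javascript
// Hypothetical helper, not part of any template.
// Returns a stateful detector that becomes active when `value` drops
// below `onBelow`, and inactive again only when `value` rises above
// `offAbove`. Choose offAbove > onBelow so small jitter between the two
// thresholds does not toggle the state.
function makeGestureDetector(onBelow, offAbove) {
  let active = false;
  return function update(value) {
    if (!active && value < onBelow) {
      active = true;
    } else if (active && value > offAbove) {
      active = false;
    }
    return active;
  };
}

// Example usage with made-up thresholds:
const isPinching = makeGestureDetector(0.3, 0.5);
```

Calling `isPinching(value)` once per frame returns `true` while the gesture is held. Comparing the result with the previous frame's result lets you trigger an event only at the moment the gesture begins or ends.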

Some Creative Opportunities

The following suggestions are by no means comprehensive. They are meant to show the breadth of the conceptual space you may explore.

- You could make a body-controlled game.

- You could make a sound-responsive costume.

- You could make a creativity tool, like a drawing program.

- You may capture more than one person.

- You may focus on just part of the body.

- You may focus on how an environment is affected by a body.

- You may control the behavior of something non-human.

- You could make software which is analytic.

- You could make something altogether unexpected.