Marynel Vázquez – LookingOutwards – 5

Kinect and Wiimote and Nanotech Construction Kit (direct link here)

This guy is building a structure in 3D using a Wiimote, and separating himself from the background using the depth data from the Kinect. As he says in the video, the background subtraction still needs some work. Nonetheless, the idea of building virtual structures on the fly is very appealing to me.
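One simple way to do this kind of depth-based separation (not necessarily what the video uses) is to keep only the pixels whose depth falls inside a band around the person. The sketch below is a toy illustration under assumed values: the function names, the 500 to 1500 mm band, and the tiny hand-made frames are all hypothetical, and a reading of 0 is assumed to mean "no depth available".

```python
def foreground_mask(depth_mm, near_mm=500, far_mm=1500):
    """Return a mask that is True where a pixel's depth lies in [near_mm, far_mm].

    depth_mm: 2D list of depth readings in millimetres (0 assumed to mean "no reading").
    The default band is an arbitrary assumption, not a Kinect constant.
    """
    return [[near_mm <= d <= far_mm for d in row] for row in depth_mm]


def apply_mask(image, mask, fill=0):
    """Keep image pixels where the mask is True; replace the rest with fill."""
    return [
        [pixel if keep else fill for pixel, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# Toy 3x4 depth frame: the person is about 1 m away, the wall about 3 m away.
depth = [
    [3000, 3000, 1000, 3000],
    [3000, 1000, 1050, 3000],
    [0,    980,  1020, 3000],
]
# Matching toy grayscale frame.
gray = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
]

mask = foreground_mask(depth)
person_only = apply_mask(gray, mask)
```

A fixed band like this breaks down at the edges of the body and wherever the sensor returns no reading, which is probably the sort of roughness that still shows up in the video.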

One thing that confuses me is the orientation of the triangles he’s putting together. At the beginning it seems like they are flat, but then they end up forming half of a sphere. How did that happen? Is it because the guy is rotating the cube view?

ShadowPlay: A Generative Model for Nonverbal Human-Robot Interaction (paper can be downloaded from here)

NOTE: This is a paper by Eric Meisner, Selma Šabanovic, Volkan Isler, Linnda R. Caporael and Jeff Trinkle, published in HRI 2009. All pictures below were taken from the paper. I am not sure whether this work is what motivated the puppet prototype by Theo and Emily.

The abstract of the paper goes as follows:

Humans rely on a finely tuned ability to recognize and adapt to socially relevant patterns in their everyday face-to-face interactions. This allows them to anticipate the actions of others, coordinate their behaviors, and create shared meaning— in short, to communicate. Social robots must likewise be able to recognize and perform relevant social patterns, including interactional synchrony, imitation, and particular sequences of behaviors. We use existing empirical work in the social sciences and observations of human interaction to develop nonverbal interactive capabilities for a robot in the context of shadow puppet play, where people interact through shadows of hands cast against a wall. We show how information theoretic quantities can be used to model interaction between humans and to generate interactive controllers for a robot. Finally, we evaluate the resulting model in an embodied human-robot interaction study. We show the benefit of modeling interaction as a joint process rather than modeling individual agents.

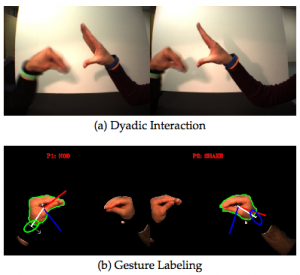

The authors study human-robot interaction through gestures. In the process, they model hand gestures and use computer vision to capture human input:

A robot then interacts with a human using similar gestures:

The whole paper is very interesting, especially when thinking about the synchronization of actions between a human and a robot. I think this paper could now be reproduced with the Kinect, with no more need for an expensive stereo camera :)

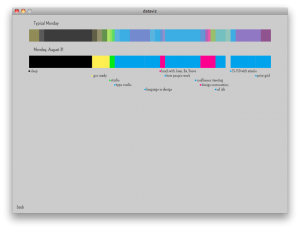

Adobe Interactive Installation (direct link here)

This interaction is very traditional in my opinion, but it’s a good example of the high level of engagement that these experiences produce. The system uses an infrared camera to track the position of people passing by. Different animations and drawings are displayed as viewers move in front of the screen. If I were playing with this installation, I would completely forget that Adobe is being sold to me…