Meg Richards – Project 2 Final

Network Usage Bubbles

The original incarnation of this project was inspired by the Good Morning! Twitter visualization created by Jer Thorp. Conceived as a demonstration of CMU network traffic flows, it would have shown causal links within the available snapshot of network traffic. However, all internal IP addresses had to be anonymized, which made the internal traffic less meaningful. Focusing only on traffic with an endpoint outside of CMU was interesting, but the distribution of endpoints tended toward what the law of large numbers would predict, albeit with a probability density function that favored Pittsburgh.

This forced me to consider what makes network traffic interesting and valuable, and I settled on collecting my own HTTP traffic in real time using tcpdump. I excluded HTTPS traffic, whose encrypted payloads cannot be read, so that I could analyze the packet contents and extract the host, content type, and content length. Represented appropriately, those three items can provide an excellent picture of personal web traffic.

Implementation

The visualization has two major components: collection and representation. Collection is performed by a bash script that calls tcpdump and pipes the output to sed and awk for parsing. The parsed data is inserted into a MySQL database. Representation is handled in Processing, using its MySQL and JBox2D libraries.
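The parsing half of the collection step can be sketched in code. The project itself does this with sed and awk inside a bash script; the Java below is only an illustrative restatement of the same extraction. The class name `HeaderScan`, the `Fetch` type, and the rule of emitting a record at the blank line that ends a header block are all assumptions, not details taken from the project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

// Hypothetical helper, not the author's code (which uses sed and awk).
final class HeaderScan {

    // One fetched element: the three fields the write-up extracts.
    static final class Fetch {
        final String host;
        final String contentType;   // null if no Content-Type header was seen
        final int contentLength;

        Fetch(String host, String contentType, int contentLength) {
            this.host = host;
            this.contentType = contentType;
            this.contentLength = contentLength;
        }
    }

    // Walk captured text lines, remembering the most recent Host header and
    // emitting a Fetch whenever a header block that had a Content-Length ends.
    static List<Fetch> scan(List<String> lines) {
        List<Fetch> fetches = new ArrayList<Fetch>();
        String host = null;
        String type = null;
        int length = -1;
        for (String raw : lines) {
            String line = raw.trim();
            String lower = line.toLowerCase(Locale.ROOT);
            if (lower.startsWith("host:")) {
                host = headerValue(line);
            } else if (lower.startsWith("content-type:")) {
                type = headerValue(line);
            } else if (lower.startsWith("content-length:")) {
                try {
                    length = Integer.parseInt(headerValue(line));
                } catch (NumberFormatException e) {
                    length = -1; // unparseable length: treat as missing
                }
            } else if (line.isEmpty()) {
                // Assumption: a blank line marks the end of a header block.
                if (host != null && length >= 0) {
                    fetches.add(new Fetch(host, type, length));
                }
                type = null;
                length = -1;
            }
        }
        return fetches;
    }

    // Text after the first colon, trimmed.
    private static String headerValue(String line) {
        return line.substring(line.indexOf(':') + 1).trim();
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
            "GET / HTTP/1.1", "Host: www.cmu.edu", "",
            "HTTP/1.1 200 OK", "Content-Type: text/html",
            "Content-Length: 5120", "");
        for (Fetch f : scan(sample)) {
            System.out.println(f.host + " " + f.contentType + " " + f.contentLength);
        }
    }
}
```

The sketch attributes each response to the most recently seen `Host:` line, much as the script's `activehost` variable does. It would misattribute interleaved connections and would also count a request that carries its own Content-Length, so it is a starting point rather than a robust parser.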

Visualization Choices

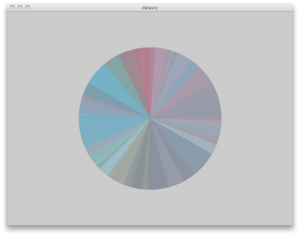

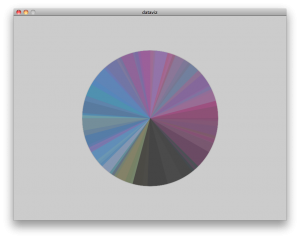

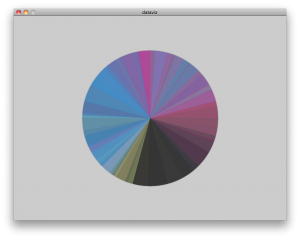

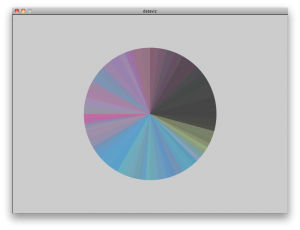

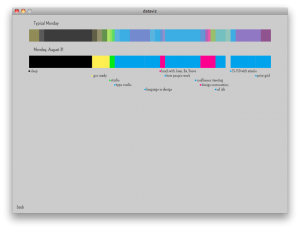

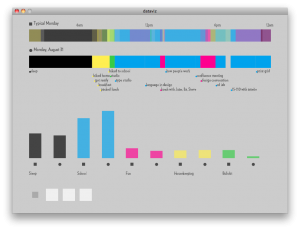

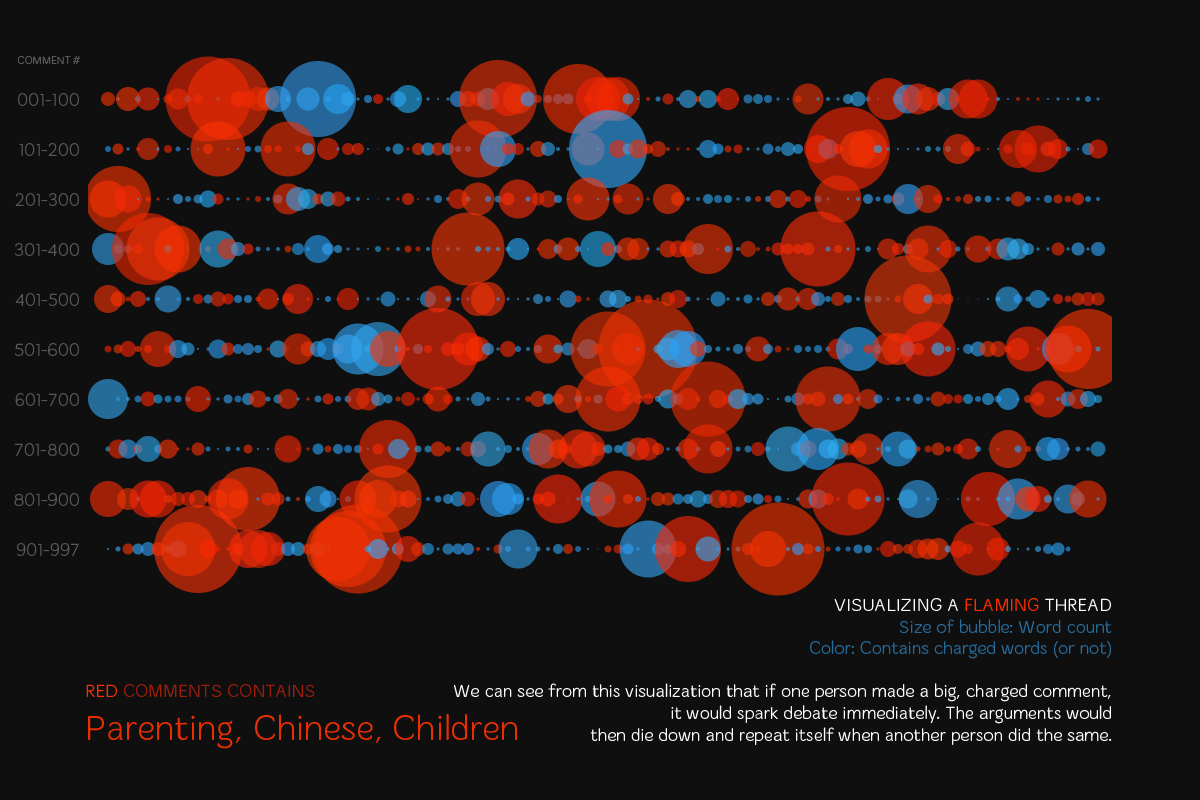

Each bubble is a single burst of inbound traffic, e.g. an HTML, CSS, JavaScript, or image file. The size of the bubble is a function of the content size, showing how much of the tube the element takes up relative to other site elements. Visiting a low-bandwidth site multiple times increases its number of bubbles, so the total area of its bubbles will approach, and can eventually exceed, the area of the bubbles representing fewer visits to a bandwidth-intensive site. The bubbles are labeled by host and colored by the top-level domain of the host. In retrospect, a better coloring scheme would have been based on the content type of the traffic. A bubble's proximity to the center reflects how recently the element was fetched: the most recent elements sit nearest the center, and elements decay as they approach the outer edge.
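To make the coloring rule concrete, here is a minimal sketch of grouping hosts by top-level domain. The project's actual palette is not shown in this write-up, so the class name `TldColor` and the hash-based hue are purely hypothetical; only the idea of keying the color on the last label of the hostname comes from the text.

```java
import java.util.Locale;

// Hypothetical helper: the project's real color mapping is not documented here.
final class TldColor {

    // Return the last dot-separated label of a hostname, e.g. "edu".
    static String topLevelDomain(String host) {
        String h = host.trim().toLowerCase(Locale.ROOT);
        int colon = h.indexOf(':');
        if (colon >= 0) {
            h = h.substring(0, colon);          // drop an optional :port
        }
        if (h.endsWith(".")) {
            h = h.substring(0, h.length() - 1); // drop a trailing root dot
        }
        int dot = h.lastIndexOf('.');
        return dot < 0 ? h : h.substring(dot + 1);
    }

    // Assumed palette: derive a hue in [0, 360) from the TLD's hash so that
    // every host under the same TLD gets the same color.
    static int hueFor(String host) {
        return Math.floorMod(topLevelDomain(host).hashCode(), 360);
    }

    public static void main(String[] args) {
        String[] hosts = {
            "www.cs.cmu.edu", "www.facebook.com",
            "static.ak.fbcdn.net", "www.activitiesboard.org"
        };
        for (String host : hosts) {
            System.out.println(host + " -> " + topLevelDomain(host)
                + " (hue " + hueFor(host) + ")");
        }
    }
}
```

Switching to the content-type scheme suggested in hindsight would only mean keying the hue on the content type string instead of the hostname.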

The example above shows site visits to www.cs.cmu.edu, www.facebook.com (and by extension, static.ak.fbcdn.net), www.activitiesboard.org, and finally www.cmu.edu, in that order.

Network Bubbles in Action

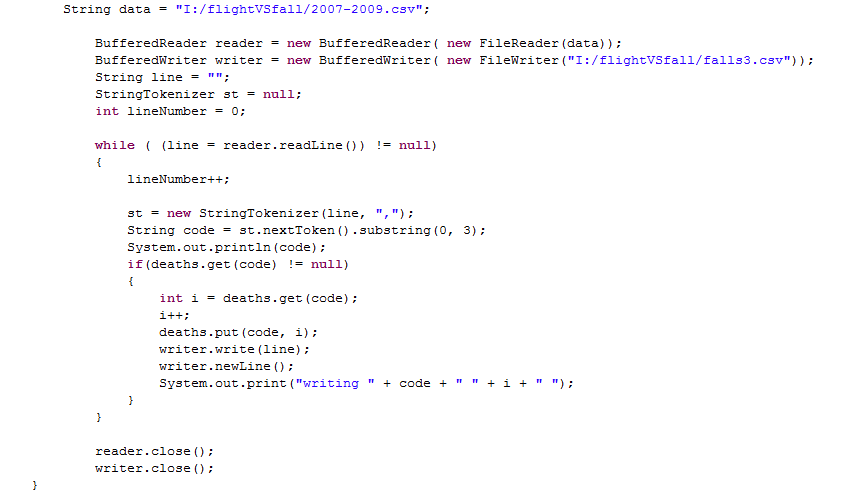

Code Snippets

Drawing Circles

Create a circle in the middle of the canvas (offset by a little jitter on the x and y axes) with a radius that is a function of the content length.

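    // Radius is sqrt(content length / PI), so the circle's area tracks the content length.
    Body new_body = physics.createCircle(width/2 + i, height/2 + j, sqrt(msql.getInt(5) / PI));
    // Store the host name on the body so it can be used as a label later.
    new_body.setUserData(msql.getString(4));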
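The radius expression above, the square root of the content length divided by pi, means a bubble's area equals its content length in bytes. A short standalone sketch makes that relationship explicit; the class and method names are hypothetical, and it uses plain Java math rather than Processing's `sqrt` and `PI`.

```java
// Hypothetical helper illustrating the radius rule from the snippet above.
final class BubbleSize {

    // Radius such that the circle's area equals the content length.
    static float radiusFor(int contentLength) {
        return (float) Math.sqrt(Math.max(0, contentLength) / Math.PI);
    }

    // Area of the bubble drawn for a given content length.
    static double areaFor(int contentLength) {
        float r = radiusFor(contentLength);
        return Math.PI * r * r;
    }

    public static void main(String[] args) {
        // Ten 10,000-byte fetches cover about the same area as one
        // 100,000-byte fetch, up to floating-point rounding.
        double tenSmall = 10 * areaFor(10000);
        double oneLarge = areaFor(100000);
        System.out.println("ten small: " + tenSmall + ", one large: " + oneLarge);
    }
}
```

This is why repeated visits to a lightweight site can eventually match the footprint of a single heavy page: total bubble area is simply total bytes fetched. Any extra scaling applied by the physics library is not accounted for here.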

Host Label

If the radius of the circle is sufficiently large, label it with the hostname.

    if (radius > 7.0f) {
      textFont(metaBold, 15);
      text((String) body.getUserData(), wpoint.x - radius/2, wpoint.y);
    }

tcpdump Processing

Feeding tcpdump output into sed and awk

    #!/bin/bash
    tcpdump -i wlan0 -An 'tcp port 80' | while read line
    do
      if [[ `echo $line | sed -n '/Host:/p'` ]]; then
        activehost=`echo $line | awk '{print $2}' | strings`
        ...
      fi