by honray @ 2:16 am 10 May 2011

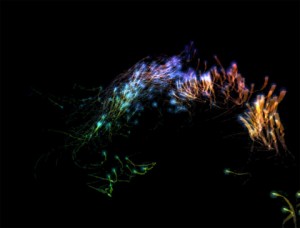

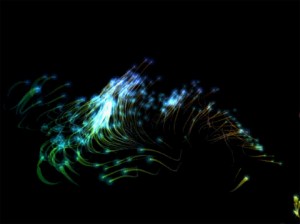

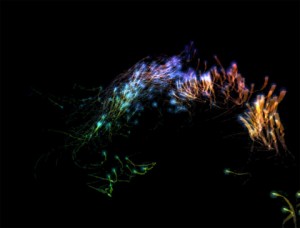

So, Alex & I decided to create a flocking effect with the Kinect, inspired by Robert Hodgin’s previous work in this area.

For this project, I handled the more low-level technical aspects, such as getting the Kinect up and running and working out how to get user data from the API. We spent a fair amount of time figuring out how to get the Kinect working on a PC with a library that would give us both depth and RGB data for the detected user. After trying a Processing library that ultimately did not work, we opted for OpenNI and NITE. Since we both had PC laptops, this was arguably our best bet. Getting the project to work under Visual Basic was a hurdle, since there was little documentation on how to get the OpenNI library up and running with VB.

Once we did get it running, I wrote a wrapper class to detect users, calibrate, and then parse the user and depth pixels so that Alex could easily access them using her particle simulator. We iterated on the code, and looked for ways to improve the flocking algorithm so that it would look more realistic and work better. We also spent a lot of time tweaking parameters and finding out how to represent the depth of the user as seen from the camera. The flocking behavior didn’t look quite as nice when we first implemented it in 3D. As such, we decided to use color to help represent the depth data.
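To illustrate the idea of using color to stand in for depth, here is a minimal Python sketch. It is not the code from the project: the function name `depth_to_rgb`, the near/far range in millimetres, and the two endpoint colors are all assumptions chosen for the example.

```python
def depth_to_rgb(depth_mm, near_mm=500.0, far_mm=4000.0,
                 near_rgb=(255, 120, 0), far_rgb=(0, 60, 255)):
    """Blend from a warm color (near the camera) to a cool color (far away).

    All defaults are illustrative assumptions, not values from the project.
    """
    # Normalise the depth reading to 0..1 and clamp anything out of range.
    t = (depth_mm - near_mm) / (far_mm - near_mm)
    t = max(0.0, min(1.0, t))
    # Linearly interpolate each color channel between the two endpoints.
    return tuple(round(a + (b - a) * t) for a, b in zip(near_rgb, far_rgb))


if __name__ == "__main__":
    for depth in (400, 500, 2250, 4000, 6000):
        print(depth, depth_to_rgb(depth))
```

Each particle could then be drawn with the color for the depth of the user pixel it sits on, so that nearer parts of the body read as warmer even in a flat 2D rendering.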

And this is what we ended up with:

Comments Off on P3 final blog post

by Ben Gotow @ 8:54 am 23 March 2011

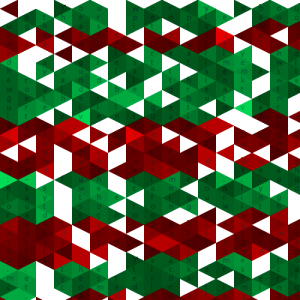

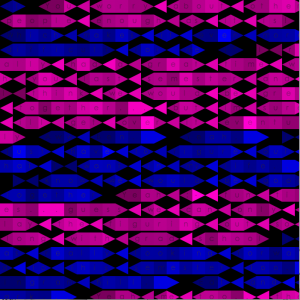

I threw around a lot of ideas for this assignment. I wanted to create a generative art piece that was static and large–something that could be printed on canvas and placed on a wall. I also wanted to revisit the SMS dataset I used in my first assignment, because I felt I hadn’t sufficiently explored it. I eventually settled on modeling something after this “Triangles” piece on OpenProcessing. It seemed relatively simple and it was very abstract.

I combined the concept from the Triangles piece with code that scored characters in a conversation based on the likelihood that they would follow the previous characters. This was accomplished by generating a Markov chain and a character frequency table using combinations of two characters pulled from the full text of 2,500 text messages. The triangles generated to represent the conversation were colorized so that more likely characters were shown inside brighter triangles.
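A rough sketch of the scoring idea, in Python, might look like the following. It assumes a simple two-character (bigram) model, and the function names `build_bigram_model` and `score_characters` are made up for this example; the original code may have combined the Markov chain and the frequency table differently.

```python
from collections import Counter, defaultdict


def build_bigram_model(messages):
    """Count how often each character follows each other character."""
    follow_counts = defaultdict(Counter)
    for text in messages:
        for prev, cur in zip(text, text[1:]):
            follow_counts[prev][cur] += 1
    return follow_counts


def score_characters(text, follow_counts):
    """Return one likelihood in [0, 1] per character of `text`.

    The score is the estimated probability of the character given the one
    before it. The first character has no predecessor, so it scores 0.0
    (an arbitrary choice for this sketch).
    """
    scores = []
    for i, cur in enumerate(text):
        if i == 0:
            scores.append(0.0)
            continue
        counts = follow_counts.get(text[i - 1])
        total = sum(counts.values()) if counts else 0
        scores.append(counts[cur] / total if total else 0.0)
    return scores


if __name__ == "__main__":
    model = build_bigram_model(["abab", "abac"])
    print(score_characters("abc", model))
```

A score like this could then be scaled to a brightness value, for example `round(255 * score)`, so that more likely characters land in brighter triangles.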

Process:

I started by printing out part of an SMS conversation, with each character drawn within a triangle. The triangles were colorized based on whether the message was sent or received, and the brightness of each letter was modulated based on the likelihood that the characters would be adjacent to each other in a typical text message.

In the next few revisions, I decided to move away from simple triangles and make each word in the conversation a single unit. I also added some code that seeds the colors used in the visualization based on properties of the conversation, such as its length.
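One way to seed colors from a conversation, sketched in Python under my own assumptions: the function name `palette_for_conversation`, the palette size, and the way the seed is built from message count and total length are all hypothetical, not taken from the original code.

```python
import random


def palette_for_conversation(messages, size=5):
    """Derive a repeatable RGB palette from simple conversation properties."""
    total_chars = sum(len(m) for m in messages)
    # Combine message count and total length into one integer seed.
    # The multiplier is an arbitrary constant chosen for this sketch.
    seed = len(messages) * 1_000_003 + total_chars
    rng = random.Random(seed)
    return [
        (rng.randrange(256), rng.randrange(256), rng.randrange(256))
        for _ in range(size)
    ]


if __name__ == "__main__":
    conversation = ["hey", "are you coming tonight?", "yep, see you at 8"]
    print(palette_for_conversation(conversation))
```

Because the generator is seeded from the conversation itself, the same conversation always produces the same palette, while conversations of different lengths will usually get different colors.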

Final output

Comments Off on Ben Gotow-Generative Art

by Chong Han Chua @ 5:23 pm 27 February 2011

I am interested in growth simulations that form objects we can use in our daily lives: something similar to nervous attack, but used to aesthetically shape furniture.

A good example would be http://i.materialise.com/blog/entry/5-amazing-full-sized-furniture-pieces-made-with-3d-printing. The entry where the stool takes the form of a flock is extremely interesting. I’m wondering if any work can be done with particle systems to simulate this.

In addition, while researching fluid simulations, I came across http://memo.tv/ofxmsafluid, marked here for reference in case the fluid idea in my head connects.

I’ve seen a few wonderful Processing simulations of organic forms for 3D printing, such as http://www.michael-hansmeyer.com/projects/project4.html, as well as this awe-inspiring piece: http://www.sabin-jones.com/arselectronica.html

Lastly, there is my buddy’s work at www.supabold.com.

Comments Off on Looking Outwards – Simulations/Forms

by cdoomany @ 3:22 am 25 February 2011

Neurospasta (a Greek word usually translated as “puppets”, which literally means “string-pulling”, from nervus, meaning sinew, tendon, muscle, string, or wire, and span, to pull) is a game without any defined objective or goal. It is instead a platform for experimentation and play.

Neurospasta is an interactive two-player game in which each player’s physical movements are mapped to a 2D avatar. The game’s graphic interface consists of a set of manipulation functions that let players interact with their own avatar as well as the other player’s.

In terms of software, we used OpenNI for the skeletal tracking and openFrameworks for the UI and texture mapping.
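As a rough illustration of mapping tracked joints onto a 2D avatar, here is a small Python sketch rather than the actual openFrameworks code. It assumes joints arrive as sensor-centred (x, y, z) positions in millimetres with y pointing up; the function names, joint names, and the `range_mm` scale are hypothetical.

```python
def joint_to_screen(joint_xyz, screen_w=640, screen_h=480, range_mm=2000.0):
    """Project one tracked joint onto the 2D canvas by dropping its depth.

    `range_mm` is the assumed real-world span that maps to the full canvas.
    """
    x, y, _z = joint_xyz
    sx = screen_w / 2 + (x / range_mm) * screen_w
    # Screen y grows downward, so the real-world y axis is flipped.
    sy = screen_h / 2 - (y / range_mm) * screen_h
    return (sx, sy)


def skeleton_to_avatar(joints):
    """Map a dict of joint name -> (x, y, z) to joint name -> (sx, sy)."""
    return {name: joint_to_screen(pos) for name, pos in joints.items()}


if __name__ == "__main__":
    skeleton = {
        "head": (0.0, 500.0, 2000.0),
        "left_hand": (-500.0, 0.0, 1800.0),
        "right_hand": (500.0, 250.0, 2000.0),
    }
    print(skeleton_to_avatar(skeleton))
```

The resulting 2D points could serve as anchor positions for the avatar's textured body parts, which the manipulation functions would then move or distort.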

*In the future, Neurospasta would include a larger selection of manipulation functions and, in an ideal environment, would be able to capture and generate avatar textures to accommodate new player identities.

by susanlin @ 12:42 pm 23 February 2011