Madeline’s Revised House Prints

Placeholder for documentation.

A Stroke of Genius

Inspiration came to me in a flash for this assignment. As Evan was describing his excellent web project to me, an idea came like a thief in the night, spiriting away my fancy: a simulated environment where the user becomes a Rain God.

Let There Be Life

The concept was lofty, but straightforward. One hand would control the flow of a rainstorm, while the other would serve as a platform for verdant plant growth. If the rain stopped or the hand serving as soil was taken away, the plants would wither, or at the very least, fall to pieces.

It would also be gorgeous. I would use bleak, grayscale aesthetics inspired by the video game Limbo, incorporating its fog and mystery and fragile interpretation of what it means to be alive. It would be art.

Godhood is Complicated…

Unfortunately… issues arose. I quickly found an excellent rain algorithm for Processing and energetically proceeded with the code. However, even with Legitimate Programmer Kaushul hacking away for two hours, I could not load the OpenNI library on my machine, and the functionality of Shiffman’s Kinect library left something to be desired. Additionally, I discovered (too late?) that the chosen rain algorithm didn’t play well with any other sort of visualization.

All Work and No Play…

As I slaved away in an attempt to salvage my masterpiece, yet another serendipitous inspiration struck. Shiffman’s Kinect program couldn’t do much, but there seemed to be a unique interaction opportunity posed by the constraint of only being able to track one hand at a time. Just as quickly as the first, this concept seized my fevered, sleep-deprived imagination. Time was running short, and the only choice was total commitment.

[vimeo=http://vimeo.com/39394932]

Takeaways

• Never base a project around a single element, especially one you’re appropriating from others.

• Don’t assume usability or compatibility from crowdsourced libraries.

• Plan interactions with limitations of the tools in mind.

• Don’t jump into coding too hastily.

• If fortune gifts you with inspiration, it’s never a bad thing to follow it.

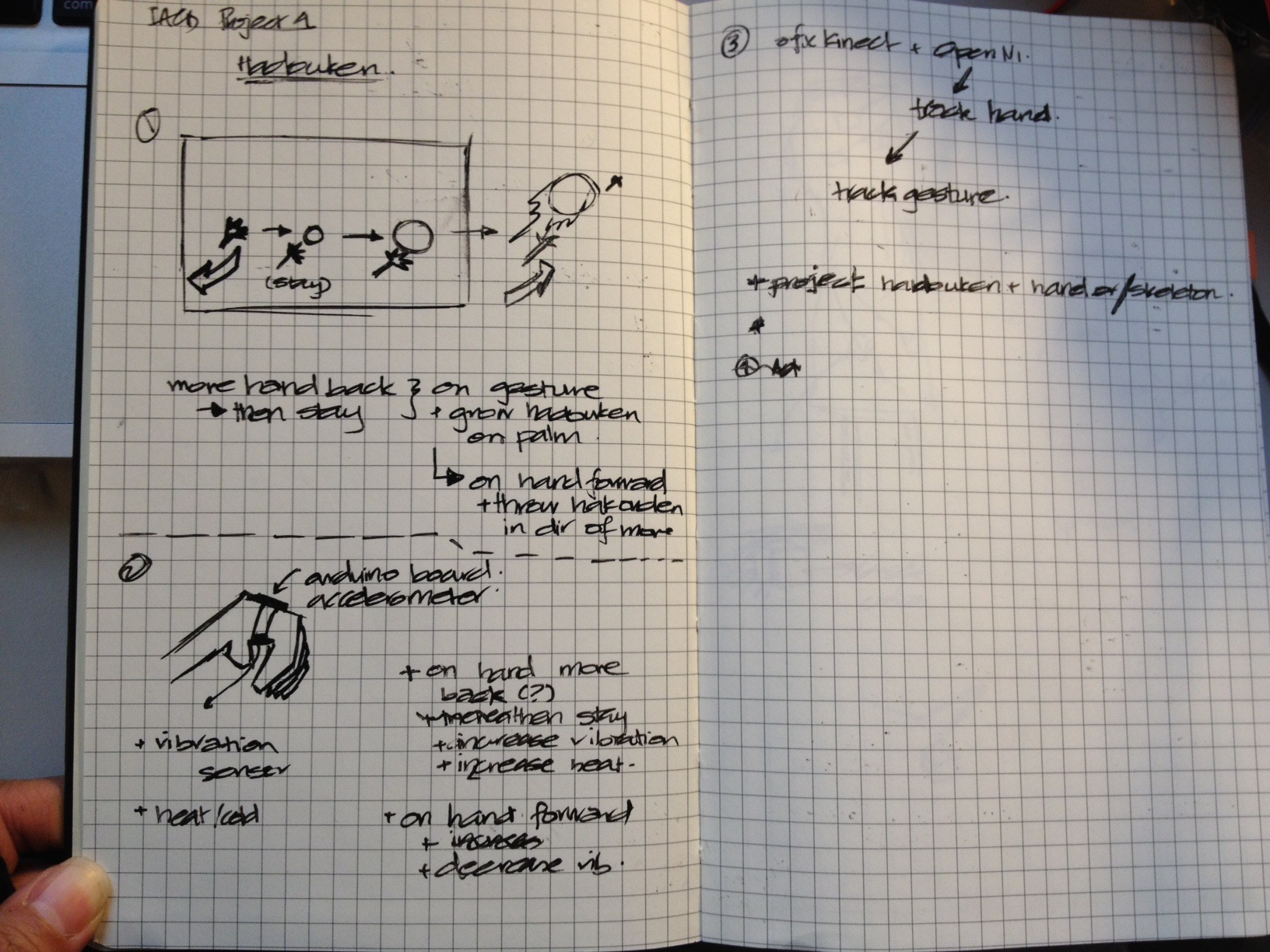

For this project, I wanted to investigate tangible feedback with the Kinect.

My initial idea was to have a glove with an Arduino that uses an accelerometer to actuate a small vibrating motor when a particular gesture is recognized and have this be detected simultaneously via the Kinect.

When I did some searching, I found that similar work had been done before, such as the Hug t-shirt and an audio-generating glove (in my last post).

I figured a fireball/Hadouken would be simple enough: moving the hand backward would signify an increase in energy (so more vibration), and moving it forward a decrease in energy (so less vibration). The goal was to investigate whether having that feedback had any effect at all.

I had issues getting the accelerometer to detect gestures, which turned out for the best. I have to thank Asim Mittal for suggesting the serial port for communication between the Arduino and the computer. I ended up finding ofSerial and ofSerialSimple, which let me send data bytes back and forth between my Arduino and openFrameworks. Visually, I didn’t spend much time on the Hadouken, so it is pretty simple. But when I first got the glove running, it was pretty cool, because the motor feedback is effective.
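The backward/forward energy idea can be sketched as a small accumulator whose value is sent to the Arduino as one byte per frame. This is a minimal Python illustration, not the project's openFrameworks code. It assumes the hand's depth (z, in millimetres) comes from the Kinect, that pulling the hand back means z increases, and that the Arduino reads each byte and drives the vibration motor with it (for example via `analogWrite`). The names `update_energy` and `to_serial_byte` and the `gain` value are hypothetical.

```python
def update_energy(energy, prev_z, z, gain=0.5):
    """Return the new energy level (0-255) after one frame of hand motion.

    Assumes z is the hand's distance from the Kinect in millimetres, so pulling
    the hand back (away from the sensor) increases z and charges the fireball,
    while pushing it forward decreases z and releases the energy.
    """
    delta = z - prev_z                      # positive = hand moved backward
    energy += gain * delta                  # hypothetical scaling factor
    return max(0.0, min(255.0, energy))     # clamp to one byte's range


def to_serial_byte(energy):
    """Pack the energy level into a single byte suitable for a serial write."""
    return bytes([int(round(energy))])


if __name__ == "__main__":
    energy = 0.0
    hand_z = [1500, 1600, 1700, 1800, 1600, 1400]   # pull back, then push
    for prev_z, z in zip(hand_z, hand_z[1:]):
        energy = update_energy(energy, prev_z, z)
        print(to_serial_byte(energy)[0])            # 50, 100, 150, 50, 0
```

With pyserial, each byte could then be sent with `serial.Serial(port, baudrate).write(...)`; one byte per frame keeps the Arduino side trivial to parse.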

So I don’t have an in-class demo, because the LilyPad got fried and another one is on its way. But I do have a video I managed to take. Despite how I look, I actually did have fun with this project :)

[youtube=http://www.youtube.com/watch?v=NsMf7qm_qx4]

I think this can go further. I just got a pulse sensor, and I think feeding that data back in could be interesting. I also think the Hadouken/fireball was a great way to start simple, but I’d be interested in ideas for making the visuals more appealing and engaging with this sort of feedback.

In a remote data center, advanced computer vision software watches a live video feed for smoke signals emanating from a distant emergency signal box, and is called upon to order rations for two feckless explorers, lost in the woods and famished.

The premise was to build a hypothetical communications link between an area of poor phone reception and a cell tower, using smoke signals and a telephoto lens.

Although the outcome is speculative, everything in this video is real, except that we loaded prerecorded video into Max rather than setting up a live video stream to monitor. A Max patch performing basic color analysis on the footage looked for particular colors of smoke: red, red/blue, or blue. When a particular signal was detected, it used a really super screen-automation scripting environment called Sikuli, which dialed the number for Papa John’s in Skype, complete with the cool animation. The call recording was a real conversation (although it was with a Papa John’s in Mountain View, CA, the only one open at the time we were creating this. Perhaps his patience to stay on the line was due to regular robotic pizza calls from Silicon Valley….)
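The Max patch itself isn’t reproduced here, but the kind of basic color analysis described can be sketched in a few lines of Python. This assumes the region of the frame around the signal box has already been cropped out as a list of (r, g, b) pixels (0-255). The `margin` threshold and the frame-count debounce are hypothetical values; the debounce is an added assumption so that a single noisy frame can’t trigger a pizza order.

```python
def classify_smoke(pixels, margin=40):
    """Classify a region of (r, g, b) pixels as "red", "blue", "red/blue" or None."""
    n = len(pixels)
    r = sum(p[0] for p in pixels) / n
    g = sum(p[1] for p in pixels) / n
    b = sum(p[2] for p in pixels) / n
    # Colored smoke shows up as red and/or blue clearly exceeding green;
    # gray smoke or background keeps the three channels roughly equal.
    red = r - g > margin
    blue = b - g > margin
    if red and blue:
        return "red/blue"
    if red:
        return "red"
    if blue:
        return "blue"
    return None


class SignalDetector:
    """Report a signal once, after it has persisted for several consecutive frames."""

    def __init__(self, frames_required=15):
        self.frames_required = frames_required
        self.current = None
        self.count = 0

    def update(self, label):
        if label == self.current:
            self.count += 1
        else:
            self.current, self.count = label, 1
        if label is not None and self.count == self.frames_required:
            return label        # fire exactly once per sustained signal
        return None
```

Each frame’s label goes through `SignalDetector.update`; a non-None return marks the moment a screen-automation step (Sikuli, in the project) would be triggered.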

By Craig and Luke

The idea for this project was developed in the context of a workshop I attended last week called

In this workshop, I was part of a cluster called Reactive Acoustic Environments, whose objective was to use the technological infrastructure of EMPAC, at Rensselaer Polytechnic Institute in Troy, to develop reactive systems responding to acoustic energy.

cluster leaders: Zackery Belanger, J Seth Edwards, Guillermo Bernal, Eric Ameres

During the workshop, the team developed acoustic simulations of the different shape configurations the canopy could take.

images source:

During the workshop I started working on the idea of making the canopy interactive in relation to the movement of the people below. By recognizing different crowd configurations the canopy would take different forms to accommodate different acoustic conditions.

I started working with Processing and Kinect. The libraries I initially used were SimpleOpenNI, to extract the depth map, and OpenCV, to perform blob detection on the two-dimensional SceneImage Kinect input. However, this approach was problematic: since the blob detection did not use the depth data, OpenCV regarded people standing next to each other as one blob.
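Why depth matters here can be illustrated with a toy flood fill that also looks at depth: two neighbouring pixels join the same blob only if their depth values are close. This is a swapped-in illustration, not what SimpleOpenNI or OpenCV do internally. It assumes a depth image in millimetres where background pixels have already been set to 0, and the 100 mm `tolerance` is a hypothetical value.

```python
from collections import deque


def depth_blobs(depth, tolerance=100):
    """Label connected regions of a depth image.

    `depth` is a list of rows of distances in millimetres, with 0 meaning
    "no reading / background removed". Two 4-connected neighbours join the
    same blob only if their depths differ by less than `tolerance`.
    Returns (labels, blob_count); labels use 0 for background.
    """
    h, w = len(depth), len(depth[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if depth[y][x] == 0 or labels[y][x]:
                continue
            count += 1                      # start a new blob here
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:                    # breadth-first flood fill
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and depth[ny][nx] != 0
                            and not labels[ny][nx]
                            and abs(depth[ny][nx] - depth[cy][cx]) < tolerance):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


if __name__ == "__main__":
    # Two people side by side in the image, one at 1.5 m and one at 2.5 m.
    depth = [
        [0, 1500, 1500, 2500, 2500, 0],
        [0, 1500, 1500, 2500, 2500, 0],
    ]
    _, count = depth_blobs(depth)
    print(count)   # 2; a silhouette-only (2D) blob detector would see 1
```

People at the same distance who are touching would still merge, which is one reason per-user labels from a skeleton-tracking library are more robust.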

1st attempt: PROCESSING + openNI + openCV

2nd attempt: PROCESSING + openNI

Later on, I realized that the OpenNI library already contains the blob detection information, so I rewrote the Processing sketch using only OpenNI. Since I no longer have access to the actual canopy, I tested the blob detection script on a Kinect video captured here at CMU, and tested the interaction on a 3D model developed in Grasshopper. Processing sends signals to Grasshopper via the oscP5 library, and Grasshopper receives them through the gHowl library. In Grasshopper, the Weaverbird library is also used for the mesh geometry.
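oscP5 and gHowl exchange Open Sound Control packets over UDP. As a rough illustration of what such a message looks like on the wire, here is a minimal OSC 1.0 encoder in Python for a message with float arguments. The address `/canopy`, the host and the port 12000 are hypothetical. The post doesn’t give the actual addresses and ports used between the Processing sketch and Grasshopper.

```python
import socket
import struct


def _osc_string(s):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *floats):
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(floats)
    args = b"".join(struct.pack(">f", f) for f in floats)
    return _osc_string(address) + _osc_string(type_tags) + args


def send(packet, host="127.0.0.1", port=12000):
    """Send one OSC packet as a single UDP datagram (hypothetical host/port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


if __name__ == "__main__":
    # e.g. mean centroid x, y and crowd spread, all as floats
    packet = osc_message("/canopy", 0.5, 0.25, 1.0)
    print(len(packet))   # 28
```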

GRASSHOPPER + gHOWL

To determine how the canopy moves, I used the data from the Kinect input for each detected person. The centroid of each person was used to compute the standard deviation of the distribution of detected people in the x and y directions. A small standard deviation represents a denser crowd, while a large standard deviation represents a sparser one. These values, along with the position of the mean centroid, determined the movement of the canopy. Below are some of the conditions I attempted to include in the interaction behavior.
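The crowd measure described above can be written compactly. This Python sketch assumes each detected person is reduced to an (x, y) centroid in consistent units (for example millimetres) and uses the population standard deviation. The `dense_below` threshold that separates a "dense" from a "sparse" crowd is a hypothetical value, not one from the project.

```python
from statistics import mean, pstdev


def crowd_stats(centroids, dense_below=500.0):
    """Summarise a crowd from per-person (x, y) centroids.

    Returns the mean centroid, the standard deviation in x and in y, and
    whether the crowd counts as dense (both deviations small).
    """
    xs = [c[0] for c in centroids]
    ys = [c[1] for c in centroids]
    sx, sy = pstdev(xs), pstdev(ys)
    dense = sx < dense_below and sy < dense_below
    return (mean(xs), mean(ys)), sx, sy, dense


if __name__ == "__main__":
    huddle = [(0, 0), (300, 0), (0, 300), (300, 300)]
    spread = [(0, 0), (3000, 0), (0, 3000), (3000, 3000)]
    print(crowd_stats(huddle))
    print(crowd_stats(spread))
```

The mean centroid says *where* the crowd is, while the two deviations say *how spread out* it is along each axis, which is enough to choose between canopy configurations.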

[vimeo http://vimeo.com/39389553 w=600&h=450]

My fourth project is Kinectrak, a natural user interface for enhancing Traktor DJ sets. Kinect allows DJs to use their body as a MIDI controller in a lightweight and unobtrusive way. The user can control effect parameters, apply low- and high-pass filters, crossfade, beatjump, or select track focus. This limited selection keeps the interface simple and approachable.

[slideshare id=12200721&doc=p4pres-120328211310-phpapp02]

I used Synapse to get skeleton data from the Kinect (after several failed bouts with ofxOpenNI) and sent it over OSC to a custom Max patch. Max parses the data and uses it to drive a simple state machine. If the user’s hands are touching, the system routes its data to the Traktor filter panel rather than to FX. This gesture lets DJs push and pull frequencies around the spectrum; it feels really cool. Max maps the data to an appropriate range and forwards it to Traktor as MIDI commands. The presentation above describes the system in greater detail.
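The routing logic in the Max patch can be paraphrased as a small state machine. This Python sketch only approximates the described behaviour. It assumes hand positions arrive in millimetres, treats hands closer than a hypothetical 100 mm as "touching", and uses the midpoint’s x position as the controlled value over a hypothetical ±500 mm range. It then builds a standard three-byte MIDI Control Change message. The controller numbers are placeholders, not Traktor’s actual mappings.

```python
import math

FILTER_CC = 20   # hypothetical controller number mapped to Traktor's filter
FX_CC = 21       # hypothetical controller number mapped to an FX parameter


def scale_to_midi(value, lo, hi):
    """Linearly map value from [lo, hi] onto the MIDI range 0-127, clamped."""
    t = (value - lo) / (hi - lo)
    t = max(0.0, min(1.0, t))
    return round(t * 127)


def route(left_hand, right_hand, touch_dist=100.0):
    """Return (target, value): touching hands drive the filter, otherwise FX."""
    target = "filter" if math.dist(left_hand, right_hand) < touch_dist else "fx"
    mid_x = (left_hand[0] + right_hand[0]) / 2
    return target, scale_to_midi(mid_x, -500.0, 500.0)


def cc_message(channel, controller, value):
    """Three-byte MIDI Control Change: status 0xB0 | channel, controller, value."""
    return bytes([0xB0 | channel, controller, value])


if __name__ == "__main__":
    target, value = route((380.0, 0.0, 0.0), (420.0, 0.0, 0.0))
    cc = FILTER_CC if target == "filter" else FX_CC
    print(target, cc_message(0, cc, value).hex())
```

Keeping the gesture state (touching or not) separate from the value mapping means new modes could be added without touching the scaling code.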

I’d like to end with a video of Skrillex (I know) and his new live setup by Vello Virkhaus.

I have always been interested in performative artwork, especially work that is playful or technologically engaging but also speaks to something greater than the individual performance.

McLaren’s work is great. He has a mastery of animation timing. In my favorite piece, “Canon,” McLaren first confuses the audience before revealing the piece’s composition. And just before the viewers fully grasp the rhythm and situation, he ends the performance.

I am very attached to recreating “Tango” in hopes of collaborating with Zbigniew. At the time, he was working at the edge of technology, producing this piece through compositing and meticulous planning. But I feel the true strength of “Tango” lies in its idea of transient space. The ‘performers’ are unaware of one another, and each continues their life without care for or contact with anyone else.

Why this re-performance is important:

My version is important because I will be able to computationally incorporate involuntary viewers into the piece. I imagine this piece being installed in a room or common space. My work differs in that the software will look for people and walking paths that can be incorporated into a looping pattern in which they never collide with each other. Like Zbigniew, my goal is to fill an entire space in which participants neither see nor collide with each other.
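One way to think about the "never collide" constraint: if every recorded walker is replayed as a loop of the same length, a new walker can be placed by searching for a time offset at which it is never too close to anyone already in the loop. This Python sketch assumes paths are lists of (x, y) floor positions sampled once per frame, all of equal length. `min_gap` is a hypothetical clearance, and this is one possible approach, not a description of the planned software.

```python
import math


def collides(a, b, offset, min_gap):
    """True if loop b, shifted by `offset` frames, ever comes within min_gap of loop a."""
    n = len(a)
    return any(math.dist(a[t], b[(t + offset) % n]) < min_gap for t in range(n))


def find_offset(existing, candidate, min_gap=1.0):
    """Return the first frame offset at which candidate avoids every existing loop, else None."""
    n = len(candidate)
    if any(len(path) != n for path in existing):
        raise ValueError("all loops must have the same number of frames")
    for offset in range(n):
        if not any(collides(path, candidate, offset, min_gap) for path in existing):
            return offset
    return None


if __name__ == "__main__":
    walker = [(0, 0), (1, 0), (2, 0), (3, 0)]      # walks left to right
    crosser = [(1, -1), (1, 0), (1, 1), (1, 2)]    # crosses the walker's path
    print(find_offset([walker], crosser))          # 1
```

A `None` result means the new person can’t be fitted into the current loop at any offset and would need to wait for a different composition.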

Tech/learned skills:

-Potential and importance of kinect imaging as opposed to standard camera vision for tracking.

-Kinect’s ability to tag/track people based on body gestures.

-How to organize 3D space in 2D visualization.

“Tango” by ZBIGNIEW RYBCZYNSKI

http://vodpod.com/watch/3791700-zbigniew-rybczynski-tango-1983

[youtube http://www.youtube.com/watch?v=pTD6sQa5Nec&w=960&h=720]

[youtube http://www.youtube.com/watch?v=rBZrdO3fU8Y&w=640&h=360]

[youtube http://www.youtube.com/watch?v=Ib_X7PDfx4E&w=640&h=480]

[youtube http://www.youtube.com/watch?v=kZQ11VhXCP8&w=640&h=360]

I wanted to use this project as a chance to make a prototype for a larger installation I am working on, centered around the theme of mapping Suburbia. I learned a lot from going through the process of making this project (programming-wise), and I realized that warping is not the motion I want to use for the final piece. Given the imagery I am using, I think it makes more sense to focus on more of a sprawl simulation/motion.

Once I discovered this, I knew I didn’t have enough time to redo it. However, I thought of another, similar project that I thought would be fairly simple to do… I was thinking about the consumer culture that became especially prevalent with the rise of the suburbs. I wanted pictures of household items to appear and accumulate in excessive amounts on the ground (projected) when a person walked through or lingered in a space. The program would run for about a minute or less and then crash due to the number of images being drawn. If anyone knows how I can fix this, it would be much appreciated! I’d love to get it working.
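Without seeing the original sketch this is only a guess. Crashes that appear after a minute of drawing more and more images often come from one of two causes. The first is loading image files repeatedly, for example calling `loadImage()` inside `draw()` instead of once in `setup()`. The second is a list of placed images that grows forever. The Python sketch below shows the general fix under those assumptions: load each picture once into a cache and keep only the most recent placements in a bounded buffer. `ClutterField`, `load_image` and `max_items` are hypothetical names.

```python
import random
from collections import deque


class ClutterField:
    """Accumulate projected household items without unbounded memory growth."""

    def __init__(self, load_image, image_names, max_items=300, seed=None):
        # Load every picture exactly once; placements share these objects.
        self.images = [load_image(name) for name in image_names]
        # Once max_items is reached, appending silently drops the oldest item.
        self.placed = deque(maxlen=max_items)
        self.rng = random.Random(seed)

    def spawn(self, x, y):
        """Drop a random item at (x, y), e.g. where a person is lingering."""
        self.placed.append((self.rng.choice(self.images), x, y))

    def items(self):
        """The placements to draw this frame, oldest first."""
        return list(self.placed)
```

In Processing the same idea maps to calling `loadImage()` in `setup()` and removing the oldest entry from an `ArrayList` once it passes a size limit.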

Below is a video of the Image Warping Program and a brief demonstration of the other project attempt failing (with recycled chair imagery from my last project instead of household items).

[youtube http://www.youtube.com/watch?v=cmEOodiV6cw&w=420&h=315]

[youtube http://www.youtube.com/watch?v=dxqpaAG_utw&w=420&h=315]

[youtube http://www.youtube.com/watch?v=5MmeCvh5fds&w=420&h=315]

Thanks!

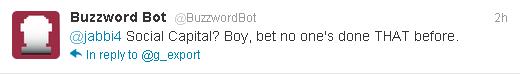

The Social Media Buzzword Bot (SMBB) is a platform-agnostic twitter bot that detects social media keywords by polling twitter. It then is supposed to respond if the tweeter is deemed an influencer (as determined by their Klout score, which as we all know, is the ultimate value of one’s worth as a human being). The SMBB is interactive, with a focus on dynamic responses based on user-generated content. The content of the responses is constructed from a series of crowdsourced responses to ensure authenticity. SYNERGY SYNERGY SYNERGY, SYNERGY SYNERGY, SYNERGY SYNERGY.

The Social Media Buzzword Bot (@BuzzwordBot on twitter) searches twitter for any of the following keywords:

• influencers

• go viral, going viral

• crowdsourcing

• user-generated content

• earned media

• authenticity, authentic branding

• synergy

• platform-agnostic

• monetization

• conversion rate

• twitter ROI

• facebook ROI

• web 2.0

• information superhighway

• organic conversation

• cloud computing

• government 2.0

• social capital

• linkbuilding
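The bot’s source isn’t shown, but matching these phrases can be done with one case-insensitive regular expression. This Python sketch adds word boundaries, which is an assumption and not necessarily what the bot did, so that, for example, "synergy" doesn’t fire inside a longer word.

```python
import re

KEYWORDS = [
    "influencers", "go viral", "going viral", "crowdsourcing",
    "user-generated content", "earned media", "authenticity",
    "authentic branding", "synergy", "platform-agnostic", "monetization",
    "conversion rate", "twitter ROI", "facebook ROI", "web 2.0",
    "information superhighway", "organic conversation", "cloud computing",
    "government 2.0", "social capital", "linkbuilding",
]

# Longest phrases first, so a longer phrase is preferred when several could match.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in sorted(KEYWORDS, key=len, reverse=True)) + r")\b",
    re.IGNORECASE,
)


def find_keyword(text):
    """Return the first buzzword found in a tweet (lower-cased), or None."""
    match = _PATTERN.search(text)
    return match.group(1).lower() if match else None
```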

Then, @BuzzwordBot decides whether or not to tweet back. If it does, it has a 20% chance of tweeting a generic response, such as:

Here, it inserts the keyword it searched for into one of three generic phrases. Otherwise, it gives a more specific response, choosing randomly between a couple of responses customized to the keyword. I’ve selected a few below:
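The response logic as described (a 20% chance of a generic template with the keyword inserted, otherwise a keyword-specific reply) can be sketched in Python as follows. The template wording and the `SPECIFIC` entries are hypothetical placeholders, not the bot’s real crowdsourced responses. Falling back to a generic reply when a keyword has no specific ones is also an assumption.

```python
import random

# Hypothetical placeholders for the three generic phrases.
GENERIC = [
    "Ah yes, {kw}. Truly the future.",
    "Nothing says innovation like {kw}.",
    "Did someone say {kw}?",
]

# Hypothetical keyword-specific replies (the real ones were crowdsourced).
SPECIFIC = {
    "synergy": ["Synergy detected. Deploying more synergy.", "So much synergy."],
    "going viral": ["Have you tried washing your hands?"],
}


def choose_response(keyword, rng, generic_chance=0.2):
    """Pick a reply: a generic template 20% of the time, else a specific one."""
    if rng.random() < generic_chance or keyword not in SPECIFIC:
        return rng.choice(GENERIC).format(kw=keyword)
    return rng.choice(SPECIFIC[keyword])


if __name__ == "__main__":
    rng = random.Random(42)
    for _ in range(3):
        print(choose_response("synergy", rng))
```

Passing in a seeded `random.Random` makes the bot’s choices reproducible when testing.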

There are few things I hate in this world. Dictatorships. Censorship. Internet Marketing Buzzwords.

When my first concept for an AR system proved too gimmicky to implement, I looked around a bit for inspiration for some sort of interactive social commentary project. Over break, a lot of my friends went to SXSW, which meant that my twitter feed was suddenly clogged with buzzword-filled retweets of various social media and internet marketing firms, people talking about Klout scores, etc., etc.

I decided to build a commentary on internet marketing buzzwords using as many social media buzzwords as possible. I started by picking a platform (twitter was a natural choice), then decided to automate a system that would make snarky comments about the overuse of buzzwords on twitter. I also wanted to ensure I used as many buzzwords as possible in the implementation of my project. I began with some nice crowdsourcing.

I’m not sure whether the snark is enough to make this really work. Now that people have started to respond to the bot, things are pretty good. I’m definitely going to keep this running until I get reported for spamming the crowdsourcing.org twitter account (can I help it if EVERY tweet you send has a buzzword in it?). I also wish I had a larger response base, which, given the quick nature of the hack, was impossible. I’ve decided against crowdsourcing the remaining responses, because I feel the level of snark would drop too far. The code still needs improvements to stop it from tweeting on accidental uses of the phrases.

It appears the reign of the twitterbot has ended, but not before it made some waves (not to mention provided a few laughs).

1314

36

24

16

927

approx. 16153

My satisfaction with the project has increased quite a bit since the first launch. After people started interacting with the bot, applying their own meanings and insights to its actions, BuzzwordBot stopped being just me being snarky to random people and became an actual piece of interactive art. The fact that so many people saw BuzzwordBot (and that some of them actually liked it) really made me happy.

Hello, this is Playing With Fire

Concept: I wanted to generate an interactive 3D Flame. I haven’t had much experience with 3D programming, let alone using the types of technology I wanted to use, so I knew this would be interesting.

Inspiration: Have you ever seen fire dance? Well, I have and…

[youtube http://www.youtube.com/watch?v=lbL29DFoGfM]

…now you have to. Videos like this had always intrigued me simply because of the way the flames moved. I don’t know, maybe it was the elegant movement of such a powerful force: fire.

Either way, I wanted to explore a new field of interactivity for this project. Not really knowing my way around most interaction-based programming libraries, I delved into AR toolkit after seeing images of people making cool floating shapes or figures magically appear on screen, like this:

But how could I make something like this interactive? Could I make something look good?

Concepts: Over the last two weeks, I had been playing around with ideas involving “light” interactivity or possibly “iPad” interactivity, but none of those ideas ever found feasible enough ground to really take off. However, having to choose between Kinect, ARtoolkit, and Reactable made the assignment more manageable (thanks, Golan!). While the Kinect is something I’ve really wanted to play with, I knew I needed something I could actually manage in terms of scope, rather than something that was just me playing around with cameras. Additionally, Reactables didn’t really interest me, so I decided to go with ARtoolkit.

I wanted to make a flame that responded to sound. An audio campfire. A microphone-based fire. To me, this is interesting because of its implications and possibilities. When I think of a campfire, I think of personal reflection. I personally feel that deep reflection and meditation can sometimes be ruined by the noisy environment we live in. So I planned to make a fire that would diminish if the sound in the user’s environment reached a certain threshold.
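The planned behaviour is a fire that slowly builds in quiet and dies down once noise crosses a threshold. That amounts to a simple per-frame update rule. In this hedged Python sketch, `loudness` is assumed to be a 0-1 level measured from the microphone, and the threshold and grow/shrink rates are hypothetical tuning values.

```python
def update_flame(intensity, loudness, threshold=0.3, grow=0.02, shrink=0.1):
    """Return the flame intensity (0-1) for the next frame.

    Quiet surroundings let the fire build up slowly; whenever the measured
    loudness exceeds the threshold, the fire dies down faster than it grows.
    """
    if loudness > threshold:
        intensity -= shrink
    else:
        intensity += grow
    return max(0.0, min(1.0, intensity))


if __name__ == "__main__":
    flame = 0.0
    levels = [0.05] * 50 + [0.6] * 5     # a quiet stretch, then a noisy one
    for level in levels:
        flame = update_flame(flame, level)
    print(round(flame, 2))   # 0.5
```

Making the fire shrink faster than it grows means a short burst of noise is noticeable, while it takes a sustained stretch of quiet to bring the flame back to full strength.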

Some early sketches of what I wanted to do with this “flame” idea are below.

Initial Work:

Getting everything going with AR Toolkit wasn’t as smooth sailing as I thought it would be. I spent numerous hours just trying to figure out how to run the damn thing, and it was a bit frustrating. However, after I got the gist of it, it became manageable. I mean, I really had no prior experience with this sort of thing, so I played around a lot with general tutorials and shapes and devices using my Macbook’s camera. Here are some screenshots:

Pretty interesting stuff happened when I began working with the ARToolkit and Processing. For instance, the AR objects would suddenly fly away from the AR marker, so, you know, that was a thing I had to deal with. Additionally, the camera kept reading my white iPhone (and its black screen) as an AR marker, and sometimes I’d get AR objects just breaking through my screen.

Eventually, after several, several hours of getting kinks worked out and really exploring the libraries and source of the AR Toolkit package, I was able to get a fire going. After I got the fire going, I worked out the math behind making the “logs/wood” underneath the flame—so as to give it a genuine flame feel.

But the most important part to me was the sound: how could I make sound interactive? Well, as you may have seen in my sketches, I wanted my flame’s maximum height and noise patterns to be directly affected by my microphone. So I used a cool library called Minim, which takes the sound from your stereo mic and turns it into an array of numbers describing the sound in Processing. Sounds cool? Good.
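Turning a buffer of microphone samples into a single "how loud is it" number is usually done with the root mean square (RMS). Minim’s `AudioBuffer` has a `level()` method documented as returning this RMS value, so in Processing something like `in.mix.level()` gives it directly (assuming a line-in object named `in`). The Python sketch below spells out the computation and a hypothetical mapping to the flame’s maximum height, with `base`, `max_extra` and `gain` as made-up tuning values.

```python
import math


def rms(samples):
    """Root mean square of audio samples in the range -1.0 to 1.0."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def flame_height(samples, base=40.0, max_extra=200.0, gain=4.0):
    """Map the buffer's loudness to a maximum flame height in pixels.

    `gain` boosts typical microphone levels, which are usually far below 1.0;
    the boosted level is clamped so very loud input can't overshoot.
    """
    level = min(1.0, rms(samples) * gain)
    return base + level * max_extra


if __name__ == "__main__":
    silence = [0.0] * 1024
    beat = [0.5 if i % 2 else -0.5 for i in range(1024)]
    print(flame_height(silence), flame_height(beat))   # 40.0 240.0
```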

Demonstration:

This is what I came up with. Notice the flame reacting to the sound intensity of the song being played through my speakers, creating the illusion of “dancing” to the beat of the music.

[youtube http://www.youtube.com/watch?v=0s7F8WNPtHE]

Going Forward:

Yes, there’s more I can do with it, and I plan to. I would like to make the flame more realistic. I really want to get several flames, with different coded structures and varying reactions to sound, line them up, and play the song again. The only reason I used music here was that I didn’t have multiple people around me to hold a conversation that would make the flame react appropriately. Imagine sitting around this fire with glasses that could view the AR toolkit objects. Illumination when there is peace and quiet, and darkness when it gets too loud. A perfect place to sit and reflect.

Conclusion:

My programming skills have really been pushed throughout the semester. Oftentimes I get too many creative ideas and don’t know how to implement them in proper syntax. I know my work may not be the most awesome interaction ever, but even if it’s a botched experiment to some, I feel it is a well-documented exploration of a programming territory I didn’t even know existed a few weeks back. To be honest, I’m extremely proud of my ability to stick with this AR Toolkit stuff instead of giving up. I have a long way to go, but I truly feel that IACD has given me some invaluable lessons in programming, life, and creativity.

Things I learned include:

– AR Toolkit is serious business.

– Creating shape classes is mad useful.

– Perlin noise is AWESOME, but tricky.

– Don’t give up; take a break, come back, and work.

– Never underestimate your code.