Final Project – Luci Laffitte – Intersecta: Pollination Wall

Intersecta is an experimental exhibit, currently being developed for the Carnegie Museum of Natural History, which explores the connection between insects and people. The project uses Kinect technology to allow visitors to discover what insects do for us — by highlighting the human foods for which insects are crucial pollinators.

[youtube http://www.youtube.com/watch?v=AVHvlhy3rsk&w=600&h=335]

Overview:

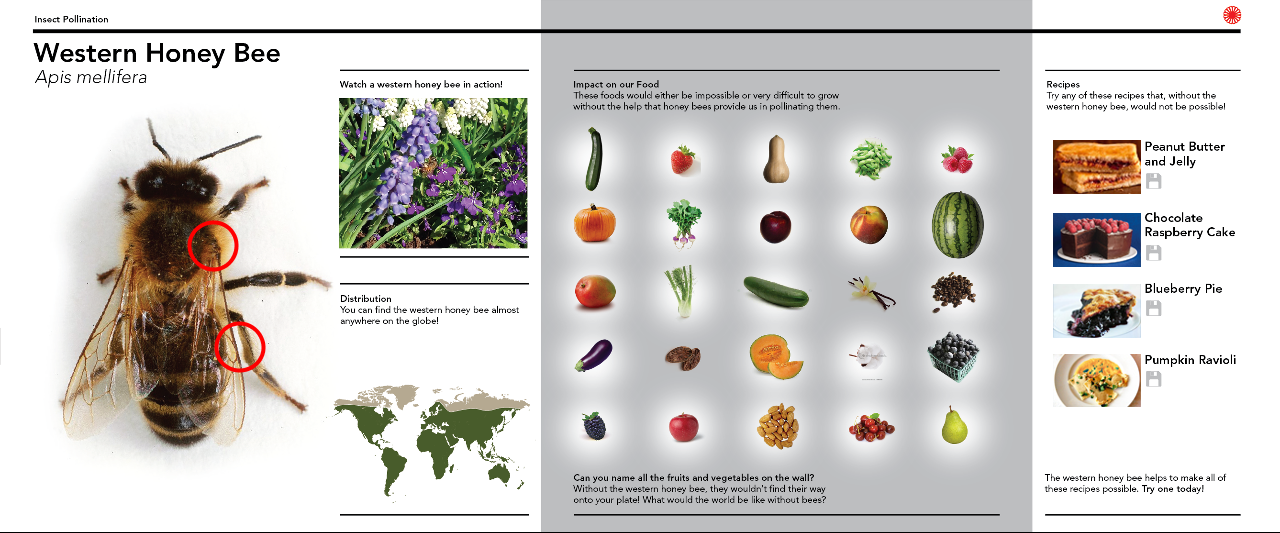

Intersecta is an experimental exhibit being developed for the Carnegie Museum of Natural History that explores the connection between insects and people; I worked on it as part of my senior design capstone course. The exhibit will use Kinect technology to make large white walls interactive. For my project I am prototyping the Pollination Wall. This piece of the exhibit lets visitors explore what insects do for us by showing which of the foods we eat depend on insects for pollination. In the first section, an interactive GigaPan image will show the different aspects of an insect’s anatomy that contribute to its role as a pollinator. In the second section, lights will illuminate the fruits and veggies the insect pollinates. In the final section, visitors will see recipes made from the foods that the insect makes possible and save specific ones to their bug block.

Motivation:

I wanted to do this project so I could display an example of the work my design teammates and I did over the course of the semester and show that the technology is feasible and affordable.

Process:

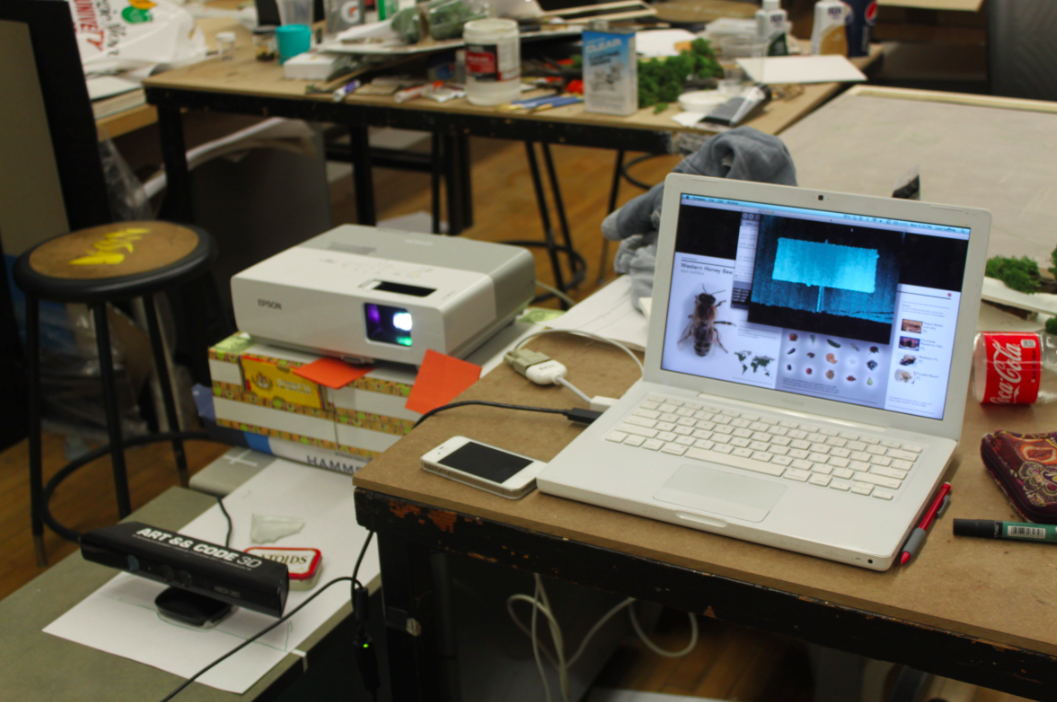

I struggled to decide on the exact set-up for this project because our full-scale design calls for a 5’x12′ wall with an advanced short-throw projector. However, the one Kinect I had access to could not sense users across the entire length of the wall. So I decided to try a slightly scaled-down version of the wall (about 7 feet long) with the Kinect at different angles to get the best touch detection. I tried positioning the Kinect above the wall, to the side, and behind the user. After several days of experimenting, and considering that my ultimate goal was to present this in a large open room at the museum, I settled on setting up the Kinect from behind.

Method:

From there I used Synapse for Kinect and Dan Wilcox’s GitHub example to track the skeleton of a user. By defining where the hotspots are located on the board, and entering into the program the locations of the wall’s four corners as seen by the Kinect, I was able to let a user reliably interact with the interface.
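Here is a rough sketch of how the four-corner calibration and hotspot check could work. It is not the code from my prototype: it assumes the hand position has already arrived from the skeleton tracker as an (x, y) pair, it uses a standard perspective (homography) mapping from the four corners to the wall, and the names `make_wall_mapper`, `hit_test`, and `HOTSPOTS`, as well as the three hotspot rectangles, are made up for illustration.

```python
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # (u_min, v_min, u_max, v_max)

# Hypothetical hotspots in wall coordinates (0..1 across, 0..1 down),
# one band per section of the Pollination Wall.
HOTSPOTS: Dict[str, Rect] = {
    "anatomy": (0.00, 0.0, 0.33, 1.0),
    "foods": (0.34, 0.0, 0.66, 1.0),
    "recipes": (0.67, 0.0, 1.00, 1.0),
}


def make_wall_mapper(corners: Sequence[Point]):
    """Build a function that maps a sensor-space point to wall (u, v).

    corners: the wall's corners as seen by the sensor, ordered
    top-left, top-right, bottom-right, bottom-left.
    """
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = corners

    # Projective map from the unit square onto the corner quad.
    dx1, dx2, sx = x1 - x2, x3 - x2, x0 - x1 + x2 - x3
    dy1, dy2, sy = y1 - y2, y3 - y2, y0 - y1 + y2 - y3
    den = dx1 * dy2 - dx2 * dy1
    if den == 0:
        raise ValueError("corners are degenerate")
    g = (sx * dy2 - dx2 * sy) / den
    h = (dx1 * sy - sx * dy1) / den
    a, b, c = x1 - x0 + g * x1, x3 - x0 + h * x3, x0
    d, e, f = y1 - y0 + g * y1, y3 - y0 + h * y3, y0

    # Adjugate of [[a, b, c], [d, e, f], [g, h, 1]] inverts the map
    # (up to a scale factor, which cancels in the division below).
    A, B, C = e - f * h, c * h - b, b * f - c * e
    D, E, F = f * g - d, a - c * g, c * d - a * f
    G, H, I = d * h - e * g, b * g - a * h, a * e - b * d

    def to_wall(p: Point) -> Point:
        x, y = p
        w = G * x + H * y + I
        return ((A * x + B * y + C) / w, (D * x + E * y + F) / w)

    return to_wall


def hit_test(uv: Point, hotspots: Dict[str, Rect]) -> Optional[str]:
    """Return the name of the hotspot containing uv, or None."""
    u, v = uv
    for name, (u0, v0, u1, v1) in hotspots.items():
        if u0 <= u <= u1 and v0 <= v <= v1:
            return name
    return None


# Example with made-up corner readings and a made-up hand position.
to_wall = make_wall_mapper([(120, 80), (520, 60), (560, 400), (90, 380)])
print(hit_test(to_wall((300, 220)), HOTSPOTS))
```

Working in wall coordinates means the hotspots only have to be defined once; if the Kinect moves, only the four corner readings need to be entered again.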

The Interface:

The Setup:

Images of User Interaction:

This image shows a friend interacting with the program, with both the Kinect and a low-quality projector set up behind him. This is why part of the interface is projected onto his hand. The green circle shows where the system thinks his hand is! Wooo!